
Hands-on Deep Learning_NiN

2022-08-04 21:08:00 【CV Small Rookie】

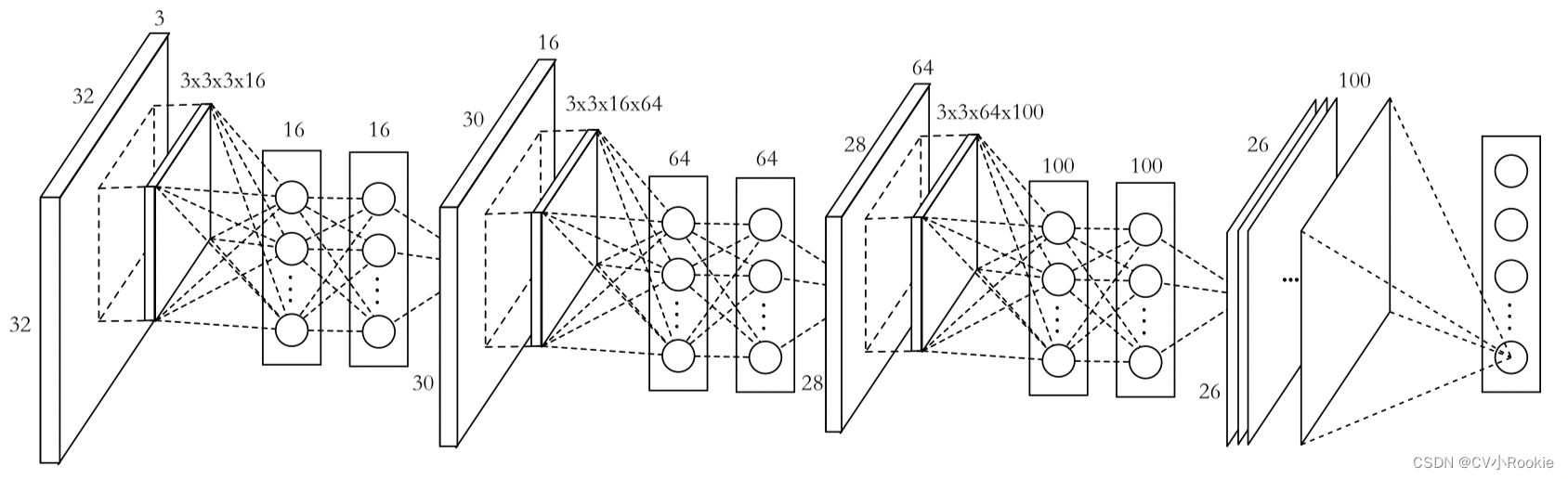

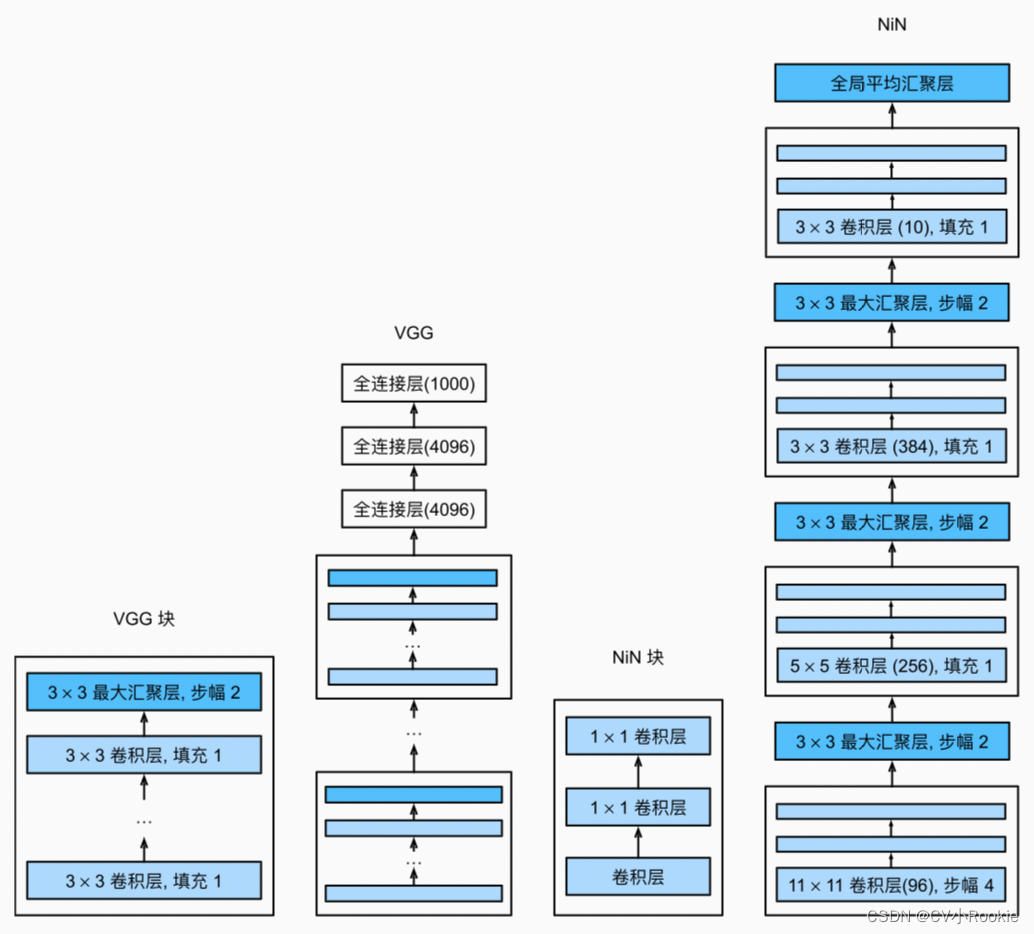

LeNet, AlexNet, and VGG share a common design pattern: a sequence of convolutional and pooling layers extracts spatial features, and fully connected layers then process the resulting representation. AlexNet and VGG improve on LeNet mainly by widening and deepening these two modules.

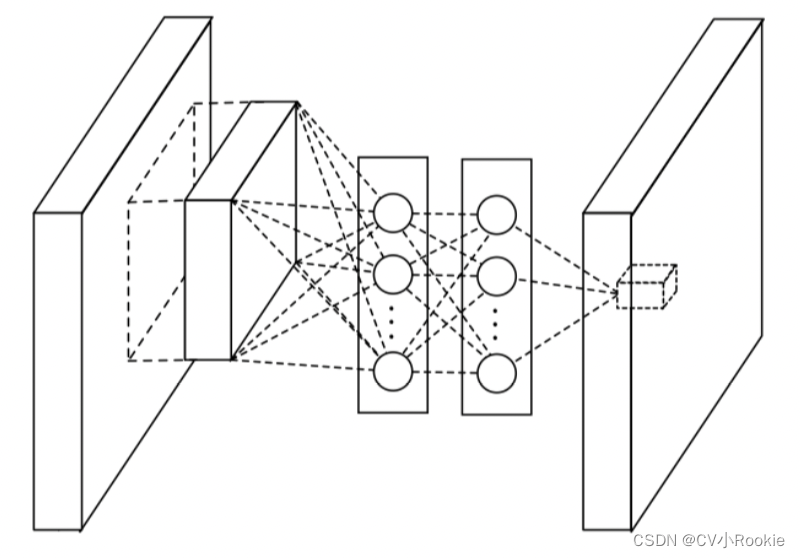

However, fully connected layers may discard the spatial structure of the representation entirely. Network in Network (NiN) offers a very simple solution: apply a multilayer perceptron across the channels at each pixel position. In practice this means adding two 1 x 1 convolutional layers because, as mentioned earlier, a 1 x 1 convolution is equivalent to an MLP whose parameters are shared across all pixel positions.
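The equivalence between a 1 x 1 convolution and a per-pixel fully connected layer can be checked numerically. The following is a minimal sketch, not part of the original post: the tensor sizes are hypothetical, and the linear layer is given the convolution's weights by hand so that the two outputs can be compared.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
batch, c_in, c_out, h, w = 2, 3, 4, 5, 5
x = torch.randn(batch, c_in, h, w)

conv = nn.Conv2d(c_in, c_out, kernel_size=1)

# A linear layer that reuses the convolution's weights and bias.
linear = nn.Linear(c_in, c_out)
with torch.no_grad():
    linear.weight.copy_(conv.weight.view(c_out, c_in))
    linear.bias.copy_(conv.bias)

# Path 1: apply the 1 x 1 convolution directly.
y_conv = conv(x)

# Path 2: treat the channels at every pixel as a feature vector.
# Move channels last, apply the linear layer, then move channels back.
y_linear = linear(x.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)

same = torch.allclose(y_conv, y_linear, atol=1e-5)
print(y_conv.shape, same)
```

Because the same weight matrix is applied at every pixel, stacking two such layers with ReLU in between behaves like a small MLP that slides over the feature map.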

As the diagram shows, NiN is built from nin_blocks, and each nin_block consists of one ordinary convolutional layer followed by two 1 x 1 convolutional layers.

At the output, NiN drops the fully connected layers altogether. Instead, a global average pooling layer reduces the height and width of the feature map to 1, and a Flatten layer then flattens the result to produce the final output.
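The pooling head described above can be tried in isolation. This is a small sketch under assumed sizes (a batch of 2 with 10 channels on a 5 x 5 grid, chosen for illustration); it shows that global average pooling is simply the mean over height and width and that the head has no trainable parameters.

```python
import torch
from torch import nn

# Hypothetical feature map: batch of 2, 10 class channels, 5 x 5 grid.
features = torch.randn(2, 10, 5, 5)

# Global average pooling to 1 x 1, then flatten to (batch size, channels).
head = nn.Sequential(nn.AdaptiveAvgPool2d((1, 1)), nn.Flatten())
logits = head(features)

# The same result computed by hand: average over height and width.
manual = features.mean(dim=(2, 3))

matches = torch.allclose(logits, manual, atol=1e-6)
n_params = sum(p.numel() for p in head.parameters())
print(logits.shape, matches, n_params)
```

Each of the 10 channels is reduced to a single score, so the last nin_block must output exactly as many channels as there are classes.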

The implementation in PyTorch:

```python
import torch
from torch import nn


def nin_block(in_channels, out_channels, kernel_size, strides, padding):
    # One ordinary convolution followed by two 1 x 1 convolutions,
    # each followed by a ReLU.
    return nn.Sequential(
        nn.Conv2d(in_channels, out_channels, kernel_size, strides, padding),
        nn.ReLU(),
        nn.Conv2d(out_channels, out_channels, kernel_size=1), nn.ReLU(),
        nn.Conv2d(out_channels, out_channels, kernel_size=1), nn.ReLU())


class NiN(nn.Module):
    def __init__(self):
        super(NiN, self).__init__()
        self.model = nn.Sequential(
            nin_block(1, 96, kernel_size=11, strides=4, padding=0),
            nn.MaxPool2d(3, stride=2),
            nin_block(96, 256, kernel_size=5, strides=1, padding=2),
            nn.MaxPool2d(3, stride=2),
            nin_block(256, 384, kernel_size=3, strides=1, padding=1),
            nn.MaxPool2d(3, stride=2),
            nn.Dropout(0.5),
            # The number of label classes is 10
            nin_block(384, 10, kernel_size=3, strides=1, padding=1),
            nn.AdaptiveAvgPool2d((1, 1)),
            # Convert the 4D output to a 2D output with shape (batch size, 10)
            nn.Flatten())

    def forward(self, x):
        x = self.model(x)
        return x
```

The output shape of each layer:

```
Sequential output shape: torch.Size([1, 96, 54, 54])
MaxPool2d output shape: torch.Size([1, 96, 26, 26])
Sequential output shape: torch.Size([1, 256, 26, 26])
MaxPool2d output shape: torch.Size([1, 256, 12, 12])
Sequential output shape: torch.Size([1, 384, 12, 12])
MaxPool2d output shape: torch.Size([1, 384, 5, 5])
Dropout output shape: torch.Size([1, 384, 5, 5])
Sequential output shape: torch.Size([1, 10, 5, 5])
AdaptiveAvgPool2d output shape: torch.Size([1, 10, 1, 1])
Flatten output shape: torch.Size([1, 10])
```
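The spatial sizes listed above follow from the usual output-size formula, floor((n + 2p - k) / s) + 1, for input size n, kernel size k, stride s, and padding p. The sketch below redoes that arithmetic in plain Python. The helper `out_size` and the 224 x 224 input are assumptions: the post does not state the input size, but 224 is consistent with the first 54 x 54 output.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding) for each size-changing layer of the network.
# The 1 x 1 convolutions inside a nin_block keep the size unchanged.
layers = [
    ("nin_block 1", 11, 4, 0),
    ("max pool 1", 3, 2, 0),
    ("nin_block 2", 5, 1, 2),
    ("max pool 2", 3, 2, 0),
    ("nin_block 3", 3, 1, 1),
    ("max pool 3", 3, 2, 0),
    ("nin_block 4", 3, 1, 1),
]

size = 224  # assumed input height and width
sizes = []
for name, kernel, stride, padding in layers:
    size = out_size(size, kernel, stride, padding)
    sizes.append(size)
    print(f"{name}: {size} x {size}")
```

The computed sizes 54, 26, 26, 12, 12, 5, 5 match the height and width in the printed shapes; global average pooling then reduces the final 5 x 5 map to 1 x 1.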
