
Spark submit parameters: using --files

2022-06-24 10:31:00 【The south wind knows what I mean】

Project scenario:

We have two clusters (a computing cluster and a storage cluster). The requirement: a Spark job running on the computing cluster reads data from Kafka and writes it to Hive on the storage cluster.

Problem description:

This means reading and writing data across clusters. In our tests, writing to HBase from the computing cluster to the storage cluster worked.

Writing to Hive did not: the data never landed in the storage cluster's Hive and always ended up in the computing cluster's Hive.

This puzzled me, because when I tested from IDEA the data was written to the storage cluster's Hive. Once the job was scheduled through DolphinScheduler and run on the cluster, it went astray and wrote to the computing cluster's Hive. My resources folder contains the storage cluster's core-site.xml, hdfs-site.xml and hive-site.xml, and I also wrote a changeNameNode method in the code (changeHDFSConf below). Even so, at run time the program could not switch to the storage cluster's NameNodes.

/**
 * @Author: lzx
 * @Description: point the session's Hadoop configuration at another HA HDFS nameservice
 * @Date: 2022/5/27
 * @Param session: an already built SparkSession
 * @Param nameSpace: the nameservice of the target cluster
 * @Param nn1: ID of the first NameNode
 * @Param nn1Addr: RPC address of nn1 (host:port)
 * @Param nn2: ID of the second NameNode
 * @Param nn2Addr: RPC address of nn2 (host:port)
 * @return: void
 */

def changeHDFSConf(session:SparkSession,nameSpace:String,nn1:String,nn1Addr:String,nn2:String,nn2Addr:String): Unit ={

val sc: SparkContext = session.sparkContext

sc.hadoopConfiguration.set("fs.defaultFS", s"hdfs://$nameSpace")

sc.hadoopConfiguration.set("dfs.nameservices", nameSpace)

sc.hadoopConfiguration.set(s"dfs.ha.namenodes.$nameSpace", s"$nn1,$nn2")

sc.hadoopConfiguration.set(s"dfs.namenode.rpc-address.$nameSpace.$nn1", nn1Addr)

sc.hadoopConfiguration.set(s"dfs.namenode.rpc-address.$nameSpace.$nn2", nn2Addr)

sc.hadoopConfiguration.set(s"dfs.client.failover.proxy.provider.$nameSpace", "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider")

}

Cause analysis:

1. I first opened the Environment tab of the Spark UI for the running job and searched the Hadoop properties for nn1, to check whether my changeNameNode method had taken effect.

2. The value of dfs.namenode.http-address.hr-hadoop.nn1 was still node03 (computing cluster), not node118 (storage cluster), so the method had not taken effect.

Why not?

Configuration conf=new Configuration();

When a Configuration object is created, its constructor loads Hadoop's default configuration files from the classpath: core-default.xml and core-site.xml, plus hdfs-default.xml and hdfs-site.xml once the HDFS client classes are loaded. These files carry the parameter values needed to access HDFS.

My project contains these files, so why were they not loaded?

3. After some analysis I found that when the code is submitted to the cluster, it loads the cluster's own core-site.xml and hdfs-site.xml, and the configuration files packaged with the code are ignored.
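One way to confirm which copy of a file wins, as a minimal sketch: as far as I know, Hadoop's Configuration resolves names such as core-site.xml through the thread context class loader, so asking that class loader directly shows which location the running job sees. The names WhichConfig and locate are made up for this illustration.

```scala
object WhichConfig {
  /** Returns the classpath location that a resource name resolves to, if any. */
  def locate(name: String): Option[java.net.URL] =
    Option(Thread.currentThread().getContextClassLoader.getResource(name))

  def main(args: Array[String]): Unit = {
    // Print where each Hadoop/Hive config file would be loaded from.
    Seq("core-site.xml", "hdfs-site.xml", "hive-site.xml").foreach { name =>
      println(s"$name -> ${locate(name).getOrElse("not on classpath")}")
    }
  }
}
```

Calling WhichConfig.locate("hdfs-site.xml") from the driver, once in IDEA and once on the cluster, should make the difference between the two environments visible: a path inside the project in one case, and a path under the cluster's configuration directory in the other.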

Solution:

1. In the code, replace the cluster's configuration files with your own, so that the storage cluster's information can be found:

val hadoopConf: Configuration = new Configuration()

hadoopConf.addResource("hdfs-site.xml")

hadoopConf.addResource("core-site.xml")

If two configuration resources contain the same property and the property in the earlier resource is not marked final, the later value overrides the earlier one. For example, a value in core-site.xml overrides the value of the same name in core-default.xml. If a property in the first resource (core-default.xml) is marked final, the later value is not applied and a warning is logged when the second resource is loaded.
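The override rule can be tried out in isolation. This is a small sketch, not part of the original job: it feeds two in-memory XML resources to a Configuration created without defaults. The property names demo.locked and demo.open and the object FinalOverrideDemo are invented for the example.

```scala
import java.io.ByteArrayInputStream
import org.apache.hadoop.conf.Configuration

object FinalOverrideDemo {
  // Builds an in-memory Hadoop XML resource from (name, value, isFinal) triples.
  private def xml(props: (String, String, Boolean)*): ByteArrayInputStream = {
    val body = props.map { case (k, v, isFinal) =>
      val fin = if (isFinal) "<final>true</final>" else ""
      s"<property><name>$k</name><value>$v</value>$fin</property>"
    }.mkString
    new ByteArrayInputStream(s"<configuration>$body</configuration>".getBytes("UTF-8"))
  }

  def load(): Configuration = {
    val conf = new Configuration(false) // false: skip core-default.xml / core-site.xml
    // First resource: demo.locked is final, demo.open is not.
    conf.addResource(xml(("demo.locked", "first", true), ("demo.open", "first", false)))
    // Second resource tries to override both properties.
    conf.addResource(xml(("demo.locked", "second", false), ("demo.open", "second", false)))
    conf
  }
}
```

With this setup, load().get("demo.open") should return the later value, while load().get("demo.locked") should keep the first one and Hadoop should log a warning about the attempted override.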

2. That alone is not enough. As noted above, once the job is packaged and run on the cluster, the core-site.xml and hdfs-site.xml under the resources folder are discarded, so .addResource("hdfs-site.xml") cannot find my own file and falls back to the cluster's configuration file.

3. Put your two configuration files in a directory on the submission host, and when submitting the Spark job again, pass them in the submit parameters:

--files /srv/udp/2.0.0.0/spark/userconf/hdfs-site.xml,/srv/udp/2.0.0.0/spark/userconf/core-site.xml \
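On the code side, the shipped files can also be loaded explicitly by path rather than by classpath name. The following is a sketch under an assumption: the job runs on YARN in cluster mode, where files passed with --files are placed in the container's working directory. The object ShippedConf and its addShipped helper are hypothetical names, not part of Spark or Hadoop.

```scala
import java.io.File
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.fs.Path

object ShippedConf {
  /**
   * Adds each named file found in `dir` to `conf` as a local-path resource.
   * Returns the names that were actually found and added.
   * Assumption: `dir` defaults to the working directory, where --files lands on YARN.
   */
  def addShipped(conf: Configuration, names: Seq[String], dir: File = new File(".")): Seq[String] =
    names.flatMap { name =>
      val f = new File(dir, name)
      if (f.isFile) {
        conf.addResource(new Path(f.toURI)) // Path resources are read from the local file system
        Some(name)
      } else None
    }
}
```

A call such as ShippedConf.addShipped(spark.sparkContext.hadoopConfiguration, Seq("hdfs-site.xml", "core-site.xml")) early in the driver would then report which of the two files were picked up. An empty result suggests the files were not shipped or the job is not running where the assumption holds.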

4. Further notes:

How files passed with --files are transferred:

If the file is on the same file system as the cluster the job is submitted to, the log reports that the source and destination file systems are the same, and no copy is triggered:

INFO Client: Source and destination file systems are the same. Not copying

If the file is on a different file system from the submission cluster, the file is uploaded from the source path to the current cluster's file system:

INFO Client: Uploading resource

边栏推荐

- uniapp实现禁止video拖拽快进

- [EI分享] 2022年第六届船舶,海洋与海事工程国际会议(NAOME 2022)

- Six states of threads

- Learning to organize using kindeditor rich text editor in PHP

- Caching mechanism for wrapper types

- tf.contrib.layers.batch_norm

- leetCode-面试题 16.06: 最小差

- 自定义kindeditor编辑器的工具栏,items即去除不必要的工具栏或者保留部分工具栏

- 栈题目:括号的分数

- Leetcode-1089: replication zero

猜你喜欢

SQL Sever关于like操作符(包括字段数据自动填充空格问题)

Distributed transaction principle and solution

numpy. linspace()

leetCode-498: 对角线遍历

Spark提交参数--files的使用

Uniapp implements the function of clicking to make a call

Role of message queuing

Juul, the American e-cigarette giant, suffered a disaster, and all products were forced off the shelves

88.合并有序数组

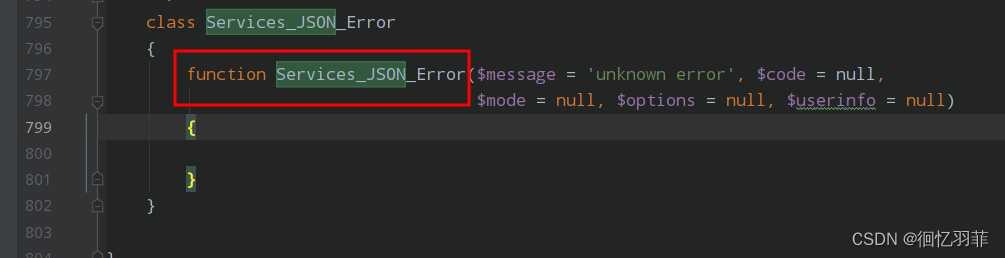

解决Deprecated: Methods with the same name as their class will not be constructors in报错方案

随机推荐

6.套餐管理业务开发

Cross domain overview, simple accumulation

Learn to use PHP to implement unlimited comments and unlimited to secondary comments solutions

如何在一个页面上使用多个KindEditor编辑器并将值传递到服务器端

uniapp开发微信小程序,显示地图功能,且点击后打开高德或腾讯地图。

SQL sever试题求最晚入职日期

卷妹带你学jdbc---2天冲刺Day1

numpy. logical_ and()

解决DBeaver SQL Client 连接phoenix查询超时

What you must know about distributed systems -cap

leetCode-1089: 复写零

栈题目:括号的分数

学习使用KindEditor富文本编辑器,点击上传图片遮罩太大或白屏解决方案

牛客-TOP101-BM29

小程序 rich-text中图片点击放大与自适应大小问题

Uniapp develops a wechat applet to display the map function, and click it to open Gaode or Tencent map.

Appium自动化测试基础 — 移动端测试环境搭建(一)

2022全网最全最细的jmeter接口测试教程以及接口测试流程详解— JMeter测试计划元件(线程<用户>)

微信小程序rich-text图片宽高自适应的方法介绍(rich-text富文本)

正规方程、、、