
YOLOv3 PyTorch code and principle analysis (II): network structure and loss calculation

2022-06-11 19:28:00 【Autumn mountain and snow】

YOLOv3 PyTorch code and principle analysis (I): running the code

YOLOv3 PyTorch code and principle analysis (II): network structure and loss calculation

1. Network structure

Notes:

(1) The outputs in the diagram omit the batch-size dimension; for example, the actual output of yolo1 is [bs, 3, 13, 13, 85].

(2) Role of the yolo layer: during training, the forward of the yolo layer only rearranges the layout of the input feature map; the values are unchanged.

(3) Output of the yolo layer: 3 is the number of anchors; 13*13 is the grid the image is divided into; 85 is the network prediction [x, y, w, h, obj, cls], i.e. the predicted target's center coordinates, width and height, objectness (whether there is a target), and class scores (80 classes for COCO).
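As a quick check of the shapes in the notes above, here is a small sketch that derives the three yolo-layer output shapes from the input size. It assumes a square 416 input and the strides 32, 16, 8 that appear later in create_modules; the function name yolo_output_shapes is made up for illustration.

```python
def yolo_output_shapes(img_size=416, strides=(32, 16, 8),
                       num_anchors=3, num_classes=80, batch_size=1):
    """Return the (bs, anchors, grid_y, grid_x, outputs) shape of each yolo layer."""
    num_outputs = num_classes + 5  # x, y, w, h, obj + class scores
    shapes = []
    for stride in strides:
        grid = img_size // stride  # 13, 26, 52 for a 416 input
        shapes.append((batch_size, num_anchors, grid, grid, num_outputs))
    return shapes


shapes = yolo_output_shapes()
# Total number of predicted boxes over the three layers
total_boxes = sum(na * gy * gx for _, na, gy, gx, _ in shapes)
print(shapes)
print(total_boxes)
```

The 255 channels of the convolution layer before each yolo layer are simply 3 * 85.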

2. Loss

The total loss consists of three parts:

lbox (for the outputs x, y, w, h)

lobj (for the output obj)

lcls (for the output cls)

2.1 lobj

lobj is computed over all predictions.

Label: for each anchor in each grid cell, the label is 1 if a qualifying target exists there, otherwise 0.

Requirements: the target's center coordinates fall in the grid cell, and the wh_iou between the anchor and the target size satisfies wh_iou > iou_t (0.2).

wh_iou is computed as:

$$\mathrm{inter} = \min(\mathrm{aw}, \mathrm{tw}) \cdot \min(\mathrm{ah}, \mathrm{th})$$

$$\mathrm{wh\_iou} = \frac{\mathrm{inter}}{\mathrm{aw} \cdot \mathrm{ah} + \mathrm{tw} \cdot \mathrm{th} - \mathrm{inter}}$$

where aw, ah are the anchor size and tw, th are the target size.
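The formula can be tried out with a few lines of plain Python. This is only a scalar sketch of the idea (the repository works on tensors); the target size (100, 100) is an arbitrary example value.

```python
def wh_iou(aw, ah, tw, th):
    """IoU of two boxes that share the same center, using only width and height."""
    inter = min(aw, tw) * min(ah, th)
    return inter / (aw * ah + tw * th - inter)


# yolo1 anchors from yolov3.cfg and a made-up 100x100 target (pixels)
anchors = [(116, 90), (156, 198), (373, 326)]
target_wh = (100, 100)
iou_t = 0.2

ious = [wh_iou(aw, ah, *target_wh) for aw, ah in anchors]
matched = [i for i, v in enumerate(ious) if v > iou_t]
print(ious)
print(matched)
```

Here the first two anchors pass the threshold and the largest one does not, so this target would produce positive labels for two anchors of the cell.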

Loss computation:

nn.BCEWithLogitsLoss is used, which first applies a sigmoid to the input and then computes the binary cross-entropy:

$$l_{n} = -w_{n}\left[y_{n} \cdot \log \sigma(x_{n}) + (1-y_{n}) \cdot \log\left(1-\sigma(x_{n})\right)\right]$$
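For a single element the formula can be written in plain Python as below. This is a sketch of the math only, not of PyTorch's implementation; the rearranged "stable" form is a common way to avoid overflow for large |x| and is my own choice here.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def bce_with_logits_naive(x, y, w=1.0):
    """Direct transcription of the formula: sigmoid first, then cross-entropy."""
    s = sigmoid(x)
    return -w * (y * math.log(s) + (1.0 - y) * math.log(1.0 - s))


def bce_with_logits(x, y, w=1.0):
    """Same value, rearranged as max(x, 0) - x*y + log(1 + exp(-|x|))."""
    return w * (max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x))))


print(bce_with_logits(0.0, 1.0))   # logit 0 means probability 0.5
print(bce_with_logits(2.0, 1.0), bce_with_logits_naive(2.0, 1.0))
```

A logit of 0 gives a loss of log 2 regardless of the label, which is a handy sanity check.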

2.2 lcls

lcls is computed only for predictions at positions that have a qualifying target.

Label: the one-hot vector of the target class.

Loss computation:

Same as lobj: nn.BCEWithLogitsLoss.

2.3 lbox

lbox is computed only for predictions at positions that have a qualifying target.

All relevant values are scaled down by the stride of this yolo layer, including the label's x, y, w, h and the anchors' w, h.

Taking yolo1 as an example: the stride is 32, so the anchors [116,90], [156,198], [373,326] become [3.62500, 2.81250], [4.87500, 6.18750], [11.65625, 10.18750].

After scaling, the integer part of the label's x, y tells which grid cell the center falls in, and the fractional part is the offset from the top-left corner of that cell. The sigmoid of the network's predicted x, y is trained to approach this fractional part. In other words, the network does not learn the absolute x, y, but the relative position within the grid cell.

The network's predicted w, h are passed through exp and multiplied by the anchor's w, h to approach the label's w, h. In other words, the network does not learn the absolute w, h, but the ratio between the target and the anchor.
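To make the scaling concrete, the sketch below encodes one label given in pixels. The helper encode_target and the example box are hypothetical; the log-ratio is only the value the raw w, h outputs would need for exp(output) * anchor to reproduce the label, since the repository compares boxes through GIoU rather than regressing this value directly.

```python
import math


def encode_target(x, y, w, h, anchor_w, anchor_h, stride):
    """Split a pixel-space box into grid cell, in-cell offset and log size ratio."""
    gx, gy = x / stride, y / stride          # center in grid units
    gi, gj = int(gx), int(gy)                # integer part: which cell
    dx, dy = gx - gi, gy - gj                # fractional part: offset in the cell
    tw = math.log((w / stride) / (anchor_w / stride))
    th = math.log((h / stride) / (anchor_h / stride))
    return (gi, gj), (dx, dy), (tw, th)


# A made-up box centered at (200, 120) px with the size of the second yolo1 anchor
cell, offset, log_ratio = encode_target(200, 120, 156, 198, 156, 198, stride=32)
print(cell, offset, log_ratio)
```

The center lands in cell (6, 3) with offset (0.25, 0.75), and because the box has exactly the anchor's size the log ratios are 0.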

Loss computation: $1 - \mathrm{giou}$

$$\mathrm{giou} = \frac{\mathrm{inter}}{\mathrm{union}} - \frac{\mathrm{c\_area} - \mathrm{union}}{\mathrm{c\_area}}$$

$\mathrm{inter}$ is the area of overlap of the two regions.

$\mathrm{union}$ is the total area covered by the two regions.

$\mathrm{c\_area}$ is the area of the smallest rectangle that encloses both regions.

The range of $\mathrm{giou}$ is $(-1, 1]$. When the two regions coincide exactly, $\mathrm{giou} = 1$; when two regions of finite area are infinitely far apart, $\mathrm{giou} \to -1$. Compared with $\mathrm{iou}$, $\mathrm{giou}$ can still measure the distance between two regions when they do not intersect.
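The two limiting cases can be checked numerically with a scalar version of the formula. This sketch assumes boxes in (center x, center y, w, h) format with positive area and skips the small epsilon terms a production implementation would add against division by zero.

```python
def giou_xywh(box1, box2):
    """GIoU of two boxes given as (cx, cy, w, h)."""
    x1, y1, w1, h1 = box1
    x2, y2, w2, h2 = box2
    # Convert to corner coordinates
    b1 = (x1 - w1 / 2, y1 - h1 / 2, x1 + w1 / 2, y1 + h1 / 2)
    b2 = (x2 - w2 / 2, y2 - h2 / 2, x2 + w2 / 2, y2 + h2 / 2)
    # Intersection (zero when the boxes do not overlap)
    inter_w = max(0.0, min(b1[2], b2[2]) - max(b1[0], b2[0]))
    inter_h = max(0.0, min(b1[3], b2[3]) - max(b1[1], b2[1]))
    inter = inter_w * inter_h
    union = w1 * h1 + w2 * h2 - inter
    # Smallest enclosing rectangle
    c_w = max(b1[2], b2[2]) - min(b1[0], b2[0])
    c_h = max(b1[3], b2[3]) - min(b1[1], b2[1])
    c_area = c_w * c_h
    return inter / union - (c_area - union) / c_area


same = giou_xywh((0, 0, 2, 2), (0, 0, 2, 2))
far = giou_xywh((0, 0, 2, 2), (100, 0, 2, 2))
print(same, far)
```

Identical boxes give 1, and pushing the second box further away drives the value toward -1 even though the plain IoU stays at 0.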

Core loss code:

pxy = ps[:, :2].sigmoid()
pwh = ps[:, 2:4].exp().clamp(max=1E3) * anchors[i]
pbox = torch.cat((pxy, pwh), 1)
giou = bbox_iou(pbox.t(), tbox[i], x1y1x2y2=False, GIoU=True)
lbox += (1.0 - giou).mean()
lcls += BCEcls(ps[:, 5:], t)  # t: class targets (one-hot, see 2.2)
lobj += BCEobj(pi[..., 4], tobj)  # tobj: shape [bs, 3, 13, 13], 1 where a target exists, 0 elsewhere

where:

BCEcls = nn.BCEWithLogitsLoss(pos_weight=ft([h['cls_pw']]), reduction=red)  # 'cls_pw': 1.0
BCEobj = nn.BCEWithLogitsLoss(pos_weight=ft([h['obj_pw']]), reduction=red)  # 'obj_pw': 1.0

pi is the full prediction output of the network for one yolo layer.

ps is the subset of predictions that meet the requirements.

lobj is computed over all predictions; lcls and lbox are computed only over the predictions that meet the requirements.

Requirements:

1. The target's center coordinates fall in the grid cell.

2. The wh_iou between the anchor and the target box satisfies wh_iou > iou_t (0.2).

wh_iou is computed as:

inter = min(aw,tw)*min(ah,th)

wh_iou = inter/(aw*ah+tw*th-inter)

3. Code analysis

3.0. Darknet

The core of network construction is create_modules. The forward pass of the whole network becomes more intuitive after reading how each layer is built in the sections below. The resulting yolo_out contains the outputs of the 3 yolo layers.

By default, ONNX_EXPORT = False, augment = False, verbose = False.

For brevity, the statements that only run when these three parameters are True are removed from the code below for now.

# train.py 91
model = Darknet(cfg).to(device)

# models.py 225
class Darknet(nn.Module):
    def __init__(self, cfg, img_size=(416, 416), verbose=False):
        super(Darknet, self).__init__()

        self.module_defs = parse_model_cfg(cfg)
        self.module_list, self.routs = create_modules(self.module_defs, img_size, cfg)
        self.yolo_layers = get_yolo_layers(self)  # yolov3: [82, 94, 106]

        self.version = np.array([0, 2, 5], dtype=np.int32)  # (int32) version info: major, minor, revision
        self.seen = np.array([0], dtype=np.int64)  # (int64) number of images seen during training
        self.info(verbose) if not ONNX_EXPORT else None  # print model description

    def forward(self, x, augment=False, verbose=False):
        if not augment:
            return self.forward_once(x)

    def forward_once(self, x, augment=False, verbose=False):
        img_size = x.shape[-2:]  # height, width
        yolo_out, out = [], []
        if verbose:
            print('0', x.shape)
            str = ''

        for i, module in enumerate(self.module_list):
            name = module.__class__.__name__
            if name in ['WeightedFeatureFusion', 'FeatureConcat']:  # sum, concat
                x = module(x, out)  # WeightedFeatureFusion(), FeatureConcat()
            elif name == 'YOLOLayer':
                yolo_out.append(module(x, out))
            else:  # run module directly, i.e. mtype = 'convolutional', 'upsample', 'maxpool', 'batchnorm2d' etc.
                x = module(x)

            out.append(x if self.routs[i] else [])

        if self.training:  # train
            return yolo_out
        else:  # inference or test
            x, p = zip(*yolo_out)  # inference output, training output
            x = torch.cat(x, 1)  # cat yolo outputs
            return x, p

3.1. parse_model_cfg

Input: path to the model configuration file

Output: a list of dictionaries, one per layer, each holding that layer's configuration

# utils/parse_config.py 6
def parse_model_cfg(path):
    # Parse the yolo *.cfg file and return module definitions. path may be 'cfg/yolov3.cfg', 'yolov3.cfg', or 'yolov3'
    if not path.endswith('.cfg'):  # add .cfg suffix if omitted
        path += '.cfg'
    if not os.path.exists(path) and os.path.exists('cfg' + os.sep + path):  # add cfg/ prefix if omitted
        path = 'cfg' + os.sep + path

    with open(path, 'r') as f:
        lines = f.read().split('\n')
    lines = [x for x in lines if x and not x.startswith('#')]
    # lstrip() removes leading whitespace (\n, \r, \t, ' ', i.e. newline, carriage return, tab, space)
    # rstrip() removes trailing whitespace
    lines = [x.rstrip().lstrip() for x in lines]
    mdefs = []  # module definitions
    for line in lines:
        if line.startswith('['):  # This marks the start of a new block
            mdefs.append({})
            mdefs[-1]['type'] = line[1:-1].rstrip()
            if mdefs[-1]['type'] == 'convolutional':
                mdefs[-1]['batch_normalize'] = 0  # pre-populate with zeros (may be overwritten later)
        else:
            key, val = line.split("=")
            key = key.rstrip()

            if key == 'anchors':  # return nparray
                mdefs[-1][key] = np.array([float(x) for x in val.split(',')]).reshape((-1, 2))  # np anchors
            elif (key in ['from', 'layers', 'mask']) or (key == 'size' and ',' in val):  # return array
                mdefs[-1][key] = [int(x) for x in val.split(',')]
            else:
                val = val.strip()
                # isnumeric() checks whether a string consists only of digits; it cannot detect floats
                # (a string containing a decimal point gives False)
                # TODO: .isnumeric() actually fails to get the float case
                if val.isnumeric():  # return int or float (the int/float check is redundant for now: the value is always an int)
                    mdefs[-1][key] = int(val) if (int(val) - float(val)) == 0 else float(val)
                else:
                    mdefs[-1][key] = val  # return string

    # Check whether all fields are supported
    supported = ['type', 'batch_normalize', 'filters', 'size', 'stride', 'pad', 'activation', 'layers', 'groups',
                 'from', 'mask', 'anchors', 'classes', 'num', 'jitter', 'ignore_thresh', 'truth_thresh', 'random',
                 'stride_x', 'stride_y', 'weights_type', 'weights_normalization', 'scale_x_y', 'beta_nms', 'nms_kind',
                 'iou_loss', 'iou_normalizer', 'cls_normalizer', 'iou_thresh', 'probability']

    f = []  # fields
    for x in mdefs[1:]:
        [f.append(k) for k in x if k not in f]
    u = [x for x in f if x not in supported]  # unsupported fields
    assert not any(u), "Unsupported fields %s in %s. See https://github.com/ultralytics/yolov3/issues/631" % (u, path)

    return mdefs
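To see what the returned list looks like without touching the file system, here is a cut-down parser that reads from a string. parse_cfg_text is a hypothetical stand-in written for this illustration: it skips the anchors handling, the batch_normalize pre-population and the supported-fields check, and keeps only the block/key logic.

```python
def parse_cfg_text(text):
    """Simplified sketch of parse_model_cfg that parses an in-memory string."""
    mdefs = []  # one dict per [block]
    for line in text.split('\n'):
        line = line.strip()
        if not line or line.startswith('#'):
            continue
        if line.startswith('['):          # start of a new block
            mdefs.append({'type': line[1:-1].rstrip()})
        else:
            key, val = line.split('=')
            key, val = key.strip(), val.strip()
            if key in ('from', 'layers', 'mask'):
                mdefs[-1][key] = [int(v) for v in val.split(',')]
            elif val.isnumeric():         # digits only, so floats stay strings
                mdefs[-1][key] = int(val)
            else:
                mdefs[-1][key] = val
    return mdefs


cfg_text = """
[net]
width=416
height=416

[convolutional]
batch_normalize=1
filters=32
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear
"""
mdefs = parse_cfg_text(cfg_text)
for m in mdefs:
    print(m)
```

The first dictionary is the [net] block, which is why create_modules pops element 0 before building layers. The isnumeric() limitation mentioned in the TODO also shows up here: a value such as .3 would be kept as a string.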

3.2. create_modules

In yolov3.cfg, the layer types are [convolutional], [shortcut], [yolo], [route], and [upsample].

1. [convolutional]

Conv2d + BatchNorm2d + LeakyReLU

Notes:

(1) Because every size is a single value, all convolution layers are 'Conv2d'; each parameter is set according to the configuration file.

(2) pad=1 in the configuration file is not the padding value; the padding is kernel_size // 2 (when pad is nonzero).

(3) Every convolution layer has a BatchNorm layer, except the one immediately before each yolo layer.

(4) The convolution layer immediately before each yolo layer has its routs entry set to True.
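Note (2) is easier to see with numbers. The sketch below applies the usual Conv2d output-size formula (dilation 1) with padding = k // 2; conv_out_size is a helper name made up for this example.

```python
def conv_out_size(n, k, stride, pad_flag=1):
    """Spatial output size of a convolution with padding = k // 2 when pad is set."""
    padding = k // 2 if pad_flag else 0
    return (n + 2 * padding - k) // stride + 1


print(conv_out_size(416, k=3, stride=1))  # 3x3, stride 1: size preserved
print(conv_out_size(416, k=3, stride=2))  # 3x3, stride 2: size halved
print(conv_out_size(13, k=1, stride=1))   # 1x1: padding is 0, size preserved
```

So with pad=1 a 3x3 convolution gets padding 1 and a 1x1 convolution gets padding 0, and only the stride-2 layers change the feature-map size.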

# models.py 8
def create_modules(module_defs, img_size, cfg):
    # Constructs module list of layer blocks from module configuration in module_defs

    img_size = [img_size] * 2 if isinstance(img_size, int) else img_size  # expand if necessary
    _ = module_defs.pop(0)  # cfg training hyperparams (unused)
    output_filters = [3]  # input channels
    module_list = nn.ModuleList()
    routs = []  # list of layers which rout to deeper layers
    yolo_index = -1

    for i, mdef in enumerate(module_defs):
        modules = nn.Sequential()

        if mdef['type'] == 'convolutional':
            bn = mdef['batch_normalize']
            filters = mdef['filters']
            k = mdef['size']  # kernel size
            stride = mdef['stride'] if 'stride' in mdef else (mdef['stride_y'], mdef['stride_x'])
            if isinstance(k, int):  # single-size conv
                modules.add_module('Conv2d', nn.Conv2d(in_channels=output_filters[-1],
                                                       out_channels=filters,
                                                       kernel_size=k,
                                                       stride=stride,
                                                       padding=k // 2 if mdef['pad'] else 0,
                                                       groups=mdef['groups'] if 'groups' in mdef else 1,
                                                       bias=not bn))
            else:  # multiple-size conv
                modules.add_module('MixConv2d', MixConv2d(in_ch=output_filters[-1],
                                                          out_ch=filters,
                                                          k=k,
                                                          stride=stride,
                                                          bias=not bn))

            if bn:
                modules.add_module('BatchNorm2d', nn.BatchNorm2d(filters, momentum=0.03, eps=1E-4))
            else:
                routs.append(i)  # detection output (goes into yolo layer)

            if mdef['activation'] == 'leaky':  # activation study https://github.com/ultralytics/yolov3/issues/441
                modules.add_module('activation', nn.LeakyReLU(0.1, inplace=True))
            elif mdef['activation'] == 'swish':
                modules.add_module('activation', Swish())
            elif mdef['activation'] == 'mish':
                modules.add_module('activation', Mish())

        # other layer types (shortcut, route, upsample, yolo) are covered in the sections below

        else:
            print('Warning: Unrecognized Layer Type: ' + mdef['type'])

        # Register module list and number of output filters
        module_list.append(modules)
        output_filters.append(filters)

    routs_binary = [False] * (i + 1)
    for i in routs:
        routs_binary[i] = True
    return module_list, routs_binary

2. [shortcut]

[shortcut]

from=-3

activation=linear

Notes:

(1) Used in res_block: adds the output of the previous layer to the output of the layer specified by from.

(2) In the final module_list it is a WeightedFeatureFusion().

(3) As the "Adjust channels" part at the end of forward shows, the output feature dimensions stay the same as the output of the previous layer (layer -1).

(4) The layer that from points to has its routs entry set to True.

        elif mdef['type'] == 'shortcut':  # nn.Sequential() placeholder for 'shortcut' layer
            layers = mdef['from']
            filters = output_filters[-1]
            routs.extend([i + l if l < 0 else l for l in layers])
            modules = WeightedFeatureFusion(layers=layers, weight='weights_type' in mdef)

# utils/layers.py 38
class WeightedFeatureFusion(nn.Module):  # weighted sum of 2 or more layers https://arxiv.org/abs/1911.09070
    def __init__(self, layers, weight=False):
        super(WeightedFeatureFusion, self).__init__()
        self.layers = layers  # layer indices
        self.weight = weight  # apply weights boolean
        self.n = len(layers) + 1  # number of layers
        if weight:
            # nn.Parameter makes the weights trainable
            self.w = nn.Parameter(torch.zeros(self.n), requires_grad=True)  # layer weights

    def forward(self, x, outputs):
        # Weights
        if self.weight:
            w = torch.sigmoid(self.w) * (2 / self.n)  # sigmoid weights (0-1)
            x = x * w[0]

        # Fusion
        nx = x.shape[1]  # input channels
        for i in range(self.n - 1):
            a = outputs[self.layers[i]] * w[i + 1] if self.weight else outputs[self.layers[i]]  # feature to add
            na = a.shape[1]  # feature channels

            # Adjust channels
            if nx == na:  # same shape
                x = x + a
            elif nx > na:  # slice input
                x[:, :na] = x[:, :na] + a  # or a = nn.ZeroPad2d((0, 0, 0, 0, 0, dc))(a); x = x + a
            else:  # slice feature
                x = x + a[:, :nx]

        return x

3. [route]

[route]

layers = -1, 61

Notes:

(1) Concatenates the output features of the layers specified by layers along the channel dimension; the output channel count is the sum of the input channel counts. With a single layer, the output is simply that layer's output.

(2) In the final module_list it is a FeatureConcat().

(3) The layers that layers points to have their routs entries set to True.
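The index arithmetic used for shortcut and route layers is compact, so a small sketch may help. It reuses the two expressions from create_modules on a toy output_filters list; the channel counts below are invented for illustration and are not taken from yolov3.cfg.

```python
def resolve_route(i, layers, output_filters):
    """Resolve relative layer indices and sum the channels of the routed layers.

    output_filters[0] is the image channel count (3), so the output channels of
    layer l are stored at output_filters[l + 1]; negative l counts back from the
    end of the list, i.e. from the layer just before the current one.
    """
    routed = [i + l if l < 0 else l for l in layers]
    filters = sum(output_filters[l + 1 if l > 0 else l] for l in layers)
    return routed, filters


# Toy network: layers 0..4 output 32, 64, 32, 64, 128 channels; we are building layer 5
output_filters = [3, 32, 64, 32, 64, 128]
routed, filters = resolve_route(5, layers=[-1, 1], output_filters=output_filters)
print(routed, filters)
```

Layer 5 therefore concatenates layer 4 (128 channels) and layer 1 (64 channels), and layers 4 and 1 are the ones whose outputs must be kept, which is what the routs list records.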

elif mdef['type'] == 'route': # nn.Sequential() placeholder for 'route' layer

layers = mdef['layers']

filters = sum([output_filters[l + 1 if l > 0 else l] for l in layers])

routs.extend([i + l if l < 0 else l for l in layers])

modules = FeatureConcat(layers=layers)

# utils/layers.py 28

class FeatureConcat(nn.Module):

def __init__(self, layers):

super(FeatureConcat, self).__init__()

self.layers = layers # layer indices

self.multiple = len(layers) > 1 # multiple layers flag

def forward(self, x, outputs):

return torch.cat([outputs[i] for i in self.layers], 1) if self.multiple else outputs[self.layers[0]]

4. [upsample]

[upsample]

stride=2

Initially, yolo_index = -1 and img_size = (416, 416).

Assuming ONNX_EXPORT = True:

Upsampling after yolo1: g = 1/16, size = (26, 26)

Upsampling after yolo2: g = 1/8, size = (52, 52)
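These two sizes follow directly from the gain expression in the ONNX branch. The sketch below repeats that arithmetic in isolation; onnx_upsample_size is just a name chosen for this example.

```python
def onnx_upsample_size(yolo_index, img_size=(416, 416)):
    """Explicit upsample target size, as computed when ONNX_EXPORT is True."""
    g = (yolo_index + 1) * 2 / 32  # gain relative to the input image
    return tuple(int(x * g) for x in img_size)


print(onnx_upsample_size(0))  # after yolo1 (yolo_index is 0 at that point)
print(onnx_upsample_size(1))  # after yolo2
```

In the default (non-ONNX) path the layer simply uses scale_factor=2, which gives the same 13 to 26 to 52 progression.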

# ONNX_EXPORT = False is set at the top of models.py
        elif mdef['type'] == 'upsample':
            if ONNX_EXPORT:  # explicitly state size, avoid scale_factor
                g = (yolo_index + 1) * 2 / 32  # gain
                modules = nn.Upsample(size=tuple(int(x * g) for x in img_size))
            else:
                modules = nn.Upsample(scale_factor=mdef['stride'])

5. [yolo]

[yolo]

mask = 6,7,8

anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326

classes=80

num=9

jitter=.3

ignore_thresh = .7

truth_thresh = 1

random=1

The parameters are processed first, and then the yolo layer is built with YOLOLayer.

(The try block is set aside for now.)

        elif mdef['type'] == 'yolo':
            yolo_index += 1  # 0, 1, 2: index of the yolo layer
            stride = [32, 16, 8]  # P5, P4, P3 strides
            if any(x in cfg for x in ['panet', 'yolov4', 'cd53']):  # stride order reversed
                stride = list(reversed(stride))
            layers = mdef['from'] if 'from' in mdef else []  # layers = []
            modules = YOLOLayer(anchors=mdef['anchors'][mdef['mask']],  # anchor list
                                nc=mdef['classes'],  # number of classes (80)
                                img_size=img_size,  # (416, 416)
                                yolo_index=yolo_index,  # 0, 1, 2...
                                layers=layers,  # output layers
                                stride=stride[yolo_index])

            # Initialize preceding Conv2d() bias (https://arxiv.org/pdf/1708.02002.pdf section 3.3)
            try:
                j = layers[yolo_index] if 'from' in mdef else -1
                # If previous layer is a dropout layer, get the one before
                if module_list[j].__class__.__name__ == 'Dropout':
                    j -= 1
                bias_ = module_list[j][0].bias  # shape(255,)
                bias = bias_[:modules.no * modules.na].view(modules.na, -1)  # shape(3,85)
                bias[:, 4] += -4.5  # obj
                bias[:, 5:] += math.log(0.6 / (modules.nc - 0.99))  # cls (sigmoid(p) = 1/nc)
                # bias is a view of bias_, so adjusting bias also adjusts the corresponding values in bias_
                module_list[j][0].bias = torch.nn.Parameter(bias_, requires_grad=bias_.requires_grad)
            except:
                print('WARNING: smart bias initialization failure.')
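Although the try block is set aside here, the two constants in it are easy to interpret numerically. The sketch below assumes the convolution bias starts close to zero before the shift is added (the real initial bias is small but not exactly zero), and shows the probabilities that the shifted biases correspond to after a sigmoid.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


nc = 80                                  # number of classes (COCO)
obj_shift = -4.5                         # added to the objectness bias
cls_shift = math.log(0.6 / (nc - 0.99))  # added to every class bias

obj_prior = sigmoid(obj_shift)           # initial objectness probability
cls_prior = sigmoid(cls_shift)           # initial per-class probability
print(obj_prior, cls_prior)
```

Both values come out at roughly one percent or below, so at the start of training the network predicts "no object" almost everywhere, which is the idea of the prior-probability initialization in section 3.3 of the paper linked in the code comment.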

# Inputs to YOLOLayer, taking yolo1 as an example
anchors = array([[116,90], [156,198], [373,326]])
nc = 80
img_size = (416,416)
yolo_index = 0
layers = []
stride = 32

In the training phase, the layer reshapes the output of the preceding convolution layer:

Input: (batch_size, 255, 13, 13)

Output: (batch_size, 3, 13, 13, 85), i.e. (batch_size, anchors, grid, grid, xywh + obj + classes)
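The view followed by permute used for this reshape implies a fixed mapping between the 255 input channels and the (anchor, field) pairs of the output. A tiny sketch of that mapping, with channel_index as a hypothetical helper:

```python
def channel_index(anchor, field, num_outputs=85):
    """Channel of the (bs, 255, ny, nx) tensor that ends up at out[b, anchor, y, x, field].

    view(bs, na, no, ny, nx) splits channel c into (anchor, field) with
    c = anchor * no + field; permute(0, 1, 3, 4, 2) then moves field to the end.
    """
    return anchor * num_outputs + field


print(channel_index(0, 4))    # objectness of anchor 0
print(channel_index(2, 4))    # objectness of anchor 2
print(channel_index(2, 84))   # last class score of anchor 2
```

In other words, channels 0 to 84 belong to the first anchor, 85 to 169 to the second and 170 to 254 to the third.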

The ASFF part of forward is set aside for now.

class YOLOLayer(nn.Module):
    def __init__(self, anchors, nc, img_size, yolo_index, layers, stride):
        super(YOLOLayer, self).__init__()
        self.anchors = torch.Tensor(anchors)
        self.index = yolo_index  # index of this layer in layers
        self.layers = layers  # model output layer indices
        self.stride = stride  # layer stride
        self.nl = len(layers)  # number of output layers (3); layers = [] here, so this is actually 0
        self.na = len(anchors)  # number of anchors (3)
        self.nc = nc  # number of classes (80)
        self.no = nc + 5  # number of outputs (85)
        self.nx, self.ny, self.ng = 0, 0, 0  # initialize number of x, y gridpoints
        self.anchor_vec = self.anchors / self.stride
        self.anchor_wh = self.anchor_vec.view(1, self.na, 1, 1, 2)

    def create_grids(self, ng=(13, 13), device='cpu'):
        self.nx, self.ny = ng  # x and y grid size
        self.ng = torch.tensor(ng, dtype=torch.float)

        # build xy offsets
        if not self.training:
            yv, xv = torch.meshgrid([torch.arange(self.ny, device=device), torch.arange(self.nx, device=device)])
            self.grid = torch.stack((xv, yv), 2).view((1, 1, self.ny, self.nx, 2)).float()

        if self.anchor_vec.device != device:
            self.anchor_vec = self.anchor_vec.to(device)
            self.anchor_wh = self.anchor_wh.to(device)

    def forward(self, p, out):
        ASFF = False  # https://arxiv.org/abs/1911.09516
        if ASFF:
            i, n = self.index, self.nl  # index in layers, number of layers
            p = out[self.layers[i]]
            bs, _, ny, nx = p.shape  # bs, 255, 13, 13
            if (self.nx, self.ny) != (nx, ny):
                self.create_grids((nx, ny), p.device)

            # outputs and weights
            # w = F.softmax(p[:, -n:], 1)  # normalized weights
            w = torch.sigmoid(p[:, -n:]) * (2 / n)  # sigmoid weights (faster)
            # w = w / w.sum(1).unsqueeze(1)  # normalize across layer dimension

            # weighted ASFF sum
            p = out[self.layers[i]][:, :-n] * w[:, i:i + 1]
            for j in range(n):
                if j != i:
                    p += w[:, j:j + 1] * \
                         F.interpolate(out[self.layers[j]][:, :-n], size=[ny, nx], mode='bilinear', align_corners=False)

        else:
            bs, _, ny, nx = p.shape  # bs, 255, 13, 13
            if (self.nx, self.ny) != (nx, ny):
                self.create_grids((nx, ny), p.device)  # during training this sets self.nx, self.ny = nx, ny

        # p.view(bs, 255, 13, 13) --> (bs, 3, 13, 13, 85)  # (bs, anchors, grid, grid, classes + xywh)
        p = p.view(bs, self.na, self.no, self.ny, self.nx).permute(0, 1, 3, 4, 2).contiguous()  # prediction

        if self.training:
            return p

        else:  # inference
            io = p.clone()  # inference output
            io[..., :2] = torch.sigmoid(io[..., :2]) + self.grid  # xy
            io[..., 2:4] = torch.exp(io[..., 2:4]) * self.anchor_wh  # wh yolo method
            io[..., :4] *= self.stride
            torch.sigmoid_(io[..., 4:])
            return io.view(bs, -1, self.no), p  # view [1, 3, 13, 13, 85] as [1, 507, 85]
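The inference branch can be followed by hand for a single prediction. The sketch below applies the same four steps (sigmoid plus grid offset, exp times scaled anchor, multiply by stride) to scalar values; decode_cell and the raw outputs of all zeros are assumptions for the example.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_cell(tx, ty, tw, th, gx, gy, anchor_w, anchor_h, stride):
    """Turn raw outputs of one anchor in cell (gx, gy) into a pixel-space box."""
    x = (sigmoid(tx) + gx) * stride                 # xy: offset in cell + cell index
    y = (sigmoid(ty) + gy) * stride
    w = math.exp(tw) * (anchor_w / stride) * stride  # wh: ratio to the scaled anchor
    h = math.exp(th) * (anchor_h / stride) * stride
    return x, y, w, h


# Raw outputs of 0 in cell (6, 3) of yolo1, first anchor (116, 90), stride 32
box = decode_cell(0.0, 0.0, 0.0, 0.0, gx=6, gy=3, anchor_w=116, anchor_h=90, stride=32)
print(box)
```

With all raw outputs at zero the box sits in the middle of its cell and has exactly the anchor's size, which matches the earlier statement that the network learns offsets within the cell and ratios to the anchor.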

3.3. Loss

# train.py 278
# Forward
pred = model(imgs)

# Loss
loss, loss_items = compute_loss(pred, targets, model)
if not torch.isfinite(loss):
    print('WARNING: non-finite loss, ending training ', loss_items)
    return results

# utils/utils.py 353
def compute_loss(p, targets, model):

Input parameters:

1. pred: the prediction output of the network

shape: [number of yolo layers, batch_size, number of anchors, grid, grid, xywh + obj + classes]

2. targets: the labels

shape: [nt, 6]

where nt is the number of targets contained in the batch_size images of the batch, and 6 is the label information [image index, class, x, y, w, h]

# utils/utils.py 420
def build_targets(p, targets, model):

The inputs are the same as those of compute_loss.

Output: tcls, tbox, indices, anch

Each of the 4 outputs is a list with 3 entries (one per yolo layer); each entry holds the information of the targets that meet the requirement at that yolo layer.

The requirement is that the wh_iou between the anchors and the targets exceeds the model hyperparameter iou_t.

wh_iou is computed as:

inter = min(aw,tw)*min(ah,th)

wh_iou = inter/(aw*ah+tw*th-inter)

1. indices: a list of 3 tuples (one per yolo layer); each tuple holds 4 tensors: image index, anchor index, y, x (the coordinates are truncated to their integer part; the anchor index is 0, 1, or 2)

2. tcls: a list of 3 tensors holding the class indices

3. tbox: a list of 3 tensors of [dx, dy, w, h], where dx, dy are the fractional parts of x, y

4. anch: a list of 3 tensors holding the anchor sizes

The xywh values and the anchor sizes are all scaled to the grid coordinates of this yolo layer, e.g. (0,1) or (0,416) → (0,13)
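The four outputs can be illustrated for a single yolo layer with a plain-Python sketch. This is not the repository's vectorized implementation; build_targets_one_layer is a hypothetical helper that assumes labels with xywh normalized to (0, 1) and follows the description above: scale to grid units, filter anchors by wh_iou, then split the center into cell index and offset.

```python
def build_targets_one_layer(targets, anchors_px, stride, grid, iou_t=0.2):
    """targets: list of (image, cls, x, y, w, h) with xywh normalized to (0, 1)."""
    anchor_vec = [(aw / stride, ah / stride) for aw, ah in anchors_px]
    indices, tcls, tbox, anch = [], [], [], []
    for img, cls, x, y, w, h in targets:
        gx, gy, gw, gh = x * grid, y * grid, w * grid, h * grid  # grid units
        gi, gj = int(gx), int(gy)                                # cell indices
        for a, (aw, ah) in enumerate(anchor_vec):
            inter = min(aw, gw) * min(ah, gh)
            if inter / (aw * ah + gw * gh - inter) > iou_t:      # wh_iou filter
                indices.append((img, a, gj, gi))
                tcls.append(cls)
                tbox.append((gx - gi, gy - gj, gw, gh))          # dx, dy, w, h
                anch.append((aw, ah))
    return indices, tcls, tbox, anch


# One made-up target of class 17 in image 0, on the 13x13 layer (stride 32)
targets = [(0, 17, 0.5, 0.25, 0.25, 0.25)]
anchors_px = [(116, 90), (156, 198), (373, 326)]
indices, tcls, tbox, anch = build_targets_one_layer(targets, anchors_px, stride=32, grid=13)
print(indices)
print(tbox)
```

The target matches the first two anchors only, so it appears twice in the outputs, both times in cell (y=3, x=6) with offset (0.5, 0.25).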

# utils/utils.py 239
def bbox_iou(box1, box2, x1y1x2y2=True, GIoU=False, DIoU=False, CIoU=False):

With GIoU=True, the output is giou:

giou = inter/union - (c_area-union)/c_area

where:

inter is the area of overlap of the two regions

union is the total area covered by the two regions

c_area is the area of the smallest rectangle that encloses both regions

# utils/utils.py 353
def compute_loss(p, targets, model):  # predictions, targets, model
    ft = torch.cuda.FloatTensor if p[0].is_cuda else torch.Tensor
    lcls, lbox, lobj = ft([0]), ft([0]), ft([0])
    tcls, tbox, indices, anchors = build_targets(p, targets, model)  # targets
    h = model.hyp  # hyperparameters
    red = 'mean'  # Loss reduction (sum or mean)

    # Define criteria
    BCEcls = nn.BCEWithLogitsLoss(pos_weight=ft([h['cls_pw']]), reduction=red)  # 'cls_pw': 1.0
    BCEobj = nn.BCEWithLogitsLoss(pos_weight=ft([h['obj_pw']]), reduction=red)  # 'obj_pw': 1.0

    # class label smoothing https://arxiv.org/pdf/1902.04103.pdf eqn 3
    cp, cn = smooth_BCE(eps=0.0)  # cp=1.0 cn=0.0

    # focal loss
    g = h['fl_gamma']  # focal loss gamma 'fl_gamma': 0.0
    if g > 0:
        BCEcls, BCEobj = FocalLoss(BCEcls, g), FocalLoss(BCEobj, g)

    # per output
    nt = 0  # targets
    for i, pi in enumerate(p):  # layer index, layer predictions
        b, a, gj, gi = indices[i]  # image, anchor, gridy, gridx
        tobj = torch.zeros_like(pi[..., 0])  # target obj shape[bs, 3, 13, 13]

        nb = b.shape[0]  # number of targets
        if nb:
            nt += nb  # cumulative targets
            ps = pi[b, a, gj, gi]  # use indices to pick the network's predictions at the target locations

            # GIoU
            pxy = ps[:, :2].sigmoid()
            pwh = ps[:, 2:4].exp().clamp(max=1E3) * anchors[i]
            pbox = torch.cat((pxy, pwh), 1)  # predicted box
            giou = bbox_iou(pbox.t(), tbox[i], x1y1x2y2=False, GIoU=True)  # giou(prediction, target)
            lbox += (1.0 - giou).sum() if red == 'sum' else (1.0 - giou).mean()  # giou loss

            # Obj
            # the definition of model.gr was not found; its value is 0
            # tobj shape[bs, 3, 13, 13]: 1 where a target exists, 0 elsewhere
            tobj[b, a, gj, gi] = (1.0 - model.gr) + model.gr * giou.detach().clamp(0).type(tobj.dtype)  # giou ratio

            # Class
            if model.nc > 1:  # cls loss (only if multiple classes)
                t = torch.full_like(ps[:, 5:], cn)  # targets
                t[range(nb), tcls[i]] = cp
                lcls += BCEcls(ps[:, 5:], t)  # BCE

            # Append targets to text file
            # with open('targets.txt', 'a') as file:
            #     [file.write('%11.5g ' * 4 % tuple(x) + '\n') for x in torch.cat((txy[i], twh[i]), 1)]

        lobj += BCEobj(pi[..., 4], tobj)  # obj loss

    lbox *= h['giou']  # 'giou': 3.54
    lobj *= h['obj']  # 'obj': 64.3
    lcls *= h['cls']  # 'cls': 37.4
    if red == 'sum':
        bs = tobj.shape[0]  # batch size
        g = 3.0  # loss gain
        lobj *= g / bs
        if nt:
            lcls *= g / nt / model.nc
            lbox *= g / nt

    loss = lbox + lobj + lcls
    return loss, torch.cat((lbox, lobj, lcls, loss)).detach()
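Two small pieces of compute_loss are worth isolating: the objectness target that blends 1 and the clamped giou through model.gr, and the final weighted sum. The sketch below reproduces both on scalars; the function names are made up, and the gains are the values quoted in the code comments above.

```python
def objectness_target(giou, gr):
    """Scalar version of (1.0 - model.gr) + model.gr * giou.clamp(0)."""
    return (1.0 - gr) + gr * max(giou, 0.0)


def total_loss(lbox, lobj, lcls, hyp):
    """Weighted sum of the three parts with reduction 'mean'."""
    return hyp['giou'] * lbox + hyp['obj'] * lobj + hyp['cls'] * lcls


hyp = {'giou': 3.54, 'obj': 64.3, 'cls': 37.4}

print(objectness_target(0.8, gr=0.0))   # gr = 0: the target is always 1
print(objectness_target(0.8, gr=1.0))   # gr = 1: the target is the giou itself
print(objectness_target(-0.3, gr=1.0))  # negative giou is clamped to 0
print(total_loss(1.0, 1.0, 1.0, hyp))
```

With gr = 0, as stated in the comment in compute_loss, tobj is a plain 0/1 mask, which matches the label definition in section 2.1.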

边栏推荐

- 【图像去噪】基于马尔可夫随机场实现图像去噪附matlab代码

- Gmail: how do I recall an outgoing message?

- Visual slam lecture notes-10-2

- 程序员10年巨变,一切都变了又好像没变...

- Raki's notes on reading paper: memory replace with data compression for continuous learning

- Undefined reference to 'g2o:: vertexe3:: vertexe3()'

- Record the phpstudy configuration php8.0 and php8.1 extension redis

- 5g communication test manual based on Ti am5728 + artix-7 FPGA development board (dsp+arm)

- [Multisim Simulation] generate square wave and triangular wave generators by operational amplifier

- [image denoising] impulse noise image denoising based on absolute difference median filter, weighted median filter and improved weighted median filter with matlab code attached

猜你喜欢

highcharts设置柱状图宽度、渐变、圆角、柱子上方数据

【信号去噪】基于FFT和FIR实现信号去噪附matlab代码

Flink CDC 在大健云仓的实践

![[image denoising] impulse noise image denoising based on absolute difference median filter, weighted median filter and improved weighted median filter with matlab code attached](/img/dc/6348cb17ca91afe39381a9b3eb54e6.png)

[image denoising] impulse noise image denoising based on absolute difference median filter, weighted median filter and improved weighted median filter with matlab code attached

postman配置中文

Raki's notes on reading paper: memory replace with data compression for continuous learning

Specific methods for porting WinCC flexible 2008 project to botu WinCC

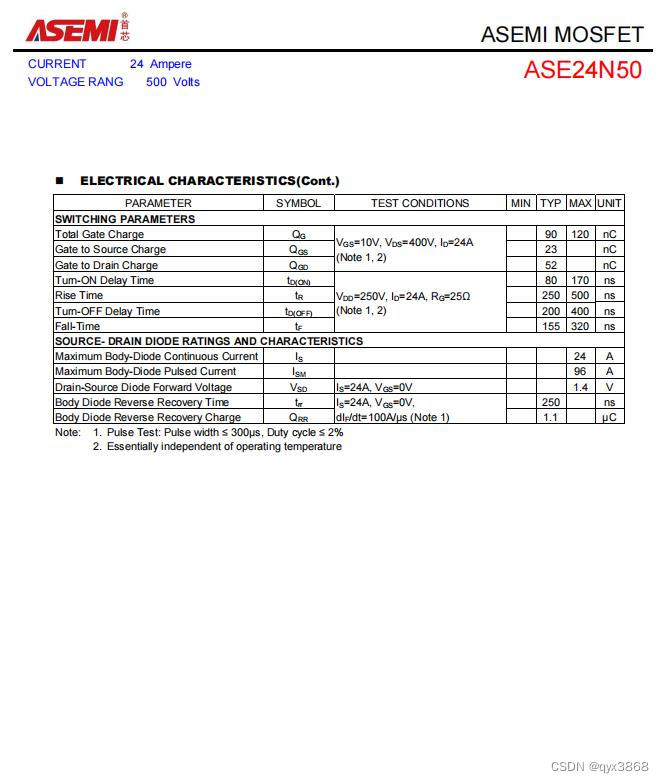

ASEMI的MOS管24N50参数,24N50封装,24N50尺寸

【Multisim仿真】利用运算放大器产生方波、三角波发生器

LDPC 7 - simple example of decoding

随机推荐

Hospital intelligent infusion management system source code hospital source code

Qubicle notes: Hello voxel

MySQL federated index and BTREE

Bottomsheetdialog usage details, setting fillet, fixed height, default full screen, etc

[assembly] analysis of Experiment 7 of the fourth edition of assembly language

【图像分割】基于马尔可夫随机场实现图像分割附matlab代码

【图像去噪】基于绝对差分中值滤波、加权中值滤波法、改进加权中值滤波实现脉冲噪声图像去噪附matlab代码

Specific methods for porting WinCC flexible 2008 project to botu WinCC

Visual slam lecture notes-10-1

Flask CKEditor 富文本编译器实现文章的图片上传以及回显,解决路径出错的问题

Operator new and placement new

Leetcode: sword finger offer 59 - ii Maximum value of queue [deque + sortedlist]

leetcode:66. add one-tenth

【图像去噪】基于马尔可夫随机场实现图像去噪附matlab代码

激活函数公式、导数、图像笔记

美国通胀率8.6%创41年历史新高!通胀高烧不退?股票、加密市场先跌为敬!

Kubernetes binary installation (v1.20.15) (IX) closeout: deploy several dashboards

7-3 combinatorial problems (*)

First acquaintance with enterprise platform

Performance of MOS transistor 25n120 of asemi in different application scenarios