
Yolov5 model construction source code details | CSDN creation punch in

2022-07-03 05:07:00 【TT ya】

I am new to deep learning, so treat this as study notes recording what I have learned. I hope it helps other beginners, and I would welcome corrections from more experienced readers. If anything here infringes on your rights, let me know and I will remove it.

The full set of annotated code in section Five is there so that you can follow the loops and see which indentation level each conditional belongs to. Its content is the same as sections Two, Three and Four. You can paste it straight into VS Code, where it is easier to read. Use whichever form you prefer.

Catalog

One 、 Read the synopsis carefully

1、 Load and parse the yaml configuration file (including network parameters and so on)

5、 Initialize weights and biases

Five 、 Code analysis comments, the full set

One 、 Read the synopsis carefully

We have already covered the YOLOv5 network architecture in an earlier post:

YOLOv5 Network structure details _tt Ya's blog -CSDN Blog

So how is the network actually built? Let's start from the source code (class Model in yolo.py of the YOLOv5 source).

Two 、def __init__

In fact, once __init__ has run, the whole network has been built.

1、 Load and parse the yaml configuration file (including network parameters and so on)

class Model(nn.Module):
    def __init__(self, cfg='yolov5s.yaml', ch=3, nc=None, anchors=None):  # model, input channels, number of classes
        super().__init__()
        if isinstance(cfg, dict):  # check whether cfg is a dict
            self.yaml = cfg  # model dict
        else:  # is *.yaml
            import yaml  # for torch hub
            self.yaml_file = Path(cfg).name  # file name of cfg
            with open(cfg, encoding='ascii', errors='ignore') as f:  # open cfg as ASCII, ignoring decoding errors
                self.yaml = yaml.safe_load(f)  # model dict

First check whether cfg is a dict. If it is, this model dict is assigned to self.yaml directly.

Otherwise yaml is imported, the file name of cfg is recorded, the file is opened with ASCII encoding while ignoring decoding errors, and the result of yaml.safe_load is assigned to self.yaml.

Either way, self.yaml ends up holding the configuration as a dict.

2、self.yaml

Let's first look at what the configuration file contains.

It starts with some parameter settings:

# Parameters
nc: 80  # number of classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

Then comes the backbone of the network:

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  [[-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, C3, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 6, C3, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, C3, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 3, C3, [1024]],
   [-1, 1, SPPF, [1024, 5]],  # 9
  ]

Finally there is the Neck + Head part of the network (the file simply labels all of it head, which amounts to the same thing):

# YOLOv5 v6.0 head
head:
  [[-1, 1, Conv, [512, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 6], 1, Concat, [1]],  # cat backbone P4
   [-1, 3, C3, [512, False]],  # 13

   [-1, 1, Conv, [256, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 4], 1, Concat, [1]],  # cat backbone P3
   [-1, 3, C3, [256, False]],  # 17 (P3/8-small)

   [-1, 1, Conv, [256, 3, 2]],
   [[-1, 14], 1, Concat, [1]],  # cat head P4
   [-1, 3, C3, [512, False]],  # 20 (P4/16-medium)

   [-1, 1, Conv, [512, 3, 2]],
   [[-1, 10], 1, Concat, [1]],  # cat head P5
   [-1, 3, C3, [1024, False]],  # 23 (P5/32-large)

   [[17, 20, 23], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]

3、 Define the network model

        # Define model
        ch = self.yaml['ch'] = self.yaml.get('ch', ch)  # input channels
        if nc and nc != self.yaml['nc']:
            LOGGER.info(f"Overriding model.yaml nc={self.yaml['nc']} with nc={nc}")
            self.yaml['nc'] = nc  # override yaml value

The input channel count ch is taken from the configuration if it defines 'ch', otherwise from the argument. Then, if an nc argument was passed and it differs from the nc in the configuration file, the argument overrides the yaml value.

        if anchors:
            LOGGER.info(f'Overriding model.yaml anchors with anchors={anchors}')
            self.yaml['anchors'] = round(anchors)  # override yaml value

If anchors is passed in, it is rounded and overrides the yaml value (rounding prevents a non-integer input from causing errors).

        self.model, self.save = parse_model(deepcopy(self.yaml), ch=[ch])  # model, savelist

parse_model turns the information in the configuration file into the model and also returns the save list.

At this point we take a detour into the parse_model function.

Please jump to it via the catalogue:

Four 、 Other required functions —— parse_model function

After that, come back here and we will continue the analysis.

        self.model, self.save = parse_model(deepcopy(self.yaml), ch=[ch])  # model, savelist
        self.names = [str(i) for i in range(self.yaml['nc'])]  # default names
        self.inplace = self.yaml.get('inplace', True)

self.names gives the classes default names: the numbers 0 to nc-1, as strings.

4、Build strides, anchors

        m = self.model[-1]  # Detect()
        if isinstance(m, Detect):
            s = 256  # 2x min stride
            m.inplace = self.inplace
            m.stride = torch.tensor([s / x.shape[-2] for x in self.forward(torch.zeros(1, ch, s, s))])  # forward
            m.anchors /= m.stride.view(-1, 1, 1)

This calls the forward() function, so we take another detour here: please look up forward() (section Three) in the catalogue, then come back and continue.

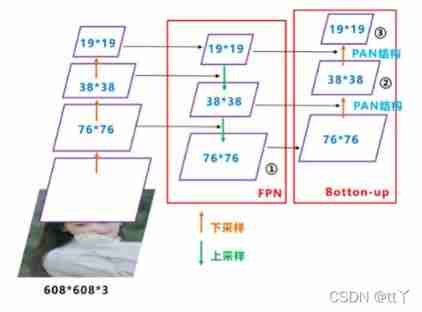

A zero tensor of shape [1, ch, 256, 256] is passed through the network, which gives the shapes of the FPN outputs. Dividing the input size s by the height of each output gives the downsampling factors, i.e. the strides: 8, 16, 32.

Finally the anchors are divided by these strides, which rescales them to the 3 different feature-map scales.

So the final shape of anchors is [3, 3, 2].
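The stride and anchor arithmetic above can be reproduced without PyTorch. This is only a minimal sketch: it assumes the dummy 256x256 input yields feature maps of height 32, 16 and 8 (the values implied by strides 8, 16, 32), and `feature_heights` and `scaled_anchors` are names made up for the illustration.

```python
# Anchors from the yaml shown earlier, as (width, height) pairs in pixels.
anchors = [
    [(10, 13), (16, 30), (33, 23)],       # P3/8
    [(30, 61), (62, 45), (59, 119)],      # P4/16
    [(116, 90), (156, 198), (373, 326)],  # P5/32
]

s = 256                        # side length of the dummy input
feature_heights = [32, 16, 8]  # assumed heights of the three Detect inputs

# stride = input size / feature-map size
strides = [s / h for h in feature_heights]

# Express every anchor in grid cells of its own scale.
scaled_anchors = [
    [(w / st, h / st) for (w, h) in level]
    for level, st in zip(anchors, strides)
]

print(strides)               # [8.0, 16.0, 32.0]
print(scaled_anchors[0][0])  # (1.25, 1.625)
```

The nested list has 3 levels, 3 anchors per level and 2 values per anchor, which matches the [3, 3, 2] shape mentioned above.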

            check_anchor_order(m)
            self.stride = m.stride
            self._initialize_biases()  # only run once

First, check_anchor_order checks the anchor order of the YOLOv5 Detect() module m against its strides and corrects it if necessary. Once that is done the strides are settled and stored in self.stride. Then the biases of the Detect module are initialized; no class frequency cf is passed here (it stays None), so the initialization does not use class-frequency statistics.

5、 Initialize weights and biases

        initialize_weights(self)
        self.info()
        LOGGER.info('')

Three 、def forward

1、 The main body

    def forward(self, x, augment=False, profile=False, visualize=False):
        if augment:
            return self._forward_augment(x)  # augmented inference, None
        return self._forward_once(x, profile, visualize)  # single-scale inference, train

If augment is True, _forward_augment is called and inference runs with augmentation.

If augment is False, _forward_once is called, with no augmentation.

augment defaults to False, so what actually gets called is _forward_once.

2、_forward_once function

    def _forward_once(self, x, profile=False, visualize=False):
        y, dt = [], []  # outputs
        for m in self.model:

First the lists are initialized: y stores the layer outputs (dt is only needed for profiling and can be ignored here). Then the loop walks through every module (layer).

A reminder of the attributes that parse_model attaches to each module m: m.f is the index (or list of indices) of the layer(s) its input comes from; m.i is the index of the layer itself; m.type is the module type; m.np is its number of parameters. The depth n and the arguments args come from the yaml and are consumed when the module is built.

            if m.f != -1:  # if not from previous layer
                x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f]  # from earlier layers

First a check: if the input of this module does not come straight from the previous layer (Concat, for example), the outputs of the layers it refers to are fetched, ready to be passed into the forward() of m.

(Both "the outputs of the corresponding layers" and "the forward() of m" are explained below.)

            if profile:
                self._profile_one_layer(m, x, dt)

profile defaults to False and is also passed in as False, so this branch is not taken. Let's skip it and move on.

            x = m(x)  # run

This is the "forward() of m" mentioned earlier. m is a module (one layer), so passing x into it is equivalent to running that module's forward() (Conv, C3, SPPF and so on).

The first layer (Conv in the v6.0 configuration shown above, Focus in earlier versions) has m.f equal to -1, so the check above is skipped and execution comes straight to this step.

            y.append(x if m.i in self.save else None)  # save output

This is the "output of the corresponding layer" mentioned earlier.

Layer outputs are stored in y for the benefit of modules such as Concat, which are not fed only by the previous layer and need the outputs of every layer they connect to.

For that reason not every layer's output has to be kept. Only the ones that are needed are saved; the others are stored as None.

This is where self.save comes in. As mentioned before, it is built in the parse_model function by this line:

        save.extend(x % i for x in ([f] if isinstance(f, int) else f) if x != -1)  # append to savelist

This is the on-demand part: every 'from' index other than -1 (x != -1) is added to the save list.
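To make the save list concrete, here is a small sketch that applies the same expression to the 'from' column of the yaml shown earlier. The list `from_fields` is typed in by hand for this illustration; it is not something the YOLOv5 code defines.

```python
# 'from' column of the yolov5s.yaml layers shown earlier (backbone, then head).
from_fields = (
    [-1] * 10                  # backbone layers 0-9
    + [-1, -1, [-1, 6], -1]    # head layers 10-13
    + [-1, -1, [-1, 4], -1]    # head layers 14-17
    + [-1, [-1, 14], -1]       # head layers 18-20
    + [-1, [-1, 10], -1]       # head layers 21-23
    + [[17, 20, 23]]           # layer 24: Detect
)

save = []
for i, f in enumerate(from_fields):
    # Same expression as in parse_model: -1 is skipped, and x % i would turn
    # any other negative (relative) index into an absolute layer index.
    save.extend(x % i for x in ([f] if isinstance(f, int) else f) if x != -1)

print(sorted(save))  # [4, 6, 10, 14, 17, 20, 23]
```

Only these seven layer outputs are kept in y during the forward pass; every other entry of y is None.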

OK, back to our _forward_once function.

            if visualize:
                feature_visualization(x, m.type, m.i, save_dir=visualize)

As with profile, visualize defaults to False and is not needed here, so we skip it.

        return x

The function returns x, and that is the end of it.
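The routing logic of _forward_once can be tried out on plain numbers. The sketch below is a toy stand-in, not YOLOv5 code: `ToyLayer` and `forward_once` are hypothetical names, and each "layer" is just a small function, but the loop body follows the same three steps (pick the input, run the module, save the output only if it is in the save list).

```python
class ToyLayer:
    """Hypothetical stand-in for a module with the i and f attributes."""

    def __init__(self, i, f, fn):
        self.i, self.f, self.fn = i, f, fn

    def __call__(self, x):
        return self.fn(x)


def forward_once(layers, save, x):
    y = []  # cached outputs, None where the output is not needed later
    for m in layers:
        if m.f != -1:  # input does not come (only) from the previous layer
            x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f]
        x = m(x)                              # run the layer
        y.append(x if m.i in save else None)  # keep it only if listed in save
    return x, y


layers = [
    ToyLayer(0, -1, lambda x: x + 1),        # plays the role of an ordinary layer
    ToyLayer(1, -1, lambda x: x * 2),        # another ordinary layer
    ToyLayer(2, [-1, 0], lambda xs: sum(xs)),  # plays the role of Concat
]
save = [0]  # layer 2 needs the output of layer 0

out, cache = forward_once(layers, save, 1)
print(out)    # 6
print(cache)  # [2, None, None]
```

Layer 2 receives the list [4, 2]: the output of the previous layer (index -1) and the cached output of layer 0.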

Four 、 Other required functions —— parse_model function

def parse_model(d, ch):  # model_dict, input_channels(3)
    LOGGER.info(f"\n{'':>3}{'from':>18}{'n':>3}{'params':>10} {'module':<40}{'arguments':<30}")  # logging only, ignore it
    anchors, nc, gd, gw = d['anchors'], d['nc'], d['depth_multiple'], d['width_multiple']
    na = (len(anchors[0]) // 2) if isinstance(anchors, list) else anchors  # number of anchors
    no = na * (nc + 5)  # number of outputs = anchors * (classes + 5)

The lines above read the parameters from the configuration dict. See my previous article for what each parameter means.
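As a quick check of these two formulas, here is a sketch that plugs in the values from the yaml shown earlier (nc = 80 and three anchor rows of six numbers each):

```python
anchors = [
    [10, 13, 16, 30, 33, 23],
    [30, 61, 62, 45, 59, 119],
    [116, 90, 156, 198, 373, 326],
]
nc = 80

# Each anchor is a (width, height) pair, so a row of 6 numbers holds 3 anchors.
na = (len(anchors[0]) // 2) if isinstance(anchors, list) else anchors

# Per anchor: nc class scores plus 5 values (4 box coordinates and 1 objectness).
no = na * (nc + 5)

print(na, no)  # 3 255
```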

    layers, save, c2 = [], [], ch[-1]  # layers, savelist, ch out

This is the initialization.

    for i, (f, n, m, args) in enumerate(d['backbone'] + d['head']):  # from, number, module, args
        m = eval(m) if isinstance(m, str) else m  # eval strings: turn the module name into the module class
        for j, a in enumerate(args):  # loop over the module arguments
            try:
                args[j] = eval(a) if isinstance(a, str) else a  # eval strings: turn each argument into its value
            except NameError:
                pass

The loop iterates over the configuration of the backbone and the head (neck + head). It converts the module type m, and each module argument args[j], from a string into the corresponding Python object.

f: which layer(s) the input comes from

n: default depth (number of repeats) of the module

m: type of the module

args: arguments of the module

        n = n_ = max(round(n * gd), 1) if n > 1 else n  # depth gain

The network uses n * gd to control the depth scaling of a module.

Depth here means the number of repeats of a module such as CSP/C3. Width, by contrast, usually refers to the number of feature-map channels.
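A short sketch of the depth gain with the values from the yaml above (depth_multiple 0.33 and the repeat counts 1, 3, 6, 9). The helper name `depth_gain` is made up for this illustration; the expression inside it is the one from parse_model.

```python
def depth_gain(n, gd):
    # Scale the repeat count, but never below 1; n == 1 is left untouched.
    return max(round(n * gd), 1) if n > 1 else n


gd = 0.33  # depth_multiple from the yaml
print([depth_gain(n, gd) for n in (1, 3, 6, 9)])  # [1, 1, 2, 3]
```

So under this configuration the C3 blocks written as 3, 6, 9, 3 in the backbone are built with 1, 2, 3, 1 repeats.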

        if m in [Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,
                 BottleneckCSP, C3, C3TR, C3SPP, C3Ghost]:
            c1, c2 = ch[f], args[0]

ch stores the output channel counts of all previous modules (so ch[-1] is the output channel count of the previous module).

ch[f] is the output channel count of layer f. args[0] is the default output channel count from the configuration.

            if c2 != no:  # if not output
                c2 = make_divisible(c2 * gw, 8)  # keep the output channel count a multiple of 8

The helper lives in general.py:

def make_divisible(x, divisor):
    # Returns nearest x divisible by divisor
    if isinstance(divisor, torch.Tensor):
        divisor = int(divisor.max())  # to int
    return math.ceil(x / divisor) * divisor

If this is not the final output, make_divisible from general.py is called to round c2 * gw up to the nearest multiple of 8, and the result is used as c2. This guarantees that the output channel count is a multiple of 8.
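The effect of the width multiple can be checked with a torch-free copy of the helper. This sketch drops the torch.Tensor branch, which is not needed when the divisor is the plain integer 8; the channel values are the ones that appear in the yaml above, plus 100 as an arbitrary example that is not already a multiple of 8 after scaling.

```python
import math


def make_divisible(x, divisor):
    # Round x up to the next multiple of divisor (tensor branch omitted).
    return math.ceil(x / divisor) * divisor


gw = 0.50  # width_multiple from the yaml
print([make_divisible(c * gw, 8) for c in (64, 128, 256, 512, 1024)])
# [32, 64, 128, 256, 512]
print(make_divisible(100 * gw, 8))  # 56
```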

            args = [c1, c2, *args[1:]]
            if m in [BottleneckCSP, C3, C3TR, C3Ghost]:
                args.insert(2, n)  # number of repeats
                n = 1  # reset n

This completes args: it becomes the module's input channel count (c1) and output channel count (c2), followed by the original args after args[0].

For BottleneckCSP, C3, C3TR and C3Ghost, the depth parameter n is inserted as well (it sets how many times the module's inner block is repeated), and n is then reset to 1.

        elif m is nn.BatchNorm2d:
            args = [ch[f]]

BN only takes the number of input channels; the channel count stays unchanged.

        elif m is Concat:
            c2 = sum(ch[x] for x in f)

Concat: f holds the indices of all the layers to be concatenated, so the output channel count c2 is the sum of the channel counts of those layers.

        elif m is Detect:
            args.append([ch[x] for x in f])  # input channel count of each prediction layer
            if isinstance(args[1], int):  # number of anchors
                args[1] = [list(range(args[1] * 2))] * len(f)

The Detect class:

args first gets the input channel count of every prediction layer appended. Then, if the anchors argument is an integer (a number of anchors), it is replaced by a placeholder list of anchor widths and heights, repeated len(f) times so that there is one list per prediction layer.
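Here is a sketch of what the Detect branch does to args when anchors is given as an integer. The starting value `args = [80, 3]` and the channel counts in `ch` are assumptions chosen for the illustration (the yaml above passes a full anchor list, in which case the isinstance check is simply False).

```python
ch = {17: 128, 20: 256, 23: 512}  # assumed output channels of layers 17, 20, 23
f = [17, 20, 23]                  # 'from' field of the Detect layer
args = [80, 3]                    # hypothetical: nc = 80, anchors given as the integer 3

args.append([ch[x] for x in f])   # input channels of each prediction layer
if isinstance(args[1], int):      # anchors given as a count
    # Placeholder values 0..5, i.e. 3 (width, height) pairs, once per layer.
    args[1] = [list(range(args[1] * 2))] * len(f)

print(args[1])  # [[0, 1, 2, 3, 4, 5], [0, 1, 2, 3, 4, 5], [0, 1, 2, 3, 4, 5]]
print(args[2])  # [128, 256, 512]
```

Note that the `*` operator repeats a reference to the same inner list, which is fine here because the list is only read afterwards.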

        elif m is Contract:
            c2 = ch[f] * args[0] ** 2
        elif m is Expand:
            c2 = ch[f] // args[0] ** 2
        else:
            c2 = ch[f]  # otherwise the output channel count (c2) equals the input channel count

The Contract and Expand classes do not appear in this network structure, so we skip them.

        m_ = nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args)  # module

The module m is built from the arguments in args, and the parameter n controls how many times it is repeated.

        t = str(m)[8:-2].replace('__main__.', '')  # module type
        np = sum(x.numel() for x in m_.parameters())  # number params
        m_.i, m_.f, m_.type, m_.np = i, f, t, np  # attach index, 'from' index, type, number params
        LOGGER.info(f'{i:>3}{str(f):>18}{n_:>3}{np:10.0f} {t:<40}{str(args):<30}')  # print

The lines above produce the log output (the index of each layer, its module type, its parameter count and so on).

        save.extend(x % i for x in ([f] if isinstance(f, int) else f) if x != -1)  # append to savelist
        layers.append(m_)
        if i == 0:
            ch = []
        ch.append(c2)

save records the layers whose outputs are needed later (a Concat layer concatenates several earlier layers, for example, so the results of those layers have to be kept). It is used afterwards in the _forward_once function.

The built module is stored in layers, and the output channel count of this layer is written into ch.

    return nn.Sequential(*layers), sorted(save)

Once the loop is finished, the model is assembled.
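The channel bookkeeping of parse_model can be followed end to end without building any real modules. The sketch below is a simplification under stated assumptions: modules are represented by their names as strings, `cfg` is a hand-written reduction of the yaml above to (from, module, requested output channels), and the `c2 != no` check is left out because none of these layers requests 255 channels.

```python
import math


def make_divisible(x, divisor):
    return math.ceil(x / divisor) * divisor


gw = 0.50  # width_multiple from the yaml

# (from, module name, requested output channels or None), layers 0-23
cfg = [
    (-1, 'Conv', 64), (-1, 'Conv', 128), (-1, 'C3', 128), (-1, 'Conv', 256),
    (-1, 'C3', 256), (-1, 'Conv', 512), (-1, 'C3', 512), (-1, 'Conv', 1024),
    (-1, 'C3', 1024), (-1, 'SPPF', 1024),
    (-1, 'Conv', 512), (-1, 'Upsample', None), ([-1, 6], 'Concat', None), (-1, 'C3', 512),
    (-1, 'Conv', 256), (-1, 'Upsample', None), ([-1, 4], 'Concat', None), (-1, 'C3', 256),
    (-1, 'Conv', 256), ([-1, 14], 'Concat', None), (-1, 'C3', 512),
    (-1, 'Conv', 512), ([-1, 10], 'Concat', None), (-1, 'C3', 1024),
]

ch = [3]  # starts with the input channels, as in parse_model(d, ch=[ch])
for i, (f, m, c_out) in enumerate(cfg):
    if m in ('Conv', 'C3', 'SPPF'):
        c2 = make_divisible(c_out * gw, 8)  # width-scaled output channels
    elif m == 'Concat':
        c2 = sum(ch[x] for x in f)          # channels add up
    else:
        c2 = ch[f]                          # e.g. Upsample keeps the channels
    if i == 0:
        ch = []                             # drop the input entry after layer 0
    ch.append(c2)

detect_in = [ch[x] for x in (17, 20, 23)]
print(detect_in)  # [128, 256, 512]
```

After layer 0, ch[k] is the output channel count of layer k, which is why the Detect layer can look up its inputs with ch[17], ch[20] and ch[23].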

Five 、 Code analysis comments, the full set

1、Model part

class Model(nn.Module):
    def __init__(self, cfg='yolov5s.yaml', ch=3, nc=None, anchors=None):  # model, input channels, number of classes
        super().__init__()
        if isinstance(cfg, dict):  # check whether cfg is a dict
            self.yaml = cfg  # model dict: assigned to self.yaml directly
        else:  # is *.yaml
            import yaml  # for torch hub
            self.yaml_file = Path(cfg).name  # file name of cfg
            with open(cfg, encoding='ascii', errors='ignore') as f:  # open cfg as ASCII, ignoring decoding errors
                self.yaml = yaml.safe_load(f)  # model dict: parse the yaml file and assign it to self.yaml

        # Define model
        ch = self.yaml['ch'] = self.yaml.get('ch', ch)  # input channels
        if nc and nc != self.yaml['nc']:
            LOGGER.info(f"Overriding model.yaml nc={self.yaml['nc']} with nc={nc}")
            self.yaml['nc'] = nc  # override yaml value
        # If an nc argument is passed and differs from the one in the configuration file, the argument wins.
        if anchors:
            LOGGER.info(f'Overriding model.yaml anchors with anchors={anchors}')
            self.yaml['anchors'] = round(anchors)  # override yaml value
        # Round anchors (prevents a non-integer input from causing errors)
        self.model, self.save = parse_model(deepcopy(self.yaml), ch=[ch])  # model, savelist
        self.names = [str(i) for i in range(self.yaml['nc'])]  # default names: the classes numbered 0 to nc-1
        self.inplace = self.yaml.get('inplace', True)

        # Build strides, anchors
        m = self.model[-1]  # Detect()
        if isinstance(m, Detect):
            s = 256  # 2x min stride
            m.inplace = self.inplace
            m.stride = torch.tensor([s / x.shape[-2] for x in self.forward(torch.zeros(1, ch, s, s))])  # forward
            m.anchors /= m.stride.view(-1, 1, 1)
            check_anchor_order(m)  # check the anchor order of Detect() module m against its strides, correct if necessary
            self.stride = m.stride
            self._initialize_biases()  # only run once
            # Initialize the biases of the Detect module (cf stays None here, so class frequencies are not used)

        # Init weights, biases
        initialize_weights(self)
        self.info()
        LOGGER.info('')

    def forward(self, x, augment=False, profile=False, visualize=False):
        if augment:
            return self._forward_augment(x)  # augmented inference, None
        return self._forward_once(x, profile, visualize)  # single-scale inference, train (the default: no augmentation)

2、_forward_once function

    def _forward_once(self, x, profile=False, visualize=False):
        y, dt = [], []  # outputs
        for m in self.model:
            # Attributes attached in parse_model: m.f is the 'from' index, m.i the layer index,
            # m.type the module type, m.np the parameter count
            if m.f != -1:  # if not from previous layer (Concat, for example)
                x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f]  # from earlier layers
                # Fetch the outputs of the referenced layers, ready to be passed into the forward() of m
            if profile:
                self._profile_one_layer(m, x, dt)
            x = m(x)  # run
            # m is a module (one layer), so passing x into it runs that module's forward() (Conv, C3, SPPF and so on)
            # The first layer has m.f equal to -1, so it comes straight to this step
            y.append(x if m.i in self.save else None)  # save output
            # Keep the output in y only if a later layer needs it
            if visualize:
                feature_visualization(x, m.type, m.i, save_dir=visualize)
        return x

3、parse_model function

def parse_model(d, ch):  # model_dict, input_channels(3)
    LOGGER.info(f"\n{'':>3}{'from':>18}{'n':>3}{'params':>10} {'module':<40}{'arguments':<30}")  # logging only, ignore it
    anchors, nc, gd, gw = d['anchors'], d['nc'], d['depth_multiple'], d['width_multiple']
    na = (len(anchors[0]) // 2) if isinstance(anchors, list) else anchors  # number of anchors
    no = na * (nc + 5)  # number of outputs = anchors * (classes + 5)
    # The lines above read the parameters from the configuration dict

    layers, save, c2 = [], [], ch[-1]  # layers, savelist, ch out
    for i, (f, n, m, args) in enumerate(d['backbone'] + d['head']):  # from, number, module, args
        m = eval(m) if isinstance(m, str) else m  # eval strings: turn the module name into the module class
        for j, a in enumerate(args):  # loop over the module arguments
            try:
                args[j] = eval(a) if isinstance(a, str) else a  # eval strings: turn each argument into its value
            except NameError:
                pass
        # The loop iterates over the backbone and head configuration
        # f: which layer(s) the input comes from; n: default depth of the module; m: module type; args: module arguments

        n = n_ = max(round(n * gd), 1) if n > 1 else n  # depth gain
        # n * gd controls the depth scaling of the module.
        # Depth here means the number of repeats of a module such as CSP/C3; width usually refers to the channel count.
        if m in [Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,
                 BottleneckCSP, C3, C3TR, C3SPP, C3Ghost]:
            c1, c2 = ch[f], args[0]
            # ch stores the output channel counts of all previous modules (so ch[-1] is that of the previous module).
            # ch[f] is the output channel count of layer f; args[0] is the default output channel count.
            if c2 != no:  # if not output
                c2 = make_divisible(c2 * gw, 8)  # keep the output channel count a multiple of 8

            args = [c1, c2, *args[1:]]  # args becomes input channels (c1), output channels (c2), then the rest of the original args
            if m in [BottleneckCSP, C3, C3TR, C3Ghost]:  # only the CSP-style modules use the depth n as a repeat count
                args.insert(2, n)  # number of repeats
                n = 1  # reset n
        elif m is nn.BatchNorm2d:
            args = [ch[f]]  # BN only takes the input channel count; the channel count stays unchanged
        elif m is Concat:
            c2 = sum(ch[x] for x in f)  # f holds the layers to concatenate, so c2 is the sum of their channel counts
        elif m is Detect:
            args.append([ch[x] for x in f])  # input channel count of each prediction layer
            if isinstance(args[1], int):  # number of anchors
                args[1] = [list(range(args[1] * 2))] * len(f)
                # list(range(args[1] * 2)) is a placeholder list of anchor widths and heights;
                # multiplying by len(f) gives one such list per prediction layer
        elif m is Contract:
            c2 = ch[f] * args[0] ** 2
        elif m is Expand:
            c2 = ch[f] // args[0] ** 2
        else:
            c2 = ch[f]  # otherwise the output channel count (c2) equals the input channel count

        m_ = nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args)  # module
        # Build module m from args; n controls how many times it is repeated.
        # Everything is scaled in width, and the C3 modules are additionally scaled in depth.
        t = str(m)[8:-2].replace('__main__.', '')  # module type
        np = sum(x.numel() for x in m_.parameters())  # number params
        m_.i, m_.f, m_.type, m_.np = i, f, t, np  # attach index, 'from' index, type, number params
        LOGGER.info(f'{i:>3}{str(f):>18}{n_:>3}{np:10.0f} {t:<40}{str(args):<30}')  # print
        # The lines above produce the log output (layer index, module type, parameter count and so on)
        save.extend(x % i for x in ([f] if isinstance(f, int) else f) if x != -1)  # append to savelist
        # Record the layers whose outputs are needed later (Concat, for example, needs the results of the layers it joins)
        layers.append(m_)  # store the built module in layers
        if i == 0:
            ch = []
        ch.append(c2)  # write the output channel count of this layer into ch
    # Once the loop is finished, assemble the model
    return nn.Sequential(*layers), sorted(save)

Criticism and corrections are welcome in the comment section.

Writing original posts takes effort, so if you liked this one, please give it a like.

边栏推荐

- The current market situation and development prospect of the global gluten tolerance test kit industry in 2022

- The process of browser accessing the website

- 1087 all roads lead to Rome (30 points)

- Current market situation and development prospect forecast of the global fire boots industry in 2022

- Analysis of proxy usage of ES6 new feature

- Blog building tool recommendation (text book delivery)

- Distinguish between releases and snapshots in nexus private library

- Promise

- Sprintf formatter abnormal exit problem

- Market status and development prospects of the global IOT active infrared sensor industry in 2022

猜你喜欢

微服务常见面试题

appium1.22.x 版本後的 appium inspector 需單獨安裝

Pan details of deep learning

![[research materials] 2021 annual report on mergers and acquisitions in the property management industry - Download attached](/img/95/833f5ec20207ee5d7e6cdfa7208c5e.jpg)

[research materials] 2021 annual report on mergers and acquisitions in the property management industry - Download attached

leetcode452. Detonate the balloon with the minimum number of arrows

The programmer resigned and was sentenced to 10 months for deleting the code. JD came home and said that it took 30000 to restore the database. Netizen: This is really a revenge

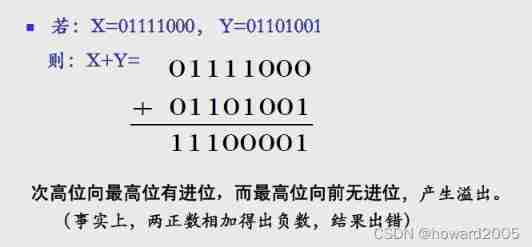

Three representations of signed numbers: original code, inverse code and complement code

Analysis of proxy usage of ES6 new feature

Use posture of sudo right raising vulnerability in actual combat (cve-2021-3156)

Handler understands the record

随机推荐

Market status and development prospect prediction of global colorimetric cup cover industry in 2022

1095 cars on campus (30 points)

Literature reading_ Research on the usefulness identification of tourism online comments based on semantic fusion of multimodal data (Chinese Literature)

"Pthread.h" not found problem encountered in compiling GCC

Unity tool Luban learning notes 1

Caijing 365 stock internal reference: what's the mystery behind the good father-in-law paying back 50 million?

appium1.22. Appium inspector after X version needs to be installed separately

Huawei personally ended up developing 5g RF chips, breaking the monopoly of Japan and the United States

编译GCC遇到的“pthread.h” not found问题

[research materials] 2021 China's game industry brand report - Download attached

Thesis reading_ Chinese NLP_ ELECTRA

ZABBIX monitoring of lamp architecture (2): ZABBIX basic operation

5-36v input automatic voltage rise and fall PD fast charging scheme drawing 30W low-cost chip

Ueditor, FCKeditor, kindeditor editor vulnerability

Analysis of proxy usage of ES6 new feature

1111 online map (30 points)

Messy change of mouse style in win system

Based on RFC 3986 (unified resource descriptor (URI): general syntax)

[set theory] relation properties (transitivity | transitivity examples | transitivity related theorems)

Gbase8s unique index and non unique index