
19. Up and down sampling and batchnorm

2022-07-27 05:59:00 【Pie star's favorite spongebob】


A typical unit in a convolutional neural network:

Conv2d -> BatchNorm -> Pooling -> ReLU

The order of the last three is mostly a matter of convention and experience; swapping them usually has little effect.
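A minimal sketch of one such unit with `nn.Sequential`, assuming 3 input channels, 16 output channels and a 3×3 kernel (these sizes are illustrative, not from the text). It also checks one concrete case of the ordering claim: max pooling and ReLU commute exactly, because ReLU is monotonic, so swapping those two layers gives identical output. Moving BatchNorm relative to the others does not have this exact property.

```python
import torch
import torch.nn as nn

# One unit in the order Conv2d -> BatchNorm2d -> MaxPool2d -> ReLU.
# Channel counts and kernel size are assumptions for illustration.
unit = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1),  # padding=1 keeps 28x28
    nn.BatchNorm2d(16),                          # normalizes each of the 16 channels
    nn.MaxPool2d(2, stride=2),                   # halves height and width
    nn.ReLU(),
)

x = torch.rand(4, 3, 28, 28)
out = unit(x)
print(out.shape)  # torch.Size([4, 16, 14, 14])

# ReLU is monotonic, so max(relu(a)) == relu(max(a)) for every window.
y = torch.randn(4, 16, 28, 28)
pool, relu = nn.MaxPool2d(2, stride=2), nn.ReLU()
print(torch.equal(relu(pool(y)), pool(relu(y))))  # True
```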

Pooling layers and sampling

Downsampling shrinks a feature map; upsampling enlarges it and works much like image magnification.

Downsampling

Pooling and subsampling give similar results, but the operations are different.
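The text does not define subsampling precisely; the sketch below assumes its simplest form, keeping every second row and column by strided slicing, and compares it with 2×2 max pooling. Both produce the same output shape, but subsampling picks fixed positions while pooling looks at every value in each window.

```python
import torch
import torch.nn.functional as F

x = torch.arange(16.).reshape(1, 1, 4, 4)
# [[ 0,  1,  2,  3],
#  [ 4,  5,  6,  7],
#  [ 8,  9, 10, 11],
#  [12, 13, 14, 15]]

sub = x[:, :, ::2, ::2]                            # assumed subsampling: every 2nd row/column
pooled = F.max_pool2d(x, kernel_size=2, stride=2)  # max of each 2x2 window

print(sub.squeeze())     # values 0, 2, 8, 10
print(pooled.squeeze())  # values 5, 7, 13, 15
```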

Max pooling

Takes the maximum value within each kernel window.

Avg pooling

Takes the average of the values within each kernel window.
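A small worked example on a hand-picked 4×4 input (the values are arbitrary) showing how the two pooling modes differ on the same windows:

```python
import torch
import torch.nn as nn

x = torch.tensor([[[[1., 2., 0., 0.],
                    [3., 4., 0., 8.],
                    [5., 5., 1., 1.],
                    [5., 5., 1., 1.]]]])  # shape [1, 1, 4, 4]

max_out = nn.MaxPool2d(2, stride=2)(x)  # largest value in each 2x2 window
avg_out = nn.AvgPool2d(2, stride=2)(x)  # mean of each 2x2 window

print(max_out.squeeze())  # values 4, 8, 5, 1
print(avg_out.squeeze())  # values 2.5, 2.0, 5.0, 1.0
```

Max pooling keeps the single strongest response (the lone 8 survives), while average pooling dilutes it (that window averages to 2.0).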

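```python
import torch
import torch.nn as nn

x = torch.rand(1, 16, 14, 14)
layer = nn.MaxPool2d(2, stride=2)  # 2x2 window, stride 2
out = layer(x)
print('out shape:', out.shape)  # out shape: torch.Size([1, 16, 7, 7])
```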

The first argument 2 sets a 2×2 window and stride=2 moves it 2 steps at a time, so the height and width are halved (14×14 becomes 7×7).
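The halving follows from the usual pooling output-size formula, floor((size + 2·padding − kernel) / stride) + 1, which holds for `nn.MaxPool2d` with the default `dilation=1` and `ceil_mode=False`. The helper `pool_out_size` below is a hypothetical name used only for this check; it shows that odd sizes are rounded down.

```python
import torch
import torch.nn as nn

def pool_out_size(size, kernel, stride, padding=0):
    # floor((size + 2*padding - kernel) / stride) + 1
    # (assumes dilation=1 and ceil_mode=False, the MaxPool2d defaults)
    return (size + 2 * padding - kernel) // stride + 1

layer = nn.MaxPool2d(2, stride=2)
for size in (14, 15, 7):
    out = layer(torch.rand(1, 16, size, size))
    assert out.shape[-1] == pool_out_size(size, kernel=2, stride=2)
    print(size, '->', out.shape[-1])  # 14 -> 7, 15 -> 7, 7 -> 3
```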

Upsampling

Upsampling operates directly on the tensor. In nearest-neighbor mode, each output position simply copies the value of the nearest input position, which enlarges the feature map.

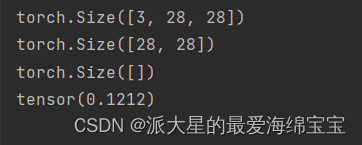

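```python
import torch
import torch.nn.functional as F

x = torch.rand(1, 16, 7, 7)
out1 = F.interpolate(x, scale_factor=2, mode='nearest')
print('out1 shape:', out1.shape)  # torch.Size([1, 16, 14, 14])
out2 = F.interpolate(x, scale_factor=3, mode='nearest')
print('out2 shape:', out2.shape)  # torch.Size([1, 16, 21, 21])
```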

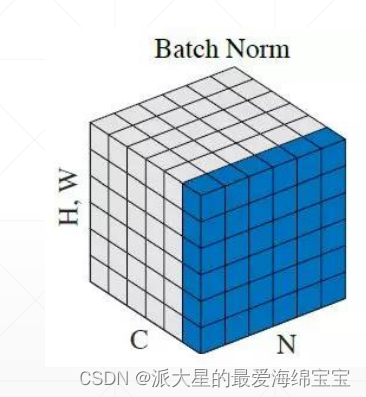

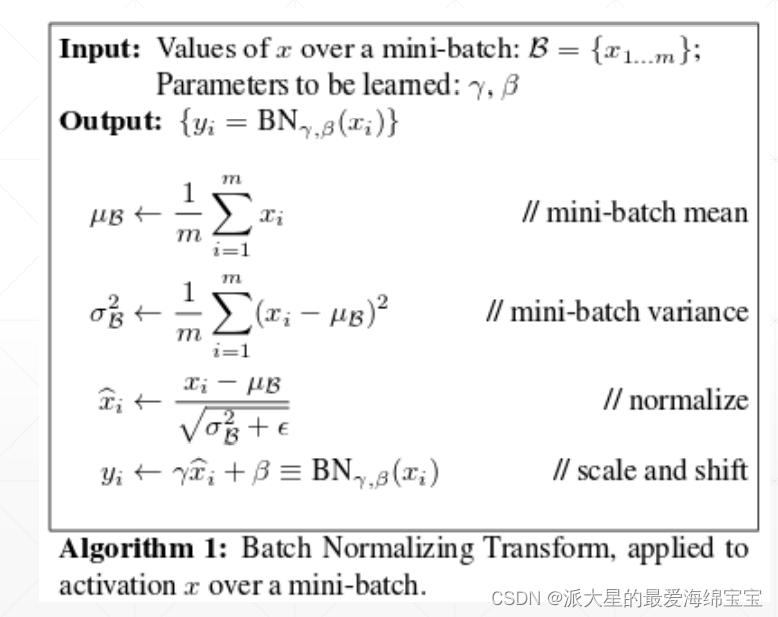
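To see the copying concretely, the sketch below upsamples a 2×2 tensor by a factor of 2 and checks that, for an integer scale factor, nearest mode is the same as repeating each row and column. `nn.Upsample` is the module form of the same operation.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x = torch.tensor([[[[1., 2.],
                    [3., 4.]]]])  # shape [1, 1, 2, 2]

out = F.interpolate(x, scale_factor=2, mode='nearest')
# Each input value fills a 2x2 block:
# [[1, 1, 2, 2],
#  [1, 1, 2, 2],
#  [3, 3, 4, 4],
#  [3, 3, 4, 4]]
repeated = x.repeat_interleave(2, dim=2).repeat_interleave(2, dim=3)
print(torch.equal(out, repeated))  # True

up = nn.Upsample(scale_factor=2, mode='nearest')
print(torch.equal(up(x), out))  # True
```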

BatchNorm

We usually use ReLU instead of sigmoid because, outside a certain range, the sigmoid saturates and its gradient is close to 0. When sigmoid must be used, the input x should be scaled into a suitable range, ideally close to 0.
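A quick check of the saturation claim using autograd: the derivative of sigmoid is σ(x)(1 − σ(x)), largest at x = 0 and shrinking rapidly as x grows. The input values are arbitrary sample points.

```python
import torch

x = torch.tensor([0.0, 2.0, 5.0, 10.0], requires_grad=True)
torch.sigmoid(x).sum().backward()
# d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x)): exactly 0.25 at x = 0,
# roughly 0.105, 0.0066 and 0.000045 at x = 2, 5 and 10.
print(x.grad)
```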

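With multiple inputs, if x1 and x2 have very different ranges, changes to the weights w1 and w2 affect the loss very differently: the gradient with respect to each weight is proportional to its input, so one weight dominates the updates and optimization becomes harder.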
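A minimal illustration, assuming a single linear output y = w1·x1 + w2·x2 and a squared-error loss against a target of 0 (both are choices made for this sketch). Since ∂loss/∂w_i = 2·y·x_i, an input 100 times larger produces a gradient 100 times larger.

```python
import torch

# Two inputs on very different scales (illustrative values).
x = torch.tensor([1.0, 100.0])
w = torch.ones(2, requires_grad=True)

y = (w * x).sum()        # y = w1*x1 + w2*x2 = 101
loss = (y - 0.0) ** 2    # squared error against an assumed target of 0
loss.backward()

# dloss/dw_i = 2 * y * x_i: w1 gets 202, w2 gets 20200 (a 100x difference)
print(w.grad)
```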

Feature scaling

Image normalization

Each of the three RGB channels of an image has its own mean and standard deviation.

```python
from torchvision import transforms
normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
```

Each channel is normalized separately: $(X_R - 0.485)/0.229$, $(X_G - 0.456)/0.224$, $(X_B - 0.406)/0.225$. The resulting distribution is closer to the $N(0, 1)$ we want, which makes the input to the next Conv2d layer better behaved.
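The per-channel formula can be written by hand with broadcasting. This sketch uses only `torch` and mirrors the formula that `transforms.Normalize` documents for tensor images, output = (input − mean) / std per channel; the helper name `normalize` is hypothetical. A pixel equal to its channel mean maps to 0, and one standard deviation above it maps to 1.

```python
import torch

# Channel statistics from the transforms.Normalize call above
mean = torch.tensor([0.485, 0.456, 0.406])
std = torch.tensor([0.229, 0.224, 0.225])

def normalize(img):
    # img has shape [3, H, W]; computes (x - mean_c) / std_c for each channel c
    return (img - mean[:, None, None]) / std[:, None, None]

at_mean = mean[:, None, None].expand(3, 2, 2)            # every pixel equals its channel mean
one_std_up = (mean + std)[:, None, None].expand(3, 2, 2)  # one std above the mean
print(normalize(at_mean))     # all zeros
print(normalize(one_std_up))  # all (approximately) ones
```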

Batch Normalization

Suppose the input has shape [16, 3, 784]: a batch of 16 images, 3 channels, and 784 values per channel (for example a flattened 28×28 map).

There are 3 channels. For channel 0, we take every feature of that channel across all 16 images and compute their mean and variance. Doing the same for each channel yields a mean and a variance of shape [3], one value per channel.
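The per-channel statistics can be computed by reducing over every dimension except the channel one. The sketch compares a hand-written normalization with `nn.BatchNorm1d` in training mode, using `affine=False` so that γ and β are left out. PyTorch documents that the biased variance (`unbiased=False`) is used for normalization during training.

```python
import torch
import torch.nn as nn

x = torch.randn(16, 3, 784)  # [batch, channel, features]

# Reduce over batch (dim 0) and features (dim 2) -> one value per channel
mean = x.mean(dim=(0, 2))
var = x.var(dim=(0, 2), unbiased=False)  # biased variance, as BatchNorm uses in training
print(mean.shape, var.shape)  # torch.Size([3]) torch.Size([3])

eps = 1e-5  # BatchNorm1d's default eps
manual = (x - mean[None, :, None]) / torch.sqrt(var[None, :, None] + eps)

bn = nn.BatchNorm1d(3, affine=False)  # pure normalization, no gamma/beta
bn.train()
print(torch.allclose(bn(x), manual, atol=1e-5))  # True
```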

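μ and σ are statistics of the current batch; the global (running) mean and variance are stored in running_mean and running_var.

β and γ are learned parameters: they start from initial values (γ = 1 and β = 0 in PyTorch) and are then updated automatically using gradient information.

So before normalization a channel has roughly mean μ and standard deviation σ, and after normalization, scaling by γ and shifting by β, it has roughly mean β and standard deviation γ.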
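For reference, the standard per-channel batch-normalization computation, over the m values of one channel in a batch (m = N·L for a BatchNorm1d input of shape [N, C, L], m = N·H·W for BatchNorm2d), is:

```latex
\begin{aligned}
\mu_B &= \frac{1}{m}\sum_{i=1}^{m} x_i, &
\sigma_B^2 &= \frac{1}{m}\sum_{i=1}^{m} \left(x_i - \mu_B\right)^2, \\
\hat{x}_i &= \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, &
y_i &= \gamma\,\hat{x}_i + \beta .
\end{aligned}
```

Here $\hat{x}_i$ has mean 0 and variance close to 1, so $y_i$ has mean $\beta$ and standard deviation close to $\gamma$.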

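```python
import torch
import torch.nn as nn

x = torch.rand(100, 16, 784)
layer = nn.BatchNorm1d(16)  # one mean/variance per channel (16 channels)
out = layer(x)
print('running_mean:', layer.running_mean)
print('running_var:', layer.running_var)
```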
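The running statistics are an exponential moving average, running = (1 − momentum)·running + momentum·batch_stat, with `momentum=0.1` by default, `running_mean` starting at 0 and `running_var` at 1; PyTorch stores the unbiased variance in the running estimate. The sketch checks this after one forward pass. It also explains the printed values: uniform inputs have mean 0.5 and variance 1/12, so after one step running_mean is about 0.05 and running_var about 0.908.

```python
import torch
import torch.nn as nn

x = torch.rand(100, 16, 784)
layer = nn.BatchNorm1d(16)              # momentum=0.1 by default
old_mean = layer.running_mean.clone()   # starts at zeros
old_var = layer.running_var.clone()     # starts at ones

layer(x)  # one training-mode forward pass updates the running statistics

batch_mean = x.mean(dim=(0, 2))
batch_var = x.var(dim=(0, 2), unbiased=True)  # running_var uses the unbiased estimate
m = layer.momentum
expected_mean = (1 - m) * old_mean + m * batch_mean
expected_var = (1 - m) * old_var + m * batch_var

print(torch.allclose(layer.running_mean, expected_mean, atol=1e-6))  # True
print(torch.allclose(layer.running_var, expected_var, atol=1e-6))    # True
```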

Standard usage

nn.BatchNorm2d

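```python
import torch
import torch.nn as nn

x = torch.rand(1, 16, 7, 7)
layer = nn.BatchNorm2d(16)
out = layer(x)
print('out shape:', out.shape)  # torch.Size([1, 16, 7, 7])
print(vars(layer))  # attributes such as 'affine': True and the running_mean/running_var buffers
```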

running_mean and running_var hold the global (running) mean and variance; the layer's parameters do not tell us the mean and variance of any individual batch.

layer.weight and layer.bias are the parameters γ and β.

'affine': True means β and γ are learnable and are updated automatically during training.
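A short check of the points above: `weight` (γ) and `bias` (β) each hold one value per channel, start at 1 and 0, and require gradients. With `affine=False` the layer has no learnable parameters at all; the running statistics are buffers, not parameters.

```python
import torch
import torch.nn as nn

layer = nn.BatchNorm2d(16)
print(layer.weight.shape, layer.bias.shape)  # torch.Size([16]) torch.Size([16])
print(layer.weight.requires_grad)            # True: gamma is learned
print(layer.weight.data.unique(), layer.bias.data.unique())  # gamma starts at 1, beta at 0

frozen = nn.BatchNorm2d(16, affine=False)
print(frozen.weight, frozen.bias)    # None None: no gamma/beta
print(len(list(frozen.parameters())))  # 0
```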

Differences between training and testing

At test time, μ and σ are the global statistics, copied from running_mean and running_var. There is no backward pass during testing, so γ and β are not updated.

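```python
layer.eval()  # for the BatchNorm1d(16) layer above, the REPL echoes:
# BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
out = layer(x)  # now normalizes with running_mean / running_var
```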

We need to call eval() to switch the layer to test (evaluation) mode.
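A sketch verifying what eval mode does: after one training-mode pass, the layer normalizes new inputs with the stored running statistics rather than the statistics of the current batch, then applies γ and β, and the running statistics stay unchanged.

```python
import torch
import torch.nn as nn

layer = nn.BatchNorm1d(16)
layer(torch.rand(100, 16, 784))  # training-mode pass: updates running stats

layer.eval()                     # switch to test mode
x = torch.rand(4, 16, 784)
before = layer.running_mean.clone()
out = layer(x)

# Eval mode: (x - running_mean) / sqrt(running_var + eps) * gamma + beta
rm = layer.running_mean[None, :, None]
rv = layer.running_var[None, :, None]
gamma = layer.weight[None, :, None]
beta = layer.bias[None, :, None]
expected = (x - rm) / torch.sqrt(rv + layer.eps) * gamma + beta

print(torch.allclose(out, expected, atol=1e-6))  # True
print(torch.equal(layer.running_mean, before))   # True: no update in eval mode
```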

Advantages

Faster convergence

Better performance

More stable training
