
Machine Learning [Series] Chapter 6: Random Forest Model

2022-06-11 06:02:00 【Forward ing】

- Preface

- I. Principles and Code Implementation of the Random Forest Model

- II. Case Study: Stock Rise and Fall Prediction Model

- Summary

Preface

This chapter introduces ensemble learning models. The idea behind ensemble learning is to combine multiple models to produce a more powerful one. The chapter explains a typical ensemble learning model, the random forest, and consolidates the material with an application from finance: a model that predicts whether a stock will rise or fall.


I. Principles and Code Implementation of the Random Forest Model

1. Introduction to ensemble models

An ensemble learning model trains a series of weak learners (also called base models) and combines their results to achieve better performance than any single learner. The two common families of ensemble algorithms are Bagging and Boosting. The typical Bagging model is the random forest discussed in this chapter; typical Boosting models, to be covered later, include AdaBoost, GBDT, XGBoost and LightGBM.

- (1) Bagging algorithm

Bagging works much like voting: each weak learner has one vote, and the final prediction follows the majority. Specifically, for a classification problem the n weak learners vote on the final prediction; for a regression problem the average of the n weak learners' outputs is taken as the final result.
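The two aggregation rules described above can be sketched with NumPy. The prediction arrays below are made-up illustrative values, not the output of any trained model, and `majority_vote` is a hypothetical helper written for this example.

```python
import numpy as np

# Hypothetical class predictions from n = 5 weak learners for 3 samples.
# Rows are learners, columns are samples.
class_votes = np.array([
    [1, 0, 1],
    [1, 0, 0],
    [0, 0, 1],
    [1, 1, 1],
    [1, 0, 0],
])

def majority_vote(votes):
    """Return the most frequent label in each column (one column per sample)."""
    result = []
    for column in votes.T:
        labels, counts = np.unique(column, return_counts=True)
        result.append(labels[np.argmax(counts)])
    return np.array(result)

# Hypothetical numeric predictions from 5 weak regressors for 2 samples.
reg_preds = np.array([
    [2.0, 3.0],
    [2.5, 3.5],
    [3.0, 2.5],
    [2.5, 3.0],
    [2.5, 3.0],
])

final_class = majority_vote(class_votes)  # classification: majority vote
final_value = reg_preds.mean(axis=0)      # regression: average
print(final_class, final_value)
```

Every learner counts equally here, which is the point of contrast with Boosting.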

- (2) Boosting algorithm

The essence of Boosting is to promote weak learners into a strong learner. It differs from Bagging in that Bagging treats all weak learners equally, whereas Boosting treats them differently: broadly speaking, it "cultivates elites" and "focuses on mistakes".

"Cultivating elites" means that after each round of training, weak learners with more accurate predictions receive larger weights, and poorly performing ones have their weights reduced. In the final prediction, a strong model's large weight is equivalent to casting several votes, while a mediocre model casts only one vote or none.

"Focusing on mistakes" means changing the weights (or probability distribution) of the training set after each round: the weights of samples that the previous round's weak learner predicted wrongly are increased, and the weights of samples it predicted correctly are reduced. Later weak learners therefore pay more attention to the mispredicted data, which improves the overall prediction.
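As one concrete illustration of these two ideas, the sketch below performs a single round of reweighting in the style of AdaBoost. The text above does not give a formula, so the specific update rule is an assumption borrowed from AdaBoost, and the labels and predictions are illustrative values.

```python
import numpy as np

# Labels in {-1, +1} and one weak learner's predictions (illustrative values).
y_true = np.array([1, 1, -1, -1, 1])
y_pred = np.array([1, -1, -1, -1, 1])  # the second sample is misclassified

# Start from uniform sample weights.
w = np.full(len(y_true), 1 / len(y_true))

# Weighted error rate of this weak learner.
miss = y_true != y_pred
err = np.sum(w[miss])

# "Cultivate elites": a more accurate learner gets a larger weight in the final vote.
alpha = 0.5 * np.log((1 - err) / err)

# "Focus on mistakes": raise the weights of misclassified samples,
# lower the others, then renormalise so the weights sum to 1.
w_new = w * np.exp(-alpha * y_true * y_pred)
w_new /= w_new.sum()

print(alpha, w_new)
```

After this round the misclassified sample carries half of the total weight, so the next weak learner is pushed to get it right.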

2. The basic principle of the random forest model

Random forest is a classic Bagging model whose weak learners are decision trees. It randomly samples the original data set to form n different sample data sets, builds n different decision trees on those data sets, and then obtains the final result by averaging the trees' outputs (for regression) or by voting (for classification). To preserve the model's generalization ability, each tree is usually built following two basic principles: random data and random features.

- Random data: the training data for each decision tree is drawn at random from all the data. For example, given 1000 original samples, draw 1000 times with replacement to form a new data set, which is used to train one decision tree.

- Random features: if each sample has M features, specify a constant k < M and randomly select k of the M features. When building a random forest classifier in Python with scikit-learn, the default number of selected features k is √M.
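The two sampling principles above can be sketched with NumPy alone. The sizes (1000 samples, 16 features) and variable names are illustrative assumptions; this is not how any particular library implements it internally.

```python
import numpy as np

rng = np.random.default_rng(0)

n_samples, n_features = 1000, 16  # illustrative sizes

# Random data: draw n_samples row indices WITH replacement (a bootstrap sample).
row_idx = rng.integers(0, n_samples, size=n_samples)
n_unique = len(np.unique(row_idx))

# Random features: pick k = sqrt(M) distinct feature indices WITHOUT replacement.
k = int(np.sqrt(n_features))
feature_idx = rng.choice(n_features, size=k, replace=False)

print(n_unique, feature_idx)
```

Because rows are drawn with replacement, some rows appear several times and others not at all; on average roughly 63% of the original rows end up in each bootstrap sample. Note that scikit-learn draws the random feature subset at each split of a tree rather than once per tree.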

3. Random forest model code implementation

# Random forest classification model; the weak learners are classification decision trees

from sklearn.ensemble import RandomForestClassifier

X = [[1, 2], [3, 4], [5, 6], [7, 8], [9, 10]]

y = [0, 0, 0, 1, 1]

model = RandomForestClassifier(n_estimators=10, random_state=123)

model.fit(X, y)

print(model.predict([[5, 5]]))

# Random forest regression model; the weak learners are regression decision trees

from sklearn.ensemble import RandomForestRegressor

X = [[1, 2], [3, 4], [5, 6], [7, 8], [9, 10]]

y = [1, 2, 3, 4, 5]

model = RandomForestRegressor(n_estimators=10, random_state=123)

model.fit(X, y)

print(model.predict([[5, 5]]))
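To connect the code back to the principle in the previous subsection, the following sketch checks that the regression forest's prediction equals the average of its individual trees' predictions. It reuses the toy data above; `estimators_` is the scikit-learn attribute holding the fitted trees, and the names `X_new`, `tree_preds` and `manual_mean` are introduced here for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

X = [[1, 2], [3, 4], [5, 6], [7, 8], [9, 10]]
y = [1, 2, 3, 4, 5]

model = RandomForestRegressor(n_estimators=10, random_state=123)
model.fit(X, y)

X_new = np.array([[5, 5]])

# estimators_ holds the fitted decision trees of the forest.
tree_preds = np.array([tree.predict(X_new) for tree in model.estimators_])

# Average the individual tree predictions by hand and compare with the forest.
manual_mean = tree_preds.mean(axis=0)
forest_pred = model.predict(X_new)
print(manual_mean, forest_pred)
```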

II. Case Study: Stock Rise and Fall Prediction Model

1. Acquiring basic stock data

#!/usr/bin/env python

# -*- coding:utf-8 -*-

import tushare as ts

# 1. Get daily market data

df = ts.get_hist_data("000002",start='2018-01-01',end="2019-01-31")

# print(df.head())

# 2. Get minute-level data (5-minute bars)

df = ts.get_hist_data("000002",ktype='5')

# print(df.head())

# 3. Get real-time market data

df = ts.get_realtime_quotes("000002")

# print(df.head())

df = df[["code","name","price","bid","ask","volume","amount","time"]]

# print(df)

# 4. Get tick (trade-by-trade) data

# Historical tick data, i.e. the record of every individual trade on a given date

df = ts.get_tick_data("000002",date="2018-12-12",src="tt")

# print(df)

# Tick data for the current day

df = ts.get_today_ticks("000002")

# print(df)

# 5. Get index data

df = ts.get_index()

# print(df.head())

2. Generating derived stock variables

# 1. Get basic stock data

df = ts.get_k_data("000002",start='2015-01-01',end="2019-12-31")

df = df.set_index('date')

# print(df.head())

# 2. Generate simple derived variables

df["close-open"] = (df["close"] - df["open"]) / df["open"]

df["high-low"] = (df["high"] - df["low"]) / df["low"]

df["pre_close"] = df["close"].shift(1)

df["price_change"] = df["close"] - df["pre_close"]

df["p_change"] = (df["close"]-df["pre_close"]) / df["pre_close"]*100

# print(df.head())

# 3. Generate the moving average (MA) indicators

df["MA5"]=df["close"].rolling(5).mean()

df["MA10"]=df["close"].rolling(10).mean()

df.dropna(inplace=True) # Drop rows containing null values; equivalent to df = df.dropna()

# rolling() assumes rows are in ascending date order. If the data were sorted newest-first, sort by date before computing, e.g.:

# df["MA5"] = df["close"].sort_index().rolling(5).mean()

# print(df.head())

# 4. Import TA-Lib, the library for generating derived stock variables (it must be installed first)

import talib

# 5. Use TA-Lib to generate the relative strength index (RSI)

df["RSI"] = talib.RSI(df["close"],timeperiod=12)

# RSI reflects the strength of price rises relative to falls over the short term, which helps in judging the price trend.

# The larger the RSI, the stronger the rises relative to the falls; the smaller the RSI, the weaker.

# 6. Use TA-Lib to generate the momentum indicator (MOM)

df["MoM"] = talib.MOM(df["close"],timeperiod=5)

# MOM reflects how fast the stock price rises or falls over a period of time

# 7. Use TA-Lib to generate the exponential moving average (EMA)

df["EMA12"] = talib.EMA(df["close"],timeperiod=12) # 12-day exponential moving average

df["EMA26"] = talib.EMA(df["close"],timeperiod=26) # 26-day exponential moving average

# EMA is similar to the moving average MA, but its formula is more complicated. EMA is a trend indicator.

# 8. Use TA-Lib to generate the moving average convergence/divergence (MACD) values

df["MACD"],df["MACDsignal"],df["MACDhist"] = talib.MACD(df["close"],fastperiod=6,slowperiod=12,signalperiod=9)

# MACD is a commonly used indicator in the stock market; it is a variable derived from EMA values.

# After generating all the derived variables, use tail() to look at the last 5 rows of the table.

print(df.tail())
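To make the indicator definitions above more concrete, here is a rough pandas-only sketch of MA, EMA, MACD, MOM and RSI on synthetic prices. It is an approximation for illustration, not a reimplementation of TA-Lib: TA-Lib seeds its averages differently and uses a smoothed average for RSI, so its numbers will not match exactly. The random-walk price series and all variable names are assumptions.

```python
import numpy as np
import pandas as pd

# Synthetic closing prices (a random walk), standing in for downloaded data.
rng = np.random.default_rng(42)
close = pd.Series(100 + rng.normal(0, 1, 200).cumsum(), name="close")

# Simple moving average: equal weight on the last 5 closes.
ma5 = close.rolling(5).mean()

# Exponential moving average: recent closes weigh more (alpha = 2 / (span + 1)).
ema_fast = close.ewm(span=6, adjust=False).mean()
ema_slow = close.ewm(span=12, adjust=False).mean()

# MACD line: fast EMA minus slow EMA; signal line: EMA of the MACD line;
# histogram: difference between the two.
macd = ema_fast - ema_slow
macd_signal = macd.ewm(span=9, adjust=False).mean()
macd_hist = macd - macd_signal

# Momentum: today's close minus the close 5 days earlier.
mom5 = close.diff(5)

# RSI (simple-average variant): average gain as a share of average gain plus
# average loss over the last 12 days, scaled to the range 0..100.
delta = close.diff()
avg_gain = delta.clip(lower=0).rolling(12).mean()
avg_loss = (-delta.clip(upper=0)).rolling(12).mean()
rsi12 = 100 * avg_gain / (avg_gain + avg_loss)

print(pd.DataFrame({"MA5": ma5, "MACD": macd, "MOM": mom5, "RSI": rsi12}).tail())
```

The periods 6, 12 and 9 mirror the MACD parameters used in the listing above.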

3. Building the multi-factor model

With the data in place, the model can be built. The model in this case uses multiple features, which in quantitative finance is called a multi-factor model. Because stock data is time series data, some of the data processing differs slightly from the models discussed earlier. We start with some simple data processing and then build the model.

1. Import the required libraries

import tushare as ts # Library for basic stock data

import numpy as np # Scientific computing library

import pandas as pd # Data analysis library

import talib # Library for derived stock variables (technical indicators)

import matplotlib.pyplot as plt # Plotting library

from sklearn.ensemble import RandomForestClassifier # Random forest classification model

from sklearn.metrics import accuracy_score # Prediction accuracy scoring function

2. Get the data

# 1. Get basic stock data

df = ts.get_k_data("000002",start='2015-01-01',end='2020-11-14')

df = df.set_index("date")

# 2. Construct the simple derived variables

df["close-open"] = (df["close"] - df["open"]) / df["open"]

df["high-low"] = (df["high"] - df["low"]) / df["low"]

df["pre_close"] = df["close"].shift(1)

df["price_change"] = df["close"] - df["pre_close"]

df["p_change"] = (df["close"]-df["pre_close"]) / df["pre_close"]*100

# 3. Construct the moving average variables

df["MA5"] = df["close"].rolling(5).mean()

df["MA10"] = df["close"].rolling(10).mean()

df.dropna(inplace=True)

# 4. Construct derived variables with the TA-Lib library

df["RSI"] = talib.RSI(df["close"],timeperiod=12)

df["MoM"] = talib.MOM(df["close"],timeperiod=5)

df["EMA12"] = talib.EMA(df["close"],timeperiod=12) # 12-day exponential moving average

df["EMA26"] = talib.EMA(df["close"],timeperiod=26) # 26-day exponential moving average

df["MACD"],df["MACDsignal"],df["MACDhist"] = talib.MACD(df["close"],fastperiod=6,slowperiod=12,signalperiod=9)

df.dropna(inplace=True)

3. Extract the feature variables and the target variable

X = df[["close","volume","close-open","MA5","MA10","high-low","RSI","MoM","EMA12","MACD","MACDsignal","MACDhist"]]

y = np.where(df["price_change"].shift(-1)>0,1,-1)
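The target construction above relies on `shift(-1)` to align each day's features with the next day's price change. A small sketch on made-up closing prices shows what the resulting labels look like; the five prices are illustrative only.

```python
import numpy as np
import pandas as pd

# Toy closing prices for five consecutive trading days.
df = pd.DataFrame({"close": [10.0, 11.0, 10.5, 10.5, 12.0]})
df["price_change"] = df["close"] - df["close"].shift(1)

# shift(-1) moves tomorrow's price change onto today's row.
next_change = df["price_change"].shift(-1)

# Label 1 if the price rises the next day, otherwise -1.
y = np.where(next_change > 0, 1, -1)
print(y)
```

Two details are worth noticing: a day followed by an unchanged price is labelled -1, and the last row is also labelled -1 because it has no next day (the comparison with NaN is False).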

4. Split the data into a training set and a test set

X_length = X.shape[0]

split = int(X_length * 0.5)

X_train,X_test = X[:split],X[split:]

y_train,y_test = y[:split],y[split:]
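Because the data is a time series, the split above keeps the rows in chronological order instead of shuffling them. As a sketch under illustrative data, the same kind of split can also be obtained from scikit-learn's `train_test_split` by passing `shuffle=False`:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Ten time-ordered samples with two features each (illustrative values).
X = np.arange(20).reshape(10, 2)
y = np.array([1, -1, 1, 1, -1, -1, 1, -1, 1, 1])

# Manual split as in the text: first half for training, second half for testing.
split = int(X.shape[0] * 0.5)
X_train, X_test = X[:split], X[split:]
y_train, y_test = y[:split], y[split:]

# Same split via scikit-learn; shuffle=False preserves the time order.
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.5, shuffle=False)

print(X_train.shape, X_test.shape)
```

Keeping the order means the model is always tested on data that comes after its training period, which avoids leaking future information into training.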

5. Build the model

model = RandomForestClassifier(max_depth=3,n_estimators=10,min_samples_leaf=10,random_state=1)

model.fit(X_train,y_train)

4. Using and evaluating the model

1. Predict the next day's rise or fall in the stock price

y_pred = model.predict(X_test)

a = pd.DataFrame()

a["Predicted"] = list(y_pred)

a["Actual"] = list(y_test)

# predict_proba() returns the predicted probability of each class

y_pred_proba = model.predict_proba(X_test)

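2. Evaluate the model's accuracy

from sklearn.metrics import accuracy_score

score = accuracy_score(y_test,y_pred) # Arguments are (true labels, predicted labels)

print(score)

print(model.score(X_test,y_test)) # The same accuracy, computed by the model itself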
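An accuracy figure is easier to interpret next to a naive baseline. The sketch below compares a set of predictions with always predicting the most frequent class; the label arrays are illustrative values, not results from the model above, and taking the majority class from the test labels is a simplification made for brevity.

```python
import numpy as np
from sklearn.metrics import accuracy_score

# Illustrative true labels and predictions (not results from the model above).
y_test = np.array([1, -1, 1, 1, -1, 1, 1, -1])
y_pred = np.array([1, 1, 1, -1, -1, 1, 1, 1])

model_acc = accuracy_score(y_test, y_pred)

# Baseline: always predict the most frequent class.
labels, counts = np.unique(y_test, return_counts=True)
majority = labels[np.argmax(counts)]
baseline_acc = accuracy_score(y_test, np.full_like(y_test, majority))

print(model_acc, baseline_acc)
```

In this toy case both accuracies are equal, which would suggest the predictions add nothing beyond the class imbalance.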

3. Analyze the importance of the feature variables

features = X.columns

importances = model.feature_importances_

a = pd.DataFrame()

a["Feature"] = features

a["Importance"] = importances

a = a.sort_values("Importance",ascending=False)

print(a)

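5. Plot the backtest return curve

This step mainly relies on the cumulative product function cumprod().

X_test = X_test.copy() # Work on a copy so that adding columns does not write to a slice of X

X_test["prediction"] = model.predict(X_test)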

X_test["p_change"] = (X_test["close"] - X_test["close"].shift(1)) / X_test["close"].shift(1)

X_test["origin"] = (X_test["p_change"]+1).cumprod()

X_test["strategy"] = (X_test["prediction"].shift(1) * X_test["p_change"]+1).cumprod()

X_test[["strategy","origin"]].dropna().plot()

plt.gcf().autofmt_xdate()

plt.show()
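How `cumprod()` turns daily returns into the two curves can be traced on a tiny hand-made example. The prices and signals below are hypothetical, chosen so the arithmetic is easy to follow; `bt` is a name introduced for this sketch.

```python
import numpy as np
import pandas as pd

bt = pd.DataFrame({
    "close": [100.0, 110.0, 99.0, 108.9],
    "prediction": [1, -1, 1, 1],  # hypothetical signal for the NEXT day
})

# Daily return as a fraction: +10%, -10%, +10% after the first day.
bt["p_change"] = bt["close"].pct_change()

# Buy and hold: multiply (1 + daily return) cumulatively.
bt["origin"] = (bt["p_change"] + 1).cumprod()

# Strategy: yesterday's prediction (shift(1)) decides today's position,
# +1 for long and -1 for short, so a correct short call earns the drop.
bt["strategy"] = (bt["prediction"].shift(1) * bt["p_change"] + 1).cumprod()

print(bt[["origin", "strategy"]])
```

Here the signals happen to be right every day, so the strategy curve ends at about 1.331 while buy and hold ends at 1.089. The `shift(1)` matters: without it, each day's return would be multiplied by a prediction that was made using that same day's data.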

Summary

Reference book: 《Python Big Data Analysis and Machine Learning Business Case Practice》

边栏推荐

- Growth Diary 01

- Compliance management 101: processes, planning and challenges

- Servlet

- [daily exercises] merge two ordered arrays

- 修复Yum依赖冲突

- NDK learning notes (13) create an avi video player using avilib+opengl es 2.0

- NDK R21 compiles ffmpeg 4.2.2 (x86, x86_64, armv7, armv8)

- 使用Genymotion Scrapy控制手机

- Review XML and JSON

- Mingw-w64 installation instructions

猜你喜欢

Altiumdesigner2020 import 3D body SolidWorks 3D model

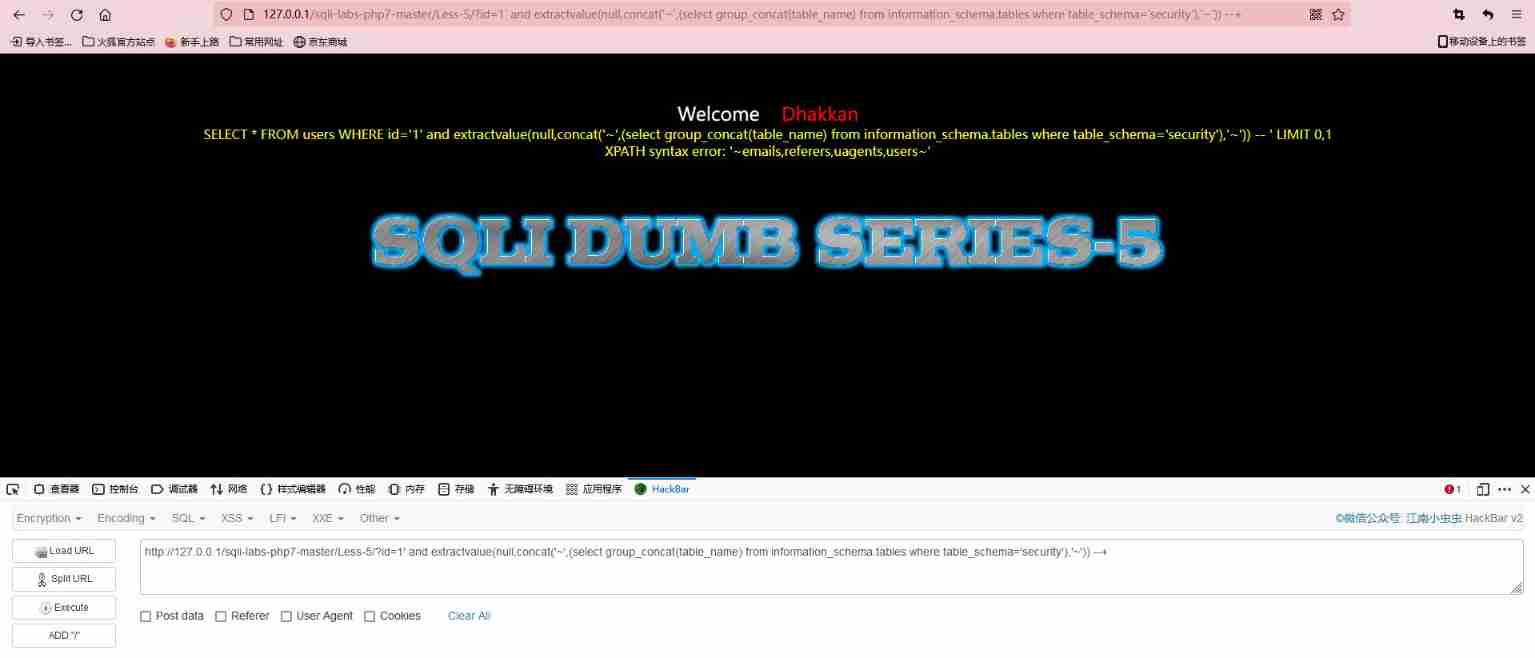

Error reporting injection of SQL injection

All the benefits of ci/cd, but greener

Yonghong Bi product experience (I) data source module

Which company is better in JIRA organizational structure management?

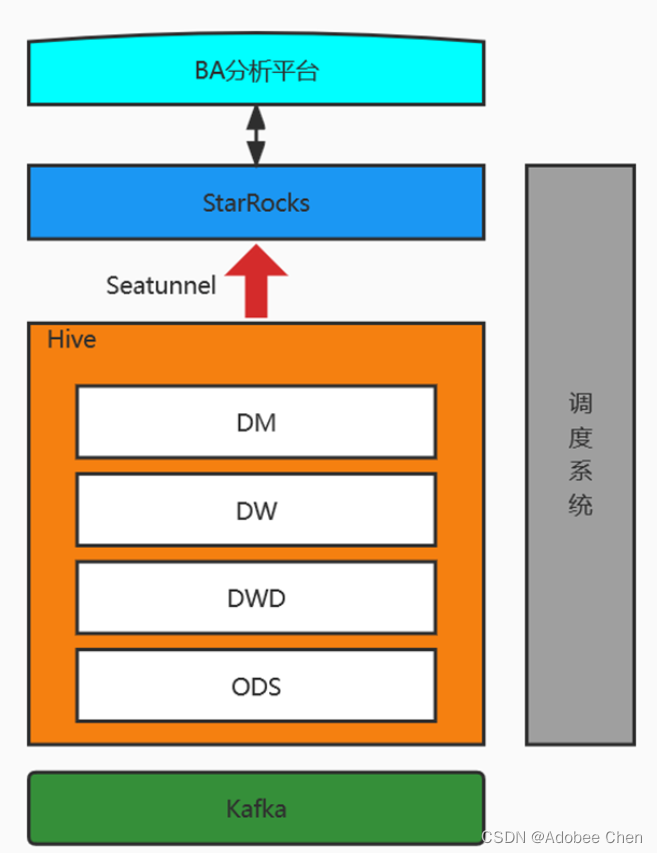

Implementation of data access platform scheme (Youzu network)

How to use the markdown editor

How to use perforce helix core with CI build server

Box model

Build the first power cloud platform

随机推荐

Configure the rust compilation environment

Using Internet of things technology to accelerate digital transformation

Data quality: the core of data governance

Database basic instruction set

Build the first power cloud platform

Deployment of Flink

使用Batch枚舉文件

Wechat applet learning record

Write a list with kotlin

VSCode插件开发

使用Batch枚举文件

Principle of copyonwritearraylist copy on write

我们真的需要会议耳机吗?

Review Servlet

Informatica:数据质量管理六步法

Invert an array with for

Linux Installation redis

How to use perforce helix core with CI build server

call和apply和bind的区别

Basic use of BufferedReader and bufferedwriter