
ViT (Vision Transformer): Principles and Code Walkthrough

2022-07-06 07:39:00 【bai666】

Course link: https://edu.51cto.com/course/30169.html

The Transformer has achieved SOTA results on many NLP (natural language processing) tasks. ViT (Vision Transformer) is a milestone work in applying the Transformer to CV (computer vision), and many variants have been developed since, such as Swin Transformer.

The ViT (Vision Transformer) model was published in the paper "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale". It uses a pure Transformer for image classification. After pre-training on the JFT-300M dataset, ViT can exceed the performance of the convolutional neural network ResNet while requiring fewer computational resources to train.
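To make the "16x16 words" idea concrete, here is a minimal sketch of the arithmetic that turns an image into a token sequence. The 224x224 RGB input is an assumption chosen for illustration (it is a common setting, but nothing above fixes it), and the helper names `vit_sequence_length` and `patch_vector_dim` are hypothetical.

```python
def vit_sequence_length(image_size: int, patch_size: int, add_class_token: bool = True) -> int:
    """Number of tokens the Transformer encoder sees for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size
    num_patches = patches_per_side ** 2
    # ViT prepends one learnable [class] token to the patch sequence.
    return num_patches + (1 if add_class_token else 0)


def patch_vector_dim(patch_size: int, channels: int = 3) -> int:
    """Length of one flattened patch before the linear projection."""
    return patch_size * patch_size * channels


# Assumed example input: 224x224 RGB image, 16x16 patches.
seq_len = vit_sequence_length(224, 16)
flat_dim = patch_vector_dim(16)
print(seq_len)   # 197 = 14 * 14 patches + 1 class token
print(flat_dim)  # 768 = 16 * 16 * 3
```

Each 16x16 patch plays the role of one "word", so the image becomes a short sequence that a standard Transformer encoder can process.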

This course explains the principles of ViT and its PyTorch implementation in detail, to help you master both how it works and how it is built. Two code implementations are covered: one uses the timm library, and the other uses einops/einsum.

The principles part covers: an overview of the Transformer architecture, the Transformer encoder, the Transformer decoder, an overview of the ViT architecture, the ViT model, and ViT performance and analysis.

The code part uses Jupyter Notebook to read through the PyTorch code of ViT line by line. It covers: installing PyTorch, a walkthrough of the timm implementation of ViT, an introduction to einops/einsum, and a walkthrough of the einops/einsum implementation of ViT.

边栏推荐

- Significance and measures of encryption protection for intelligent terminal equipment

- HTTP cache, forced cache, negotiated cache

- opencv学习笔记九--背景建模+光流估计

- C # create database connection object SQLite database

- Jerry needs to modify the profile definition of GATT [chapter]

- Apache middleware vulnerability recurrence

- Typescript interface and the use of generics

- CF1036C Classy Numbers 题解

- Ble of Jerry [chapter]

- OpenJudge NOI 2.1 1661:Bomb Game

猜你喜欢

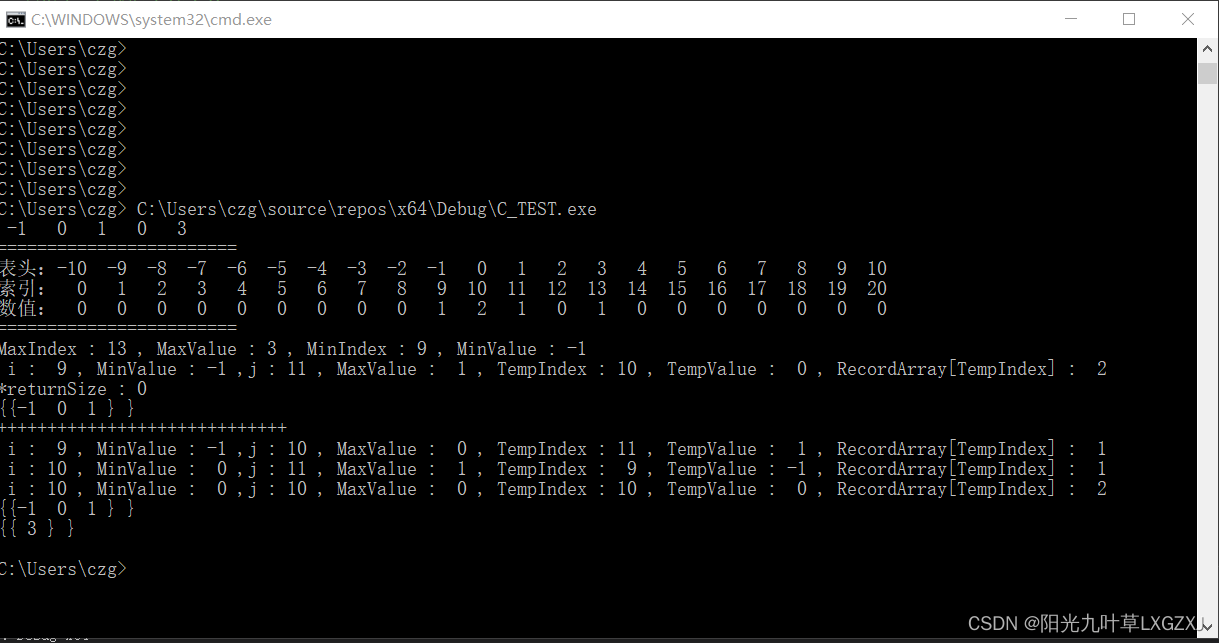

leecode-C语言实现-15. 三数之和------思路待改进版

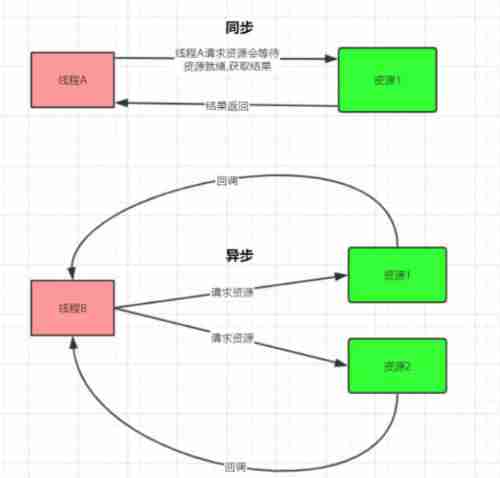

NiO programming introduction

![[MySQL learning notes 30] lock (non tutorial)](/img/9b/1e27575d83ff40bebde118b925f609.png)

[MySQL learning notes 30] lock (non tutorial)

How to delete all the words before or after a symbol in word

Generator Foundation

![Ble of Jerry [chapter]](/img/00/27486ad68bf491997d10e387c32dd4.png)

Ble of Jerry [chapter]

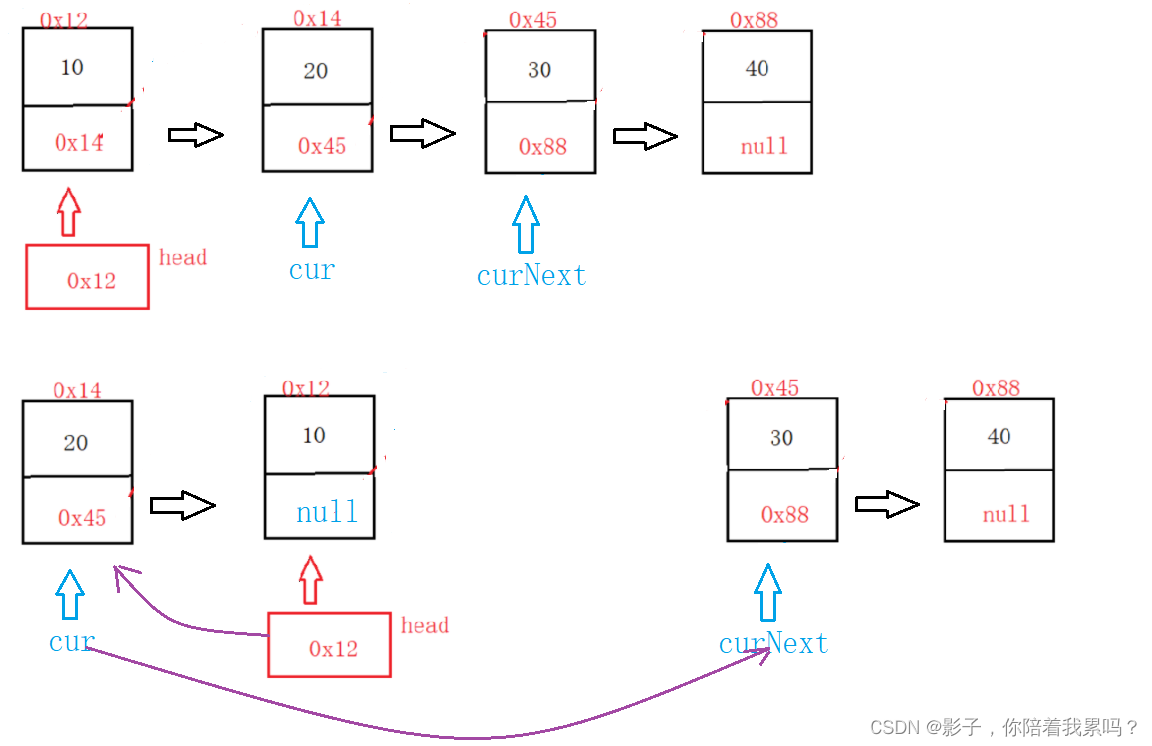

链表面试题(图文详解)

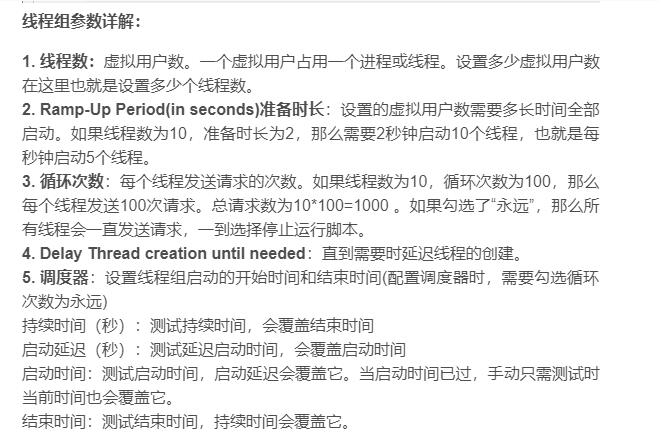

JMeter performance test steps practical tutorial

杰理之BLE【篇】

![[1. Delphi foundation] 1 Introduction to Delphi Programming](/img/14/272f7b537eedb0267a795dba78020d.jpg)

[1. Delphi foundation] 1 Introduction to Delphi Programming

随机推荐

word中把带有某个符号的行全部选中,更改为标题

Sélectionnez toutes les lignes avec un symbole dans Word et changez - les en titre

Simulation of holographic interferogram and phase reconstruction of Fourier transform based on MATLAB

Description of octomap averagenodecolor function

剪映的相关介绍

TypeScript void 基础类型

Solution: intelligent site intelligent inspection scheme video monitoring system

杰理之如若需要大包发送,需要手机端修改 MTU【篇】

Google可能在春节后回归中国市场。

TS 类型体操 之 循环中的键值判断,as 关键字使用

Summary of Digital IC design written examination questions (I)

解决方案:智慧工地智能巡检方案视频监控系统

How can word delete English only and keep Chinese or delete Chinese and keep English

leecode-C語言實現-15. 三數之和------思路待改進版

opencv学习笔记九--背景建模+光流估计

[1. Delphi foundation] 1 Introduction to Delphi Programming

Force buckle day31

Simple and understandable high-precision addition in C language

In the era of digital economy, how to ensure security?

智能终端设备加密防护的意义和措施