Let's Talk About Data Center Networks Again

2022-06-11 04:13:00 【Fresh jujube class】

The day before yesterday, Jujube Jun published an article about data center networks (link). Many readers left comments saying they did not understand it.

In keeping with the principle of "popularizing communications knowledge to the very end", today I will continue with this topic.

The story has to start from the very beginning.

In the summer of 1973, two young scientists (Vinton Cerf and Robert Kahn) set out to find a way for different machines to communicate with each other over the newly born computer networks.

Soon, on a yellow notepad, they sketched the prototype of the TCP/IP protocol family.

At almost the same time, Metcalfe and Boggs of Xerox invented Ethernet.

As we all know now, the earliest prototype of the Internet was ARPANET, created in the United States.

ARPANET's original protocol performed poorly and could not keep up with the growing number of computing nodes. So, at the end of the 1970s, the pioneers set about replacing ARPANET's core protocol with TCP/IP (1978).

By the late 1980s, with the help of TCP/IP, ARPANET had expanded rapidly and spawned many sibling networks. These networks interconnected with one another and became the world-famous Internet.

It is fair to say that TCP/IP and Ethernet were the cornerstones of the Internet's early rise. They are cheap, structurally simple, and easy to develop and deploy, and they contributed greatly to the spread of computer networking.

Later, however, as networks grew rapidly in scale, traditional TCP/IP and Ethernet began to show their age and could no longer meet the Internet's demand for high bandwidth and high speed.

The first problem was storage.

Early storage, as everyone knows, was the machine's built-in hard disk. The disk connected to the motherboard through an interface such as IDE, SCSI, or SAS, and the CPU and memory accessed disk data over the motherboard's bus.

Later, demand for storage capacity kept growing, and safe backup had to be considered as well (RAID 1 or RAID 5 was needed). More and more disks were required, a handful of drives was no longer enough, and there was no room left inside the server. So the disk array appeared.

Disk array

A disk array is a dedicated device for housing disks; a single enclosure holds dozens of drives.

Disk data access has always been a server bottleneck. At first, network cables or special cables connected the server to the disk array, but these soon proved insufficient. So optical fiber came into use. This is Fibre Channel (FC).

Around 2000, Fibre Channel was still a fairly high-end technology, and its cost was not low.

At that time, optical technology in public communication networks (backbone networks) was at the SDH 155M and 622M stage; 2.5G SDH and wavelength-division technology were just getting started and were not yet widespread. Only later did optical fiber take off, with capacity jumping rapidly toward 10G (2003), 40G (2010), 100G (2010), and 400G (today).

Since fiber was out of reach, the ordinary networks in data centers had to keep using copper network cables and Ethernet.

Fortunately, communication requirements between servers were not that high at the time. 100M and 1000M network cables could just about meet the needs of general services. Around 2008, Ethernet speeds had only just reached the 1 Gbps level.

After 2010, new trouble arrived.

Besides storage, people began to focus on computing power, for a jumble of reasons including cloud computing, graphics processing, artificial intelligence, supercomputing, and Bitcoin.

As Moore's law gradually weakened, CPUs alone could no longer deliver the required growth in computing power. The "toothpaste" was getting harder and harder to squeeze, so GPUs began to rise. Using the GPU on a graphics card for computation became the mainstream trend in the industry.

Driven by the rapid development of AI, major companies have also produced AI chips, APUs, and all kinds of xPU accelerators.

Computing power has expanded rapidly (by more than 100 times), and the immediate consequence is an exponential increase in server data throughput.

Apart from AI's extreme demand for computing power, there is another significant trend in the data center: data traffic between servers has increased dramatically.

With the rapid development of the Internet and a soaring number of users, the traditional centralized computing architecture could no longer meet demand and began to give way to distributed architecture.

For example, during today's 618 shopping festival, everyone is shopping. One server can handle a hundred or so users, but definitely not tens or hundreds of millions. So, with a distributed architecture, one service is deployed across N servers that share the load.
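To make "one service on N servers" concrete, here is a minimal sketch of spreading users across servers by hashing the user ID. The server names, the value N = 8, and the `pick_server` function are all made up for illustration; real sites typically use dedicated load balancers and more elaborate schemes.

```python
import hashlib
from collections import Counter


def pick_server(user_id: str, servers: list[str]) -> str:
    """Map a user to one server by hashing the user ID (hypothetical scheme)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# N = 8 servers and 100,000 users are arbitrary numbers chosen for the demo.
servers = [f"server-{i}" for i in range(8)]
load = Counter(pick_server(f"user-{u}", servers) for u in range(100_000))

for name in servers:
    print(name, load[name])
```

Each server ends up with roughly one eighth of the users. The catch, as the next paragraphs explain, is that these servers then have to talk to each other a great deal.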

With a distributed architecture, data traffic between servers increases greatly. Traffic pressure on the data center's internal interconnection network rises sharply, and the same is true between data centers.

These horizontal (the technical term is "east-west") data messages are sometimes very large. For some graphics processing data, the packet size is even at the Gb level.

For all of these reasons, traditional Ethernet cannot handle such demands on transmission bandwidth and latency (high-performance computing has very strict latency requirements). So a small number of vendors developed a proprietary network channel technology: the InfiniBand network (literally "infinite bandwidth", abbreviated IB).

FC vs IB vs Ethernet

IB has very low latency, but it is costly, complicated to maintain, and incompatible with existing technologies. So, like FC, it is used only for special needs.

While computing power advanced rapidly, the hard disk did not sit still either: the solid-state drive (SSD) arrived to replace the mechanical hard disk. Memory, from DDR to DDR2, DDR3, DDR4, and even DDR5, has also been quietly and steadily evolving, raising frequency and bandwidth.

The explosion in processor, disk, and memory capability ultimately shifted the pressure onto the network interface card (NIC) and the network.

Anyone who has studied the basics of computer networking knows that traditional Ethernet is based on the carrier sense multiple access with collision detection (CSMA/CD) mechanism. It is very prone to congestion, which increases dynamic latency and frequently causes packet loss.
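To see why the delay under CSMA/CD is "dynamic", here is a minimal sketch of the truncated binary exponential backoff that classic shared-medium Ethernet applies after a collision. The constants are the classic 10 Mbps parameters (51.2 us slot time, 16 attempts, window capped after 10 collisions); the function names are made up for illustration.

```python
import random

SLOT_TIME_US = 51.2   # slot time of classic 10 Mbps Ethernet, in microseconds
MAX_ATTEMPTS = 16     # the frame is dropped once 16 attempts have collided
BACKOFF_LIMIT = 10    # the random window stops doubling after 10 collisions


def backoff_slots(collision_count: int, rng: random.Random) -> int:
    """Pick how many slot times to wait after the n-th collision of a frame."""
    if collision_count < 1:
        raise ValueError("collision_count starts at 1")
    if collision_count >= MAX_ATTEMPTS:
        raise RuntimeError("excessive collisions: the frame is dropped")
    exponent = min(collision_count, BACKOFF_LIMIT)
    return rng.randrange(2 ** exponent)   # uniform in [0, 2^exponent - 1]


def max_backoff_us(collision_count: int) -> float:
    """Worst-case wait after the n-th collision, in microseconds."""
    exponent = min(collision_count, BACKOFF_LIMIT)
    return (2 ** exponent - 1) * SLOT_TIME_US


rng = random.Random(42)  # fixed seed so the run is repeatable
for n in (1, 2, 3, 10, 15):
    slots = backoff_slots(n, rng)
    print(f"collision {n:2d}: wait {slots:4d} slots, "
          f"worst case {max_backoff_us(n):9.1f} us")
```

The waiting window doubles with every collision, so under heavy load a frame can wait anywhere from zero to tens of milliseconds, and after too many collisions it is simply dropped. That is the "dynamic delay plus packet loss" behaviour described above.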

As for the TCP/IP protocol suite, it has been in service for too long. It is a 40-year-old technology with plenty of problems.

For example, when the TCP protocol stack receives or sends a message, the kernel has to perform multiple context switches, each costing roughly 5 us to 10 us of latency. In addition, at least three data copies are required, and protocol encapsulation depends on the CPU.

These protocol processing delays add up. A total on the order of ten microseconds may look small, but for high-performance computing it is intolerable.

Besides latency, a TCP/IP network requires the host CPU to take part in multiple memory copies within the protocol stack. The larger the network and the higher the bandwidth, the heavier the CPU's scheduling burden when sending and receiving data, which keeps the CPU under continuously high load.

According to an industry rule of thumb, every bit per second of throughput costs about 1 Hz of CPU. So when network bandwidth reaches 25G or more (at full load), the CPU has to spend 25 GHz of computing power just to handle the network. Compare that with the clock frequency of the CPU in your own computer.
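The arithmetic behind that rule of thumb can be sketched in a few lines. The 1 Hz-per-bit figure is the rule quoted above; the 3 GHz per-core clock and the function names are assumptions made purely for illustration.

```python
import math


def cpu_ghz_for_network(bandwidth_gbps: float, hz_per_bit: float = 1.0) -> float:
    """CPU clock (in GHz) consumed by the network under the 1 Hz-per-bit rule."""
    return bandwidth_gbps * hz_per_bit


def cores_needed(bandwidth_gbps: float, core_clock_ghz: float = 3.0) -> int:
    """Whole cores kept busy by networking, assuming a hypothetical 3 GHz core."""
    return math.ceil(cpu_ghz_for_network(bandwidth_gbps) / core_clock_ghz)


for bandwidth in (1, 10, 25, 100):
    print(f"{bandwidth:3d} Gbps -> {cpu_ghz_for_network(bandwidth):5.1f} GHz, "
          f"about {cores_needed(bandwidth)} cores at 3 GHz")
```

Under these assumptions a fully loaded 25G link keeps 9 cores busy and a 100G link keeps 34 busy, doing nothing but moving packets.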

So, can't we simply change the network technology?

It is not impossible, but it is very difficult.

The CPU, hard disk, and memory are all internal server hardware. You can simply swap them out, and nothing outside is affected.

Communication network technology, however, is an external interconnection technology, and changes have to be negotiated and made together. If I change and you don't, the network breaks.

Having the Internet all over the world switch to a new protocol at the same time: do you think that is possible?

No. That is why, as with IPv6 replacing IPv4 right now, it has to happen step by step: dual stack first (supporting both v4 and v6), and then slowly phasing out v4.

The physical channel of the data center network is a little easier: replacing network cables with optical fiber can start with small-scale changes and expand gradually. Once fiber is in place, the problems of network speed and bandwidth are gradually relieved.

Insufficient NIC capability is also relatively easy to solve. Since the CPU can't keep up, let the NIC do the computing itself. Hence the now very popular smart NIC. To some extent, this is a way of pushing computing power downward.

Colleagues who work on the 5G core network should be familiar with this. The UPF, the media-plane network element of the 5G core network, carries all the service data from the radio side and is under great pressure.

Today, UPF network elements use smart NIC technology: the NIC handles protocol processing itself, relieving pressure on the CPU and increasing traffic throughput.

And how do we solve the problem of the data center's communication network architecture? The experts thought for a long time and in the end decided to change the architecture itself, redesigning the scheme from the perspective of the server's internal communication architecture.

In the new scheme, application data no longer passes through the CPU and the complex operating system but communicates directly with the NIC.

This is the new communication mechanism: RDMA (Remote Direct Memory Access).

RDMA is like a "cut out the middleman" technology, or a "back door" technology.

RDMA's kernel bypass mechanism lets the application read and write data directly to and from the NIC, reducing the data transmission latency inside the server to close to 1 us.

Meanwhile, RDMA's zero-copy memory mechanism allows the receiver to read data directly from the sender's memory, which greatly reduces the CPU's burden and improves CPU efficiency.
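The difference between copying data and merely referring to it can be felt even inside a single Python process. The sketch below is only an analogy: it uses the standard `memoryview` type, not RDMA, and involves no NIC at all. The 1 MB buffer and the variable names are arbitrary.

```python
# Stands in for the sender's buffer; the 1 MB size is arbitrary.
payload = bytearray(b"x" * 1_000_000)

# Conventional path: slicing a bytearray allocates new memory and copies bytes.
copied = bytes(payload[:4096])

# "Zero-copy" path: a memoryview slice is just a window onto the same memory.
view = memoryview(payload)[:4096]

# Change the original buffer after both have been created.
payload[0] = ord("y")

print(copied[0:1])        # the copy still holds the old byte
print(bytes(view[0:1]))   # the view sees the new byte, because nothing was copied
```

Every copy avoided is CPU time and memory bandwidth saved. RDMA applies the same idea across machines, with the NIC reading and writing registered application memory directly.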

RDMA is far more capable than TCP/IP. It has gradually become a mainstream network communication protocol stack and, in my view, will eventually replace TCP/IP.

RDMA has two types of network bearer: dedicated InfiniBand and traditional Ethernet.

When RDMA was first proposed, it was carried over InfiniBand networks.

However, InfiniBand is a closed architecture. The switches are special products from specific vendors and use proprietary protocols, so they are not compatible with existing networks. On top of that, the operation and maintenance requirements are too complex. It is not a reasonable choice for users.

So the experts set out to port RDMA to Ethernet.

The awkward part is that RDMA on traditional Ethernet runs into a big problem.

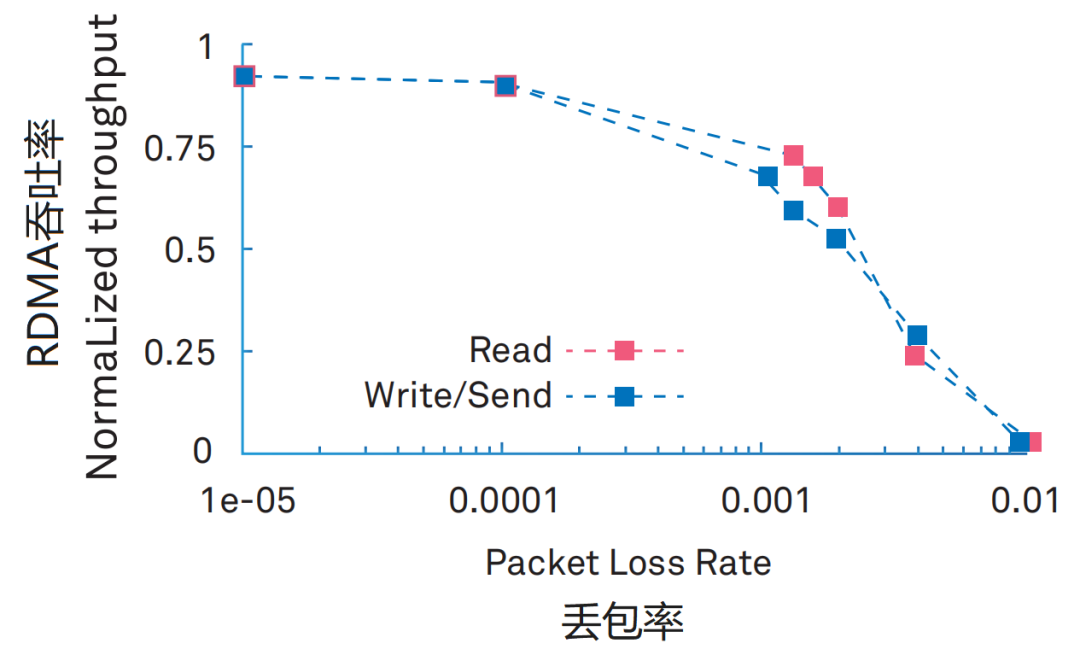

RDMA is extremely demanding about packet loss. A packet loss rate of 0.1% causes RDMA throughput to drop sharply, and a 2% packet loss rate drives RDMA throughput down to 0.

InfiniBand networks are expensive, but they are lossless. So when RDMA is paired with InfiniBand, there is no need to design an elaborate packet loss protection mechanism.

Now move to a traditional Ethernet environment, whose attitude to life can be summed up as "let it slide". Ethernet sends packets on a "best effort" basis. Losing packets is routine, and anything lost is simply retransmitted.

So the experts must solve Ethernet's packet loss problem before RDMA can be ported to Ethernet. This brings us to the technology mentioned in the article from the day before yesterday: the intelligent lossless technology of Huawei's hyper-converged data center network.

Put bluntly, the idea is to make Ethernet achieve zero packet loss so that it can support RDMA. With RDMA in place, the hyper-converged data center network becomes possible.

I won't repeat the details of the zero packet loss technology here; see the article from the day before yesterday (here is the link again: here).

It is worth mentioning that AI-based intelligent lossless networking is a Huawei first, but the hyper-converged data center is a public concept. Besides Huawei, other vendors (for example Sangfor and Lenovo) also talk about hyper-converged data centers, and the concept was already very popular back in 2017.

What is hyper-convergence?

To be precise, hyper-convergence means a single network that carries multiple kinds of traffic: HPC (high-performance computing), storage, and general services. Processors, storage, and communication are all resources managed by the hyper-converged system, and all are on an equal footing.

Hyper-convergence must not only meet the extreme performance demands for low latency and high bandwidth, but also keep costs low: it cannot be too expensive or too hard to maintain.

In the future, the overall network architecture of the data center will stick with the spine-leaf network all the way (what exactly is a spine-leaf network?). For routing and switching, SDN, IPv6, and SRv6 will develop gradually. At the micro-architecture level, RDMA will develop and replace TCP/IP. At the physical layer, all-optical technology will keep advancing: 400G, 800G, 1.2T…

My personal conjecture is that the current mix of electrical and optical layers will eventually be unified as optical. After optical channels and all-optical cross-connects, light will penetrate into the server itself: the server motherboard will no longer be an ordinary PCB but a fiber backplane. Between chips, there will be all-optical channels. Inside the chip, perhaps light as well.

Optical channels are king

As for routing and scheduling, that will be AI's world: network traffic and protocols will all be taken over by AI, with no human intervention needed. Large numbers of communication engineers will be laid off.

Well, that is it for this introduction to data center communication networks. I wonder whether it made sense this time.

If not, just read it again.

—— The End ——

Extended reading :

How did the Internet come into being ?

What exactly is a spine-leaf network (Spine-Leaf)?

What is OXC (all-optical cross-connect)?

A popular-science introduction to ROADM

The strongest introduction to storage technology
