
MR-WordCount

2022-06-28 05:50:00 【Hill】

pom.xml

<dependencies>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>3.2.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>3.2.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>3.2.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-mapreduce-client-core</artifactId>
        <version>3.2.2</version>
    </dependency>
</dependencies>

<build>
    <plugins>
        <!-- Main class written to the jar manifest -->
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-jar-plugin</artifactId>
            <version>2.4</version>
            <configuration>
                <archive>
                    <manifest>
                        <addClasspath>true</addClasspath>
                        <classpathPrefix>lib/</classpathPrefix>
                        <mainClass>com.flink.mr.demo.wordcount.NeoWordCount</mainClass>
                    </manifest>
                </archive>
            </configuration>
        </plugin>
        <!-- JDK version -->
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>3.0</version>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
                <encoding>UTF-8</encoding>
            </configuration>
        </plugin>
    </plugins>
</build>
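The manifest's Main-Class has to name a class that actually exists in the jar (it matters, for example, when the jar is launched with hadoop jar without naming a class). A minimal sketch for checking a packaged jar using only java.util.jar; ManifestCheck is a hypothetical helper name, and the default path is simply the jar location used later in this article.

```java
import java.io.File;
import java.io.IOException;
import java.util.jar.Attributes;
import java.util.jar.JarFile;
import java.util.jar.Manifest;

public class ManifestCheck {

    // Returns the Main-Class attribute from the jar's manifest, or null if there is none
    public static String mainClassOf(File jar) throws IOException {
        try (JarFile jf = new JarFile(jar)) {
            Manifest mf = jf.getManifest();
            if (mf == null) {
                return null;
            }
            return mf.getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumed default: the local jar path used in this article
        String path = args.length > 0 ? args[0] : "D:\\bigdata\\mapreduces\\flink-mr.jar";
        System.out.println("Main-Class: " + mainClassOf(new File(path)));
    }
}
```

If the printed value is not com.flink.mr.demo.wordcount.NeoWordCount, the mainClass entry in pom.xml does not match the Java source.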

NeoWordCount.java

MapReduce programming example (submitted from a local Windows machine to a remote YARN cluster):

package com.flink.mr.demo.wordcount;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import java.io.IOException;
import java.net.URI;

public class NeoWordCount {

    // Mapper: emits (word, 1) for every word in an input line
    public static class NeoWordCountMapper extends Mapper<LongWritable, Text, Text, LongWritable> {
        private final LongWritable ONE = new LongWritable(1);
        private final Text outputK = new Text();

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Split on runs of whitespace and skip empty tokens,
            // so repeated or leading spaces do not produce "" as a word
            for (String s : value.toString().split("\\s+")) {
                if (s.isEmpty()) {
                    continue;
                }
                outputK.set(s);
                context.write(outputK, ONE);
            }
        }
    }

    // Reducer (also used as the combiner): sums the counts for each word
    public static class NeoWordCountReducer extends Reducer<Text, LongWritable, Text, LongWritable> {
        private final LongWritable outputV = new LongWritable();

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context) throws IOException, InterruptedException {
            long sum = 0;
            for (LongWritable value : values) {
                sum += value.get();
            }
            outputV.set(sum);
            context.write(key, outputV);
        }
    }

    public static void main(String[] args) throws Exception {
        /*GenericOptionsParser parser = new GenericOptionsParser(args);
        Job job = Job.getInstance(parser.getConfiguration());
        args = parser.getRemainingArgs();*/

        // Access HDFS and YARN as the user "bigdata"
        System.setProperty("HADOOP_USER_NAME", "bigdata");

        Configuration config = new Configuration();
        config.set("fs.defaultFS", "hdfs://10.1.1.1:9000");
        config.set("mapreduce.framework.name", "yarn");
        config.set("yarn.resourcemanager.hostname", "10.1.1.1");
        // Cross-platform submission (e.g. from a Windows client to a Linux cluster)
        config.set("mapreduce.app-submission.cross-platform", "true");

        Job job = Job.getInstance(config);
        // Locally built jar that is shipped to the cluster
        job.setJar("D:\\bigdata\\mapreduces\\flink-mr.jar");

        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);

        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);

        job.setMapperClass(NeoWordCountMapper.class);
        job.setReducerClass(NeoWordCountReducer.class);
        job.setCombinerClass(NeoWordCountReducer.class);

        Path inputPath = new Path("/user/bigdata/demo/001/input");
        FileInputFormat.setInputPaths(job, inputPath);

        // The output directory must not exist when the job starts, so delete it first
        Path outputPath = new Path("/user/bigdata/demo/001/output");
        FileSystem fs = FileSystem.get(new URI("hdfs://10.1.1.1:9000"), config, "bigdata");
        if (fs.exists(outputPath)) {
            fs.delete(outputPath, true);
        }
        FileOutputFormat.setOutputPath(job, outputPath);

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
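The map, combine, shuffle/sort and reduce steps of this job can be mimicked in memory without a cluster, which helps when reasoning about the data flow. The sketch below is a hypothetical stand-in (WordCountSimulation and its methods are illustrative names, not Hadoop APIs): each inner list plays the role of one input split, a TreeMap stands in for the sorted shuffle, and the same summing function serves as both combiner and reducer. That reuse is safe because summing is associative and commutative; Hadoop may run a combiner zero, one or several times, whereas this sketch always runs it exactly once per split.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class WordCountSimulation {

    // Map step: one (word, 1) pair per token, like NeoWordCountMapper
    public static List<Map.Entry<String, Long>> map(String line) {
        List<Map.Entry<String, Long>> out = new ArrayList<>();
        for (String s : line.split("\\s+")) {
            if (!s.isEmpty()) { // leading whitespace yields an empty first token
                out.add(new AbstractMap.SimpleEntry<>(s, 1L));
            }
        }
        return out;
    }

    // Combiner/reducer step: sum values per key; TreeMap keeps keys sorted
    public static TreeMap<String, Long> sumByKey(List<Map.Entry<String, Long>> pairs) {
        TreeMap<String, Long> sums = new TreeMap<>();
        for (Map.Entry<String, Long> p : pairs) {
            sums.merge(p.getKey(), p.getValue(), Long::sum);
        }
        return sums;
    }

    // Whole pipeline: map and combine each split, then reduce the partial sums
    public static TreeMap<String, Long> run(List<List<String>> splits) {
        List<Map.Entry<String, Long>> shuffled = new ArrayList<>();
        for (List<String> split : splits) {
            List<Map.Entry<String, Long>> mapped = new ArrayList<>();
            for (String line : split) {
                mapped.addAll(map(line));
            }
            shuffled.addAll(sumByKey(mapped).entrySet()); // combiner output of this split
        }
        return sumByKey(shuffled); // final reduce
    }

    public static void main(String[] args) {
        List<List<String>> splits = Arrays.asList(
                Arrays.asList("hello world", "hello  hadoop"),
                Arrays.asList("hadoop mapreduce hello"));
        // Same "key<TAB>value" layout that TextOutputFormat writes
        run(splits).forEach((k, v) -> System.out.println(k + "\t" + v));
    }
}
```

Running main prints hadoop 2, hello 3, mapreduce 1 and world 1 (tab-separated, one per line, sorted by word). The double space in "hello  hadoop" shows why the mapper splits on \\s+ rather than a single space.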

Environment requirements

1. Package the project locally; the job expects the jar at:

D:\bigdata\mapreduces\flink-mr.jar

2. Hadoop environment

Cluster host 10.1.1.1, running HDFS (fs.defaultFS hdfs://10.1.1.1:9000) and the YARN ResourceManager.

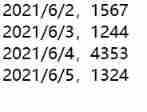

3. Prepare the input data in HDFS:

hdfs://10.1.1.1:9000/user/bigdata/demo/001/input

Upload several text files to this directory for the job to count.

4. Run NeoWordCount's main method locally; it submits the MR job to the cluster.
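When the job finishes, the reducer output is written under /user/bigdata/demo/001/output as part-r-00000 (plus an empty _SUCCESS marker), one word<TAB>count line per word. A minimal sketch for checking a downloaded copy of that file; OutputReader is a hypothetical helper name, and it assumes the file has already been fetched, for example with hdfs dfs -get.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class OutputReader {

    // Parses TextOutputFormat lines of the form "word<TAB>count", keeping file order
    public static Map<String, Long> parse(List<String> lines) {
        Map<String, Long> counts = new LinkedHashMap<>();
        for (String line : lines) {
            int tab = line.indexOf('\t');
            if (tab < 0) {
                continue; // skip blank or malformed lines
            }
            counts.put(line.substring(0, tab), Long.parseLong(line.substring(tab + 1).trim()));
        }
        return counts;
    }

    public static void main(String[] args) throws IOException {
        // Assumed local copy, e.g. from:
        //   hdfs dfs -get /user/bigdata/demo/001/output/part-r-00000 .
        Path file = Paths.get(args.length > 0 ? args[0] : "part-r-00000");
        List<String> lines = Files.readAllLines(file, StandardCharsets.UTF_8);
        parse(lines).forEach((word, n) -> System.out.println(word + " -> " + n));
    }
}
```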
