ZooKeeper ===> animal management system

2022-06-11 08:26:00 【Van Gogh's pig V】

Overview

As the name suggests, ZooKeeper is a keeper of animals. Think of the servers as different animals and of ZooKeeper as the administrator who watches their state. Every time a client wants to watch an animal it must go through the administrator, and once the keeper finds that an animal has died, the clients interested in that animal are notified.

Of course, this is only a metaphor. In practice, ZK is a cluster made up of one leader and multiple followers.

1) The cluster keeps working only while more than half of its nodes are alive. If half or more of the nodes die, the cluster stops serving.

2) The data of the cluster is globally consistent: every node holds the same copy, so a client sees the same data no matter which server it connects to.

3) Updates are atomic (an update either succeeds or fails as a whole), and reads are close to real time: although there are multiple nodes, the time needed to synchronize data between them is very short.
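Point 1 comes down to simple majority arithmetic. The following is a minimal sketch, not ZooKeeper code; the class and method names are made up for illustration.

```java
// Illustrative sketch only: majority (quorum) arithmetic for a cluster of N servers.
// QuorumDemo and its methods are hypothetical names, not part of ZooKeeper.
final class QuorumDemo {

    // Smallest number of live servers that is strictly more than half.
    static int quorumSize(int clusterSize) {
        return clusterSize / 2 + 1;
    }

    // True if the cluster can still serve requests with `alive` servers up.
    static boolean canServe(int clusterSize, int alive) {
        return alive >= quorumSize(clusterSize);
    }

    // How many servers may fail before the cluster stops serving.
    static int tolerableFailures(int clusterSize) {
        return clusterSize - quorumSize(clusterSize);
    }

    public static void main(String[] args) {
        for (int n = 3; n <= 6; n++) {
            System.out.println(n + " servers: quorum=" + quorumSize(n)
                    + ", tolerates " + tolerableFailures(n) + " failure(s)");
        }
    }
}
```

Under this arithmetic a cluster of 6 tolerates no more failures than a cluster of 5, which is one reason clusters are usually built with an odd number of servers.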

Application scenarios

- Unified naming service: a running project usually has several servers deployed, and clients cannot be expected to remember their ip addresses. A name is needed instead, and ZK can help clients find a server by name.

- Unified configuration management: in distributed development, keeping configuration files in sync is a very common need. ZK acts as an observer: the configuration is written to one node, the other client servers listen to this node, and as soon as the configuration changes they learn of it.

- Unified cluster management: basically the same idea as unified configuration management. Once a node changes, the other nodes receive the change in time.

- Servers going online and offline dynamically: if one of the servers has a problem, ZK notices right away, so clients know which servers can still be accessed.

- Soft load balancing: ZK observes the load of the servers and distributes access accordingly.

Installation

JDK installation

Download jdk-8u171-linux-x64.tar.gz from the official website: http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html. The 8u171 part of the name may differ, but download the file ending in .tar.gz, which is a compressed archive.

Transfer the file to the /usr/local directory. You can create a java folder there, create a jdk folder inside it, and move the archive into that jdk folder.

Connect with Xshell, open a session, and run rpm -qa | grep java to see whether a JDK is already installed. If nothing is printed, none is installed.

If something is listed, it is probably the JDK that ships with the system. It can be removed with rpm -e xxx --nodeps, where xxx is the JDK package name printed by the previous command.

Run cd ~ to return to the home directory.

Then run cd /usr/local to enter the local directory, cd java to enter the java directory, and cd jdk to enter the directory that holds the JDK archive.

Run tar -xvf jdk-8u171-linux-x64.tar.gz to unpack the archive.

After unpacking, run rm -rf jdk-8u171-linux-x64.tar.gz to delete the archive, then ll to confirm that only the unpacked JDK is left.

Run cd jdk1.8.0_171/ to enter the JDK directory and pwd to print its full path. Copy this path; it is needed when configuring the environment below.

Run yum -y install vim to install the vim text editor.

Run vim /etc/profile to edit the environment variable configuration file. Inside the file, use the down arrow key to reach the end, press i to enter insert mode, and add the following variables at the end of the file:

JAVA_HOME=/usr/local/java/jdk1.8.0_171 # The path just copied

CLASSPATH=.:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$PATH

export JAVA_HOME CLASSPATH PATH

After making the changes, press Esc to leave insert mode, then type :wq (with the keyboard in English input mode) to save and exit the file.

After leaving the editor, run source /etc/profile so the changed configuration takes effect immediately.

Finally, run java -version and javac -version to check that the jdk was installed successfully.

A ZK learning environment usually consists of three machines, so the jdk must be installed on all three.

ZK installation

Download apache-zookeeper-3.5.7-bin.tar.gz from the official website. It is best to create a software directory under /opt on Linux and move the archive there.

Then log in with Xshell, enter this directory, and run tar -zxvf apache-zookeeper-3.5.7-bin.tar.gz -C /opt/model. This is the extraction command; the -C option names the directory to extract into, which must already exist.

After extraction, run cd /opt to enter opt, cd model to enter model, and ls to check that the extracted bin package is there.

Run mv apache-zookeeper-3.5.7-bin/ zookeeper-3.5.7-bin to rename the directory.

Run cd zookeeper-3.5.7-bin/ to enter it, then cd conf to enter the configuration directory.

Run mv zoo_sample.cfg zoo.cfg to rename the sample configuration file in conf.

Run vim zoo.cfg to open the file and find the following setting:

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/opt/model/zookeeper-3.5.7-bin/zkData

The dataDir property sets where zk stores its data. The zk documentation advises against keeping the default /tmp path, because Linux cleans /tmp from time to time and the data would be lost. So change it to a path of your own. Here I created a new directory, zkData, inside /opt/model/zookeeper-3.5.7-bin, at the same level as bin, to hold the data.

After the change, press Esc to leave insert mode, then type :wq to save and exit.

Then run cd .. to go back up one level, bin/zkServer.sh start to start the zk server, and jps -l or jps to check that it started.

Next, run bin/zkCli.sh to start the client. Once [zk: localhost:2181(CONNECTED) 0] appears, type ls / to view the current zk nodes; there is only one, zookeeper. Type quit to exit the client.

Finally, bin/zkServer.sh status shows the state of zk, and bin/zkServer.sh stop stops it. The status output looks like this:

ZooKeeper JMX enabled by default

Using config: /opt/model/zookeeper-3.5.7-bin/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: standalone

Reading zoo.cfg

# Be sure to read the maintenance section of the

# The number of milliseconds of each tick

tickTime=2000

tickTime: the heartbeat interval between servers and clients, in milliseconds.

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

initLimit: the time limit for the initial connection. The first time a follower connects to the leader, it may take at most ten heartbeats (10 × 2000 ms = 20 s) before it times out.

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

syncLimit: after the initial connection, communication between leader and follower may take at most five heartbeats (5 × 2000 ms = 10 s).

The data storage location and port number properties are omitted here.
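Because initLimit and syncLimit are counted in ticks, the real timeouts follow from multiplying by tickTime. A small sketch of that arithmetic, using the values from the file above (the class name is hypothetical):

```java
// Illustrative sketch only: converts tick-based limits from zoo.cfg into milliseconds.
// TickLimits is a made-up helper, not part of ZooKeeper.
final class TickLimits {

    // A limit expressed in ticks lasts tickTimeMs * ticks milliseconds.
    static long limitMillis(int tickTimeMs, int ticks) {
        return (long) tickTimeMs * ticks;
    }

    public static void main(String[] args) {
        int tickTime = 2000; // tickTime=2000
        int initLimit = 10;  // initLimit=10
        int syncLimit = 5;   // syncLimit=5
        System.out.println("initial connection timeout: " + limitMillis(tickTime, initLimit) + " ms");
        System.out.println("sync timeout: " + limitMillis(tickTime, syncLimit) + " ms");
    }
}
```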

Cluster distribution

In development, a cluster distribution script is used to copy zk to the other servers, so there is no need to configure each one by hand.

First, on the first zk server, create a folder named zkData inside zookeeper-3.5.7-bin to store the data.

Second, from the home directory (~), run cd /bin to enter the bin directory, then vim xsync to create the script and write the code below. The host list must be adapted to your own servers.

#2. Traverse all machines in the cluster

for host in 192.168.188.99 192.168.188.100 192.168.188.101

do

echo ==================== $host ====================

#3. Traverse all directories , Send... One by one

for file in $@

do

#4 Judge whether the file exists

if [ -e $file ]

then

#5. Get parent directory

pdir=$(cd -P $(dirname $file); pwd)

#6. Get the name of the current file

fname=$(basename $file)

ssh $host "mkdir -p $pdir"

rsync -av $pdir/$fname $host:$pdir

else

echo $file does not exist!

fi

done

done

After editing, save and leave the file. Still in the bin directory, run chmod 777 xsync to make the script executable, and it is ready to use.

Then, in the model directory, run xsync zookeeper-3.5.7-bin/. This command copies this server's zk installation, configuration included, to the other servers. The myid file in zkData is copied as well, so on each of the other servers myid must be edited to hold a different id. For example, if the original myid is 2, the following machines can be 3 and 4 in order.

Next, enter the conf directory, open zoo.cfg, and add the following configuration at the bottom:

server.2=192.168.188.99:2888:3888

server.3=192.168.188.100:2888:3888

server.4=192.168.188.101:2888:3888

server.A=B:C:D

A is the value configured in myid; zk reads this file at startup to learn which server it is. B is the address of the server. C is the port this server uses as a follower to exchange information with the leader. D is the port used for leader election: if the leader fails, the servers talk to each other over this port to elect a new one.
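To make the server.A=B:C:D layout concrete, here is a minimal sketch that splits one such line into its four parts. ServerEntry is a hypothetical class written for this article, not a ZooKeeper API, and it handles only the plain three-field form used here.

```java
// Illustrative sketch only: parses one "server.A=B:C:D" line from zoo.cfg.
// ServerEntry is a made-up class; it supports only the three-field form shown in this article.
final class ServerEntry {
    final int id;            // A: must match the number in that server's myid file
    final String host;       // B: address of the server
    final int followerPort;  // C: port for follower/leader data exchange
    final int electionPort;  // D: port for leader election

    ServerEntry(int id, String host, int followerPort, int electionPort) {
        this.id = id;
        this.host = host;
        this.followerPort = followerPort;
        this.electionPort = electionPort;
    }

    static ServerEntry parse(String line) {
        String[] keyValue = line.trim().split("=", 2);
        if (keyValue.length != 2 || !keyValue[0].startsWith("server.")) {
            throw new IllegalArgumentException("not a server entry: " + line);
        }
        int id = Integer.parseInt(keyValue[0].substring("server.".length()));
        String[] parts = keyValue[1].split(":");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected host:port:port in: " + line);
        }
        return new ServerEntry(id, parts[0], Integer.parseInt(parts[1]), Integer.parseInt(parts[2]));
    }

    public static void main(String[] args) {
        ServerEntry e = parse("server.2=192.168.188.99:2888:3888");
        System.out.println("id=" + e.id + " host=" + e.host
                + " followerPort=" + e.followerPort + " electionPort=" + e.electionPort);
    }
}
```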

Next, on the first server, run bin/zkServer.sh start from the zookeeper-3.5.7-bin directory to start zk. Running bin/zkServer.sh status now reports an error, because the other servers have not been started yet. Start the remaining servers the same way, and the election mechanism decides which one is the leader and which are followers.

Finally, the commands of the start/stop script (zk.sh, written in the Start stop script section):

zk.sh start

zk.sh status

zk.sh stop

Address mapping

Configuring address mapping lets us reach the zk servers by host name, which makes connecting to zk quicker and easier.

On Windows, open the hosts file under C:\Windows\System32\drivers\etc, which looks like this:

# Copyright (c) 1993-2009 Microsoft Corp.

#

# This is a sample HOSTS file used by Microsoft TCP/IP for Windows.

#

# This file contains the mappings of IP addresses to host names. Each

# entry should be kept on an individual line. The IP address should

# be placed in the first column followed by the corresponding host name.

# The IP address and the host name should be separated by at least one

# space.

#

# Additionally, comments (such as these) may be inserted on individual

# lines or following the machine name denoted by a '#' symbol.

#

# For example:

#

# 102.54.94.97 rhino.acme.com # source server

# 38.25.63.10 x.acme.com # x client host

# localhost name resolution is handled within DNS itself.

# 127.0.0.1 localhost

# ::1 localhost

#127.0.0.1 activate.navicat.com

192.168.188.99 hadoop1

192.168.188.100 hadoop2

192.168.188.101 hadoop3

Configure the address mapping as shown in the last three lines above.

After configuring the address mapping, replace the server entries in zoo.cfg:

server.2=hadoop1:2888:3888

server.3=hadoop2:2888:3888

server.4=hadoop3:2888:3888

You only need to make this change on one server and then distribute it with the cluster distribution script. After distributing, remember to check that the myid number in zkData on each server is still correct.

We are not done yet. The hosts file we edited is on Windows, so the names resolve only when Windows talks to the Linux machines, not when the Linux machines talk to each other. The Linux systems need the same mapping.

For example, log in to hadoop1, run vim /etc/hosts, and add the same address mapping entries as on Windows at the end of the file. After saving, use the cluster distribution script to send this file to the other servers.

Finally, note that once the Linux address mapping is in place, configuration files that previously contained ip addresses should be changed to use the mapped names for the mapping to be used.

The election mechanism

- During the first startup election, each server first votes for itself. As the second server, the third server, and so on start, each newly started server compares its id with the ids of the servers already running: the server with the smaller id changes its vote to the one with the larger id. Once a server holds votes from more than half of the servers in the cluster, it becomes the leader.

- When it is not the first startup, the election strategy changes and involves three values. SID is the server id; it is the same as myid, must be unique, and identifies a zk server. ZXID is the transaction id, which identifies a change of server state; at a given moment the ZXIDs of the machines in the cluster are not necessarily identical, depending on how far each server has processed the clients' update requests. EPOCH is the number of each leader's term; while there is no leader, the logical clock value is the same within one round of voting and increases after each round. The strategy is to compare these three values in order: first EPOCH, larger wins; if equal, compare the transaction id, larger wins; if still equal, compare myid, larger wins.
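The two rules above can be sketched in plain Java. This is a simplified model for illustration, not ZooKeeper's actual election code: ElectionDemo, Vote, and both methods are made-up names, and the first-startup part assumes servers start one at a time with no data.

```java
import java.util.Arrays;
import java.util.List;

// Illustrative sketch only: a simplified model of the two election rules described above.
final class ElectionDemo {

    static final class Vote {
        final long epoch;
        final long zxid;
        final long sid;

        Vote(long epoch, long zxid, long sid) {
            this.epoch = epoch;
            this.zxid = zxid;
            this.sid = sid;
        }
    }

    // True if vote a wins over vote b: compare EPOCH, then ZXID, then SID; larger wins.
    static boolean beats(Vote a, Vote b) {
        if (a.epoch != b.epoch) return a.epoch > b.epoch;
        if (a.zxid != b.zxid) return a.zxid > b.zxid;
        return a.sid > b.sid;
    }

    static Vote winner(List<Vote> votes) {
        Vote best = votes.get(0);
        for (Vote v : votes) {
            if (beats(v, best)) best = v;
        }
        return best;
    }

    // First startup: servers start one by one; all running servers end up voting for the
    // largest id seen so far. Returns the leader's id, or -1 if no majority was reached.
    static long firstStartupLeader(long[] sidsInStartOrder, int clusterSize) {
        long best = Long.MIN_VALUE;
        for (int started = 1; started <= sidsInStartOrder.length; started++) {
            best = Math.max(best, sidsInStartOrder[started - 1]);
            if (started > clusterSize / 2) return best; // more than half have voted for `best`
        }
        return -1;
    }

    public static void main(String[] args) {
        List<Vote> votes = Arrays.asList(new Vote(1, 8, 2), new Vote(1, 8, 3), new Vote(1, 7, 4));
        System.out.println("winner sid = " + winner(votes).sid);
        System.out.println("first startup leader = " + firstStartupLeader(new long[] {2, 3, 4}, 3));
    }
}
```

With the myid values 2, 3 and 4 used in this article and the servers started in that order, the model elects server 3: it is the largest id at the moment more than half of the three servers are running.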

Start stop script

- As with the xsync script, create a script file; here is the code of the start/stop script:

#!/bin/bash

case $1 in

"start"){

for i in hadoop102 hadoop103 hadoop104

do

echo ---------- zookeeper $i start-up ------------

ssh $i "/opt/module/zookeeper-3.5.7/bin/zkServer.sh start"

done

};;

"stop"){

for i in hadoop102 hadoop103 hadoop104

do

echo ---------- zookeeper $i stop it ------------

ssh $i "/opt/module/zookeeper-3.5.7/bin/zkServer.sh stop"

done

};;

"status"){

for i in hadoop102 hadoop103 hadoop104

do

echo ---------- zookeeper $i state ------------

ssh $i "/opt/module/zookeeper-3.5.7/bin/zkServer.sh status"

done

};;

esac

After exiting the editor, run chmod 777 followed by the script name to make the script executable. My script is named zk.sh.

Next, set a fixed java path: enter zookeeper's bin directory and open the zkEnv.sh file, then find this code:

if [[ -n "$JAVA_HOME" ]] && [[ -x "$JAVA_HOME/bin/java" ]]; then

JAVA="$JAVA_HOME/bin/java"

elif type -p java; then

JAVA=java

Above this code, set JAVA_HOME to your jdk installation path:

JAVA_HOME="/usr/local/java/jdk/jdk1.8.0_301"

After that, turn off the firewall on each server.

Then zk.sh start starts all three servers with one command, and zk.sh status checks their status. If all three have started but every one of them reports an error, first check that the id in each server's myid file matches the server.id set for it in zoo.cfg. Second, check that the address A in each server.id=A:B:C entry in zoo.cfg is the right one for that server. Finally, check that the firewall really is off; note that disabling the firewall may only take effect after a restart.

After the three servers are running, you can start the client: bin/zkCli.sh -server 192.168.188.99:2181

SSH password-free login

After configuring the start/stop script, you will find that a password must be entered every time another server is accessed, which hurts efficiency. SSH password-free login solves this.

Here we configure hadoop1, which needs to reach itself, hadoop2, and hadoop3.

First, go to your home directory (~) and run

ls -al

All files, including hidden ones, are listed. Check whether there is a .ssh directory. If there is none, this server has never used ssh to access another server; run ssh against another server's ip once and the directory will appear.

Enter the hidden directory with cd .ssh/ and you will find a file called known_hosts. cat known_hosts shows which servers this machine has connected to.

If other files are already present in the .ssh directory, such as:

-rw-------. 1 root root 393 Dec 26 03:17 authorized_keys

-rw-------. 1 root root 1679 Dec 26 03:16 id_rsa # Private key

-rw-r--r--. 1 root root 393 Dec 26 03:16 id_rsa.pub # Public key

then there is no need to run ssh-keygen -t rsa. If they are missing, run that command to generate the public and private keys.

With the key pair in place, distribute the public key from this server to every server it should be allowed to log in to (the private key stays on this machine):

ssh-copy-id hadoop1

ssh-copy-id hadoop2

ssh-copy-id hadoop3

Remember to copy it to the server itself once as well.

After that, logging in to the other servers from hadoop1 no longer requires a password.

Repeat the same steps on hadoop2 and on hadoop3, so that every server can reach every other one.

Client command line

After starting the client, the following prompt shows that it is connected:

[zk: 192.168.188.99:2181(CONNECTED) 1]

The help command lists the client commands:

addauth scheme auth

close

config [-c] [-w] [-s]

connect host:port

create [-s] [-e] [-c] [-t ttl] path [data] [acl] # Create a node. Persistent by default; -e creates a temporary node; -s appends a serial number to the node name.

delete [-v version] path # Delete a node

deleteall path # Delete a node together with all of its child nodes

delquota [-n|-b] path

get [-s] [-w] path # Get the value of a node; -s also prints the node's status, -w watches the value for changes

getAcl [-s] path

history

listquota path

ls [-s] [-w] [-R] path # List the child nodes; -w watches for changes to the children

ls2 path [watch]

printwatches on|off

quit

reconfig [-s] [-v version] [[-file path] | [-members serverID=host:port1:port2;port3[,...]*]] | [-add serverId=host:port1:port2;port3[,...]]* [-remove serverId[,...]*]

redo cmdno

removewatches path [-c|-d|-a] [-l]

rmr path

set [-s] [-v version] path data # Modify the value of the corresponding node

setAcl [-s] [-v version] [-R] path acl

setquota -n|-b val path

stat [-w] path

sync path

Running ls -s / prints the following information:

cZxid = 0x0 # Transaction id that created the node

ctime = Thu Jan 01 08:00:00 CST 1970 # Time the node was created, stored as milliseconds since 1970

mZxid = 0x0 # Transaction id of the last update to the node

mtime = Thu Jan 01 08:00:00 CST 1970 # Time the node was last modified, stored as milliseconds since 1970

pZxid = 0x0 # Transaction id of the last change to the node's children

cversion = -1 # Child version: number of changes to the node's children

dataVersion = 0 # Number of changes to the node's data

aclVersion = 0 # Number of changes to the node's access control list

ephemeralOwner = 0x0 # Session id of the owner if this is a temporary node; 0 otherwise

dataLength = 0 # Length of the node's data

numChildren = 1 # Number of child nodes
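The zxid values in this output tie back to the EPOCH and ZXID used in the election. As background that the text above does not state: a zxid is a 64-bit number whose high 32 bits hold the leader epoch and whose low 32 bits hold a counter within that epoch. A minimal sketch of that split, with hypothetical helper names:

```java
// Illustrative sketch only: splits a 64-bit zxid into epoch (high 32 bits) and counter (low 32 bits).
// ZxidDemo and its methods are made-up helpers for this article.
final class ZxidDemo {

    static long epochOf(long zxid) {
        return zxid >>> 32;
    }

    static long counterOf(long zxid) {
        return zxid & 0xffffffffL;
    }

    static long makeZxid(long epoch, long counter) {
        return (epoch << 32) | (counter & 0xffffffffL);
    }

    public static void main(String[] args) {
        long zxid = 0x200000005L; // an example value, not taken from a real server
        System.out.println("epoch=" + epochOf(zxid) + " counter=" + counterOf(zxid));
    }
}
```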

Node type

With serial number

- The node name gets a serial number appended when the node is created.

- These nodes come in two kinds. A persistent node is not deleted when the client disconnects from the server; a transient (temporary) node is deleted when the client disconnects.

Without serial number

- The node is created under exactly the name given, with no serial number. The split into persistent and transient nodes is the same.

Example

- create /hyb creates a permanent node without a serial number

- create /hyb/zyl creates a permanent node under the hyb node

- create -s /hyb creates a permanent node with a serial number

- create -s /hyb "hyb" creates a permanent node with a serial number and the value hyb

- get -s /hyb gets the value (and status) of the hyb node

- set /hyb value modifies the value of the hyb node

- ls /hyb lists the child nodes of hyb

- create -e /hyb creates a transient node hyb

- After the client is closed and started again, persistent nodes still exist, while transient nodes have been deleted
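For nodes created with -s, the serial number is appended to the requested path as a 10-digit, zero-padded counter. The sketch below only mimics that naming so the resulting names are easier to recognize; the counter itself is assigned by the server, and the class here is a made-up helper.

```java
import java.util.Locale;

// Illustrative sketch only: mimics how a serial number is appended to a node name.
// The real counter is chosen by the zk server; SequentialNameDemo is a made-up helper.
final class SequentialNameDemo {

    // Appends the counter as 10 zero-padded digits, e.g. /hyb -> /hyb0000000003.
    static String sequentialName(String path, int counter) {
        return String.format(Locale.ROOT, "%s%010d", path, counter);
    }

    // Reads the serial number back from the last 10 characters of a node name.
    static int sequenceOf(String nodeName) {
        return Integer.parseInt(nodeName.substring(nodeName.length() - 10));
    }

    public static void main(String[] args) {
        String name = sequentialName("/hyb", 3);
        System.out.println(name + " has serial number " + sequenceOf(name));
    }
}
```

Because the padding keeps all names the same length, sorting the names as strings also sorts them by serial number, which the distributed lock later relies on.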

Monitor

In zk, a client can register a listener (watch) to observe changes to certain nodes.

Monitoring data changes: get -w /node

get -w /zyl listens for changes to the data of this node. The node must already exist.

Once the data of this node changes, the client that registered the listener prints the following message: WatchedEvent state:SyncConnected type:NodeDataChanged path:/zyl

Listening for changes to child nodes: ls -w /node

ls -w /node listens for changes in the number of child nodes of this node.

Once a child node is created or deleted under this node, the client that registered the listener prints a similar message, as it does for data changes.

Both kinds of listener send notifications, but a listener fires only once: after the first notification it must be registered again to receive further changes.
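The one-time behaviour of a listener can be modelled without a zk server. The following in-memory sketch is an assumption-laden toy (OneShotWatchDemo is not a ZooKeeper class): a listener registered on a path is removed as soon as it fires, so a second change goes unnoticed unless the listener is registered again.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustrative sketch only: an in-memory toy that models one-time listeners.
final class OneShotWatchDemo {
    private final Map<String, String> data = new HashMap<>();
    private final Map<String, List<Runnable>> watches = new HashMap<>();

    // Reads a value and, if a watcher is given, registers it for the next change only.
    String get(String path, Runnable watcher) {
        if (watcher != null) {
            watches.computeIfAbsent(path, k -> new ArrayList<>()).add(watcher);
        }
        return data.get(path);
    }

    // Writes a value and fires the registered watchers, removing them first (one-shot).
    void set(String path, String value) {
        data.put(path, value);
        List<Runnable> fired = watches.remove(path);
        if (fired != null) {
            for (Runnable watcher : fired) watcher.run();
        }
    }

    public static void main(String[] args) {
        OneShotWatchDemo zk = new OneShotWatchDemo();
        int[] notifications = {0};
        zk.get("/zyl", () -> notifications[0]++);
        zk.set("/zyl", "a"); // fires the listener
        zk.set("/zyl", "b"); // no listener left, nothing fires
        System.out.println("notifications: " + notifications[0]);
    }
}
```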

API Development

Connect

The following shows how to connect to zookeeper from IDEA.

First, create a new maven project and add the following dependencies.

<dependencies>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>2.8.2</version>

</dependency>

<dependency>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

<version>3.5.7</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.13.2</version>

<scope>compile</scope>

</dependency>

</dependencies>

Then create a new class

package com.hyb.zk;

import org.apache.zookeeper.*;

import org.junit.Before;

import org.junit.Test;

public class ZkClient {

private String connectString="hadoop1,hadoop2,hadoop3";

// Session timeout, in milliseconds

private int sessionTimeOut=200000;

private ZooKeeper zooKeeper;

@Before

public void init() throws Exception {

zooKeeper = new ZooKeeper(connectString, sessionTimeOut, new Watcher() {

@Override

public void process(WatchedEvent watchedEvent) {

}

});

}

@Test

public void create()throws Exception{

// Create a node. data is the node's data; ZooDefs.Ids.OPEN_ACL_UNSAFE is the access control list, here open to everyone; the last argument is the node type, here persistent

// Note: when running this with @Test, the method that initializes the connection must be annotated with @Before rather than @Test, otherwise zooKeeper is still null and a NullPointerException is thrown

zooKeeper.create("/hyb3","hyb".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);

}

}
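The connection string is a comma-separated list of servers. The class above lists bare host names, while the later examples in this article spell out host:port pairs. A tiny sketch of building the explicit form, using a hypothetical helper:

```java
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

// Illustrative sketch only: builds a "host:port,host:port" connection string.
// ConnectStrings is a made-up helper, not part of the ZooKeeper client.
final class ConnectStrings {

    static String build(List<String> hosts, int clientPort) {
        StringJoiner joiner = new StringJoiner(",");
        for (String host : hosts) {
            joiner.add(host + ":" + clientPort);
        }
        return joiner.toString();
    }

    public static void main(String[] args) {
        // Host names and port 2181 are the ones used throughout this article.
        System.out.println(build(Arrays.asList("hadoop1", "hadoop2", "hadoop3"), 2181));
    }
}
```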

Monitor

@Test

public void getChildren()throws Exception{

// Get the children of the node. true registers the default watcher, so events go to the process() method passed in init()

// To use a custom watcher, pass in a Watcher (for example an anonymous class) instead

List<String> children = zooKeeper.getChildren("/", true);

for (String child : children) {

System.out.println(child);

}

// Keep the JVM alive so that later watch events can still be printed

Thread.sleep(Long.MAX_VALUE);

}

Then fill in the process() method in init()

@Override

public void process(WatchedEvent watchedEvent) {

System.out.println("-----------------");

// A watch fires only once, and the children are printed only for that first notification.

// So, for the watcher to keep reporting changes to the nodes, register the watch again here

List<String> children = null;

try {

children = zooKeeper.getChildren("/", true);

for (String child : children) {

System.out.println(child);

}

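// Do not sleep here: process() runs on the client's event thread, and blocking it would stop later watch events from being delivered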

} catch (KeeperException | InterruptedException e) {

e.printStackTrace();

}

System.out.println("-----------------");

}

Check whether a node exists

@Test

public void exit() throws InterruptedException, KeeperException {

// First argument: the node path; second: whether to register a watch

Stat exists = zooKeeper.exists("/hyb", false);

System.out.println(exists==null?"does not exist":"exists");

}

The process of writing data

The client sends the write request directly to the leader

Only the leader can initiate writes, so it writes the data itself first and then tells the followers to write it.

Once more than half of the servers in the cluster, the leader included, have written the data, the result is reported back to the client.

The client sends the write request to a follower

A follower cannot perform writes itself, so it forwards the request to the leader. The leader writes the data first and then tells the followers to write it. Once more than half of the servers have written the data, the leader notifies the follower that is talking to the client, and that follower reports the result back to the client.
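In both cases the client gets its answer once more than half of the servers, leader included, have written the data. A minimal sketch of that counting rule, with made-up names and no real networking:

```java
// Illustrative sketch only: the "more than half have written" rule for a write.
// WriteAckDemo is a made-up class; it counts acknowledgements and does no real I/O.
final class WriteAckDemo {

    // The leader's own write counts, plus every follower that has acknowledged.
    static boolean isCommitted(int clusterSize, int followerAcks) {
        int written = 1 + followerAcks;
        return written > clusterSize / 2;
    }

    // Smallest number of follower acknowledgements that completes the write.
    static int followerAcksNeeded(int clusterSize) {
        return clusterSize / 2;
    }

    public static void main(String[] args) {
        int clusterSize = 3; // the three servers used in this article
        for (int acks = 0; acks < clusterSize; acks++) {
            System.out.println(acks + " follower ack(s): committed=" + isCommitted(clusterSize, acks));
        }
    }
}
```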

Service dynamic online and offline

In a distributed system there can be more than one master node, and they go online and offline dynamically. Any client should be able to see, in real time, which master node servers are online.

Let's simulate this scenario: servers come online, and the client monitors their state in real time through the zk cluster.

A server coming online amounts to creating a node in zk. The client is the one listening to that node's parent.

The first is the server

package com.hyb.zk;

import org.apache.zookeeper.*;

import java.io.IOException;

public class ZkTest1 {

private ZooKeeper zooKeeper;

private String connectString="hadoop1:2181,hadoop2:2181,hadoop3:2181";

private int sessionId=2000;

public static void main(String[] args) throws Exception {

ZkTest1 zkTest = new ZkTest1();

// Get the zk connection

zkTest.getConnect();

// Register this server with zk

zkTest.register("hadoop1");

// Keep the process alive (stands in for the business logic)

zkTest.sleep();

}

private void sleep() throws InterruptedException {

Thread.sleep(Long.MAX_VALUE);

}

private void register(String hostName) throws Exception {

zooKeeper.create("/server/"+hostName,hostName.getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);

System.out.println(hostName+" go online ");

}

private void getConnect() throws IOException {

zooKeeper = new ZooKeeper(connectString, sessionId, new Watcher() {

@Override

public void process(WatchedEvent watchedEvent) {

}

});

}

}

Next is the client .

package com.hyb.zk;

import org.apache.zookeeper.*;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

public class ZkTestClient1 {

private String connectString="hadoop1:2181,hadoop2:2181,hadoop3:2181";

private int sessionId=20000;

private ZooKeeper zk;

public static void main(String[] args) throws Exception {

ZkTestClient1 zkTestClient1 = new ZkTestClient1();

// Get the connection

zkTestClient1.getConnect();

// monitor

zkTestClient1.watch();

// Business logic: keep the process alive so that watch events can be printed

zkTestClient1.sleep();

}

private void sleep() throws Exception {

Thread.sleep(Long.MAX_VALUE);

}

private void watch() throws InterruptedException, KeeperException {

// Watch the children of /server

List<String> server = zk.getChildren("/server", true);

List<String> list=new ArrayList<>();

for (String s :

server) {

// get data

byte[] data = zk.getData("/server/" + s, false, null);

list.add(new String(data));

}

System.out.println(list);

}

private void getConnect() throws IOException {

zk=new ZooKeeper(connectString, sessionId, new Watcher() {

@Override

public void process(WatchedEvent watchedEvent) {

try {

// Monitor again , Always observe node changes

watch();

} catch (Exception e) {

e.printStackTrace();

}

}

});

}

}

Test: first start the client so that it is listening, then start the server (the /server node must already exist). The server creates its node, and the client's listener prints the node changes on the console in real time.

Distributed lock

Several distributed processes want the same resource at the same time, but only one process may hold it. When that process is done, another process takes over.

Implementation of a distributed lock: each process creates a temporary node with a serial number under the same parent. The smaller the serial number, the higher the priority, and the node with the smallest number holds the resource. Every other node listens to the node directly ahead of it. When the node holding the resource is released (deleted), the next node in line checks whether its own number is now the smallest; if so, it takes the resource.
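The decision each process makes, hold the lock or wait for the node ahead, depends only on the sorted list of child names. The sketch below isolates that decision in plain Java; LockOrderDemo and nodeToWatch are hypothetical names, and no zk connection is involved.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Illustrative sketch only: decides whether a node holds the lock or which node it must watch.
final class LockOrderDemo {

    // Returns null if ownNode has the smallest serial number (it holds the lock),
    // otherwise the name of the node directly ahead of it, which it should watch.
    static String nodeToWatch(List<String> children, String ownNode) {
        List<String> sorted = new ArrayList<>(children);
        Collections.sort(sorted); // zero-padded serial numbers sort correctly as strings
        int index = sorted.indexOf(ownNode);
        if (index < 0) {
            throw new IllegalStateException(ownNode + " is not among the children");
        }
        return index == 0 ? null : sorted.get(index - 1);
    }

    public static void main(String[] args) {
        List<String> children = Arrays.asList("lock0000000003", "lock0000000001", "lock0000000002");
        for (String node : children) {
            String ahead = nodeToWatch(children, node);
            System.out.println(node + (ahead == null ? " holds the lock" : " watches " + ahead));
        }
    }
}
```

Watching only the node directly ahead, rather than the lock holder, means that a release wakes up a single waiter instead of all of them.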

Native API example

package com.hyb.zk;

import org.apache.zookeeper.*;

import java.io.IOException;

import java.nio.charset.StandardCharsets;

import java.util.Collection;

import java.util.Collections;

import java.util.List;

import java.util.concurrent.CountDownLatch;

public class DistributedLock {

private ZooKeeper zk;

private int sessionTimeout=20000;

private String connectString="hadoop1:2181,hadoop2:2181,hadoop3:2181";

// Previous node path

private String upPath;

// Latches: one waits for the connection, one waits for the previous node to be deleted

private CountDownLatch countDownLatch=new CountDownLatch(1);

private CountDownLatch upCountDownLatch=new CountDownLatch(1);

private String node;

public DistributedLock() throws Exception {

// Get the connection

zk=new ZooKeeper(connectString, sessionTimeout, new Watcher() {

@Override

public void process(WatchedEvent watchedEvent) {

// Called for every event delivered to this client

// countDownLatch: wait for the connection state

if (watchedEvent.getState()==Event.KeeperState.SyncConnected){

// Connected, so let the constructor continue

countDownLatch.countDown();

}

// upCountDownLatch: wait for the previous node

// Release it when a node is deleted and its path equals upPath, the path of the previous node

if (watchedEvent.getType()==Event.EventType.NodeDeleted&&watchedEvent.getPath().equals(upPath)){

upCountDownLatch.countDown();

}

}

});

// Wait until connected before doing anything else

countDownLatch.await();

// Determine whether the root node exists

if (zk.exists("/locks",false)==null){

// Create a permanent node if the root node does not exist

zk.create("/locks","locks".getBytes(StandardCharsets.UTF_8), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);

}

}

// Acquire the lock

public void setLock()throws Exception{

// Create a temporary node with a serial number

node = zk.create("/locks/lock", null, ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);

// Check whether this node has the smallest serial number under the root node, i.e. the highest priority

List<String> children = zk.getChildren("/locks", false);

// If there is only one child, this node acquires the lock

int size=children.size();

if (size==1){

System.out.println(children.get(0)+" Acquire the lock ");

return;

}else {

// Sort the children by name, which orders them by serial number

Collections.sort(children);

// Find the position of this node in the sorted list

// 1. Get the name of this node

String thisNode=node.substring("/locks/".length());

// 2. Get the index of thisNode

int index = children.indexOf(thisNode);

// An index of -1 means this node was not found among the children

if (index==-1){

System.out.println(" Something went wrong ");

return;

}else if (index==0){

// First in line: no need to listen, this node acquires the lock itself

System.out.println(children.get(0)+" Got the lock ");

return;

}else {

// Path of the node directly ahead of this one

upPath="/locks/"+children.get(index-1);

// Set a watch on the previous node

zk.getData(upPath,true,null);

// Block until the previous node is deleted

upCountDownLatch.await();

return;

}

}

}

// Release the lock

public void freeLock() throws Exception {

// Delete node

zk.delete(node,-1);

}

}
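The ordering step inside setLock() can be looked at in isolation, without a ZooKeeper connection. The following is a minimal sketch only: the class LockOrder and the method predecessorOf are hypothetical names that do not appear in the original code, and the child names are made-up examples of what ephemeral sequential nodes under /locks might look like.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical helper, not part of the original DistributedLock class.
class LockOrder {

    // Returns the child name that thisNode should watch, or null when
    // thisNode is first in sorted order and therefore holds the lock.
    static String predecessorOf(List<String> children, String thisNode) {
        List<String> sorted = new ArrayList<>(children);
        Collections.sort(sorted);
        int index = sorted.indexOf(thisNode);
        if (index < 0) {
            throw new IllegalStateException(thisNode + " is not a child of the lock root");
        }
        return index == 0 ? null : sorted.get(index - 1);
    }

    public static void main(String[] args) {
        // Made-up child names in the order getChildren() might return them
        List<String> children = Arrays.asList(
                "lock0000000002", "lock0000000000", "lock0000000001");
        System.out.println(predecessorOf(children, "lock0000000000"));
        System.out.println(predecessorOf(children, "lock0000000002"));
    }
}
```

Each waiting client watches only the node directly in front of it, so a release wakes up one waiter instead of all of them.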

package com.hyb.zk;

public class DistributedLockTest {

public static void main(String[] args) throws Exception {

final DistributedLock distributedLock1 = new DistributedLock();

final DistributedLock distributedLock2 = new DistributedLock();

// Create two independent threads

new Thread(new Runnable() {

@Override

public void run() {

try {

distributedLock1.setLock();

System.out.println("Thread 1 starts");

// Simulate the thread holding the lock for a while

Thread.sleep(5*1000);

distributedLock1.freeLock();

System.out.println("Thread 1 is released");

} catch (Exception e) {

e.printStackTrace();

}

}

}).start();

new Thread(new Runnable() {

@Override

public void run() {

try {

distributedLock2.setLock();

System.out.println(" Thread 2 starts ");

Thread.sleep(5*1000);

distributedLock2.freeLock();

System.out.println(" Thread 2 is released ");

} catch (Exception e) {

e.printStackTrace();

}

}

}).start();

}

}

Curator framework example

<dependency>

<groupId>org.apache.curator</groupId>

<artifactId>curator-framework</artifactId>

<version>4.3.0</version>

</dependency>

<dependency>

<groupId>org.apache.curator</groupId>

<artifactId>curator-recipes</artifactId>

<version>4.3.0</version>

</dependency>

<dependency>

<groupId>org.apache.curator</groupId>

<artifactId>curator-client</artifactId>

<version>4.3.0</version>

</dependency>

package com.hyb.zk;

import org.apache.curator.framework.CuratorFramework;

import org.apache.curator.framework.CuratorFrameworkFactory;

import org.apache.curator.framework.recipes.locks.InterProcessMutex;

import org.apache.curator.retry.ExponentialBackoffRetry;

public class CuratorLockTest {

public static void main(String[] args) {

// Create two distributed locks on the same path

final InterProcessMutex interProcessMutex1 = new InterProcessMutex(getCuratorWork(), "/locks");

final InterProcessMutex interProcessMutex2 = new InterProcessMutex(getCuratorWork(), "/locks");

// Create two unrelated threads

new Thread(new Runnable() {

@Override

public void run() {

try {

interProcessMutex1.acquire();

System.out.println("1");

interProcessMutex1.release();

} catch (Exception e) {

e.printStackTrace();

}

}

}).start();

new Thread(new Runnable() {

@Override

public void run() {

try {

interProcessMutex2.acquire();

System.out.println("2");

interProcessMutex2.release();

} catch (Exception e) {

e.printStackTrace();

}

}

}).start();

}

private static CuratorFramework getCuratorWork() {

// Retry policy after a connection failure: base sleep time 3000 ms, at most 3 retries

ExponentialBackoffRetry ebr = new ExponentialBackoffRetry(3000, 3);

// connectionTimeoutMs: connection timeout; sessionTimeoutMs: session timeout once connected

CuratorFramework build = CuratorFrameworkFactory.builder().connectString("hadoop1:2181,hadoop2:2181,hadoop3:2181")

.connectionTimeoutMs(20000)

.sessionTimeoutMs(20000)

.retryPolicy(ebr).build();

// The client starts

build.start();

System.out.println("zk started successfully!");

return build;

}

}

Enterprise interview questions

Q: What is ZooKeeper's election mechanism?

On first startup: each server votes for itself first and then exchanges votes with the servers that are already running. A server with a smaller myid changes its vote to the server with the larger myid. As soon as one server holds more than half of the votes of the whole cluster, it becomes the leader.
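The first-startup case can be walked through with a small simulation. This is a simplified sketch and not ZooKeeper's actual election code: it assumes servers with myid 1..n start one at a time, that every running server immediately adopts the largest myid it has seen, and the class name FirstElection is hypothetical.

```java
// Simplified model of the first election, not ZooKeeper's implementation.
class FirstElection {

    // Servers with myid 1..clusterSize start one after another.
    // Returns the myid of the server that becomes leader.
    static int electLeader(int clusterSize) {
        int quorum = clusterSize / 2 + 1;       // more than half of the cluster
        int[] votes = new int[clusterSize + 1]; // votes[id] = myid that server id votes for
        for (int started = 1; started <= clusterSize; started++) {
            votes[started] = started;           // the new server votes for itself
            for (int id = 1; id < started; id++) {
                votes[id] = started;            // smaller myids switch to the larger myid
            }
            int count = 0;
            for (int id = 1; id <= started; id++) {
                if (votes[id] == started) {
                    count++;
                }
            }
            if (count >= quorum) {
                return started;                 // majority reached: leader elected
            }
        }
        throw new IllegalArgumentException("clusterSize must be at least 1");
    }

    public static void main(String[] args) {
        System.out.println(electLeader(5)); // prints 3
    }
}
```

In this model, with five servers the third one to start already collects three votes, so servers 4 and 5 join as followers even though their myid is larger.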

When the election is not the first one after startup, the strategy changes. Three values are involved:

- SID: the server ID, identical to myid. It must not repeat and uniquely identifies a server in the ZooKeeper cluster.

- ZXID: the transaction ID, which identifies a change of server state. At a given moment the ZXIDs of the machines in the cluster are not necessarily identical; this depends on how each server has processed the clients' update requests.

- EPOCH: the number of each leader's term of office. While there is no leader, the logical clock value is the same for all votes in one round, and it increases after each round of voting.

The votes are compared on these three values in order: first EPOCH, the larger wins; if equal, ZXID, the larger wins; if still equal, SID, the larger wins.
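The comparison order can be written as a comparator. This is a minimal sketch under stated assumptions: the class Vote and its fields are hypothetical names chosen for illustration, not ZooKeeper's internal classes, and the sample values are made up.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

// Hypothetical value class for illustration; not ZooKeeper's internal Vote type.
class Vote {
    final long epoch;
    final long zxid;
    final long sid;

    Vote(long epoch, long zxid, long sid) {
        this.epoch = epoch;
        this.zxid = zxid;
        this.sid = sid;
    }

    // Larger EPOCH wins; on a tie larger ZXID wins; on a further tie larger SID wins.
    static final Comparator<Vote> ORDER =
            Comparator.comparingLong((Vote v) -> v.epoch)
                    .thenComparingLong(v -> v.zxid)
                    .thenComparingLong(v -> v.sid);

    static Vote winner(List<Vote> votes) {
        return Collections.max(votes, ORDER);
    }

    public static void main(String[] args) {
        // Made-up (EPOCH, ZXID, SID) values for three surviving servers
        List<Vote> votes = Arrays.asList(
                new Vote(1, 8, 1), new Vote(1, 8, 2), new Vote(1, 7, 4));
        System.out.println(winner(votes).sid); // prints 2
    }
}
```

Server 4 has the largest SID but loses because its ZXID is smaller, which keeps the server with the most recent data in front.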

How many ZooKeeper servers should a production cluster have? Not too many: more servers improve reliability, but they also increase communication latency.

Rules of thumb from experience (application servers : ZooKeeper servers):

- 10 servers: 3 ZooKeeper servers

- 20 servers: 5 ZooKeeper servers

- 100 servers: 11 ZooKeeper servers

- 200 servers: 11 ZooKeeper servers
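The reliability side of this trade-off follows from the majority rule: the cluster keeps working only while more than half of its servers are alive. A minimal sketch of that arithmetic, with the hypothetical class name QuorumMath:

```java
// Majority arithmetic for an ensemble of n servers (illustrative helper).
class QuorumMath {

    // Smallest number of servers that forms a majority
    static int quorumSize(int n) {
        return n / 2 + 1;
    }

    // Largest number of servers that can fail while a majority survives
    static int toleratedFailures(int n) {
        return (n - 1) / 2;
    }

    public static void main(String[] args) {
        for (int n : new int[] {3, 4, 5, 11}) {
            System.out.println(n + " servers: quorum " + quorumSize(n)
                    + ", tolerates " + toleratedFailures(n) + " failures");
        }
    }
}
```

Four servers tolerate no more failures than three, which is one reason the sizes above are all odd.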

边栏推荐

猜你喜欢

怎么做好项目管理?学会这4个步骤就够了

![[software tool] the hacker matrix special effect software CMatrix](/img/d3/bbaa3dfd244a37f0f8c6227db37257.jpg)

[software tool] the hacker matrix special effect software CMatrix

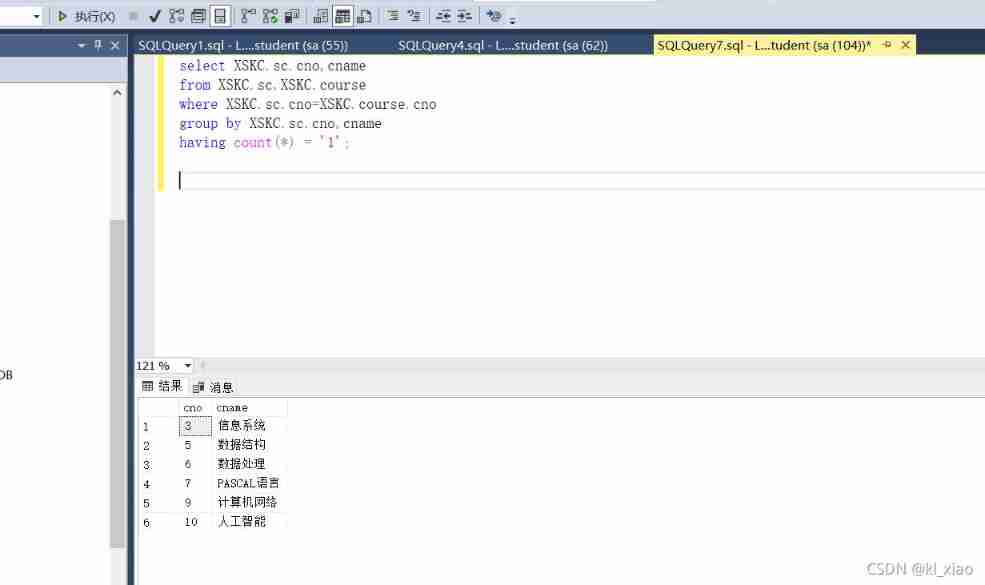

Introduction to database system experiment report answer Experiment 6: advanced query of data table

Method summary of creating deep learning model with keras/tensorflow 2.9

Bubble sorting with C language

Mongodb--- automatically delete expired data using TTL index

Empty difference between postgrepsql and Oracle

嵌入式软件面试问题总结

不想项目失控?你需要用对项目管理工具

How CSDN reports plagiarized articles

随机推荐

Selenium click the floating menu and realize the functions of right mouse button

Typescript configuring ts in koa and using koa router

Don't want the project out of control? You need to use project management tools

Mongodb--- automatically delete expired data using TTL index

How to do a good job in project management? Learning these four steps is enough

The difference between equals and = =

Web design and website planning assignment 12 online registration form

TypeScript-类和接口、类和泛型、接口合并现象

Record a murder case caused by ignoring the @suppresslint ("newapi") prompt

进程控制:进程等待(回收子进程)

DAMENG 数据库启停

In an activity, view postdelay will cause memory leakage, but will not affect the life cycle execution of the activity.

Dameng database login

How to find the complementary sequence of digraph

BFS on tree (tree breathing first search)

Qiao NPMS: get the download volume of NPM packages

Use special characters to splice strings "+“

How CSDN reports plagiarized articles

TypeScript-null和undefined

torch. unbind()