
【AAAI 2021】Few-Shot One-Class Classification via Meta-Learning 【FSOCC via Meta-learning】

2022-06-26 09:23:00 【_ Summer tree】

Background knowledge

One-class classification

Learning a binary classifier with data from only one class. Idea: this can be used for anomaly detection.

The anomaly detection (AD) task

The AD task (Chandola, Banerjee, and Kumar 2009; Aggarwal 2015) consists in differentiating between normal and abnormal data samples.

AD problems are usually formulated as one-class classification (OCC) problems (Moya, Koch, and Hostetler 1993), where either only a few or no anomalous data samples are available for training the model (Khan and Madden 2014).
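To make the OCC setting concrete, here is a minimal sketch of a one-class detector that is fitted on normal samples only. It is a toy centroid-plus-threshold model chosen for illustration, not a method from the paper; the names `fit_one_class`, `is_anomaly`, and the `quantile` parameter are hypothetical.

```python
import math
import random


def fit_one_class(normal_samples, quantile=0.95):
    """Fit a centroid and a distance threshold using normal samples only."""
    dim = len(normal_samples[0])
    count = len(normal_samples)
    centroid = [sum(x[i] for x in normal_samples) / count for i in range(dim)]
    distances = sorted(math.dist(x, centroid) for x in normal_samples)
    # Threshold: the distance below which roughly `quantile` of the
    # training samples fall (an assumed, illustrative decision rule).
    index = min(count - 1, int(quantile * count))
    return centroid, distances[index]


def is_anomaly(x, centroid, threshold):
    """Flag a sample as anomalous if it lies farther away than the threshold."""
    return math.dist(x, centroid) > threshold


random.seed(0)
# Training data contains the normal class only: no anomalies are seen.
normal = [(random.gauss(0.0, 1.0), random.gauss(0.0, 1.0)) for _ in range(200)]
centroid, threshold = fit_one_class(normal)

print(is_anomaly((0.1, -0.2), centroid, threshold))  # a point near the normal data
print(is_anomaly((8.0, 8.0), centroid, threshold))   # a point far from the normal data
```

With 200 training samples this simple rule is workable; the few-shot setting discussed in these notes is the case where only a handful of normal samples are available, which is where such classical fitting becomes unreliable.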

Contents summary

Our work addresses the few-shot OCC problem and presents a method to modify the episodic data sampling strategy of the model-agnostic meta-learning (MAML) algorithm to learn a model initialization particularly suited for learning few-shot OCC tasks.

We provide a theoretical analysis that explains why our approach works in the few-shot OCC scenario, while other meta-learning algorithms fail, including the unmodified MAML.

Our experiments on eight datasets from the image and time-series domains show that our method leads to better results than classical OCC and few-shot classification approaches, and demonstrate the ability to learn unseen tasks from only a few normal-class samples.

We successfully train anomaly detectors for a real-world application on sensor readings recorded during industrial manufacturing of workpieces with a CNC milling machine, by using few normal examples.

Contributions

- Firstly, we show that classical OCC approaches fail in the few-shot data regime.

- Secondly, we provide a theoretical analysis showing that classical gradient-based meta-learning algorithms do not yield parameter initializations suitable for OCC and that second-order derivatives are needed to optimize for such initializations.

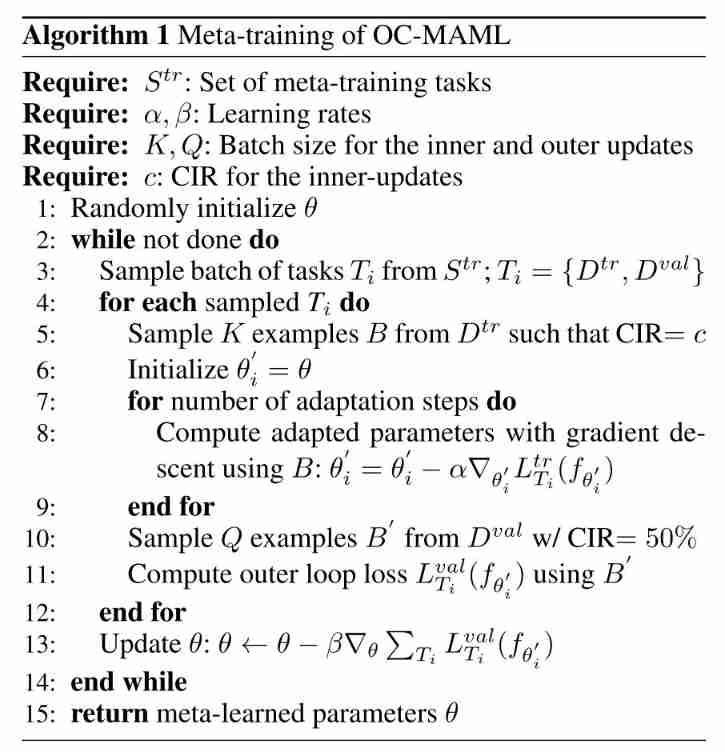

- Thirdly, we propose a simple episode generation strategy to adapt any meta-learning algorithm that uses a bi-level optimization scheme to FS-OCC. Hereby, we first focus on modifying the model-agnostic meta-learning (MAML) algorithm (Finn, Abbeel, and Levine 2017) to learn initializations useful for the FS-OCC scenario. The resulting One-Class MAML (OC-MAML) maximizes the inner product of loss gradients computed on one-class and class-balanced minibatches, hence maximizing the cosine similarity between these gradients.

- Finally, we demonstrate that the proposed data sampling technique generalizes beyond MAML to other meta-learning algorithms, e.g., MetaOptNet (Lee et al. 2019) and Meta-SGD (Li et al. 2017), by successfully adapting them to the understudied FS-OCC.
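The inner-product statement in the third contribution can be illustrated with a first-order Taylor expansion. This is a sketch under assumed notation that does not appear in these notes: $\theta$ is the initialization, $\alpha$ the inner-loop learning rate, $L_S$ the loss on the one-class minibatch, $L_Q$ the loss on the class-balanced minibatch, $H_S$ and $H_Q$ the corresponding Hessians, and a single inner gradient step is taken.

```latex
% Outer-loop loss after one inner gradient step, expanded around \theta
L_Q\bigl(\theta - \alpha \nabla L_S(\theta)\bigr)
  = L_Q(\theta)
  - \alpha \, \nabla L_Q(\theta)^{\top} \nabla L_S(\theta)
  + O(\alpha^{2})

% Gradient of the inner-product term with respect to \theta
\nabla_{\theta} \bigl( \nabla L_Q(\theta)^{\top} \nabla L_S(\theta) \bigr)
  = H_Q(\theta) \, \nabla L_S(\theta) + H_S(\theta) \, \nabla L_Q(\theta)
```

Under these assumptions, minimizing the left-hand side also pushes the inner product $\nabla L_Q^{\top} \nabla L_S$ upward, and the gradient of that term involves Hessians. This is consistent with the second contribution, which states that second-order derivatives are needed to optimize for such initializations.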

The main method

Algorithm description
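Based on the contributions listed above, the modified episode sampling can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that the inner-loop (support) minibatch contains only normal-class samples while the outer-loop (query) minibatch is class-balanced. The function `sample_oc_episode`, its parameters, and the placeholder string data are hypothetical.

```python
import random


def sample_oc_episode(normal, anomalous, k_shot, query_per_class, rng):
    """Return (support, query) lists of (sample, label) pairs for one task.

    support: k_shot samples from the normal class only (label 0).
    query: query_per_class normal samples (label 0) plus
           query_per_class anomalous samples (label 1).
    """
    # Draw support and query normals together so that they never overlap.
    normal_pick = rng.sample(normal, k_shot + query_per_class)
    support = [(x, 0) for x in normal_pick[:k_shot]]
    query = [(x, 0) for x in normal_pick[k_shot:]]
    query += [(x, 1) for x in rng.sample(anomalous, query_per_class)]
    rng.shuffle(query)
    return support, query


rng = random.Random(0)
# Placeholder data pools standing in for one meta-training task.
normal_pool = [f"normal_{i}" for i in range(50)]
anomalous_pool = [f"anomalous_{i}" for i in range(50)]

support, query = sample_oc_episode(
    normal_pool, anomalous_pool, k_shot=5, query_per_class=10, rng=rng
)
print(len(support), len(query))
```

In a bi-level meta-learning loop, the model would be adapted on `support` and the adapted model evaluated on `query`, so the initialization is trained to work well after seeing only normal samples.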

边栏推荐

- Self learning neural network series - 8 feedforward neural networks

- Application of hidden list menu and window transformation in selenium

- Self taught programming series - 1 regular expression

- How to compile builds

- 行為樹XML文件 熱加載

- MySQL cannot be found in the service (not uninstalled)

- 挖财打新债安全吗

- "One week to solve the model electricity" - negative feedback

- Behavior tree XML file hot load

- Self taught neural network series - 9 convolutional neural network CNN

猜你喜欢

Yolov5 results Txt visualization

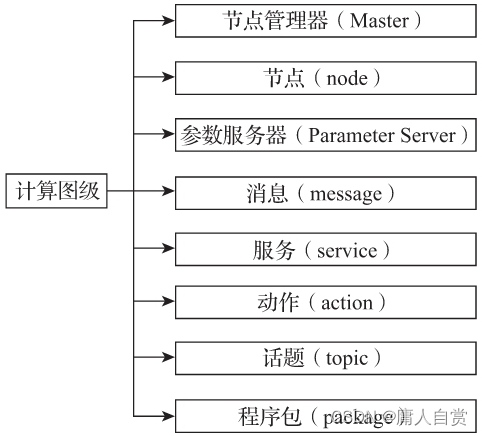

简析ROS计算图级

《一周搞定模电》—负反馈

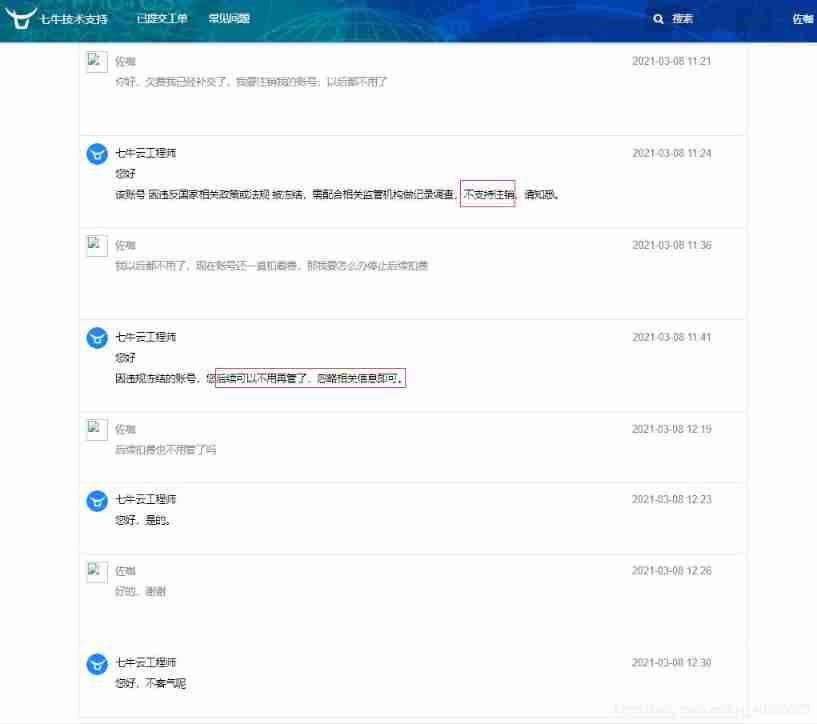

Cancellation and unbinding of qiniu cloud account

【CVPR 2021】Intra-Inter Camera Similarity for Unsupervised Person Re-Identification (IICS++)

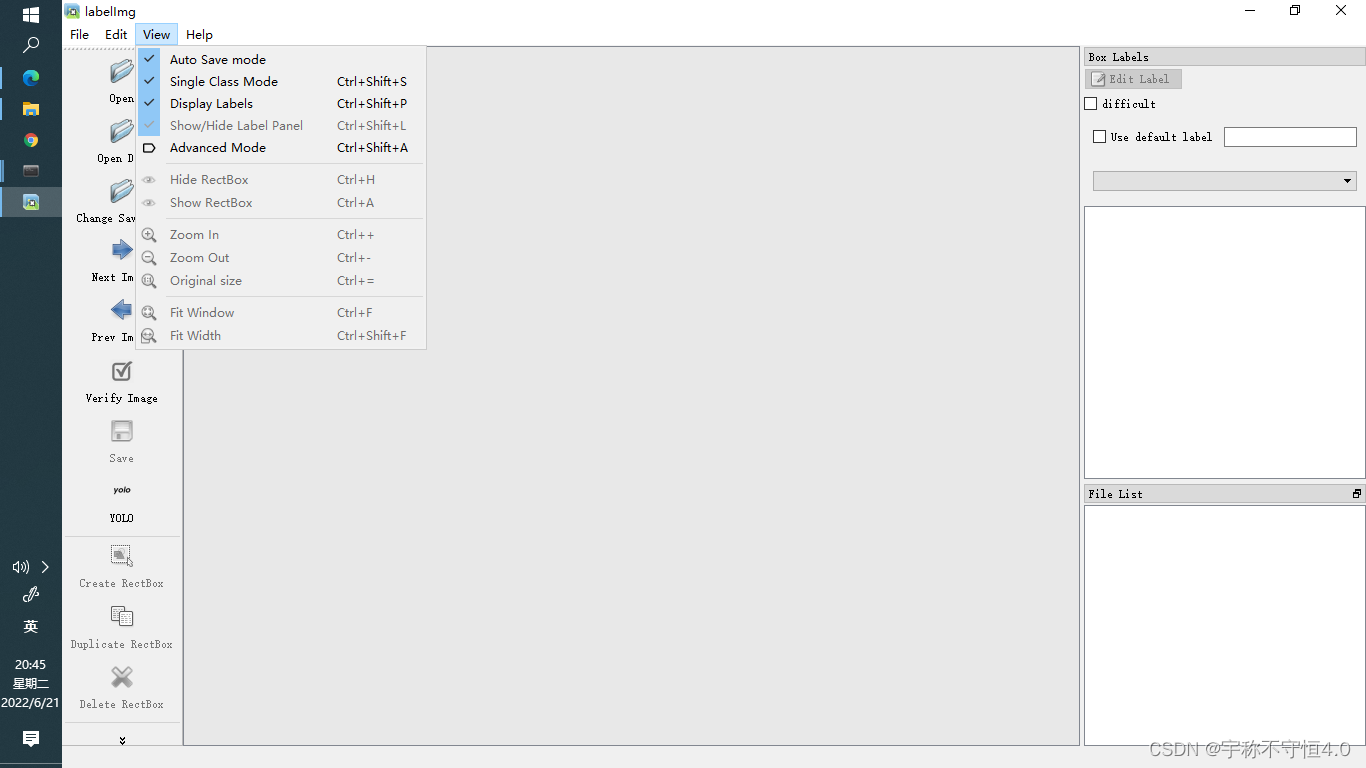

Yolov5 advanced level 2 installation of labelimg

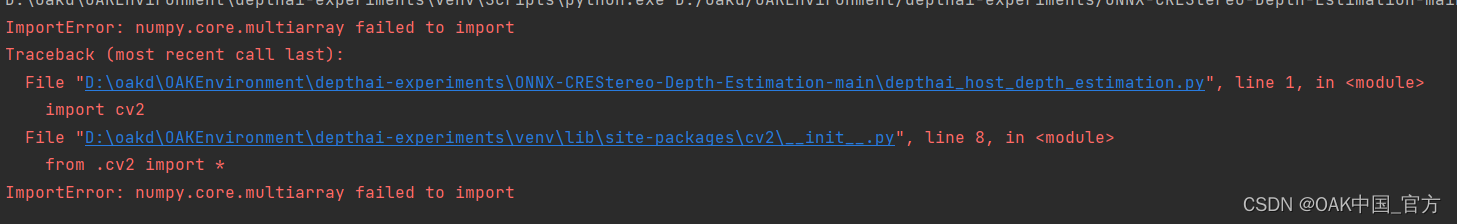

Error importerror: numpy core. multiarray failed to import

Tutorial 1:hello behavioc

教程1:Hello Behaviac

《单片机原理及应用》——概述

随机推荐

《一周搞定模电》—集成运算放大器

Param in the paper

Cancellation and unbinding of qiniu cloud account

Collection object replication

How to compile builds

PD fast magnetization mobile power supply scheme

Application of hidden list menu and window transformation in selenium

Self taught machine learning series - 1 basic framework of machine learning

MySQL cannot be found in the service (not uninstalled)

Is it safe to dig up money and make new debts

Notes on setting qccheckbox style

全面解读!Golang中泛型的使用

JSON file to XML file

"One week to finish the model electricity" - 55 timer

Phpcms V9 mobile phone access computer station one-to-one jump to the corresponding mobile phone station page plug-in

php提取txt文本存储json数据中的域名

51 single chip microcomputer ROM and ram

ThreadLocal

Principe et application du micro - ordinateur à puce unique - Aperçu

What is optimistic lock and what is pessimistic lock