Interface test - knowledge points and common interview questions

2022-07-04 15:47:00 【xjChenM】

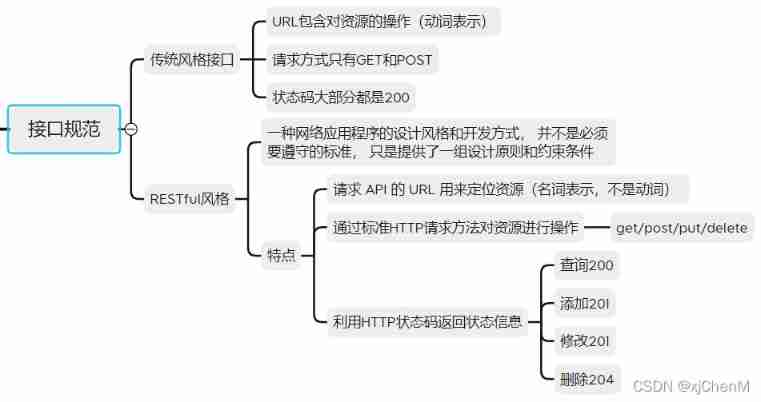

The interface specification

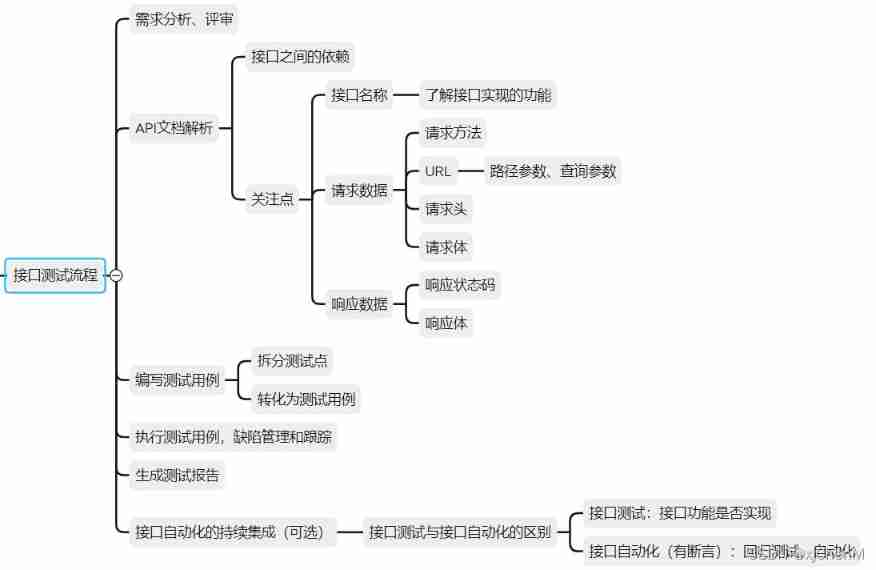

Interface test process

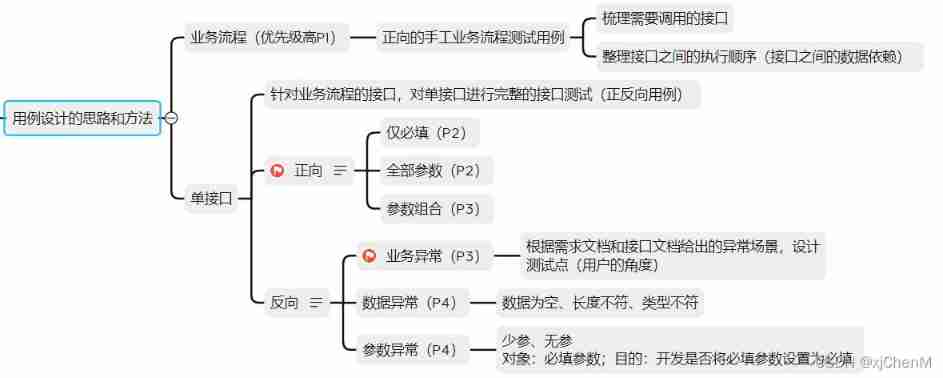

Test case design ideas and methods

Operating the database with pymysql

# Import pymysql
import pymysql

# Establish a connection
conn = pymysql.connect(host='ip', port=3306, user='username', password='password', database='database_name', charset='utf8')

# Obtain a cursor
cursor = conn.cursor()

# Execute a query statement; execute() returns the number of affected rows
cursor.execute('select version();')

# View the query results (each fetch consumes rows from the same result set)
resp = cursor.fetchall()    # all remaining rows
resp = cursor.fetchone()    # the next row
resp = cursor.fetchmany(6)  # the next 6 rows

# Close the cursor and the connection objects
cursor.close()
conn.close()

# Execute a DML statement (assumes conn and cursor were created as above and are still open)
import logging

try:
    # Parameterized query: pymysql substitutes %s safely
    n = cursor.execute("delete from table_name where id = %s", ('1',))
except Exception as e:
    # An exception occurred: roll back the transaction
    logging.info(e)
    conn.rollback()
else:
    # No exception: commit the transaction
    conn.commit()
finally:
    # Close the cursor and the connection objects
    cursor.close()
    conn.close()

Reading a JSON file

import json

# Written as a plain function; inside a utility class it would be a @classmethod taking cls
def param_data(path):
    '''
    Parse a JSON file for parameterization.
    :param path: path to the JSON file
    :return: a list of tuples [(), (), ...]
    '''
    with open(path, 'r', encoding='utf-8') as f:
        json_data = json.load(f)
    json_list = []
    for i in json_data:
        # Each JSON object becomes one tuple of its values
        json_list.append(tuple(i.values()))
    return json_list

Reading an XLSX file

import json
from openpyxl import load_workbook

# Written as a plain function; inside a utility class it would be a @classmethod taking cls
def read_xlsx(file_path, sheet_name):
    '''
    :param file_path: path to the xlsx file
    :param sheet_name: name of the worksheet (the tab at the bottom of the workbook)
    :return: [(), (), (), ...]
    '''
    wb = load_workbook(file_path)
    sheet = wb[sheet_name]  # get_sheet_by_name() is deprecated in openpyxl
    case_data = []
    # Row 1 holds the headers, so data starts at row 2
    for i in range(2, sheet.max_row + 1):
        # Column C: title, K: request parameters (JSON), L: status code, M: expected result (JSON)
        tuple_data = (sheet[f'C{i}'].value, json.loads(sheet[f'K{i}'].value),
                      sheet[f'L{i}'].value, json.loads(sheet[f'M{i}'].value))
        case_data.append(tuple_data)
    return case_data

Using Requests

Import the library

import requests

Send a request (likewise requests.get, requests.put, requests.delete)

resp = requests.post(url, params=query_params, data=form_body, json=json_body, headers=request_headers, cookies=cookies)

Request for a multipart form (file upload):

resp = requests.post(url, data=form_body, files={'field_name': open('file_path', 'rb')})

Reading the response

Status code

resp.status_code

Character encoding of the response (taken from the response headers)

resp.encoding

Response header information

resp.headers

Cookie information

resp.cookies

Request URL

resp.url

Response body as text (e.g. a web page)

resp.text

Response body parsed as JSON

resp.json()

Response body as bytes

resp.content

resp.content.decode('utf-8')
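A minimal sketch of how the keyword arguments above end up in the request, assuming the requests package is installed. It builds the request with requests.Request(...).prepare() instead of sending it, so no server is needed; the URL and field values are hypothetical.

```python
import json

import requests

# Build a request without sending it, so we can inspect how each keyword
# argument is encoded. The URL and field values are hypothetical placeholders.
req = requests.Request(
    "POST",
    "http://example.com/api/login",
    params={"page": "1"},                                  # becomes ?page=1 in the URL
    json={"mobile": "13800000002", "password": "123456"},  # serialized as the JSON body
    headers={"X-Demo": "yes"},                             # sent as a request header
)
prepared = req.prepare()

sent_body = json.loads(prepared.body)  # json.loads accepts both str and bytes
print(prepared.url)                      # http://example.com/api/login?page=1
print(prepared.headers["Content-Type"])  # application/json
print(sent_body)
```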

Token mechanism

Obtain the token, then carry it in the request header:

headers_data = {
    'Authorization': token_value
}

requests.post(url, json=json_body, headers=headers_data)
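A small sketch, assuming requests is installed and a hypothetical token value: instead of passing headers= on every call, the token can be set once on a requests.Session, whose headers are merged into every request it sends. Session.prepare_request() is used here only to inspect the merged headers without network access.

```python
import requests

# Hypothetical token, e.g. extracted earlier from a login response via resp.json().
token = "Bearer abc123"

session = requests.Session()
# Headers set on the session are merged into every request it sends,
# so the token only has to be attached once.
session.headers.update({"Authorization": token})

# prepare_request() applies the session's headers without sending anything,
# which shows what session.post(...) would actually send.
prepared = session.prepare_request(
    requests.Request("POST", "http://example.com/api/order", json={"goods_id": 1})
)
print(prepared.headers["Authorization"])  # Bearer abc123
session.close()
```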

Session + Cookies mechanism

Method 1:

Send the request, then get the cookies from the response

response.cookies

Pass those cookies to later requests

requests.post(url, cookies=cookies_info)

Method 2: send requests through a Session object

1. Create a Session instance

session = requests.Session()

2. Send all related requests through the session (it carries the cookies automatically)

resp = session.post('xxx')

3. Close the session

session.close()

Logging initialization and configuration

Log levels

Only messages at or above the configured level are displayed:

DEBUG

INFO

WARNING

ERROR

CRITICAL

How it works internally

Create a logger object

import logging
import logging.handlers

logger = logging.getLogger()

Set the log level

logger.setLevel(logging.INFO)

Create handler objects

Output to the console

st = logging.StreamHandler()

Output to a log file, rotated at midnight, keeping 7 backups

fh = logging.handlers.TimedRotatingFileHandler('a.log', when='midnight', interval=1, backupCount=7, encoding='utf-8')

Create a formatter

fmt = "%(asctime)s %(levelname)s [%(filename)s(%(funcName)s:%(lineno)d)] - %(message)s"
formatter = logging.Formatter(fmt)

Set the formatter on each handler

st.setFormatter(formatter)
fh.setFormatter(formatter)

Add the handlers to the logger

logger.addHandler(st)
logger.addHandler(fh)

Logging calls

logging.debug('debug')  # not displayed while the level is INFO

Dubbo interface testing

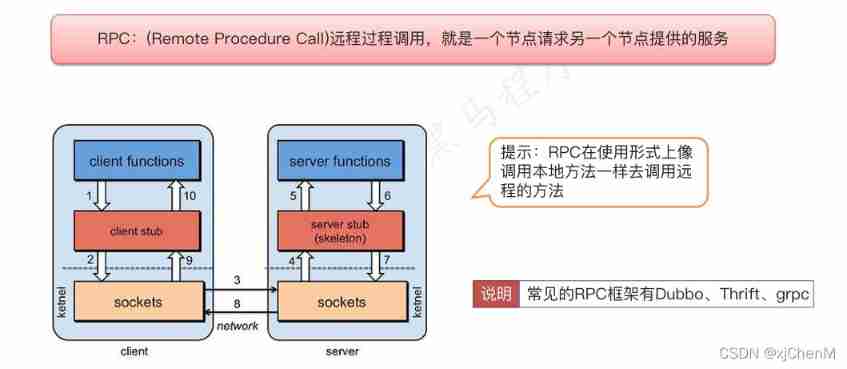

Concepts

RPC protocol

Dubbo framework

What is Dubbo?

Dubbo is a high-performance, lightweight, open-source RPC framework based on Java.

Why use Dubbo?

It is open source and widely used.

Core business logic is split out into services that communicate through internal interfaces, which makes the business more flexible.

Distributed deployment improves concurrency.

Calling a Dubbo interface via telnet

Connect to the service

telnet IP port

List the services

ls

Show the methods of a given service

ls -l service_name

Call a service method

invoke service_name.method_name(parameters)

Calling a Dubbo interface from Python

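Create a telnet instance

import telnetlib

telnet = telnetlib.Telnet(host, port)

Call the interface (the trailing newline submits the command)

telnet.write('invoke service_name.method_name(parameter_list)\n'.encode())

Read the response data

telnet.read_until('dubbo>'.encode())

Note: telnetlib was deprecated in Python 3.11 and removed in Python 3.13, so this approach needs an older interpreter or a replacement library.

Third-party interface testing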

Interface Mock

Definition:

Writing an interface the way a developer would (a virtual interface)

Interface Mock testing

Use case: when testing the interfaces of a business process, one of the interfaces is unavailable. To keep the process executable, mock the interface that cannot be called.

Common reasons the team cannot get a working interface:

1. Development is not finished

2. It is a third-party interface (in the test environment)

Should the mocked interface itself get a dedicated single-interface test? No.

Implementing a Mock service in code

Tool: flask

Install: pip install flask

Confirm the installation: pip show flask

Steps to implement a Mock service in code

1. Import flask

2. Create an application object with Flask

3. Define the interface's request method and request path

4. Define the response body data the interface returns

5. Start the web service (run the application object)

Code implementation -Mock(ihrm Login interface )

from flask import Flask, json

# Create application objects

app = Flask(__name__)

# Set up Mock Interface request data ( Request method , And the path )

@app.route("/login", methods=['get', 'post'])

# Implement the business logic of the interface in the function , The return result of the function (return), by Mock Response body data of the interface

def login():

data = {"success": True, "code": 10000, "message": " Successful operation !", "data":"1233333333"}

return json.jsonify(data)

# call app Configuration object , Use run Method , Start writing Mock Interface

if __name__ == '__main__':

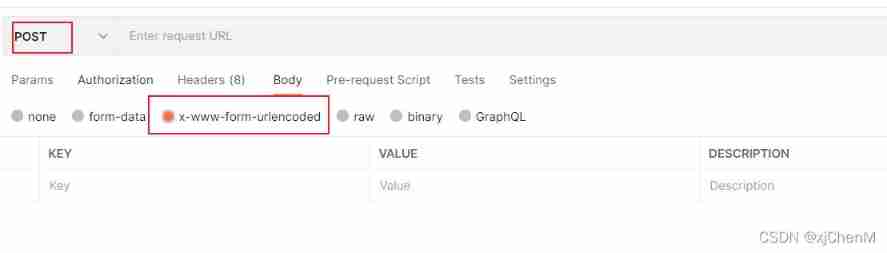

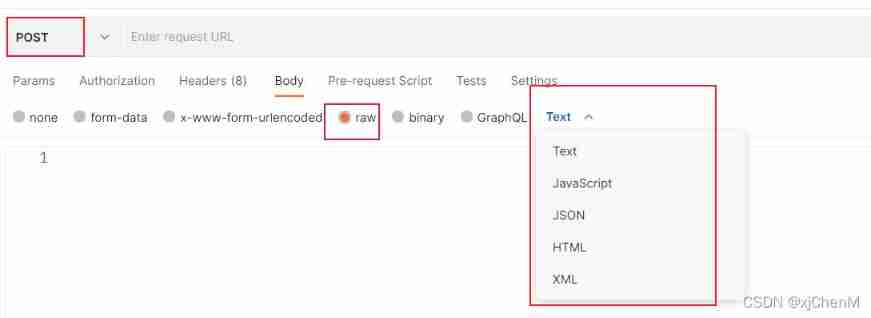

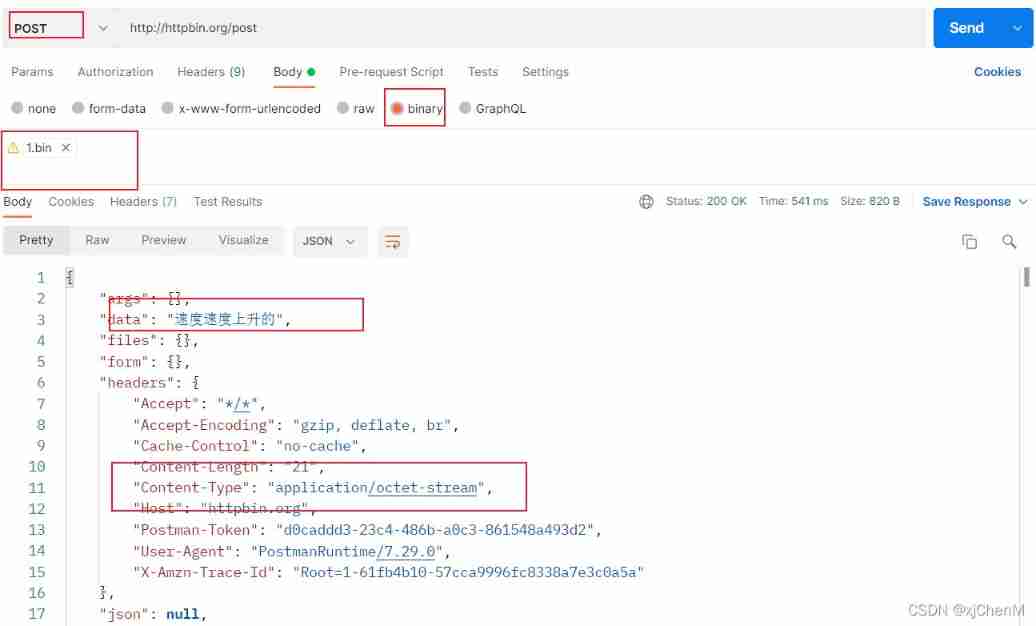

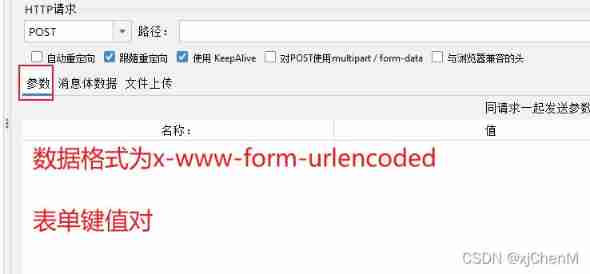

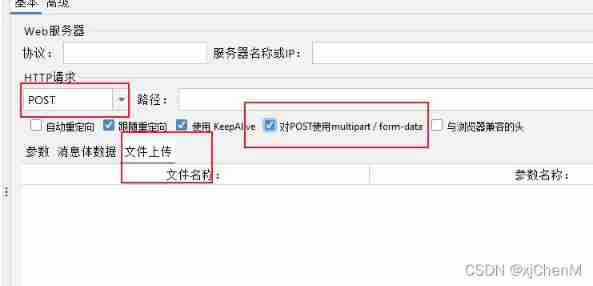

app.run()Postman Supported request body data types

1.form-data

multipart/form-data, It organizes the data of the form into Key-Value form ;

When the uploaded field is a file , There will be content-type To describe the type of document ;content-disposition, Some information used to describe the field ;

Because of boundary Isolation , therefore multipart/form-data You can upload files , You can also upload key value pairs , It takes the form of key value pairs , So you can upload multiple files .

2.x-www-form-urlencoded

application/x-www-from-urlencoded, Convert the data in the form to Key-Value, Only key value pairs can be uploaded , Cannot be used for file upload .

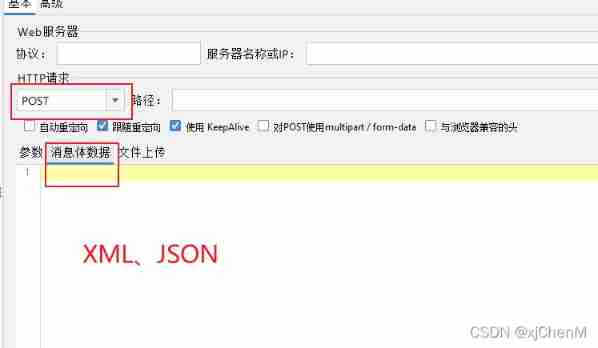

3.raw

transmission txt,JavaScript,json,xml,html The data of

4.binary

It means that only binary data can be uploaded , To upload files , You can only upload at one time 1 Data
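To see the difference between x-www-form-urlencoded and form-data outside Postman, the following sketch (assuming the requests package; the endpoint and field names are hypothetical) prepares two requests without sending them and inspects the Content-Type and body that requests generates for each.

```python
import requests

URL = "http://example.com/upload"  # hypothetical endpoint; nothing is sent

# data= alone -> application/x-www-form-urlencoded (key-value pairs only)
form = requests.Request("POST", URL, data={"name": "tom", "age": "18"}).prepare()
print(form.headers["Content-Type"])  # application/x-www-form-urlencoded
print(form.body)                     # name=tom&age=18

# data= plus files= -> multipart/form-data: key-value pairs and files in one
# body, with each part separated by a boundary string
multipart = requests.Request(
    "POST",
    URL,
    data={"name": "tom"},
    files={"avatar": ("a.txt", b"hello", "text/plain")},
).prepare()
print(multipart.headers["Content-Type"])  # multipart/form-data; boundary=<random string>
```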

Request body data types supported by JMeter

1. Form key-value pairs

2. XML, JSON

3. Files

Interface testing with Postman

Variable priority

Local > Data > Environment > Collection > Global (the narrowest scope wins)

Assertions

Status code assertion

pm.test("Status code is 200", function () {
    pm.response.to.have.status(200);
});

Body contains a given string

pm.test("Body matches success", function () {
    pm.expect(pm.response.text()).to.include("success");
});

JSON data assertion

pm.test("response json value check", function () {
    var jsonData = pm.response.json();
    pm.expect(jsonData.success).to.eql(true);
    pm.expect(jsonData.code).to.eql(10000);
    // Greater than: pm.expect(jsonData.items.length).to.above(4);
    // Less than: pm.expect(jsonData.items.length).to.below(6);
    // Not equal to: pm.expect(jsonData.items.length).to.not.eql(6);
});

Correlation

1. In the Tests (post-request) script, set an environment or global variable:

var jsonData = pm.response.json();
pm.environment.set("token", jsonData.token);

2. Outside the code areas, reference it with {{variable_name}}:

{"token": "{{token}}"}

Batch execution of test cases

Run the collection with the collection runner (RUN).

Parameterization

Purpose: separate the test data from the test script to make interface testing more efficient.

Data files

csv: does not support Boolean values or complex data types; the data format is simple

json: supports Boolean values and complex data types; the data format is more complex
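Postman parses data files itself, so this is only an illustration, using Python's standard library, of why the CSV/JSON difference matters: a CSV reader returns every value as a string, while JSON keeps real Booleans. The rows are hypothetical.

```python
import csv
import io
import json

# The same two test rows, once as CSV text and once as JSON text (hypothetical data).
CSV_TEXT = "mobile,success\n13800000002,true\n13800000003,false\n"
JSON_TEXT = (
    '[{"mobile": "13800000002", "success": true},'
    ' {"mobile": "13800000003", "success": false}]'
)

csv_rows = list(csv.DictReader(io.StringIO(CSV_TEXT)))
json_rows = json.loads(JSON_TEXT)

# CSV has no types: "false" comes back as a non-empty, therefore truthy, string.
print(type(csv_rows[1]["success"]).__name__, bool(csv_rows[1]["success"]))   # str True
# JSON keeps real Booleans (and nested lists/objects).
print(type(json_rows[1]["success"]).__name__, bool(json_rows[1]["success"]))  # bool False
```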

Referencing the data file

In request parameters: {{key}}

In scripts (via pm's built-in data object): data.key

Note

The data file only takes effect when you run the collection with RUN and select the data file.

Generating test reports

1. Export the collection

2. Run: newman run collection_file -e environment_file -d test_data_file -r html --reporter-html-export report.html

Interface testing with JMeter

Initial settings

setUp Thread Group: runs before all regular thread groups

JDBC Connection Configuration: connects to the project's database

HTTP Request Defaults: centrally manages the project's domain name, port number, and encoding

HTTP Cookie Manager: automatically passes cookies between interfaces within a thread group

User Defined Variables: centrally manages the project's test data

HTTP Header Manager: centrally manages the request headers of the project's interfaces

tearDown Thread Group: runs after all regular thread groups

Implementing interface tests

Thread Group: one thread = one test case

HTTP Request: sends the interface request

View Results Tree: views the response and request data

Interface automation

Assertions: JSON Assertion, Response Assertion

Correlation: Regular Expression Extractor, JSON Extractor

Parameterization: CSV Data Set Config

Initializing and cleaning up test data

setUp/tearDown Thread Group

Test report

jmeter -n -t xxx.jmx -l result.jtl -e -o ./report

Pytest

Features

1. Easy to get started, with rich documentation and many examples.

2. Supports both simple unit tests and complex functional tests.

3. Supports parameterization.

4. Can run all test cases or a selected subset, and can rerun failed cases.

5. Supports concurrent execution, and can also run test cases written with nose and unittest.

6. Convenient, simple assertions.

7. Can generate test results in standard JUnit XML format.

8. Has many third-party plugins and supports custom extensions; integrates easily with continuous integration tools.

Test discovery rules

Every package must contain an __init__.py file

File names start with test_ or end with _test (test_*.py or *_test.py)

Test functions start with test_

Test classes start with Test and must not have an __init__ method

Test methods start with test_

Fixture

Declaration

@pytest.fixture(scope="function", params=None, autouse=False, ids=None, name=None)

scope

Controls the scope of the Fixture

Works like setup/teardown in pytest

The default is function (function level); scopes from widest to narrowest: session > module > class > function

| Value | Scope | Description |
|---|---|---|
| function | Function level | Runs for every test function that requests it |
| class | Class level | Starts from the first test method that requests it; runs once per test class |
| module | Module level | Takes effect from the point where a .py file first references the fixture; runs once per .py file |
| session | Session level | Runs once per session; all classes, methods, and modules in the session share it |
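The table can be checked with a small experiment. This sketch assumes pytest is installed; it writes a throwaway test module (all names hypothetical) to a temporary directory, runs it with pytest.main(), and lets the module itself assert that a function-scoped fixture ran once per test while a module-scoped one ran once per file.

```python
import pathlib
import tempfile
import textwrap

import pytest

# A throwaway test module, run in-process with pytest.main() so the
# scope difference can be observed. Fixture and test names are hypothetical.
TEST_MODULE = textwrap.dedent(
    """
    import pytest

    calls = {"function": 0, "module": 0}

    @pytest.fixture(scope="function")
    def per_test():
        calls["function"] += 1  # set up again for every test that requests it
        yield
        # teardown code placed after yield would run after each test

    @pytest.fixture(scope="module")
    def per_module():
        calls["module"] += 1  # set up once for the whole .py file
        yield

    def test_one(per_test, per_module):
        pass

    def test_two(per_test, per_module):
        pass

    def test_counts():
        # pytest runs tests in file order, so test_one and test_two have already run
        assert calls == {"function": 2, "module": 1}
    """
)


def run_scope_demo():
    """Write the module to a temp dir, run it, and return pytest's exit code (0 = all passed)."""
    with tempfile.TemporaryDirectory() as tmp:
        path = pathlib.Path(tmp) / "test_scope_demo.py"
        path.write_text(TEST_MODULE, encoding="utf-8")
        return int(pytest.main(["-q", "-p", "no:cacheprovider", str(path)]))


exit_code = run_scope_demo()
print("exit code:", exit_code)
```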

params

1. Used for parameterization: an optional list of parameters for the Fixture; takes a list

2. Defaults to None

3. The fixture is called once for every value in params, similar to a for loop

4. Can be combined with ids to label each parameter

@pytest.fixture(params=[1, 2, {1, 2}, (3, 2)])
def demo(request):
    return request.param  # fixed pattern: request.param holds the current parameter

ids

Test case IDs, used together with params in a one-to-one relationship
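A similar sketch for params and ids, again assuming pytest is installed and using hypothetical names: the fixture has three params, so test_demo runs three times, and each run is labelled with the matching entry from ids, which the module reads back through request.node.callspec.id.

```python
import pathlib
import tempfile
import textwrap

import pytest

# Throwaway test module (hypothetical names): one fixture with params and ids.
TEST_MODULE = textwrap.dedent(
    """
    import pytest

    seen = []

    @pytest.fixture(params=[1, 2, (3, 2)], ids=["int-1", "int-2", "tuple"])
    def demo(request):
        return request.param  # the current value from params

    def test_demo(demo, request):
        # request.node.callspec.id is the id pytest assigned to this run
        seen.append((request.node.callspec.id, demo))

    def test_each_param_ran_once():
        assert seen == [("int-1", 1), ("int-2", 2), ("tuple", (3, 2))]
    """
)


def run_params_demo():
    """Run the module with pytest.main(); exit code 0 means every test passed."""
    with tempfile.TemporaryDirectory() as tmp:
        path = pathlib.Path(tmp) / "test_params_demo.py"
        path.write_text(TEST_MODULE, encoding="utf-8")
        # With -v instead of -q, the runs are listed as test_demo[int-1], test_demo[int-2], test_demo[tuple]
        return int(pytest.main(["-q", "-p", "no:cacheprovider", str(path)]))


exit_code = run_params_demo()
print("exit code:", exit_code)
```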

autouse

Defaults to False. If True, every test function automatically uses this fixture without having to list the fixture's name.

name

Renames the fixture. Normally a test function uses a fixture by taking the fixture's function name as an argument, but pytest also allows renaming a fixture. If name is set, the fixture can only be referenced by that name; the function name no longer works.

Another way to apply fixtures: @pytest.mark.usefixtures('fixture1', 'fixture2')

Setup and teardown

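Method 1 (xunit-style methods inside a test class):

class TestDemo:
    def setup_class(self):
        pass  # runs once before all tests in the class

    def teardown_class(self):
        pass  # runs once after all tests in the class

    # Older pytest also accepted setup()/teardown(); that nose-style support was removed in pytest 8
    def setup_method(self):
        pass  # runs before each test method

    def teardown_method(self):
        pass  # runs after each test method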

Method 2:

@pytest.fixture(scope='scope_value')
def setup_func():
    print('setup')
    yield
    print('teardown')

1. If several test modules share the same setup and teardown, put the fixture in conftest.py in the project root directory;

2. Setup and teardown are separated by yield;

3. The level of the setup/teardown is determined by scope.

Parameterization

Method 1:

@pytest.mark.parametrize("title,body,expected", [(), (), ...])

Method 2:

@pytest.fixture(params=[('title 1', {'r': 1}, {'code': 200}), ('title 2', {'r': 1.1}, {'code': 200})], ids=['1', '2'])
def params_data(request):
    return request.param

def test_01(params_data):
    print(params_data)

repeat

1. Install the pytest-repeat plugin

pip install pytest-repeat

2. Apply the mark

@pytest.mark.repeat(5)  # run test_demo 5 times
def test_demo():
    pass

Skipping tests

@pytest.mark.skip(reason='skip')
def test_skip():
    pass

version = 20

# skipif requires a reason when the condition is a Boolean
@pytest.mark.skipif(version > 20, reason='version is greater than 20')
def test_skipif():
    pass

Continuing after a failed assertion

1. Install the pytest-assume plugin

2. Assert with pytest.assume(condition)

def test_01():
    print('--- test case 01 ---')
    pytest.assume(1 == 2)
    pytest.assume(1 < 2)
    print('Execution completed!')

Execution order

Method 1:

Number the test method names, e.g. test01_demo, test02_demo, and define them in that order (pytest runs tests in the order they appear in the file, not alphabetically)

Method 2:

Plugin: pytest-ordering. The smaller the number, the earlier the test runs, and positive numbers run before negative numbers.

@pytest.mark.run(order=1)
def test_demo():
    pass

Running tests by marker

Custom markers let you run only the test cases that carry a given marker.

1. Register the marker in pytest.ini

[pytest]
markers =
    smoke: smoke testing

2. Mark the test case

@pytest.mark.smoke
def test_mark_1():
    print('test_mark_1')

3. Run command: if the marker expression uses logical operators, wrap it in double quotes, e.g. "marker1 and marker2 or marker3"

pytest -m "marker_name"

Running pytest

pytest
# Run everything: all tests below the current directory

pytest test_mod.py
# Run a specific file: tests in the module file test_mod.py

pytest somepath
# Run a specific path: all tests below somepath, e.g. ./tests/

pytest -k stringexpr
# Keyword matching: only run tests whose names match the string expression,
# e.g. "MyClass and not method" selects TestMyClass.test_something
# but not TestMyClass.test_method_simple

pytest test_mod.py::test_func
# Run a specific function by node ID: only run test_func in test_mod.py

Measuring coverage with pytest

Plugin: pytest-cov

pytest --cov-report=html --cov=./ test_code_target_dir

Test reports

Method 1:

Plugin: pytest-html

pytest.ini configuration:

[pytest]
addopts = --html=./report/report.html --self-contained-html

Method 2:

Use together with Jenkins + Allure

Jenkins build command (Windows batch):

call pytest case --alluredir %WORKSPACE%\report\allure_report
exit 0

Distributed testing

Plugin: pytest-xdist

BeautifulSoup

Used to parse HTML content. Steps:

1. Create a BeautifulSoup object

from bs4 import BeautifulSoup
bs = BeautifulSoup(data, 'html.parser')

2. Common methods

bs.tag_name                        # returns the whole tag
bs.tag_name.string                 # returns the tag's text
bs.tag_name.get_text()             # returns the tag's text
bs.tag_name.get('attribute_name')  # returns the attribute value
bs.find_all('tag_name')            # returns a list

Git operations

Upload code to GitHub with git:

git add --all
git commit -m "commit message"
git push

Pull code from GitHub with git:

git clone <GitHub project address>

Jenkins continuous integration

Basic operations

1. Create a new Item;

2. Source code management: link the project's Gitee address;

3. Set the build trigger, e.g. H 8 * * * (once a day during the 8 o'clock hour);

4. Enter the build command;

5. Post-build action: choose the HTML report (Publish HTML reports);

6. Run the build.

Mailbox settings

1. Get the authorization code for the sender's mailbox and enable the POP3/SMTP service;

2. Open the Jenkins system configuration page;

3. Configure the system administrator's email address;

4. Configure the email address in the Extended E-mail Notification section;

5. Configure the email address in the E-mail Notification section;

6. Check that the sender email configuration works;

7. Save the settings.

Configuring automatic emails

1. Open the Jenkins project configured earlier;

2. Post-build action: choose Editable Email Notification;

3. Modify the recipients, triggers, and email style;

4. Build Now;

5. Check that the recipient's mailbox received the email.

Interview questions

When is interface testing carried out?

Test case design: once the API documentation is finished, start designing the interface test cases. Test execution: the backend is usually developed first (often only part of the functionality is done, so only that part can be tested); use tools such as Postman, or code, to carry out the interface tests. When interface testing is finished, if there is still time, write the interface automation scripts.

How do you carry out interface testing in your project?

Note: there are many ways to carry out interface testing, and different projects and teams work differently; the following answer is only for reference, based on my previous projects, which used an agile development model. Versions iterate constantly, and sometimes testers have plenty of time before development hands over a complete build for SIT. In that case we try to shift testing left and get involved early: we ask for the interface documents and test the core functional interfaces of the current iteration with Postman. Secondly, because of the nature of the company's projects, there are also some historical feature versions; when the workload is lighter, we also write interface automation test scripts in code, for single interfaces and for interface flows, using python + the requests library + git + jenkins. The behavior of the original core interfaces generally does not change, so this library of interface scripts can be used to run regression tests against the backend interface code, which also helps guarantee the quality of the project.

Describe how you built the interface automation framework

To reduce maintenance costs when APIs change, the interfaces are first encapsulated in an api directory. The encapsulated API methods are then called to implement the interface test cases, getting the response and asserting on it, in a case directory. To make the test data easier to maintain and to reduce the number of test methods, parameterization separates the test data from the test scripts, in a data directory. In addition, public methods and utility classes go in a common directory, logs in a log directory, and generated test reports in a report directory. Later, Jenkins is used for continuous integration to run the interface regression tests.

How is a code-based interface testing framework encapsulated?

Core idea: layering the code

1. Encapsulate each interface request as a method

2. Call the encapsulated request methods to implement the interface test cases

3. Parameterize the test data

Interface encapsulation

Purpose: reduce script maintenance costs when the interface information changes

Approach

1. Parameters: request data that changes dynamically becomes a parameter of the encapsulated method

2. Return value: the response object

3. When interfaces depend on each other through cookies, use a Session instance; it must not be created inside the encapsulated class or method, but passed in from outside

Implementing the interface test cases

1. Call the encapsulated request method to get the response data (the response object)

2. Use the assertion methods provided by unittest to verify that the response data matches expectations
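A minimal sketch of the layering described above, using only the standard library: LoginApi encapsulates a hypothetical login interface and receives its session from outside, and a unittest case calls it and asserts on the response. FakeSession and FakeResponse are stand-ins for requests.Session and requests.Response so the example runs without a server; the URL, phone number, and codes are assumptions.

```python
import unittest


class LoginApi:
    """Hypothetical encapsulation of a login interface (names and URL are illustrative)."""

    def __init__(self, session, base_url):
        # The session (e.g. a requests.Session) is created by the caller and passed in,
        # so cookies are shared with the other encapsulated interfaces.
        self.session = session
        self.url = base_url + "/api/login"

    def login(self, mobile, password):
        # Dynamic request data are method parameters; the raw response object is returned.
        return self.session.post(self.url, json={"mobile": mobile, "password": password})


class FakeResponse:
    """Stand-in for requests.Response so the sketch runs without a server."""

    def __init__(self, status_code, body):
        self.status_code = status_code
        self._body = body

    def json(self):
        return self._body


class FakeSession:
    """Records calls and returns a canned login response instead of sending HTTP."""

    def __init__(self):
        self.calls = []

    def post(self, url, json=None, **kwargs):
        self.calls.append((url, json))
        ok = json == {"mobile": "13800000002", "password": "123456"}
        return FakeResponse(200, {"success": ok, "code": 10000 if ok else 20001})


class TestLogin(unittest.TestCase):
    def setUp(self):
        self.session = FakeSession()  # in a real suite: requests.Session()
        self.api = LoginApi(self.session, "http://example.com")

    def test_login_success(self):
        resp = self.api.login("13800000002", "123456")
        self.assertEqual(200, resp.status_code)
        self.assertEqual(True, resp.json()["success"])
        self.assertEqual(10000, resp.json()["code"])

    def test_login_wrong_password(self):
        resp = self.api.login("13800000002", "wrong")
        self.assertEqual(False, resp.json()["success"])
```

In a real project, setUp would create requests.Session() and the suite would be run with python -m unittest.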

Parameterizing the test data

Purpose:

1. For single-interface tests, reduce the number of test methods to write and make test case implementation more efficient

2. Separate the test data from the code, so the test data can be maintained on its own

Approach:

1. Identify the test data that needs to be parameterized (request data that changes dynamically, and expected results)

2. Write the test data to an external file, in JSON format

3. Call the encapsulated method that reads test data to get the data from the external file

4. Use a parameterization plugin to pass the test data from the external file to the test method
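A sketch of steps 2 to 4 with hypothetical data: the cases are written to an external JSON file, read back as tuples by a param_data-style reader, and passed to the test. Note the swap: unittest's built-in subTest() is used instead of a parameterization plugin such as parameterized, so the example needs only the standard library; fake_login stands in for the encapsulated interface.

```python
import json
import os
import tempfile
import unittest

# Hypothetical test data: each object is one case (title, request body, expected result).
CASES = [
    {"title": "login ok", "body": {"mobile": "13800000002", "password": "123456"}, "expected": {"code": 10000}},
    {"title": "empty password", "body": {"mobile": "13800000002", "password": ""}, "expected": {"code": 20001}},
]


def param_data(path):
    """Read a JSON file and return a list of tuples, one tuple per case."""
    with open(path, "r", encoding="utf-8") as f:
        return [tuple(case.values()) for case in json.load(f)]


def fake_login(body):
    """Stand-in for the encapsulated login interface (no HTTP is sent)."""
    return {"code": 10000 if body["password"] == "123456" else 20001}


# Write the data to an external file, as a project would keep it in its data directory.
DATA_FILE = os.path.join(tempfile.mkdtemp(), "login.json")
with open(DATA_FILE, "w", encoding="utf-8") as f:
    json.dump(CASES, f, ensure_ascii=False)


class TestLoginParams(unittest.TestCase):
    def test_login(self):
        # subTest() reports each data row separately; plugins such as parameterized
        # instead generate one test method per row.
        for title, body, expected in param_data(DATA_FILE):
            with self.subTest(title=title):
                self.assertEqual(expected["code"], fake_login(body)["code"])
```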

Keeping the scripts stable

Test data initialization and data cleanup

Continuous integration

Concept: use a tool to run the scripts automatically, generate a test report, and email the report to the relevant people;

Purpose

Run the interface automation scripts automatically

Generate test reports automatically

Send the test report email automatically

Process

1. Finish the interface automation scripts

2. Upload the code to a hosting platform

3. Create a job in Jenkins and link it to the code hosting platform

Python modules involved

requests, unittest/pytest, pymysql, parameterized, HTMLTestReporter, jsonschema

How do you carry out interface testing without interface documentation?

At my previous company, every version normally had interface documents, because the front end and back end need them for joint debugging. If the documents for some historical interfaces are missing, I start from the business side: first identify the core functions, then capture packets with F12 (browser developer tools) or Fiddler to get the interface addresses and request details. If the meaning of some fields is unclear, I ask the developer of the corresponding module to confirm, and then complete the interface information I have recorded.

How do you design interface test cases?

In previous projects, interface test case design covered two aspects: single functional interfaces, and business scenarios.

For a single functional interface, consider extra parameters, missing parameters, permissions, and wrong parameters.

For an interface such as placing an order, also consider the various preconditions, e.g. placing an order with a coupon, deducting points from an order, or ordering an in-stock item.

For business scenarios, consider the various functional flows from the user's perspective.

For example, in a previous system:

Regular member logs in - searches for products - adds to cart - places an order - mock payment - confirms receipt;

Regular member - chooses a flash-sale item - adds to cart - uses a coupon - submits the order, etc.

After final confirmation, these are implemented with JMeter or a python+requests+unittest framework.

What are the core verification points of interface testing, and what are they based on?

First, at the system or version level, the core checkpoints are single interfaces and business processes. Second, for a single interface, verify both positive and negative cases; negative cases cover extra parameters, missing parameters, and wrong parameters, and the user permissions in the request header are also considered. The basis is the existing interface documents and the requirements documents. What data an interface should return is determined by the business rules in the requirements document; this is no different from what manual testing verifies, except that here you check the interface response data, as JSON or HTML.

Where do you keep test data?

1. Global parameters such as account numbers and passwords can be passed as command-line arguments or kept separately in a configuration file (e.g. ini).

2. One-off data, such as registration data that must differ on every run, can be generated with random functions.

3. When one interface has several sets of test parameters, parameterize it and keep the data in yaml, txt, json, or excel files.

4. Reusable data, such as orders whose status requires prepared data, can be kept in the database: initialize it before each run and clean it up afterwards.

5. Mailbox configuration parameters can go in an ini configuration file.

6. For projects made of independent interfaces, use data-driven testing and manage the interface test data in excel/csv.

7. A small amount of static data, e.g. only 2-3 sets for one interface, can be written at the top of the py script, since it will hardly ever change.

In short, different kinds of test data can be managed in different files.
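Points 1, 2 and 5 can be sketched with the standard library, under stated assumptions: configparser reads global parameters from ini-format text (the section and option names are hypothetical), and small helpers generate one-off registration data with random and time. The 139 prefix is an arbitrary choice for illustration.

```python
import configparser
import random
import time

# Hypothetical config.ini content; in a project this would be a file read with config.read(path).
CONFIG_TEXT = """
[account]
username = 13800000002
password = 123456

[mail]
smtp_server = smtp.example.com
"""

config = configparser.ConfigParser()
config.read_string(CONFIG_TEXT)

# Global parameters (account, mailbox settings) come from the ini file.
username = config.get("account", "username")
password = config.get("account", "password")


def random_mobile():
    """Generate a hypothetical 11-digit mobile number for one-off registration data."""
    return "139" + "".join(random.choice("0123456789") for _ in range(8))


def unique_name(prefix="user"):
    """Timestamp plus a random suffix, so repeated runs do not collide."""
    return f"{prefix}_{int(time.time())}_{random.randint(1000, 9999)}"


print(username, password, random_mobile(), unique_name())
```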

What is data-driven testing, and how do you parameterize?

Use the ddt decorators: @ddt decorates the test class, @data decorates the test method, and @unpack unpacks each data item. Parameterize the data that needs to change, which makes later data maintenance easier. The idea of parameterization is that once the test code is written, it no longer needs to change; you only maintain the test data, and multiple test cases are generated from the different data sets.

The request parameters of the next interface depend on data returned by the previous one. How do you handle this?

1. Encapsulate each interface as its own function or method and return the required data; store it in an intermediate variable a, and pass a to the later interface.

2. Use reflection: store the data returned by the previous interface in a variable with setattr, then pass it as a parameter to the next interface.
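A minimal sketch of approach 2, assuming a hypothetical login response body and field names: setattr stores the extracted value on a shared class, and getattr reads it back by name when building the next request.

```python
class Context:
    """Hypothetical holder for values extracted from earlier responses."""


def extract(response_body, key, var_name):
    # Extract a field from the previous interface's response and
    # store it on Context under var_name (setattr = "set").
    setattr(Context, var_name, response_body["data"][key])


def build_order_request(goods_id):
    # Read the stored value by name (getattr = "get") and pass it into the next request.
    token = getattr(Context, "token")
    return {"headers": {"Authorization": token}, "json": {"goods_id": goods_id}}


# Hypothetical login response body, e.g. what resp.json() returned.
login_body = {"success": True, "code": 10000, "data": {"token": "abc123"}}
extract(login_body, "token", "token")
order_request = build_order_request(goods_id=1)
print(order_request)
```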

How do you handle interfaces that depend on login?

1. If they depend on a token, log in first and save the token to a yaml, json, or ini configuration file; all later requests can then read it globally.

2. If they depend on cookies, use a session for automatic correlation: s = requests.Session(), then send the requests with s.get()/s.post(), and the session carries the cookies automatically.

How do you handle interfaces that depend on third parties?

Use a mock: build a mock service that simulates the interface's response data.

How do you handle irreversible operations, for example testing an interface that deletes an order?

This tests your ability to create data. Interface request data often depends on a previous state. In a workflow, for example, an item flows to different people and their operation permissions differ; every state has to be tested, so you need to be able to create your own data. In manual testing we usually create data by hand, changing field states directly in the database. Automation works the same way: connect to the database with Python and perform insert, delete, update, and query operations; as the test case's pre-operation, setUp prepares the data, and as the post-operation, tearDown cleans it up.

How do you clean up the garbage data generated by interface tests?

Connect to the database with Python to create and clean up data with insert, delete, update, and query operations: setUp prepares the data before each test case, and tearDown cleans it up afterwards.
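A sketch of the setUp/tearDown pattern. It swaps in the standard library's sqlite3 with an in-memory database for pymysql and the project database, so it runs anywhere; the orders table and the delete_order function are hypothetical stand-ins. Both libraries follow the Python DB-API, so the cursor/execute/commit calls look the same, except that pymysql uses %s placeholders where sqlite3 uses ?.

```python
import sqlite3
import unittest

# In-memory sqlite3 database standing in for the project database (hypothetical schema).
conn = sqlite3.connect(":memory:")


def run_sql(sql, params=()):
    """Execute one statement through a cursor and commit, as with pymysql; returns (rowcount, rows)."""
    cursor = conn.cursor()
    try:
        cursor.execute(sql, params)
        rows = cursor.fetchall()
        count = cursor.rowcount
        conn.commit()
        return count, rows
    finally:
        cursor.close()


run_sql("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")


def delete_order(order_id):
    """Stand-in for the irreversible 'delete order' interface under test."""
    return run_sql("DELETE FROM orders WHERE id = ?", (order_id,))[0]


class TestDeleteOrder(unittest.TestCase):
    def setUp(self):
        # Pre-operation: create the order this test will delete.
        run_sql("INSERT INTO orders (id, status) VALUES (?, ?)", (1001, "unpaid"))

    def tearDown(self):
        # Post-operation: remove anything the test left behind, even if it failed.
        run_sql("DELETE FROM orders WHERE id = ?", (1001,))

    def test_delete_order(self):
        self.assertEqual(1, delete_order(1001))
        _, rows = run_sql("SELECT COUNT(*) FROM orders WHERE id = ?", (1001,))
        self.assertEqual(0, rows[0][0])
```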

How do you test every state of an order, e.g. unprocessed, processing, processing failed, processed successfully?

Create the data, then modify its status field (e.g. in the database) to each of the states.

How do you handle dependencies between interfaces?

What is data dependency between interfaces?

The request data of the next interface has to be taken from the response data of the previous interface.

Approach: 1. extract; 2. set; 3. get

With Postman:

1. Extract: in the Tests tab, write JS code to extract the data the next interface needs

2. Set: in the Tests tab, write JS code to store that data in a global/environment variable

3. Get: in the later interface, reference the variable name inside double curly braces {{}} to pass the data into its request

In code:

1. Extract: call json() on the previous interface's response object to get the response body as a dictionary, then extract the data from it

2. Set: assign the extracted data to a custom variable

3. Get: use that variable to pass the data into the next interface's request

How is interface correlation implemented?

When designing interface test cases, I also design business-scenario test cases. For example, placing an order requires logging in first, and the order request needs the user's login credentials, which must be taken from the login interface's response. In JMeter, based on the response, use an XPath Extractor or JSON Extractor to get the data to be correlated; the extracted data becomes a variable that later interface requests can use as a parameter.

When an interface fails, how do you analyze the problem?

The approach matches functional test design: from various business scenarios or abnormal inputs, verify that the business logic the backend implements is correct. So when analyzing an interface failure, look for business-rule errors, abnormal data, or permission-restriction errors. Analyze from the requirements whether the interface response data is correct, and sometimes check the interface's request logs to find the specific cause of the error.

How do you do continuous integration? How often are automated tests built?

Continuous integration of the project under test

As a tester, I use Jenkins to deploy the versions that developers hand over to a test environment, and then test the project's functionality.

Continuous integration of the automation project

Jenkins runs the automation project automatically, turns the results into a test report, and emails it to me, so I can see which automated test cases failed.

Steps

1. Create a job.

2. Set the conditions that trigger a build.

3. Build: set the command to execute.

4. Configure post-build plug-ins to generate the test report and send the email.
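For step 3, the build command usually just runs a test entry script. A minimal sketch of such a script, assuming the test cases live in a `tests` directory as `test_*.py` files (a hypothetical layout): it runs them with unittest and returns a non-zero exit code on failure, which is what makes a Jenkins build step report the build as failed.

```python
import os
import sys
import unittest


def run_suite(suite: unittest.TestSuite) -> int:
    """Run a test suite and turn the outcome into a process exit code."""
    result = unittest.TextTestRunner(stream=sys.stdout, verbosity=2).run(suite)
    # Non-zero tells the Jenkins build step that the run failed
    return 0 if result.wasSuccessful() else 1


def main(start_dir: str = "tests") -> int:
    """Discover test_*.py files under start_dir (hypothetical layout) and run them."""
    if not os.path.isdir(start_dir):
        print(f"test directory not found: {start_dir}")
        return 2
    suite = unittest.defaultTestLoader.discover(start_dir, pattern="test_*.py")
    return run_suite(suite)


# In the script Jenkins actually runs, the entry point would be:
#     if __name__ == "__main__":
#         sys.exit(main())
```

The build step would then be something like `python run_all.py` (a hypothetical file name), and report generation and e-mail stay in the post-build actions.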

How often to build

Project under test: whenever development releases a new version, build it for testing; when developers fix a bug and change the code, build again.

Automation project

On working days, build automatically once every morning at XX o'clock.

After a round of development and testing, trigger one build manually to check whether this round's changes affect other interfaces or features.

How do you initialize data in interface automation?

JMeter data initialization (setUp Thread Group + JDBC Connection Configuration + JDBC Request)

1. Configure the MySQL JDBC driver jar so that JMeter can load it.

2. Add a setUp Thread Group.

3. Add a JDBC Connection Configuration config element and configure the database connection.

4. Add a JDBC Request sampler to clean up old data and initialize test data.
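Whichever tool runs it, data initialization comes down to executing cleanup and seed SQL before the tests. A minimal Python sketch of that step, written against the generic DB-API 2.0 interface (`cursor()`, `execute()`, `commit()`, `rollback()`), which pymysql connections also provide. The table and rows are hypothetical, and an in-memory sqlite3 database stands in for MySQL only so the example runs offline.

```python
import sqlite3

# Cleanup first, then seed. Table and column names are hypothetical.
INIT_SQL = [
    "DELETE FROM department WHERE name LIKE 'autotest_%'",
    "INSERT INTO department (name) VALUES ('autotest_hr')",
    "INSERT INTO department (name) VALUES ('autotest_it')",
]


def init_test_data(conn, statements) -> None:
    """Run cleanup/seed SQL in one transaction on any DB-API 2.0 connection."""
    cursor = conn.cursor()
    try:
        for sql in statements:
            cursor.execute(sql)
    except Exception:
        conn.rollback()  # leave the database unchanged if any statement fails
        raise
    else:
        conn.commit()
    finally:
        cursor.close()


# sqlite3 in memory stands in for pymysql.connect(...) so the sketch runs offline
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE department (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO department (name) VALUES ('autotest_old')")
conn.commit()

init_test_data(conn, INIT_SQL)
rows = conn.execute("SELECT name FROM department ORDER BY id").fetchall()
print(rows)
```

In a real project, `conn` would come from `pymysql.connect(...)`, and `init_test_data` would typically be called from `setUpClass` or a pytest fixture.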

Python + pymysql + parameterized

Use the pymysql library to clean up and initialize the data.

How do you judge the result of an interface test (success or failure)?

What is an interface assertion?

It verifies whether the response data returned by the interface matches the expectation.

The response data verified is:

the response status code and the response body data.

Postman:

1. In the Tests tab, select the appropriate assertion snippet.

Common assertion snippets:

assert the status code; assert that a field value in the response body equals the expected value; assert that the response body contains a specified string.

2. In the generated assertion code, fill in the expected value.

3. Send the request again and check the assertion results in the Test Results tab: Pass for success, Fail for failure.

Code:

1. Call the wrapped interface-request method to get the response data (the response object).

2. Call the assertion methods provided by unittest to verify whether the response data matches the expectation.
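A self-contained sketch of these two steps. `query_department_list` and `FakeResponse` are hypothetical stand-ins for a wrapped `requests` call, and the business field `code` = 10000 is an assumed convention. The three assertions mirror the common checks listed for Postman (status code, field value, contained string).

```python
import unittest


class FakeResponse:
    """Offline stand-in for requests.Response (status code + JSON body only)."""

    def __init__(self, status_code: int, body: dict):
        self.status_code = status_code
        self._body = body

    def json(self):
        return self._body


def query_department_list():
    # Hypothetical wrapped request method; a real one would call requests.get(...)
    return FakeResponse(200, {"code": 10000, "message": "operation succeeded"})


class TestDepartmentList(unittest.TestCase):
    def test_query_department_list(self):
        response = query_department_list()                          # step 1
        self.assertEqual(200, response.status_code)                 # status code
        self.assertEqual(10000, response.json().get("code"))        # field value
        self.assertIn("succeeded", response.json().get("message"))  # contains
```

In a real test module the class would be run with `unittest.main()` or a test runner.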

self.assertEqual(expected_result, response.status_code)

self.assertIn(expected_result, response.json().get("key"))

How do you test third-party interfaces?

For interfaces that depend on a third party, we generally call the real third-party interface and test against the actual response data. For interfaces that cannot be called directly, such as payment, we mock the interface in Python to simulate the various results the payment can return. Postman can also fake interface responses, and so can Fiddler's AutoResponder feature.
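A minimal sketch of mocking a payment interface with Python's standard `unittest.mock`. The `gateway` client, its `pay(order_id, amount)` method, and the status values are hypothetical. Each `Mock` is configured to return one of the replies the real third party could produce (success, decline, timeout), so the code that handles them can be tested without calling the real interface.

```python
from unittest.mock import Mock


def pay_order(gateway, order_id: str, amount: int) -> str:
    """Code under test: maps the third-party payment reply to an order status.

    `gateway` is a hypothetical client object with a pay(order_id, amount) method.
    """
    try:
        result = gateway.pay(order_id, amount)
    except TimeoutError:
        return "PAY_PENDING"  # outcome unknown, needs a later status query
    return "PAID" if result.get("status") == "SUCCESS" else "PAY_FAILED"


# Simulate the different replies the real payment interface could give
ok_gateway = Mock()
ok_gateway.pay.return_value = {"status": "SUCCESS"}

declined_gateway = Mock()
declined_gateway.pay.return_value = {"status": "FAIL", "msg": "insufficient balance"}

timeout_gateway = Mock()
timeout_gateway.pay.side_effect = TimeoutError("gateway timed out")

print(pay_order(ok_gateway, "A001", 100))        # PAID
print(pay_order(declined_gateway, "A002", 100))  # PAY_FAILED
print(pay_order(timeout_gateway, "A003", 100))   # PAY_PENDING
ok_gateway.pay.assert_called_once_with("A001", 100)
```

Passing the client in as a parameter keeps the mock simple; with a module-level client, `unittest.mock.patch` would be used instead.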

What is a redirect interface? How do you test encrypted interfaces?

A redirect interface is one that, after receiving the client's request, redirects it to another interface that actually handles the client's business.

I have worked with some encrypted interfaces. My previous company encrypted some sensitive interfaces with a scheme our own team developed, implemented in dedicated code functions. When testing these interfaces, I got the corresponding encryption and decryption functions from the developers, encrypted the request data before calling the interface, and then called the decryption function to decrypt the response.
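The encrypt, call, decrypt workflow can be sketched as follows. The team's real algorithm is not described here, so `dev_encrypt` / `dev_decrypt` are placeholders that use base64, which is an encoding and not encryption; the functions obtained from the developers would replace them. `fake_server` simulates the interface so the example runs offline, and the `data` / `mobile` field names are hypothetical.

```python
import base64
import json


def dev_encrypt(plain: str) -> str:
    # PLACEHOLDER: base64 is NOT encryption; stands in for the in-house function
    return base64.b64encode(plain.encode("utf-8")).decode("ascii")


def dev_decrypt(cipher: str) -> str:
    # PLACEHOLDER counterpart of dev_encrypt
    return base64.b64decode(cipher.encode("ascii")).decode("utf-8")


def call_encrypted_api(send, payload: dict) -> dict:
    """Encrypt the request, send it, decrypt the reply.

    `send` is any callable that posts the encrypted body and returns the
    encrypted response text (for example a small wrapper around requests.post).
    """
    cipher_request = dev_encrypt(json.dumps(payload))
    cipher_response = send({"data": cipher_request})
    return json.loads(dev_decrypt(cipher_response))


def fake_server(body: dict) -> str:
    # Offline stand-in for the real interface: decrypt, process, encrypt
    request = json.loads(dev_decrypt(body["data"]))
    return dev_encrypt(json.dumps({"code": 0, "echo": request["mobile"]}))


print(call_encrypted_api(fake_server, {"mobile": "13800000000"}))
```

Keeping the encryption step inside one helper means every test case sends plain dictionaries and asserts on decrypted results.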

What types of interfaces do you mainly test? What is the difference between them?

The core of my previous project was HTTP interfaces, with some HTTPS as well. (In real work there are other interface types, such as web services; they are broadly similar. If you haven't used them, don't claim to.) The core difference between HTTP and HTTPS: data transmitted over HTTP is not encrypted, while HTTPS encrypts it. HTTPS is HTTP carried over SSL/TLS, providing encrypted transmission and identity authentication, so it is more secure than HTTP. HTTPS requires a certificate. HTTP uses port 80 by default, HTTPS port 443.

Common interface request methods and their differences

Common request methods in interface testing: GET, POST, PUT, DELETE.

In RESTful style, the request method defines what the interface does:

GET queries, POST creates, PUT modifies, DELETE deletes.

Typical success codes: GET returns 200, POST 201, PUT 200 or 204 (201 if it creates a new resource), DELETE 204; the exact codes depend on how the API is designed.

Differences between GET and POST requests

Where parameters are passed

GET: only in the URL

POST: either in the URL or in the request body

Parameter length

GET: query parameters can only be passed in the URL, and browsers and servers limit URL length

POST: parameters in the request body are not subject to the URL length limit (though servers usually configure a maximum body size)

Security

GET: query parameters can only travel in the URL, so they are visible in the browser

POST: parameters can travel in the request body, which the browser does not display. However, a packet-capture tool can still read the body, so POST is not absolutely secure; use HTTPS for that.

Encoding

POST supports far more data encodings than GET (for example form-urlencoded, multipart/form-data, JSON), while GET parameters are limited to URL encoding.

HTTP vs HTTPS

Default port

HTTP: 80

HTTPS: 443

Security

HTTP: data is transmitted unencrypted, so security is low

HTTPS: data is encrypted in transit, so it is much more secure

Transmission efficiency

HTTP: no encryption or decryption is needed, so transmission is faster

HTTPS: data must be encrypted and decrypted (plus a TLS handshake), so transmission is somewhat slower

Cost

HTTP: no digital certificate is needed, so it is free

HTTPS: requires a digital certificate (which carries the server's public key), which generally has to be paid for

Which network protocols do you know? Briefly describe the differences.

In my previous work I mainly tested the HTTPS and HTTP protocols, and I also have a basic understanding of TCP.

1. HTTPS requires applying to a CA for a certificate; free certificates are relatively rare, so there is some cost.

2. HTTP is the Hypertext Transfer Protocol and transmits messages in plain text; HTTPS is a secure transport protocol encrypted with SSL/TLS.

3. HTTP and HTTPS use different connection methods and different ports: 80 for the former, 443 for the latter.

4. An HTTP connection is simple and stateless; HTTPS combines SSL/TLS with HTTP to provide encrypted transmission and identity authentication, making it more secure than HTTP.

What are the common response status codes, and what does each mean?

200 (OK) The server successfully processed the request.

201 (Created) The request succeeded and the server created a new resource.

202 (Accepted) The server accepted the request but has not processed it yet.

204 (No Content) The server successfully processed the request but returns no content.

301 (Moved Permanently) The requested page has permanently moved to a new location.

302 (Found, temporary redirect) The server currently responds with a page from a different location, but the client should keep using the original location for future requests.

400 (Bad Request) The server cannot understand the request syntax.

401 (Unauthorized) The request requires authentication.

403 (Forbidden) The server refuses the request.

404 (Not Found) The server cannot find the requested page.

405 (Method Not Allowed) The method specified in the request is not allowed.

500 (Internal Server Error) The server encountered an error and could not complete the request.

501 (Not Implemented) The server does not support the functionality required to complete the request.

502 (Bad Gateway) The server, acting as a gateway or proxy, received an invalid response from the upstream server.

503 (Service Unavailable) The server is currently unavailable (overloaded or down for maintenance).

504 (Gateway Timeout) The server, acting as a gateway or proxy, did not receive a response from the upstream server in time.

When sending an HTTP request, what are the ways to pass parameters?

In the resource path of the URL; in the query-parameter part of the URL (after the question mark); in the request body.
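The three places can be seen with Python's standard `urllib`. This sketch only builds the `Request` objects and never sends them; the host, paths and field values are hypothetical. Note that `urllib` treats a request that carries a body as POST and one without a body as GET.

```python
import json
from urllib.parse import parse_qs, urlencode, urlsplit
from urllib.request import Request

BASE = "http://example.test/api"  # hypothetical host

# 1. Resource path: the identifier is part of the URL path (RESTful style)
path_req = Request(f"{BASE}/users/42")

# 2. Query parameters: key=value pairs after the "?" (typical for GET)
query = urlencode({"page": 1, "size": 10, "keyword": "test"})
get_req = Request(f"{BASE}/users?{query}")

# 3. Request body: data sent after the headers (typical for POST/PUT)
body = json.dumps({"username": "13800000000", "password": "123456"}).encode("utf-8")
post_req = Request(f"{BASE}/login", data=body,
                   headers={"Content-Type": "application/json"})

# The Request objects are only built here, never sent, so this runs offline
print(get_req.get_method(), get_req.full_url)
print(post_req.get_method(), post_req.data)
print(parse_qs(urlsplit(get_req.full_url).query))
```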

Briefly describe how you implemented data-driven testing. Where is the data stored?

In my previous project, data-driven testing was implemented with JSON files plus the parameterized plug-in. JSON files organize and define the test data, parameterized iterates over it, and the JSON data is read by a function we defined. I also know other ways to store data: reading it directly from the database, or keeping test data in Excel files.
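A minimal sketch of the JSON-driven approach. The JSON content and `fake_login` are hypothetical, and unittest's `subTest` stands in for the parameterized plug-in so the example needs only the standard library. Each JSON object becomes a tuple of its values, one tuple per test case.

```python
import json
import unittest

# Hypothetical test data, normally kept in a file such as data/login.json
LOGIN_CASES_JSON = """
[
  {"desc": "correct login", "mobile": "13800000002", "password": "123456", "status_code": 200},
  {"desc": "wrong password", "mobile": "13800000002", "password": "error", "status_code": 401}
]
"""


def load_cases(text: str) -> list:
    """Turn a JSON array of objects into [(v1, v2, ...), ...] tuples."""
    return [tuple(case.values()) for case in json.loads(text)]


def fake_login(mobile: str, password: str) -> int:
    # Offline stand-in for the login interface; returns an HTTP status code
    return 200 if password == "123456" else 401


class TestLogin(unittest.TestCase):
    def test_login(self):
        # subTest plays the role of the parameterized plug-in here
        for desc, mobile, password, expected in load_cases(LOGIN_CASES_JSON):
            with self.subTest(desc):
                self.assertEqual(expected, fake_login(mobile, password))
```

With the plug-in, the loop would be replaced by decorating the test method with `@parameterized.expand(load_cases(...))` and declaring the tuple fields as method parameters; in a real project the JSON would be read from the file with `json.load`.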

If interface testing depends on a third-party Java package, how do you implement it?

In practice, besides configuring interface requests directly in JMeter, we occasionally need external code to process data, for example calling external code to encrypt and decrypt data, or testing some interfaces that are not exposed over HTTP. Implementation: put the jar into JMeter's extension directory (lib/ext), then in the Test Plan click Browse... next to "Add directory or jar to classpath", select the jar and open it, and it is added to JMeter. Then use BeanShell as needed to call the code or process data.

How do you implement interface testing in code, and how does it differ from using Postman/JMeter? Which do you think is better?

I have used the Python third-party library requests to do interface testing in code. I wrap each system interface with the get, post and other methods requests provides, and instantiate these objects uniformly in a Factory class. Test cases are organized with the pytest unit testing framework, parameterized with the parameterized plug-in, and checked with pytest's built-in assert. Finally, the whole test code base is managed with git and run regularly and continuously with Jenkins.

Between Postman and JMeter there is no essential difference for interface testing itself: the interfaces are the same, and so is the configuration. The differences: Postman cannot connect to a database, while JMeter can by configuring a JDBC driver; and JMeter leans more toward performance testing. If you only need interface testing, Postman is more convenient and easier to learn; if you need interface-based performance testing, JMeter is clearly more powerful.
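A minimal sketch of the Factory idea described above: one class per interface module, all created through a single factory that shares one session. `LoginApi`, `DepartmentApi`, their URLs, and `FakeSession` are hypothetical. `FakeSession` records calls so the sketch runs offline; in practice it would be replaced by a `requests.Session()`, which offers the same `get(url, **kwargs)` / `post(url, **kwargs)` calls.

```python
class FakeSession:
    """Offline stand-in for requests.Session: records calls instead of sending."""

    def __init__(self):
        self.calls = []

    def get(self, url, **kwargs):
        self.calls.append(("GET", url, kwargs))
        return {"method": "GET", "url": url}

    def post(self, url, **kwargs):
        self.calls.append(("POST", url, kwargs))
        return {"method": "POST", "url": url}


class LoginApi:
    """Wraps the (hypothetical) login interface."""

    def __init__(self, session, base_url: str):
        self.session = session
        self.url = f"{base_url}/login"

    def login(self, mobile: str, password: str):
        return self.session.post(self.url, json={"mobile": mobile, "password": password})


class DepartmentApi:
    """Wraps the (hypothetical) department query interface."""

    def __init__(self, session, base_url: str):
        self.session = session
        self.url = f"{base_url}/department"

    def query(self, page: int = 1):
        return self.session.get(self.url, params={"page": page})


class ApiFactory:
    """The one place where interface-wrapper objects are instantiated."""

    _apis = {"login": LoginApi, "department": DepartmentApi}

    def __init__(self, session, base_url: str):
        self.session = session
        self.base_url = base_url

    def create(self, name: str):
        return self._apis[name](self.session, self.base_url)


factory = ApiFactory(FakeSession(), "http://example.test/api")
print(factory.create("login").login("13800000002", "123456"))
```

A pytest test case would then just call, for example, `factory.create("login").login(...)` and check the result with a plain `assert`.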

The difference between implementing interface tests with tools and with code

Tools

Advantages: easy to use, quick to pick up, low learning cost

Disadvantages: Postman cannot operate the database directly, so it cannot automatically compare the response against database query results

Some special test cases cannot be implemented exactly the way we need

Code

Advantages: more flexible; can cover test cases for almost any situation

Disadvantages: higher learning cost (you first have to learn a programming language)

How have you used Postman for interface testing?

1. As mentioned earlier, before development submits the whole build for SIT (system integration testing), testers usually have enough time to use Postman to quickly test the core interfaces in the scope of this round.

2. How I use it:

First, following the interface document, organize the collections in Postman into a directory structure so interfaces are easy to tell apart by functional module.

Second, following the interface document, create the corresponding requests in Postman and configure the request method, URL, request headers and body.

Third, refine the test points: design cases with extra parameters, missing parameters and wrong parameters, and use global variables and parameterization to test the data in each situation.

Fourth, add assertions: extract the necessary result data from the response body and check it. Multiple interfaces usually have data dependencies between them, so we also use association to complete the test cases.

JMeter or Postman for interface testing: which do you think is better, and how do they differ?

Personally I think each has its own strengths. For interface testing, Postman is easier to learn and use, and the two differ little in what they can do. Postman is built specifically for interface testing, while JMeter leans more toward interface-based performance testing.

Have you tested web service interfaces? How did you test them?

SOAP, XML and WSDL are the three technologies that make up the web service platform.

Option 1: test the web service with SoapUI.

Option 2: use Postman:

1. Request method POST; the URL is the web service address with the wsdl suffix.

2. Set the request header Content-Type to text/xml;charset=UTF-8.

3. In Body, choose raw; the content is the SOAP envelope with the method name and parameter values, which can be obtained from SoapUI.

4. Click Send to complete the interface test.
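The same request can also be built in code. A minimal sketch with Python's standard `urllib`, using a SOAP 1.1 envelope; the service address, namespace and `getWeather` method are hypothetical and would come from the service's WSDL. Some services additionally require a `SOAPAction` header; SoapUI shows the exact value.

```python
from urllib.request import Request
from xml.sax.saxutils import escape

# Hypothetical values; the real address, namespace and method name come from
# the service's WSDL (SoapUI shows a ready-made request body)
SERVICE_URL = "http://example.test/WeatherService.asmx?wsdl"
NAMESPACE = "http://example.test/weather"


def build_soap_request(method: str, params: dict) -> Request:
    """Build, but do not send, a SOAP 1.1 POST request like the Postman steps above."""
    args = "".join(f"<tns:{k}>{escape(str(v))}</tns:{k}>" for k, v in params.items())
    envelope = (
        '<?xml version="1.0" encoding="utf-8"?>'
        '<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/" '
        f'xmlns:tns="{NAMESPACE}">'
        f"<soap:Body><tns:{method}>{args}</tns:{method}></soap:Body>"
        "</soap:Envelope>"
    )
    return Request(
        SERVICE_URL,
        data=envelope.encode("utf-8"),
        headers={"Content-Type": "text/xml;charset=UTF-8"},
        method="POST",
    )


req = build_soap_request("getWeather", {"cityName": "Beijing"})
print(req.get_method(), req.get_header("Content-type"))
# Sending it would be: urllib.request.urlopen(req).read()
```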

Have you found any bugs during interface testing? Give a simple example.

Yes. For example, when testing the add-product interface, I tested the case where required fields were missing. The expected result was a corresponding msg prompt, but the interface actually returned a NullPointerException. Another example: when submitting an order, I tested using several coupons at the same time with incorrect parameters. The expected result was a msg saying the coupon was wrong, but the interface actually returned an out-of-range exception.

How many interfaces have you tested on the project? How many test cases did you write? How many bugs did you find?

At the company I was mainly responsible for interface testing of the department, employee, login and permission-management modules of a human resource management project, 20 interfaces in total. I designed three to four hundred test cases and found seventy to eighty bugs, which helped improve the quality of the back-end interfaces to some extent.

How many interfaces does your project have? For how many have you implemented interface tests?

You need a basic sense of scale here: generally one feature corresponds to one or more interfaces. For example, the shopping cart feature maps to add-to-cart, query-cart and modify-cart interfaces. How many interfaces a system has depends directly on the number of project features in your resume. For a B2C e-commerce project you could answer like this: I'm not sure of the exact number of interfaces in my previous project, but it was around 500 to 1000 (pick any number in that range). We only tested the externally exposed HTTP interfaces of the core functional modules, and implemented tests for over 200 of them, such as placing orders under various conditions, adding to cart and product queries.

边栏推荐

- 左右对齐!

- LeetCode 1184. Distance between bus stops -- vector clockwise and counterclockwise

- [North Asia data recovery] data recovery case of database data loss caused by HP DL380 server RAID disk failure

- How did the beyond concert 31 years ago get super clean and repaired?

- Unity script introduction day01

- How was MP3 born?

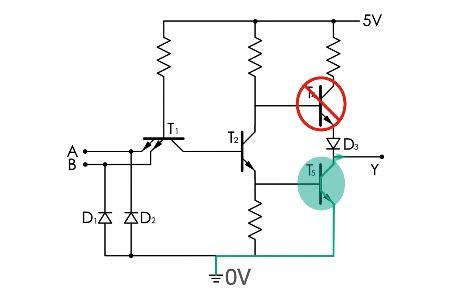

- In today's highly integrated chips, most of them are CMOS devices

- c# 实现定义一套中间SQL可以跨库执行的SQL语句

- Hexadecimal form

- 2022 financial products that can be invested

猜你喜欢

夜天之书 #53 Apache 开源社群的“石头汤”

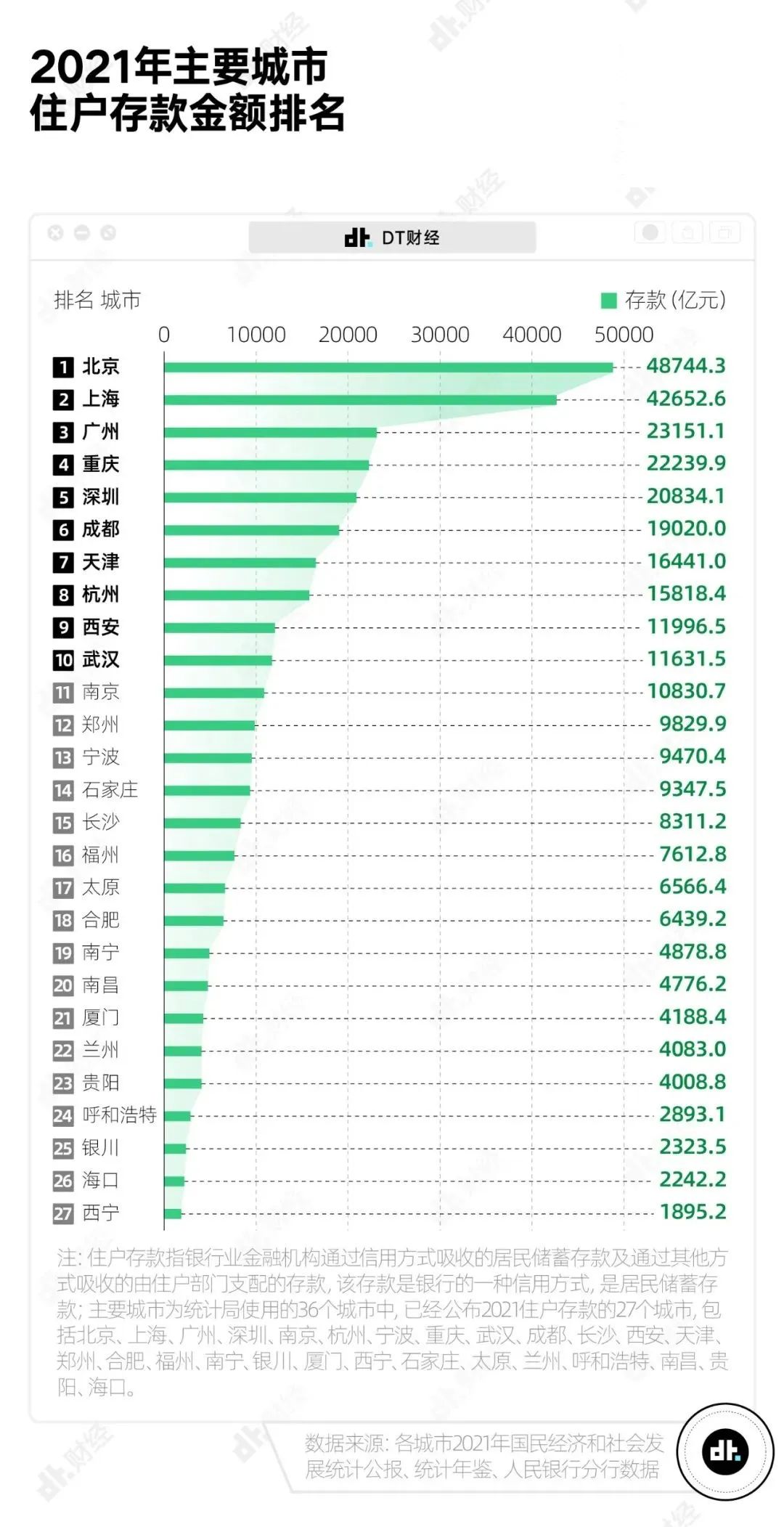

The per capita savings of major cities in China have been released. Have you reached the standard?

一篇文章搞懂Go语言中的Context

Audio and video technology development weekly | 252

In today's highly integrated chips, most of them are CMOS devices

Neuf tendances et priorités du DPI en 2022

每周招聘|高级DBA年薪49+,机会越多,成功越近!

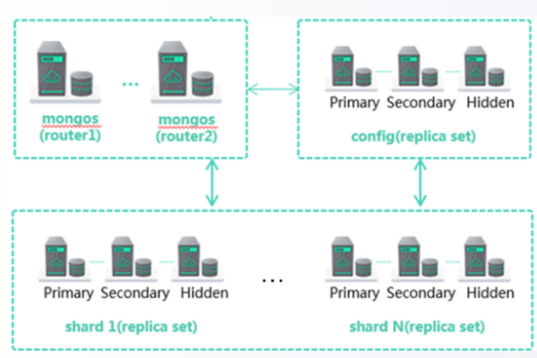

Huawei cloud database DDS products are deeply enabled

How did the beyond concert 31 years ago get super clean and repaired?

Go zero micro service practical series (IX. ultimate optimization of seckill performance)

随机推荐

%s格式符

C implementation defines a set of intermediate SQL statements that can be executed across libraries

Usage of database functions "recommended collection"

Unity script lifecycle day02

MySQL - MySQL adds self incrementing IDs to existing data tables

[flask] ORM one to many relationship

How can floating point numbers be compared with 0?

What encryption algorithm is used for the master password of odoo database?

Unity script API - component component

. Net applications consider x64 generation

Unity script introduction day01

MySQL学习笔记——数据类型(数值类型)

[Dalian University of technology] information sharing of postgraduate entrance examination and re examination

MySQL learning notes - data type (2)

.Net 应用考虑x64生成

Unity prefab day04

Unity脚本API—GameObject游戏对象、Object 对象

31年前的Beyond演唱会,是如何超清修复的?

An article learns variables in go language

LeetCode 35. Search the insertion position - vector traversal (O (logn) and O (n) - binary search)