
ACL 2021 Best Paper Announced, and It Comes from ByteDance

2022-07-27 09:59:00 (51CTO)


The ACL 2021 best paper was announced today. It comes from ByteDance AI Lab: 「Vocabulary Learning via Optimal Transport for Neural Machine Translation」.

This paper had a rather bumpy journey. It was first submitted to ICLR 2021, where it received scores of only 4, 4, 3, 3 (out of 10) and was likely headed for rejection. The authors did post a rebuttal, but because they wanted to move the paper to ACL 2021, they withdrew the submission. After repeated polishing and revision that improved both the method and the experiments, the paper received high scores at ACL 2021 and was named best paper.

「ACL 2021 accepted papers:」

https://2021.aclweb.org/program/accept

「Paper:」

https://arxiv.org/abs/2012.15671

「Source code:」

https://github.com/Jingjing-NLP/VOLT

ByteDance AI Lab has produced a lot this year. Earlier it open-sourced LightSeq, billed as the industry's first engine that accelerates the entire NLP model training and inference pipeline: https://github.com/bytedance/lightseq

It also open-sourced NeurST, a Transformer training library built on TensorFlow: https://github.com/bytedance/neurst

Because the scores at the two conferences differed so much, a heated discussion quickly broke out on Zhihu, and several of the authors posted detailed explanations. Below are the answers from two of the original authors.

「Question link:」

https://www.zhihu.com/question/470224094

Zhihu user @WAZWY

https://www.zhihu.com/question/470224094/answer/1980448588

I am one of the authors of this paper. A colleague in our company group chat just sent me the link to this question. I'm surprised that people are paying so much attention to our paper, and so quickly. Thank you very much. The code is still being cleaned up; once it's ready, you're welcome to use it. I hope everyone will try VOLT. It surely still has many shortcomings, and we welcome your feedback.

First of all, congratulations to @Xu Jingjing. It wasn't easy!!!

Now to the question itself, the move from ICLR to ACL. Here's what happened: when we submitted to ICLR, we spent too much time on experiments and not enough on the writing. To put it bluntly, the paper never explained the intuition, and some important experiments were still missing. You can see the result. I think this is an important lesson, and you're welcome to compare the two versions of our paper...

Takeaway: do good work and don't fret about the outcome. Still, work hard to polish every aspect of the paper and do a solid job, rather than submitting just because a convenient deadline comes along. arXiv makes it so easy to share work now; once you yourself are satisfied with it, posting it on arXiv is enough.

PS: Why did we withdraw from ICLR?

The premise of this question isn't quite accurate. We actually did post a rebuttal, and the ICLR reviewers gave very good advice, which we respected and took on board. At the time, ACL had a policy that a paper not withdrawn from ICLR by a specified date could not be submitted to ACL, because ICLR's open review conflicted with ACL's rules. We specifically wrote to the PCs to confirm this, and then withdrew the paper. Later, ACL adjusted the policy in a very considerate way, but that's another story.

PSS: You may also be interested in another of our papers that was rejected by ICLR and then received high scores at ACL: GLAT: Glancing Transformer for Non-Autoregressive Neural Machine Translation. Its ICLR submission was titled "Non-iterative Parallel Text Generation via Glancing Transformer". We were also very confident in GLAT, but we were a bit rushed, which led to poor writing. In fact, it works very well.

GLAT is already deployed in Volcano Translate inside ByteDance, and part of the translation traffic on TikTok is served by GLAT. The more data there is, the better GLAT does. We entered GLAT in this year's WMT translation evaluation, and in the high-resource German-to-English (constrained) and English-to-German (unconstrained) tasks, GLAT ranked first in BLEU score in both directions. This shows that parallel (non-autoregressive) generation models are not necessarily worse than autoregressive ones, and may even be better. Stay tuned!

=======================

Update, added a little while after my first answer: I personally disagree with the anonymous answer above claiming that this "shows peer review is just a lottery, no matter what the work is." Both rounds of review were of very high quality. Calling review a lottery without even having read the paper and the reviews is a bit irresponsible and misleading, and it may give junior students the wrong idea about what the contribution is. I hope people will read the paper first.

Zhihu user @Xu Jingjing

https://www.zhihu.com/question/470224094/answer/1980633745

Thank you for your interest in this work. I'm Xu Jingjing, one of its authors, and usually just an ordinary bystander following the gossip in the NLP community; I just didn't expect to become the gossip myself this time orz. Here I'd like to briefly share my answer to this question and the experience and lessons I took away from this submission.

First, the most important lesson I learned is to write things clearly. Honestly, our ICLR version really was not well written. The reviewers' feedback focused on three points: the experiments were insufficient, the method was not described clearly, and there was no direct evidence for the motivation. We improved all of these substantially in the ACL version. We added many follow-up experiments and rewrote the paper from scratch, checking again and again whether the logic held together and whether the experiments were rigorous and sufficient. The whole process was very painful, so we were thrilled when ACL recognized the work; all that hard work finally paid off.

Second, don't rush to submit. After we finished the work, we thought it was quite interesting, and to make the ICLR deadline we wrote it up in a hurry, which left all sorts of problems. As a result, the ICLR reviewers taught us a lesson. One thing I learned from this submission is to be fully prepared before submitting; otherwise you create unnecessary work for the reviewers and get schooled by them at every turn.

Third, negative feedback is not a dismissal of the work but an important source of progress. There are many precedents of rejected papers that went on to earn high acclaim, for example the best paper on the Lottery Ticket Hypothesis, ELMo (the originator of pre-training), LayerNorm, and knowledge distillation (KD). I'm not claiming our work is comparable to them (of course we also want to do genuinely useful work, and these papers have always been our role models), but I'd like everyone to look at this issue objectively. Many people see negative feedback as a verdict against the work, but from another angle, it is also an important driver of progress. Although it has been stressful to be discussed by so many people this time, we're glad it gets people thinking about negative feedback. The next time your paper is rejected, remember that even Hinton's papers have been rejected. Doesn't that make you feel more confident?

Fourth, NLP conference papers are not necessarily worse than ML conference papers. Many excellent papers, such as BERT and ELMo, also earned top honors at NLP conferences, while some work at ML conferences has been forgotten. The number of papers at major conferences has indeed grown a lot recently, and some weak papers do get accepted, but the number of good papers has grown too. NLP conferences care more about NLP itself, whereas ML conferences focus more on algorithms. Our work studied vocabulary construction. To ML researchers this may look like a minor problem, but in NLP the vocabulary is something everyone uses every day, so the NLP community may value our work more.

Finally, a small plug: in this work we studied the problem of vocabulary learning and reached some interesting conclusions. We plan to open-source the code soon, so please try it out then. A senior researcher once said that research is a long-term endeavor: no matter how many honors you receive in the short term, what matters is whether what you build lasts. We very much hope to do that kind of work.

If you have comments or suggestions on this work, or questions about revising a paper, feel free to add me on WeChat: xujingjingpku

One last clarification, about a question on our NAS paper: we first submitted it to NeurIPS on May 27, 2020; after it was not accepted there, we submitted it to ICLR, where it was recently accepted. The "without training" paper appeared on arXiv on June 8, 2020, so strictly speaking the two are concurrent work.

- END -

I'm godweiyang, from the Department of Computer Science at East China Normal University, and an NLP algorithm engineer at ByteDance AI Lab. During campus recruiting I received SSP offers from three major internet companies in Shanghai. My main research areas are machine translation, syntactic parsing, and model compression and acceleration. My biggest traits are a good temper and patience, so feel free to ask me anything at any time, whether about tech or about life.


边栏推荐

- Expose a technology boss from a poor family

- 蚂蚁集团境外站点 Seata 实践与探索

- 超赞的卡尔曼滤波详解文章

- About getter/setter methods

- GBase 8a MPP集群扩容实战

- QT | about the problem that QT creator cannot open the project and compile it

- 去 OPPO 面试,被问麻了

- When I went to oppo for an interview, I got numb

- Exercises --- quick arrangement, merging, floating point number dichotomy

- How to install cpolar intranet penetration on raspberry pie

猜你喜欢

Write yourself a year-end summary. Happy New Year!

Review of in vivo detection

LeetCode.814. 二叉树剪枝____DFS

![WordPress prohibits login or registration of plug-ins with a specified user name [v1.0]](/img/94/92ad89751e746a18edf80296db9188.png)

WordPress prohibits login or registration of plug-ins with a specified user name [v1.0]

圆环工件毛刺(凸起)缺口(凹陷)检测案例

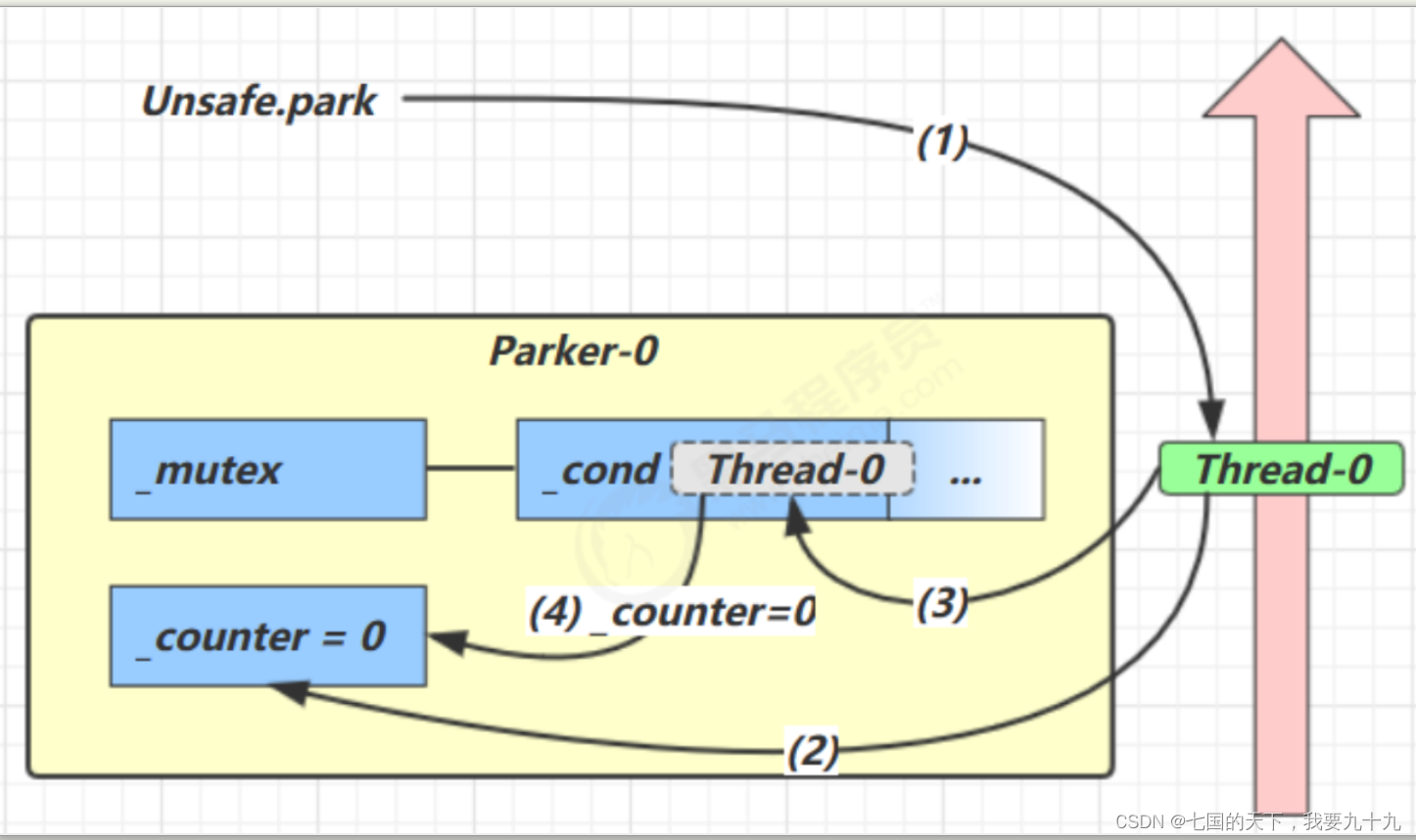

并发之park与unpark说明

What age are you still using date

![[SCM]源码管理 - perforce 分支的锁定](/img/c6/daead474a64a9a3c86dd140c097be0.jpg)

[SCM]源码管理 - perforce 分支的锁定

刷题《剑指Offer》day03

Snowflake vs. Databricks谁更胜一筹?2022年最新战报

随机推荐

Review summary of engineering surveying examination

Brush the title "sword finger offer" day04

刷题《剑指Offer》day03

蚂蚁集团境外站点 Seata 实践与探索

July training (day 10) - bit operation

Understand chisel language. 26. Chisel advanced input signal processing (II) -- majority voter filtering, function abstraction and asynchronous reset

吃透Chisel语言.27.Chisel进阶之有限状态机(一)——基本有限状态机(Moore机)

July training (day 17) - breadth first search

System parameter constant table of system architecture:

About getter/setter methods

达梦 PARTGROUPDEF是自定义的对象吗?

35 spark streaming backpressure mechanism, spark data skew solution and kylin's brief introduction

Summary of engineering material knowledge points (full)

The command prompt cannot start mysql, prompting system error 5. Access denied. terms of settlement

c'mon! Please don't ask me about ribbon's architecture principle during the interview

直播倒计时 3 天|SOFAChannel#29 基于 P2P 的文件和镜像加速系统 Dragonfly

Concurrent Park and unpark description

Shell的正则表达式入门、常规匹配、特殊字符:^、$、.、*、字符区间(中括号):[ ]、特殊字符:\、匹配手机号

食品安全 | 还在吃酵米面吗?当心这些食物有毒!

注解与反射