
【torch】|torch.nn.utils.clip_grad_norm_

2022-07-06 05:18:00 【rrr2】

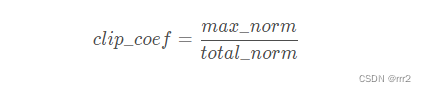

The larger the gradients, the larger total_norm becomes, which makes clip_coef smaller (clip_coef is max_norm divided by total_norm plus a small epsilon), so the gradients end up being clipped more severely. This is reasonable.
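To make the relationship concrete, here is a minimal pure-Python sketch of the arithmetic. It is not the PyTorch implementation: the helper name `clip_coef_for` and the sample gradient values are hypothetical, and the epsilon of `1e-6` is an assumption based on my reading of the PyTorch source.

```python
def clip_coef_for(grads, max_norm, norm_type=2.0, eps=1e-6):
    """Return (total_norm, clip_coef) for a flat list of gradient values.

    Hypothetical helper that mirrors the formula only; eps=1e-6 is an assumption.
    """
    total_norm = sum(abs(g) ** norm_type for g in grads) ** (1.0 / norm_type)
    return total_norm, max_norm / (total_norm + eps)


small_grads = [0.3, -0.4]    # L2 norm of about 0.5
large_grads = [30.0, -40.0]  # L2 norm of 50

small_norm, small_coef = clip_coef_for(small_grads, max_norm=10.0)
large_norm, large_coef = clip_coef_for(large_grads, max_norm=10.0)

for name, norm, coef in (("small", small_norm, small_coef),
                         ("large", large_norm, large_coef)):
    # Gradients are only scaled down, never up, so the factor is capped at 1.
    scale = min(coef, 1.0)
    print(f"{name}: total_norm={norm:.4f} clip_coef={coef:.4f} applied scale={scale:.4f}")
```

With the same max_norm, the larger gradient vector gets the smaller coefficient, while the small one is left untouched because its coefficient is above 1.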

Whatever value norm_type takes, its effect on total_norm is not very large (the gap between 1 and 2 is somewhat larger than the rest), so you can simply use the default value of 2.

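The larger norm_type is, the smaller total_norm is. (This is a conclusion I observed in experiments; my math is not good enough to prove it, so this article is not necessarily right.) It is at least consistent with the standard result that, for a fixed vector, the p-norm does not increase as p grows.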
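The observation about norm_type can be checked with a small pure-Python sketch. The helper `p_norm` and the sample gradient values are hypothetical, and the code only illustrates the norm itself, not what PyTorch does internally.

```python
def p_norm(values, p):
    """p-norm of a flat list of numbers; p may be float('inf')."""
    if p == float("inf"):
        return max(abs(v) for v in values)
    return sum(abs(v) ** p for v in values) ** (1.0 / p)


grads = [3.0, -4.0, 1.0, 0.5]  # hypothetical flattened gradient values
orders = [1, 2, 3, 4, float("inf")]
norms = [p_norm(grads, p) for p in orders]

for p, n in zip(orders, norms):
    print(f"norm_type={p}: total_norm={n:.4f}")

# Differences between consecutive orders, to see where the biggest gap is.
gaps = [a - b for a, b in zip(norms, norms[1:])]
print("gaps between consecutive norm_type values:", [round(g, 4) for g in gaps])
```

For this sample vector the norm shrinks as norm_type grows, and the step from 1 to 2 is the largest one, which matches both observations above.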

    ...
    loss = crit(...)
    optimizer.zero_grad()
    loss.backward()
    torch.nn.utils.clip_grad_norm_(parameters=model.parameters(), max_norm=10, norm_type=2)
    optimizer.step()
    ...
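For a version of the snippet above that runs on its own, here is a minimal sketch. The tiny `nn.Linear` model, the random data, the learning rate, and the input scaling are all assumptions chosen only so that there is something to clip; only the order of the calls comes from the snippet.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical model, loss, and data, used only for illustration.
model = nn.Linear(4, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
crit = nn.MSELoss()
x = torch.randn(8, 4) * 100.0  # large inputs tend to produce large gradients
y = torch.randn(8, 1)

loss = crit(model(x), y)
optimizer.zero_grad()
loss.backward()

# Clip after backward() and before step(); the return value is the total
# gradient norm measured before clipping.
max_norm = 10.0
total_norm = torch.nn.utils.clip_grad_norm_(
    parameters=model.parameters(), max_norm=max_norm, norm_type=2
)

# Recompute the overall L2 norm of the gradients after clipping.
clipped_norm = torch.stack([p.grad.norm(2) for p in model.parameters()]).norm(2)

optimizer.step()
print(f"norm before clipping: {float(total_norm):.4f}")
print(f"norm after clipping:  {float(clipped_norm):.4f}")
```

Logging the returned total_norm during training is a simple way to judge whether the chosen max_norm is actually being hit.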

The smaller clip_coef is, the more severely the gradients are clipped, that is, the more the gradient values are scaled down. (Scaling is applied only when clip_coef is below 1.)

The smaller max_norm is, the smaller clip_coef is. So a larger max_norm handles exploding gradients more gently, and a smaller max_norm handles them more aggressively. max_norm does not have to be an integer; it can be a decimal.
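The effect of max_norm can be sketched in pure Python as follows. The helper `clip_by_norm`, the sample gradient, and the list of max_norm values are hypothetical, and the epsilon of `1e-6` is again an assumption rather than something stated in this post.

```python
def clip_by_norm(grads, max_norm, norm_type=2.0, eps=1e-6):
    """Scale a flat list of gradients so its norm is at most about max_norm.

    Hypothetical helper; returns (clipped_grads, scale).
    """
    total_norm = sum(abs(g) ** norm_type for g in grads) ** (1.0 / norm_type)
    scale = min(max_norm / (total_norm + eps), 1.0)  # never scale gradients up
    return [g * scale for g in grads], scale


grads = [6.0, -8.0]  # L2 norm of 10
results = {}
for max_norm in (0.5, 5.0, 20.0):  # a decimal max_norm works too
    clipped, scale = clip_by_norm(grads, max_norm)
    results[max_norm] = (clipped, scale)
    print(f"max_norm={max_norm}: scale={scale:.4f} clipped={clipped}")
```

A max_norm above the current gradient norm leaves the gradients unchanged, while smaller values shrink them proportionally more.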

ref

https://blog.csdn.net/Mikeyboi/article/details/119522689

边栏推荐

- EditorUtility.SetDirty在Untiy中的作用以及应用

- Nacos TC setup of highly available Seata (02)

- Sorting out the knowledge points of multicast and broadcasting

- EditorUtility. The role and application of setdirty in untiy

- Three.js学习-光照和阴影(了解向)

- 树莓派3.5寸屏幕白屏显示连接

- Pagoda configuration mongodb

- Collection + interview questions

- MySQL if and ifnull use

- Implementing fuzzy query with dataframe

猜你喜欢

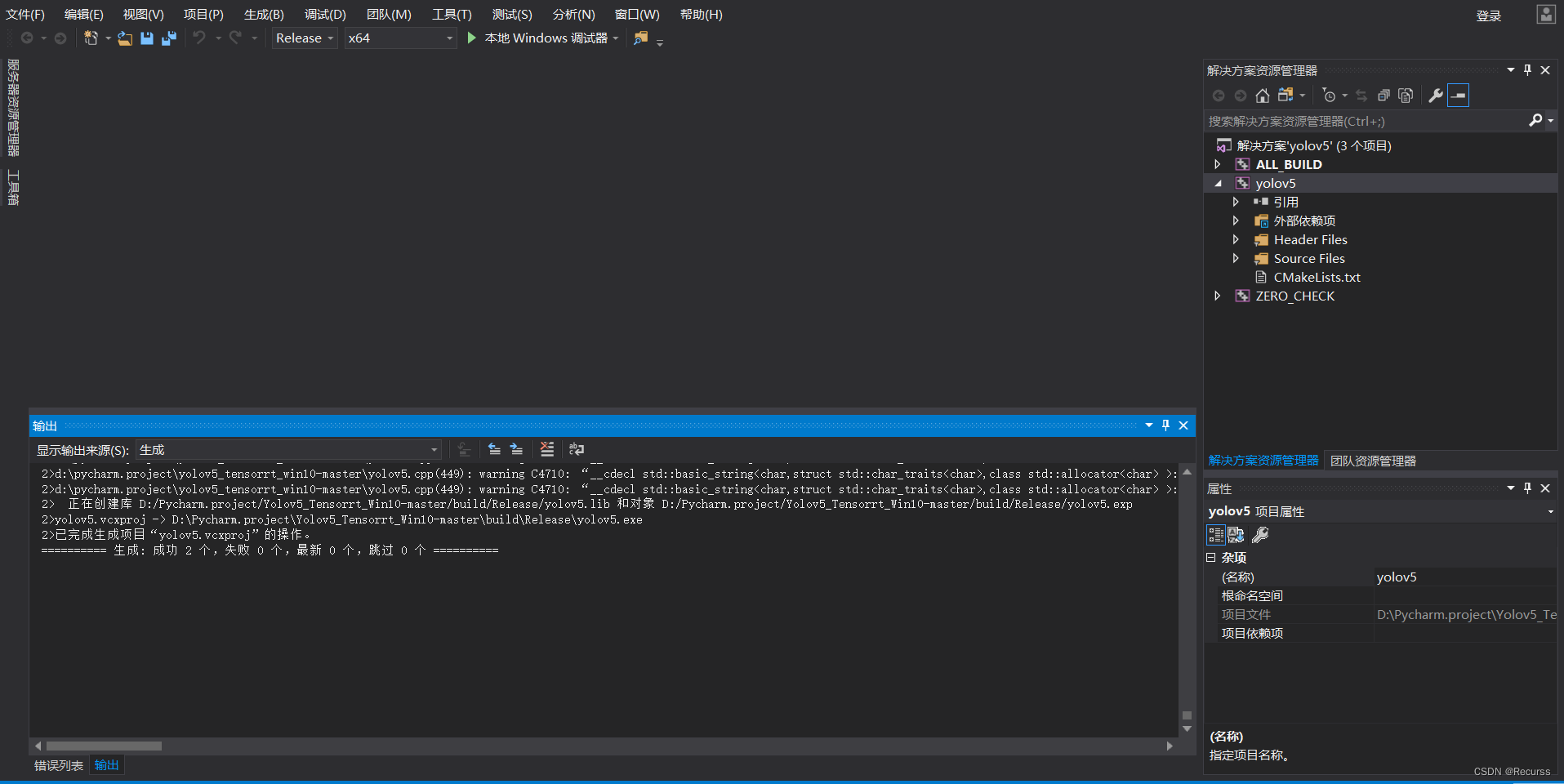

yolov5 tensorrt加速

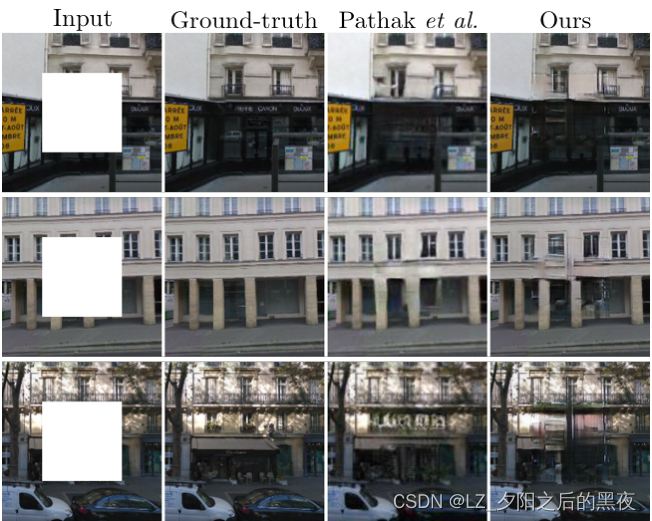

Pix2pix: image to image conversion using conditional countermeasure networks

Codeforces Round #804 (Div. 2)

Fiddler installed the certificate, or prompted that the certificate is invalid

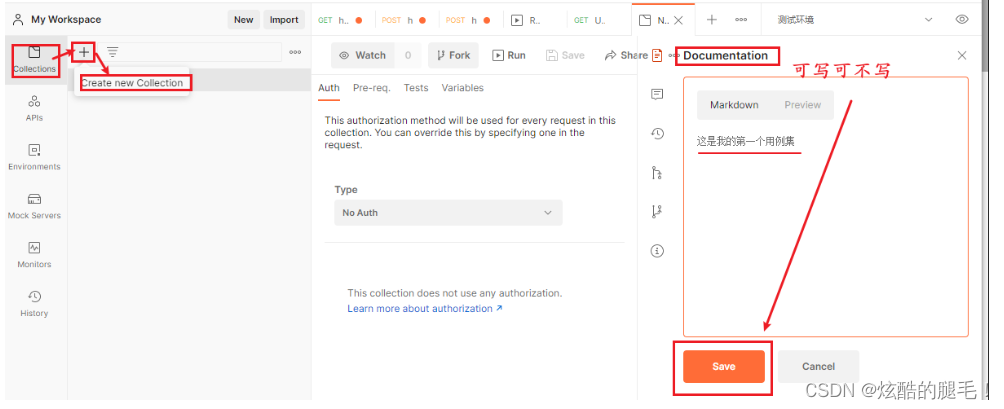

Postman manage test cases

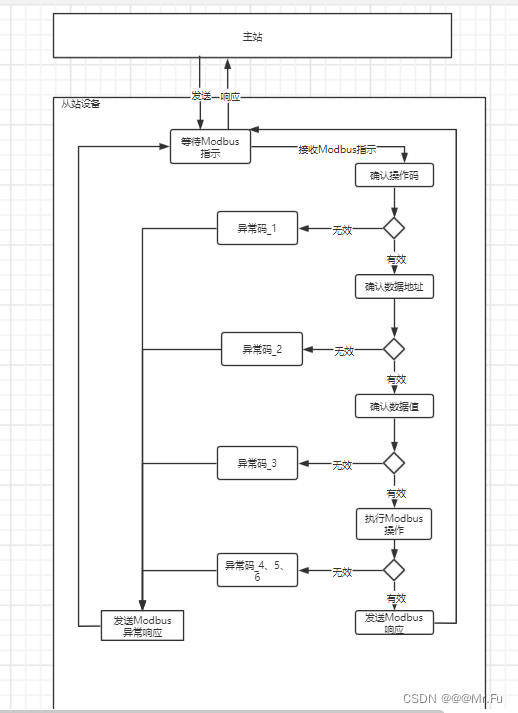

Modbus protocol communication exception

Questions d'examen écrit classiques du pointeur

Three methods of Oracle two table Association update

Pointer classic written test questions

用StopWatch 统计代码耗时

随机推荐

Cve-2019-11043 (PHP Remote Code Execution Vulnerability)

SQLite add index

Fluent implements a loadingbutton with loading animation

Golang -- TCP implements concurrency (server and client)

Postman Association

Select knowledge points of structure

Huawei od computer test question 2

[buuctf.reverse] 159_ [watevrCTF 2019]Watshell

In 2022, we must enter the big factory as soon as possible

Pickle and savez_ Compressed compressed volume comparison

毕业设计游戏商城

HAC集群修改管理员用户密码

Why does MySQL need two-phase commit

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

Postman manage test cases

【OSPF 和 ISIS 在多路访问网络中对掩码的要求】

Mongodb basic knowledge summary

Modbus protocol communication exception

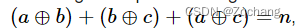

Codeforces Round #804 (Div. 2) Editorial(A-B)