
[pytorch] resnet18, resnet20, resnet34, resnet50 network structure and Implementation

2022-07-27 08:00:00 【leSerein_】


This post analyzes a classic, early version of the official PyTorch (torchvision) implementation:

https://github.com/pytorch/vision/blob/9a481d0bec2700763a799ff148fe2e083b575441/torchvision/models/resnet.py

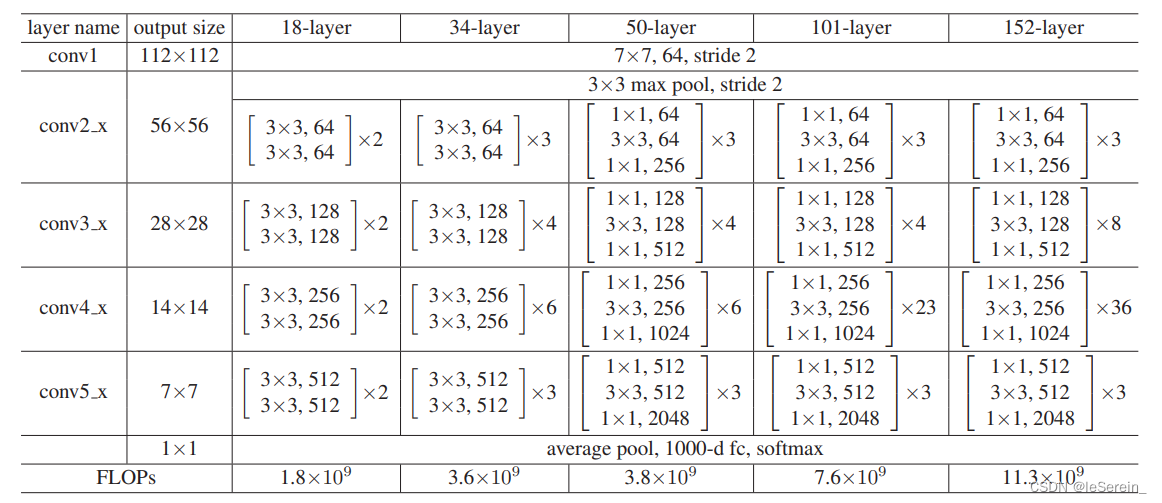

Every ResNet variant is built from either BasicBlock or Bottleneck, the basic modules of deep residual networks.

ResNet main body

Most ResNet variants consist of 1 conv layer + 4 stages of blocks + 1 fc layer.

    import torch.nn as nn


    def conv3x3(in_planes, out_planes, stride=1):
        """3x3 convolution with padding"""
        return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                         padding=1, bias=False)


    def conv1x1(in_planes, out_planes, stride=1):
        """1x1 convolution"""
        return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride,
                         bias=False)


    class ResNet(nn.Module):

        def __init__(self, block, layers, num_classes=1000, zero_init_residual=False):
            super(ResNet, self).__init__()
            self.inplanes = 64
            self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3,
                                   bias=False)
            self.bn1 = nn.BatchNorm2d(64)
            self.relu = nn.ReLU(inplace=True)
            self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
            self.layer1 = self._make_layer(block, 64, layers[0])
            self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
            self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
            self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
            self.fc = nn.Linear(512 * block.expansion, num_classes)

            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                elif isinstance(m, nn.BatchNorm2d):
                    nn.init.constant_(m.weight, 1)
                    nn.init.constant_(m.bias, 0)

            # Zero-initialize the last BN in each residual branch,
            # so that the residual branch starts with zeros, and each residual block behaves like an identity.
            # This improves the model by 0.2~0.3% according to https://arxiv.org/abs/1706.02677
            if zero_init_residual:
                for m in self.modules():
                    if isinstance(m, Bottleneck):
                        nn.init.constant_(m.bn3.weight, 0)
                    elif isinstance(m, BasicBlock):
                        nn.init.constant_(m.bn2.weight, 0)

        def _make_layer(self, block, planes, blocks, stride=1):
            downsample = None
            if stride != 1 or self.inplanes != planes * block.expansion:
                # needed when stride = 2 or when the channel count changes
                downsample = nn.Sequential(
                    conv1x1(self.inplanes, planes * block.expansion, stride),
                    nn.BatchNorm2d(planes * block.expansion),
                )

            layers = []
            layers.append(block(self.inplanes, planes, stride, downsample))
            self.inplanes = planes * block.expansion
            for _ in range(1, blocks):
                # only the first block downsamples; the rest use stride = 1 and no downsample
                layers.append(block(self.inplanes, planes))

            return nn.Sequential(*layers)

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)

            c2 = self.layer1(x)
            c3 = self.layer2(c2)
            c4 = self.layer3(c3)
            c5 = self.layer4(c4)

            # the head must pool c5 (512 * block.expansion channels), not the stem output
            x = self.avgpool(c5)
            x = x.view(x.size(0), -1)
            x = self.fc(x)

            # return c5 instead to use the network as a feature extractor
            return x

Note that the final avgpool is a global average pooling layer.
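To see how the stem and the four stages shrink the feature map, the sketch below traces the spatial size with the standard convolution output-size formula. It is plain Python rather than PyTorch; the helper names `conv_out` and `resnet_feature_sizes` and the 224×224 input are assumptions for illustration, not part of the official code.

```python
def conv_out(size, kernel, stride, padding):
    """Side length after a conv/pool layer on a square input (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet_feature_sizes(input_size=224):
    """Trace the spatial size through conv1, maxpool and layer1..layer4."""
    sizes = {}
    s = conv_out(input_size, kernel=7, stride=2, padding=3)  # conv1
    sizes["conv1"] = s
    s = conv_out(s, kernel=3, stride=2, padding=1)  # maxpool
    sizes["maxpool"] = s
    for name, stride in [("layer1", 1), ("layer2", 2), ("layer3", 2), ("layer4", 2)]:
        # the 3x3 conv (kernel 3, padding 1) in the first block carries the stride
        s = conv_out(s, kernel=3, stride=stride, padding=1)
        sizes[name] = s
    sizes["avgpool"] = 1  # AdaptiveAvgPool2d((1, 1)) always outputs 1x1
    return sizes


if __name__ == "__main__":
    for name, size in resnet_feature_sizes(224).items():
        print(f"{name}: {size}x{size}")
```

Because the last pooling layer is adaptive, the fc layer sees the same number of features whatever the input resolution is.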

BasicBlock

    class BasicBlock(nn.Module):
        expansion = 1

        def __init__(self, inplanes, planes, stride=1, downsample=None):
            # planes is the number of output channels of the block
            super(BasicBlock, self).__init__()
            self.conv1 = conv3x3(inplanes, planes, stride)
            self.bn1 = nn.BatchNorm2d(planes)
            self.relu = nn.ReLU(inplace=True)
            self.conv2 = conv3x3(planes, planes)
            self.bn2 = nn.BatchNorm2d(planes)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            identity = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)

            # project the shortcut when its shape differs from the residual branch
            if self.downsample is not None:
                identity = self.downsample(x)

            out += identity
            out = self.relu(out)

            return out

ResNet18

The corresponding layer configuration is [2, 2, 2, 2].

    def resnet18(pretrained=False, **kwargs):
        """Constructs a ResNet-18 model.

        Args:
            pretrained (bool): If True, returns a model pre-trained on ImageNet
        """
        model = ResNet(BasicBlock, [2, 2, 2, 2], **kwargs)
        if pretrained:
            print('Loading the pretrained model ...')
            # model_zoo and model_urls are defined at the top of the linked resnet.py
            # strict = False as we don't need fc layer params.
            model.load_state_dict(model_zoo.load_url(model_urls['resnet18']), strict=False)
        return model

ResNet34

    def resnet34(pretrained=False, **kwargs):
        """Constructs a ResNet-34 model.

        Args:
            pretrained (bool): If True, returns a model pre-trained on ImageNet
        """
        model = ResNet(BasicBlock, [3, 4, 6, 3], **kwargs)
        if pretrained:
            print('Loading the pretrained model ...')
            model.load_state_dict(model_zoo.load_url(model_urls['resnet34']), strict=False)
        return model

ResNet20

This one deserves emphasis: the original ResNet20 is the network proposed in the paper for the CIFAR dataset. With n = 3, the depth is 1 + 6×3 + 1 = 20 layers.
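The depth arithmetic can be checked with a few lines of Python. This is only a sketch of the 6n + 2 rule; the function name `cifar_resnet_depth` is made up for illustration.

```python
def cifar_resnet_depth(n):
    """Depth of a CIFAR ResNet with n BasicBlocks per stage."""
    stem = 1            # the first 3x3 conv
    stages = 3 * n * 2  # 3 stages, n blocks each, 2 convs per block
    fc = 1              # the final fully connected layer
    return stem + stages + fc


if __name__ == "__main__":
    for n in (3, 5, 7, 9, 18):
        print(f"n={n}: ResNet{cifar_resnet_depth(n)}")
```

With n = 3, 5, 7, 9 and 18 this gives the 20, 32, 44, 56 and 110-layer networks from the paper's CIFAR experiments.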

    class ResNet4Cifar(nn.Module):

        def __init__(self, block, num_block, num_classes=10):
            super().__init__()
            self.in_channels = 16
            self.conv1 = nn.Sequential(
                nn.Conv2d(3, 16, kernel_size=3, padding=1, bias=False),
                nn.BatchNorm2d(16),
                nn.ReLU(inplace=True))
            # we use a different inputsize than the original paper
            # so conv2_x's stride is 1
            self.conv2_x = self._make_layer(block, 16, num_block[0], 1)
            self.conv3_x = self._make_layer(block, 32, num_block[1], 2)
            self.conv4_x = self._make_layer(block, 64, num_block[2], 2)
            self.avg_pool = nn.AdaptiveAvgPool2d((1, 1))
            self.fc = nn.Linear(64 * block.expansion, num_classes)

        def _make_layer(self, block, out_channels, num_blocks, stride):
            strides = [stride] + [1] * (num_blocks - 1)
            layers = []
            for stride in strides:
                downsample = None
                if stride != 1 or self.in_channels != out_channels * block.expansion:
                    # BasicBlock has no projection of its own, so build one whenever
                    # the shortcut and the residual branch would differ in shape
                    downsample = nn.Sequential(
                        nn.Conv2d(self.in_channels, out_channels * block.expansion,
                                  kernel_size=1, stride=stride, bias=False),
                        nn.BatchNorm2d(out_channels * block.expansion),
                    )
                layers.append(block(self.in_channels, out_channels, stride, downsample))
                self.in_channels = out_channels * block.expansion
            return nn.Sequential(*layers)

        def forward(self, x):
            output = self.conv1(x)
            output = self.conv2_x(output)
            output = self.conv3_x(output)
            output = self.conv4_x(output)
            output = self.avg_pool(output)
            output = output.view(output.size(0), -1)
            output = self.fc(output)
            return output


    def resnet20(num_classes=10, **kargs):
        """ return a ResNet 20 object """
        return ResNet4Cifar(BasicBlock, [3, 3, 3], num_classes=num_classes)

The parameter count comes out to 0.27M, consistent with the paper. For an input of shape [1, 3, 32, 32], the last stage (conv4_x) outputs a feature map of shape [1, 64, 8, 8].

However, some papers only change the first layers to 3x3 convolutions and do not use 16, 32, 64 channels; they keep the four stages with 64, 128, 256, 512 channels, so the parameter count is 11.25M. This varies from task to task, and if you are not concerned with matching the original network structure, the difference can be ignored.
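The 0.27M figure can be reproduced by hand. The sketch below counts convolution, BatchNorm and fc parameters for the 16/32/64-channel CIFAR network in plain Python. The helper names are hypothetical, and the `projection_shortcut` flag is an assumption covering two common shortcut choices: parameter-free shortcuts, or a 1x1 conv + BatchNorm wherever the channel count changes.

```python
def conv_params(c_in, c_out, k):
    """Weights of a k x k convolution without bias."""
    return c_in * c_out * k * k


def bn_params(channels):
    """BatchNorm2d has one weight and one bias per channel."""
    return 2 * channels


def resnet20_params(num_classes=10, n=3, projection_shortcut=False):
    total = conv_params(3, 16, 3) + bn_params(16)  # stem
    c_in = 16
    for c_out in (16, 32, 64):  # the three stages
        for _ in range(n):      # n BasicBlocks per stage
            total += conv_params(c_in, c_out, 3) + bn_params(c_out)
            total += conv_params(c_out, c_out, 3) + bn_params(c_out)
            if projection_shortcut and c_in != c_out:
                # 1x1 conv + BN on the shortcut of the first block of a stage
                total += conv_params(c_in, c_out, 1) + bn_params(c_out)
            c_in = c_out
    total += 64 * num_classes + num_classes  # fc weights and bias
    return total


if __name__ == "__main__":
    print(resnet20_params())                          # 269722
    print(resnet20_params(projection_shortcut=True))  # 272474
```

Both variants round to 0.27M, so the choice of shortcut barely affects the total.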

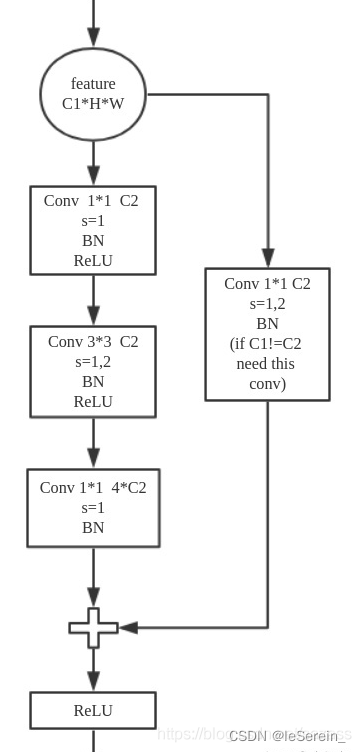

Bottleneck Block

The Bottleneck block uses 1×1 convolution layers. If the input has 256 channels, the first 1×1 convolution reduces the number of channels to 64; after the 3×3 convolution, a second 1×1 convolution raises it back to 256. The benefit of the 1×1 convolutions is that, in deeper networks, inputs with many channels can be processed with relatively few parameters.

This structure is used in ResNet50 and ResNet101.
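A quick count shows how much the 1×1 layers save. The sketch below compares the convolution weights of a 256 → 64 → 64 → 256 bottleneck with two plain 3×3 convolutions kept at 256 channels. That baseline is an assumption chosen for comparison, and `conv_weights` is a hypothetical helper; BatchNorm parameters are ignored.

```python
def conv_weights(c_in, c_out, k):
    """Weights of a k x k convolution without bias."""
    return c_in * c_out * k * k


# Bottleneck: 1x1 reduce, 3x3 at low width, 1x1 expand
bottleneck = (conv_weights(256, 64, 1)
              + conv_weights(64, 64, 3)
              + conv_weights(64, 256, 1))

# Baseline: two 3x3 convolutions at the full 256 channels
plain = 2 * conv_weights(256, 256, 3)

if __name__ == "__main__":
    print(bottleneck)          # 69632
    print(plain)               # 1179648
    print(plain / bottleneck)  # roughly 17x fewer weights
```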

    class Bottleneck(nn.Module):
        expansion = 4

        def __init__(self, inplanes, planes, stride=1, downsample=None):
            super(Bottleneck, self).__init__()
            self.conv1 = conv1x1(inplanes, planes)
            self.bn1 = nn.BatchNorm2d(planes)
            self.conv2 = conv3x3(planes, planes, stride)
            self.bn2 = nn.BatchNorm2d(planes)
            self.conv3 = conv1x1(planes, planes * self.expansion)
            self.bn3 = nn.BatchNorm2d(planes * self.expansion)
            self.relu = nn.ReLU(inplace=True)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            identity = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)
            out = self.relu(out)

            out = self.conv3(out)
            out = self.bn3(out)

            if self.downsample is not None:
                identity = self.downsample(x)

            out += identity
            out = self.relu(out)

            return out

ResNet50

The overall network structure is the same as above; just stack Bottleneck blocks according to the layer counts.

    def resnet50(pretrained=False, **kwargs):
        """Constructs a ResNet-50 model.

        Args:
            pretrained (bool): If True, returns a model pre-trained on ImageNet
        """
        model = ResNet(Bottleneck, [3, 4, 6, 3], **kwargs)
        if pretrained:
            print('Loading the pretrained model ...')
            model.load_state_dict(model_zoo.load_url(model_urls['resnet50']), strict=False)
        return model
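The names ResNet18, ResNet34 and ResNet50 follow directly from the block type and the layer list. As a rough sketch, the hypothetical helper `resnet_summary` below derives the depth and the input width of the fc layer from the two values each block class contributes: the number of convolutions per block and `expansion`.

```python
def resnet_summary(convs_per_block, expansion, layers):
    """Return (depth, fc_in_features) for an ImageNet-style ResNet."""
    # 1 stem conv + all convs inside the blocks + 1 fc layer
    depth = 1 + convs_per_block * sum(layers) + 1
    # the last stage has 512 * expansion output channels
    fc_in_features = 512 * expansion
    return depth, fc_in_features


if __name__ == "__main__":
    print(resnet_summary(2, 1, [2, 2, 2, 2]))  # BasicBlock  -> (18, 512)
    print(resnet_summary(2, 1, [3, 4, 6, 3]))  # BasicBlock  -> (34, 512)
    print(resnet_summary(3, 4, [3, 4, 6, 3]))  # Bottleneck  -> (50, 2048)
```

ResNet34 and ResNet50 share the same [3, 4, 6, 3] layout; only the block type differs. The downsample convolutions on the shortcuts are not counted in the depth.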

What problem does ResNet solve?

I recommend reading the Q&A thread "What problem is ResNet actually solving?"

Here are some of my favorite answers:

A. For an $L$-layer network, the gradient correlation of a plain net without residuals decays as $\frac{1}{2^L}$, whereas in ResNet it decays only as $\frac{1}{\sqrt{L}}$. Even though BN keeps the gradient norm within a normal range, the gradient correlation still keeps decreasing as the number of layers grows. It has been shown that ResNet effectively slows down the decay of this correlation.

B. From the "vanishing gradient" point of view: adding an identity mapping of the input x to the output introduces a constant 1 into the gradient. Such a network is indeed less prone to abnormal gradient values, so in a sense the shortcut stabilizes the gradient.

C. Adding skip connections combines features of different resolutions: shallow layers tend to produce high-resolution features with low-level semantics, while deep features carry high-level semantics at very low resolution. Skip connections also give the model a more "flexible" structure: during training, each part of the model can choose between "more convolution and nonlinear transformation" and "leaning towards doing nothing", or a mix of the two. The model can thus adapt its own structure through training.

D. With a residual network, that is, with skip connections added, the task of a building block changes from F(x) := H(x) to F(x) := H(x) - x. Comparing the two functions to be fitted, the residual mapping is easier to optimize; in other words, F(x) := H(x) - x is easier to optimize than F(x) := H(x). A differential-amplifier example illustrates this: F is the network mapping before the summation, and H is the mapping from the input to the output after the summation. Suppose 5 is mapped to 5.1. Without the residual, F'(5) = 5.1. With the residual, H(5) = 5.1 and H(5) = F(5) + 5, so F(5) = 0.1. Here F' and F both denote mappings defined by the network parameters. The residual mapping is more sensitive to changes in the output: if the output changes from 5.1 to 5.2, the output of F' grows by 1/51 ≈ 2%, whereas in the residual structure F goes from 0.1 to 0.2, an increase of 100%. The latter change clearly has a larger effect on the weight updates, so it works better. The idea of the residual is to remove the identical main part so that small changes stand out.
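The numbers in answer D are easy to verify. The sketch below computes the relative change seen by the plain mapping F' and by the residual branch F when the target moves from 5.1 to 5.2 for the input 5; the variable names are illustrative only.

```python
def relative_change(old, new):
    """Fractional change from old to new."""
    return (new - old) / old


x = 5.0
target_before, target_after = 5.1, 5.2

# Plain mapping F': the layers must output the full value
plain_change = relative_change(target_before, target_after)

# Residual branch F = H - x: the layers only output the difference from x
residual_change = relative_change(target_before - x, target_after - x)

if __name__ == "__main__":
    print(f"plain mapping:   {plain_change:.1%}")     # about 2%
    print(f"residual branch: {residual_change:.1%}")  # about 100%
```

The same 0.1 shift in the target is a small relative change for F' but doubles what F has to produce, which is the sensitivity argument made in the answer.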

There are many explanations; in the end, what matters is that it works well.
