
Scrcpy source code walkthrough 3: what happens between the socket and the screen refresh

2022-06-13 06:27:00 【That's right】

Part 1: The function list of the scrcpy() main function

Source version: v1.19

```c
bool scrcpy(const struct scrcpy_options *options) {
    // Excerpt: error handling and some declarations (ret, device_name,
    // frame_size, window_title, dec, rec) are omitted.
    static struct scrcpy scrcpy;
    struct scrcpy *s = &scrcpy;

    server_init(&s->server);  ///> 1. server_init()

    struct server_params params = {
        .serial = options->serial,
        .port_range = options->port_range,
        .bit_rate = options->bit_rate,
        .max_fps = options->max_fps,
        .display_id = options->display_id,
        .codec_options = options->codec_options,
        .encoder_name = options->encoder_name,
        .force_adb_forward = options->force_adb_forward,
    };
    server_start(&s->server, &params);  ///> 2. server_start()
    server_started = true;

    sdl_init_and_configure(options->display, options->render_driver,
                           options->disable_screensaver);

    server_connect_to(&s->server, device_name, &frame_size);  ///> 3. server_connect_to()

    file_handler_init(&s->file_handler, s->server.serial,
                      options->push_target);  ///> 4. file_handler_init(): handler for files dropped on the window (installed/pushed via adb)

    decoder_init(&s->decoder);  ///> 5. decoder_init()

    av_log_set_callback(av_log_callback);  ///> 6. av_log_set_callback()

    static const struct stream_callbacks stream_cbs = {  ///> 7. stream_init()
        .on_eos = stream_on_eos,
    };
    stream_init(&s->stream, s->server.video_socket, &stream_cbs, NULL);

    // dec is &s->decoder (when display or V4L2 output is enabled) and rec is
    // &s->recorder (only when recording); the NULL checks are omitted here.
    stream_add_sink(&s->stream, &dec->packet_sink);  ///> 8. stream_add_sink(): decoder
    stream_add_sink(&s->stream, &rec->packet_sink);  ///> 9. stream_add_sink(): recorder

    controller_init(&s->controller, s->server.control_socket);  ///> 10. controller_init(): control_socket
    controller_start(&s->controller);  ///> 11. controller_start()

    struct screen_params screen_params = {
        .window_title = window_title,
        .frame_size = frame_size,
        .always_on_top = options->always_on_top,
        .window_x = options->window_x,
        .window_y = options->window_y,
        .window_width = options->window_width,
        .window_height = options->window_height,
        .window_borderless = options->window_borderless,
        .rotation = options->rotation,
        .mipmaps = options->mipmaps,
        .fullscreen = options->fullscreen,
        .buffering_time = options->display_buffer,
    };
    screen_init(&s->screen, &screen_params);  ///> 12. screen_init()

    decoder_add_sink(&s->decoder, &s->screen.frame_sink);  ///> 13. decoder_add_sink()

#ifdef HAVE_V4L2
    sc_v4l2_sink_init(&s->v4l2_sink, options->v4l2_device, frame_size,
                      options->v4l2_buffer);  ///> 14. sc_v4l2_sink_init()
    decoder_add_sink(&s->decoder, &s->v4l2_sink.frame_sink);
#endif

    stream_start(&s->stream);  ///> 14+. stream_start(): starts the stream thread (missing from the first version of this post, added here)

    input_manager_init(&s->input_manager, &s->controller, &s->screen, options);  ///> 15. input_manager_init()

    ret = event_loop(s, options);  ///> 16. event_loop()

    ///> On exit, release the resources
    screen_hide_window(&s->screen);
    controller_stop(&s->controller);
    file_handler_stop(&s->file_handler);
    screen_interrupt(&s->screen);
    server_stop(&s->server);
    stream_join(&s->stream);
    sc_v4l2_sink_destroy(&s->v4l2_sink);
    screen_join(&s->screen);
    screen_destroy(&s->screen);
    controller_join(&s->controller);
    controller_destroy(&s->controller);
    recorder_destroy(&s->recorder);
    file_handler_join(&s->file_handler);
    file_handler_destroy(&s->file_handler);
    server_destroy(&s->server);  ///> Destroy the server

    return ret;
}
```

Part 2: How the socket data stream is turned into a video stream

```c
///> Called from the main function as stream_start(&s->stream)
bool
stream_start(struct stream *stream) {
    LOGD("Starting stream thread");
    printf("%s, %d DEBUG\n", __FILE__, __LINE__);  // debug trace

    bool ok = sc_thread_create(&stream->thread, run_stream, "stream", stream);
    if (!ok) {
        LOGC("Could not start stream thread");
        return false;
    }
    return true;
}
```

```c
///> Stream thread: reads packets from the socket and forwards them to the sinks
static int
run_stream(void *data) {
    struct stream *stream = data;

    // Error checks of the calls below are omitted in this excerpt
    AVCodec *codec = avcodec_find_decoder(AV_CODEC_ID_H264);
    stream->codec_ctx = avcodec_alloc_context3(codec);
    stream_open_sinks(stream, codec);

    stream->parser = av_parser_init(AV_CODEC_ID_H264);
    // Only complete frames are passed to av_parser_parse2() (see stream_parse())
    stream->parser->flags |= PARSER_FLAG_COMPLETE_FRAMES;

    AVPacket *packet = av_packet_alloc();
    for (;;) {
        bool ok = stream_recv_packet(stream, packet);  ///> 1>. Read raw video data from the socket into packet
        if (!ok) {
            // end of stream
            break;
        }

        ok = stream_push_packet(stream, packet);  ///> 2>. Push the single-frame AVPacket to the sinks (decoder, recorder)
        av_packet_unref(packet);
        if (!ok) {
            // cannot process packet (error already logged)
            break;
        }
    }

    if (stream->pending) {
        av_packet_free(&stream->pending);
    }
    av_packet_free(&packet);
    av_parser_close(stream->parser);
    stream_close_sinks(stream);
    avcodec_free_context(&stream->codec_ctx);

    stream->cbs->on_eos(stream, stream->cbs_userdata);
    return 0;
}
```

```c
///> 1>. Read raw video data from the socket into packet
static bool
stream_recv_packet(struct stream *stream, AVPacket *packet) {
    // The video stream contains raw packets, without time information. When we
    // record, we retrieve the timestamps separately, from a "meta" header
    // added by the server before each raw packet.
    //
    // Important: this is scrcpy's video data framing format.
    //
    // The "meta" header length is 12 bytes:
    // [. . . . . . . .|. . . .]. . . . . . . . . . . . . . . ...
    //  <-------------> <-----> <-----------------------------...
    //        PTS        packet        raw packet
    //                    size
    //
    // It is followed by <packet_size> bytes containing the packet/frame.

    uint8_t header[HEADER_SIZE];
    ssize_t r = net_recv_all(stream->socket, header, HEADER_SIZE);
    if (r < HEADER_SIZE) {
        return false;
    }

    uint64_t pts = buffer_read64be(header);
    uint32_t len = buffer_read32be(&header[8]);
    assert(pts == NO_PTS || (pts & 0x8000000000000000) == 0);
    assert(len);

    if (av_new_packet(packet, len)) {  ///> Allocate the AVPacket payload
        LOGE("Could not allocate packet");
        return false;
    }

    r = net_recv_all(stream->socket, packet->data, len);  ///> Read the frame bytes from the socket into the AVPacket data area
    if (r < 0 || ((uint32_t) r) < len) {
        av_packet_unref(packet);
        return false;
    }

    packet->pts = pts != NO_PTS ? (int64_t) pts : AV_NOPTS_VALUE;  ///> Set packet->pts (AV_NOPTS_VALUE marks a config packet)
    LOGI("%s, %d, LEN: %u", __FILE__, __LINE__, len);  // debug trace
    return true;
}
```
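The 12-byte meta header can be decoded as shown below. This minimal, self-contained sketch uses hypothetical names (`parse_meta_header`, `META_NO_PTS`), which are not scrcpy's API. It assumes that a config packet is marked by `NO_PTS` equal to `UINT64_C(-1)` (all 64 bits set), and that a real PTS never has its top bit set, as the assertion above implies.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define META_HEADER_SIZE 12
// Assumption: config packets carry an all-ones PTS, like scrcpy's NO_PTS.
#define META_NO_PTS UINT64_C(0xFFFFFFFFFFFFFFFF)

// Read a big-endian 64-bit value from buf[0..7].
static uint64_t read64be(const uint8_t *buf) {
    uint64_t v = 0;
    for (int i = 0; i < 8; ++i) {
        v = (v << 8) | buf[i];
    }
    return v;
}

// Read a big-endian 32-bit value from buf[0..3].
static uint32_t read32be(const uint8_t *buf) {
    return ((uint32_t) buf[0] << 24) | ((uint32_t) buf[1] << 16)
         | ((uint32_t) buf[2] << 8) | (uint32_t) buf[3];
}

struct meta_header {
    uint64_t pts;    // presentation timestamp (microseconds) or META_NO_PTS
    uint32_t len;    // number of raw packet bytes following the header
    bool is_config;  // true when the packet carries no PTS (codec config)
};

// Decode one 12-byte header; returns false for values the format forbids.
static bool parse_meta_header(const uint8_t *header, struct meta_header *out) {
    out->pts = read64be(header);
    out->len = read32be(&header[8]);
    out->is_config = out->pts == META_NO_PTS;
    // A real PTS never has its top bit set.
    if (!out->is_config && (out->pts & UINT64_C(0x8000000000000000))) {
        return false;
    }
    // An empty packet is invalid (cf. assert(len) above).
    return out->len != 0;
}
```

For example, if the first 8 bytes encode 1000 and the last 4 bytes encode 256, the header announces a 256-byte frame with a PTS of 1000 µs.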

```c
///> 2>. Push a single-frame AVPacket to the sinks, where FFmpeg decodes it for display
static bool
stream_push_packet(struct stream *stream, AVPacket *packet) {
    bool is_config = packet->pts == AV_NOPTS_VALUE;

    // A config packet must not be decoded immediately (it contains no
    // frame); instead, it must be concatenated with the future data packet.
    if (stream->pending || is_config) {
        size_t offset;
        if (stream->pending) {
            offset = stream->pending->size;
            if (av_grow_packet(stream->pending, packet->size)) {  ///> Grow the pending packet to append this one
                LOGE("Could not grow packet");
                return false;
            }
        } else {
            offset = 0;
            stream->pending = av_packet_alloc();
            if (!stream->pending) {
                LOGE("Could not allocate packet");
                return false;
            }
            if (av_new_packet(stream->pending, packet->size)) {  ///> Allocate the pending packet payload
                LOGE("Could not create packet");
                av_packet_free(&stream->pending);
                return false;
            }
        }

        memcpy(stream->pending->data + offset, packet->data, packet->size);

        if (!is_config) {
            // prepare the concat packet to send to the decoder
            stream->pending->pts = packet->pts;
            stream->pending->dts = packet->dts;
            stream->pending->flags = packet->flags;
            packet = stream->pending;
        }
    }

    if (is_config) {
        // config packet
        bool ok = push_packet_to_sinks(stream, packet);  ///> 3>. Push the packet to the sinks (see push_packet_to_sinks())
        if (!ok) {
            return false;
        }
    } else {
        // data packet
        bool ok = stream_parse(stream, packet);  ///> 4>. Parse the H.264 frame, then push it to the sinks
        if (stream->pending) {
            // the pending packet must be discarded (consumed or error)
            av_packet_free(&stream->pending);
        }
        if (!ok) {
            return false;
        }
    }
    return true;
}
```
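The config-packet handling in stream_push_packet() comes down to "hold the config bytes, then prepend them to the next data packet". Below is a simplified sketch of only that concatenation step. It uses plain byte buffers instead of `AVPacket`, and `push_packet`, `struct pending` and the fixed `PENDING_CAP` are hypothetical. Unlike this sketch, scrcpy also forwards the config packet to the sinks on its own (the decoder ignores it).

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define PENDING_CAP 256  // hypothetical fixed capacity for held-back config bytes

struct pending {
    uint8_t data[PENDING_CAP];
    size_t size;
};

// Returns the number of bytes written to out (a packet ready to decode),
// 0 if the input was a config packet that is held back, or -1 on overflow.
static long push_packet(struct pending *p, const uint8_t *data, size_t size,
                        bool is_config, uint8_t *out, size_t out_cap) {
    if (is_config) {
        if (p->size + size > PENDING_CAP) {
            return -1;
        }
        memcpy(p->data + p->size, data, size);
        p->size += size;
        return 0;  // config packets are never decoded on their own
    }
    size_t total = p->size + size;
    if (total > out_cap) {
        return -1;
    }
    memcpy(out, p->data, p->size);       // pending config bytes first
    memcpy(out + p->size, data, size);   // then the data packet itself
    p->size = 0;                         // the pending bytes are consumed
    return (long) total;
}
```

With this design, the decoder always receives SPS/PPS data in the same buffer as the first frame that depends on it.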

```c
///> 3>. Push the packet to every registered sink
static bool
push_packet_to_sinks(struct stream *stream, const AVPacket *packet) {
    for (unsigned i = 0; i < stream->sink_count; ++i) {
        struct sc_packet_sink *sink = stream->sinks[i];
        if (!sink->ops->push(sink, packet)) {  ///> For details, see the push() callback walkthrough in Part 3
            LOGE("Could not send config packet to sink %d", i);
            return false;
        }
    }
    return true;
}
```

```c
///> 4>. Split the H.264-encoded frame out of the raw data stream
static bool
stream_parse(struct stream *stream, AVPacket *packet) {
    uint8_t *in_data = packet->data;
    int in_len = packet->size;
    uint8_t *out_data = NULL;
    int out_len = 0;
    int r = av_parser_parse2(stream->parser, stream->codec_ctx,  ///> Parse one H.264 frame from the input data
                             &out_data, &out_len, in_data, in_len,
                             AV_NOPTS_VALUE, AV_NOPTS_VALUE, -1);

    // PARSER_FLAG_COMPLETE_FRAMES is set
    ///> so the whole input is returned as one frame; the parser is used here mainly to detect key frames
    assert(r == in_len);
    (void) r;
    assert(out_len == in_len);

    if (stream->parser->key_frame == 1) {
        packet->flags |= AV_PKT_FLAG_KEY;
    }

    packet->dts = packet->pts;

    bool ok = push_packet_to_sinks(stream, packet);  ///> Push the packet to the sinks (see the push() callback walkthrough in Part 3)
    if (!ok) {
        LOGE("Could not process packet");
        return false;
    }
    return true;
}
```

In the part above, I split the path into two stages: the socket data stream, and its conversion into the stream's packet flow. This data is raw H.264-encoded video.

The source excerpts are numbered 1> to 4>, which trace the data-flow processing step by step.

Once the raw video data is available, it enters the H.264 decoding process. Let's continue into the source code.

Part 3: The video decoding process

```c
///> Decoder initialization
void
decoder_init(struct decoder *decoder) {
    decoder->sink_count = 0;

    static const struct sc_packet_sink_ops ops = {
        .open = decoder_packet_sink_open,    ///> calls decoder_open(struct decoder *decoder, const AVCodec *codec)
        .close = decoder_packet_sink_close,
        .push = decoder_packet_sink_push,    ///> calls decoder_push(struct decoder *decoder, const AVPacket *packet)
    };

    decoder->packet_sink.ops = &ops;
}
```

```c
///> Open the decoder
static bool
decoder_open(struct decoder *decoder, const AVCodec *codec) {
    decoder->codec_ctx = avcodec_alloc_context3(codec);  ///> Allocate the codec context
    if (!decoder->codec_ctx) {
        LOGC("Could not allocate decoder context");
        return false;
    }

    if (avcodec_open2(decoder->codec_ctx, codec, NULL) < 0) {  ///> Open the codec with this context
        LOGE("Could not open codec");
        avcodec_free_context(&decoder->codec_ctx);
        return false;
    }

    decoder->frame = av_frame_alloc();  ///> Allocate the AVFrame that receives each decoded (displayable) frame
    if (!decoder->frame) {
        LOGE("Could not create decoder frame");
        avcodec_close(decoder->codec_ctx);
        avcodec_free_context(&decoder->codec_ctx);
        return false;
    }

    if (!decoder_open_sinks(decoder)) {  ///> Open the frame sinks (screen, V4L2)
        LOGE("Could not open decoder sinks");
        av_frame_free(&decoder->frame);
        avcodec_close(decoder->codec_ctx);
        avcodec_free_context(&decoder->codec_ctx);
        return false;
    }
    return true;
}
```

```c
///> Decode one packet
static bool
decoder_push(struct decoder *decoder, const AVPacket *packet) {
    bool is_config = packet->pts == AV_NOPTS_VALUE;
    if (is_config) {
        // nothing to do
        ///> (the config bytes were already prepended to the next data packet by stream_push_packet())
        return true;
    }

    int ret = avcodec_send_packet(decoder->codec_ctx, packet);  ///> 1>. Send the packet to the FFmpeg decoder
    if (ret < 0 && ret != AVERROR(EAGAIN)) {
        LOGE("Could not send video packet: %d", ret);
        return false;
    }

    ret = avcodec_receive_frame(decoder->codec_ctx, decoder->frame);  ///> 2>. Receive the decoded frame from FFmpeg
    if (!ret) {
        // a frame was received
        bool ok = push_frame_to_sinks(decoder, decoder->frame);  ///> 3>. Send the frame to the frame sinks (the player)
        // A frame lost should not make the whole pipeline fail. The error, if
        // any, is already logged.
        (void) ok;
        av_frame_unref(decoder->frame);
    } else if (ret != AVERROR(EAGAIN)) {
        LOGE("Could not receive video frame: %d", ret);
        return false;
    }
    return true;
}
```

```c
///> push_frame_to_sinks()
static bool
push_frame_to_sinks(struct decoder *decoder, const AVFrame *frame) {
    for (unsigned i = 0; i < decoder->sink_count; ++i) {
        struct sc_frame_sink *sink = decoder->sinks[i];
        if (!sink->ops->push(sink, frame)) {  ///> For the screen sink this is screen_frame_sink_push() (Part 4, 5>), the screen's frame entry point
            LOGE("Could not send frame to sink %d", i);
            return false;
        }
    }
    return true;
}
```

Steps 1> to 3> are FFmpeg's standard video decoding sequence. At this point the raw H.264 data has been decoded into frames that can be displayed.

Part 4: The video player

Next, we return to the main program and follow its entry points for video playback.

```c
///> 4.1 Called from the main program as screen_init(&s->screen, &screen_params)
bool
screen_init(struct screen *screen, const struct screen_params *params) {
    // Excerpt: error handling and the computation of x, y, window_size,
    // window_flags and renderer_info are omitted.
    static const struct sc_video_buffer_callbacks cbs = {  ///> 1>. sc_video_buffer callbacks
        .on_new_frame = sc_video_buffer_on_new_frame,
    };

    bool ok = sc_video_buffer_init(&screen->vb, params->buffering_time, &cbs, screen);  ///> 2>. sc_video_buffer_init()
    ok = sc_video_buffer_start(&screen->vb);  ///> 3>. Start the sc_video_buffer

    fps_counter_init(&screen->fps_counter);

    screen->window = SDL_CreateWindow(params->window_title, x, y,
                                      window_size.width, window_size.height,
                                      window_flags);
    screen->renderer = SDL_CreateRenderer(screen->window, -1,
                                          SDL_RENDERER_ACCELERATED);
    int r = SDL_GetRendererInfo(screen->renderer, &renderer_info);

    struct sc_opengl *gl = &screen->gl;
    sc_opengl_init(gl);

    SDL_Surface *icon = read_xpm(icon_xpm);  ///> Window icon (small Android icon)
    SDL_SetWindowIcon(screen->window, icon);
    SDL_FreeSurface(icon);

    screen->texture = create_texture(screen);

    SDL_SetWindowSize(screen->window, window_size.width, window_size.height);
    screen_update_content_rect(screen);
    if (params->fullscreen) {
        screen_switch_fullscreen(screen);
    }

    SDL_AddEventWatch(event_watcher, screen);

    static const struct sc_frame_sink_ops ops = {
        .open = screen_frame_sink_open,
        .close = screen_frame_sink_close,
        .push = screen_frame_sink_push,  ///> 5>. screen_frame_sink_push()
    };
    screen->frame_sink.ops = &ops;

    return true;
}
```

```c
///> 4.2 Called from the main program as decoder_add_sink(&s->decoder, &s->screen.frame_sink)
void
decoder_add_sink(struct decoder *decoder, struct sc_frame_sink *sink) {
    assert(decoder->sink_count < DECODER_MAX_SINKS);
    assert(sink);
    assert(sink->ops);
    decoder->sinks[decoder->sink_count++] = sink;
}
```

From decoder_add_sink() we can roughly guess that, once the decoder is connected to the screen sink, the decoder's output is played directly on the screen. That guess is based on how pipelines are usually wired in FFmpeg- or GStreamer-based players.

Let's check whether it is right by reading the source together, focusing on the five functions marked in screen_init().
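The source/sink wiring reduces to a small pattern. Each sink points to a constant table of callbacks, the source keeps an array of sinks, and pushing a frame loops over that array. This is the shape of decoder_add_sink() and push_frame_to_sinks(). The sketch below is self-contained: an `int` stands in for `AVFrame`, and the names (`source`, `screen_ops`, `v4l2_ops`, `MAX_SINKS`) are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_SINKS 2  // hypothetical; scrcpy uses DECODER_MAX_SINKS

struct frame_sink;

// Behaviour is provided through a table of function pointers,
// like sc_frame_sink_ops in the excerpts above.
struct frame_sink_ops {
    bool (*push)(struct frame_sink *sink, int frame);
};

struct frame_sink {
    const struct frame_sink_ops *ops;
};

struct source {
    struct frame_sink *sinks[MAX_SINKS];
    unsigned sink_count;
};

// Same shape as decoder_add_sink(): just append to the array.
static void source_add_sink(struct source *src, struct frame_sink *sink) {
    assert(src->sink_count < MAX_SINKS);
    assert(sink && sink->ops);
    src->sinks[src->sink_count++] = sink;
}

// Same shape as push_frame_to_sinks(): stop at the first failing sink.
static bool source_push(struct source *src, int frame) {
    for (unsigned i = 0; i < src->sink_count; ++i) {
        struct frame_sink *sink = src->sinks[i];
        if (!sink->ops->push(sink, frame)) {
            return false;
        }
    }
    return true;
}

// Two toy sinks that remember the last frame they received.
static int last_screen_frame = -1;
static int last_v4l2_frame = -1;

static bool screen_push(struct frame_sink *sink, int frame) {
    (void) sink;
    last_screen_frame = frame;
    return true;
}

static bool v4l2_push(struct frame_sink *sink, int frame) {
    (void) sink;
    last_v4l2_frame = frame;
    return frame >= 0;  // pretend negative frames are rejected
}

static const struct frame_sink_ops screen_ops = { .push = screen_push };
static const struct frame_sink_ops v4l2_ops = { .push = v4l2_push };
```

Because the ops tables are `static const`, every sink of the same kind shares one table. This matches the `static const struct sc_frame_sink_ops ops` declarations in decoder_init() and screen_init().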

```c
///> 2>. sc_video_buffer_init()
bool
sc_video_buffer_init(struct sc_video_buffer *vb, sc_tick buffering_time,
                     const struct sc_video_buffer_callbacks *cbs,
                     void *cbs_userdata) {
    bool ok = sc_frame_buffer_init(&vb->fb);
    ///> The relevant body of sc_frame_buffer_init(fb = &vb->fb), inlined here:
    //   fb->pending_frame = av_frame_alloc();  ///> allocate the pending AVFrame
    //   fb->tmp_frame = av_frame_alloc();      ///> allocate the temporary AVFrame
    //   fb->pending_frame_consumed = true;

    // The vb->b fields are only needed when buffering_time > 0 (see the
    // struct below); error checks are omitted in this excerpt.
    ok = sc_cond_init(&vb->b.queue_cond);
    ok = sc_cond_init(&vb->b.wait_cond);
    sc_clock_init(&vb->b.clock);
    sc_queue_init(&vb->b.queue);

    vb->buffering_time = buffering_time;  ///> buffering time
    vb->cbs = cbs;                        ///> callbacks: on_new_frame points to sc_video_buffer_on_new_frame()
    vb->cbs_userdata = cbs_userdata;      ///> callback user data (the screen)
    return true;
}
```

```c
///> Next, the related data structures
struct sc_video_buffer_frame_queue SC_QUEUE(struct sc_video_buffer_frame);

struct sc_video_buffer {
    struct sc_frame_buffer fb;

    sc_tick buffering_time;

    // only if buffering_time > 0
    struct {
        sc_thread thread;
        sc_mutex mutex;
        sc_cond queue_cond;
        sc_cond wait_cond;

        struct sc_clock clock;
        struct sc_video_buffer_frame_queue queue;  ///> queue of frames waiting to be displayed
        bool stopped;
    } b; // buffering

    const struct sc_video_buffer_callbacks *cbs;
    void *cbs_userdata;
};

struct sc_video_buffer_callbacks {
    void (*on_new_frame)(struct sc_video_buffer *vb, bool previous_skipped,
                         void *userdata);
};
```
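`SC_QUEUE(TYPE)` declares a queue that stores only head and tail pointers. Each element provides its own link field, here `next`, the field name passed to `sc_queue_push`/`sc_queue_take`. The macros below are a hypothetical re-implementation of that intrusive-queue idea for illustration, not a copy of scrcpy's queue header.

```c
#include <assert.h>
#include <stddef.h>

// Declares the body of a queue struct holding only head and tail pointers.
#define QUEUE(TYPE) { TYPE *first; TYPE *last; }

#define queue_init(q) (void) ((q)->first = NULL, (q)->last = NULL)
#define queue_is_empty(q) ((q)->first == NULL)

// Append ITEM at the tail, linking it through its NEXTFIELD member.
#define queue_push(q, NEXTFIELD, ITEM) \
    do { \
        (ITEM)->NEXTFIELD = NULL; \
        if (queue_is_empty(q)) { \
            (q)->first = (ITEM); \
        } else { \
            (q)->last->NEXTFIELD = (ITEM); \
        } \
        (q)->last = (ITEM); \
    } while (0)

// Remove the head element into *PITEM; the queue must not be empty.
#define queue_take(q, NEXTFIELD, PITEM) \
    do { \
        assert(!queue_is_empty(q)); \
        *(PITEM) = (q)->first; \
        (q)->first = (q)->first->NEXTFIELD; \
    } while (0)

// Hypothetical element type carrying its own link, like sc_video_buffer_frame.
struct vframe {
    int pts;
    struct vframe *next;
};

struct vframe_queue QUEUE(struct vframe);
```

No allocation happens inside the queue itself. The frame wrapper that embeds the `next` link (sc_video_buffer_frame_new() in scrcpy) is the only allocation per queued frame.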

```c
///> 1>. sc_video_buffer callback
static void
sc_video_buffer_on_new_frame(struct sc_video_buffer *vb, bool previous_skipped,
                             void *userdata) {
    (void) vb;
    struct screen *screen = userdata;

    if (previous_skipped) {
        fps_counter_add_skipped_frame(&screen->fps_counter);
        // The EVENT_NEW_FRAME triggered for the previous frame will consume
        // this new frame instead
    } else {
        static SDL_Event new_frame_event = {
            .type = EVENT_NEW_FRAME,  ///> 4>. SDL event type EVENT_NEW_FRAME
        };

        // Post the event on the UI thread
        SDL_PushEvent(&new_frame_event);
    }
}
```

When a new frame arrives, this callback simply calls SDL_PushEvent(&new_frame_event) to post a notification.

Two questions: 1. Where does the new frame's data come from? 2. Who receives the SDL_PushEvent() notification, and what does it do with it?

```c
///> 3>. Start the buffer: sc_video_buffer_start(&screen->vb)
bool
sc_video_buffer_start(struct sc_video_buffer *vb) {
    if (vb->buffering_time) {  ///> the buffering thread exists only when buffering_time > 0
        bool ok =
            sc_thread_create(&vb->b.thread, run_buffering, "buffering", vb);
        if (!ok) {
            LOGE("Could not start buffering thread");
            return false;
        }
    }
    return true;
}
```

```c
///> The run_buffering thread
static int
run_buffering(void *data) {
    struct sc_video_buffer *vb = data;

    assert(vb->buffering_time > 0);

    for (;;) {
        sc_mutex_lock(&vb->b.mutex);

        while (!vb->b.stopped && sc_queue_is_empty(&vb->b.queue)) {
            sc_cond_wait(&vb->b.queue_cond, &vb->b.mutex);
        }

        if (vb->b.stopped) {
            sc_mutex_unlock(&vb->b.mutex);
            goto stopped;
        }

        struct sc_video_buffer_frame *vb_frame;
        sc_queue_take(&vb->b.queue, next, &vb_frame);  ///> Take a frame from the queue

        sc_tick max_deadline = sc_tick_now() + vb->buffering_time;
        // PTS (written by the server) are expressed in microseconds
        sc_tick pts = SC_TICK_TO_US(vb_frame->frame->pts);

        ///> Wait until the frame's estimated display time plus buffering_time;
        ///> the deadline is recomputed whenever wait_cond is signaled (the clock was updated)
        bool timed_out = false;
        while (!vb->b.stopped && !timed_out) {
            sc_tick deadline = sc_clock_to_system_time(&vb->b.clock, pts)
                             + vb->buffering_time;
            if (deadline > max_deadline) {
                deadline = max_deadline;
            }

            timed_out =
                !sc_cond_timedwait(&vb->b.wait_cond, &vb->b.mutex, deadline);
        }

        if (vb->b.stopped) {
            sc_video_buffer_frame_delete(vb_frame);
            sc_mutex_unlock(&vb->b.mutex);
            goto stopped;
        }

        sc_mutex_unlock(&vb->b.mutex);

#ifndef SC_BUFFERING_NDEBUG
        LOGD("Buffering: %" PRItick ";%" PRItick ";%" PRItick,
             pts, vb_frame->push_date, sc_tick_now());
#endif

        sc_video_buffer_offer(vb, vb_frame->frame);  ///> Offer the frame to the video buffer: the key function
        sc_video_buffer_frame_delete(vb_frame);
    }

stopped:
    // Flush queue
    while (!sc_queue_is_empty(&vb->b.queue)) {
        struct sc_video_buffer_frame *vb_frame;
        sc_queue_take(&vb->b.queue, next, &vb_frame);
        sc_video_buffer_frame_delete(vb_frame);
    }

    LOGD("Buffering thread ended");

    return 0;
}
```
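The deadline in run_buffering() is the frame's estimated display time plus `buffering_time`, capped at `buffering_time` after the frame is dequeued. The sketch below reproduces that computation with a deliberately simplified clock: a straight line through two observed (system time, PTS) points. That clock is an assumption for illustration, not scrcpy's `sc_clock` algorithm, which estimates the mapping from a window of points. It still shows why at least two points are needed, since a single point gives no slope. All names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

typedef int64_t tick;  // microseconds, like scrcpy's sc_tick

// Hypothetical two-point clock mapping stream PTS to system time.
struct two_point_clock {
    tick sys0, pts0;
    tick sys1, pts1;
};

static tick clock_to_system_time(const struct two_point_clock *c, tick pts) {
    assert(c->pts1 != c->pts0);  // one point alone gives no slope
    double slope = (double) (c->sys1 - c->sys0) / (double) (c->pts1 - c->pts0);
    return c->sys0 + (tick) ((double) (pts - c->pts0) * slope);
}

// Same shape as the wait loop in run_buffering(): target the estimated
// display time plus the buffering delay, but never wait longer than
// buffering_time after the frame was taken from the queue.
static tick frame_deadline(const struct two_point_clock *c, tick pts,
                           tick now, tick buffering_time) {
    tick max_deadline = now + buffering_time;
    tick deadline = clock_to_system_time(c, pts) + buffering_time;
    return deadline > max_deadline ? max_deadline : deadline;
}
```

For example, with a 50 ms buffer and a clock whose slope is exactly 1, a frame with PTS 66666 µs maps to system time 1066666 µs. Its deadline is therefore 1116666 µs, unless the frame was dequeued so early that `now + 50000` is smaller.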

```c
///> sc_video_buffer_offer(): hands a frame over to the frame buffer
static bool
sc_video_buffer_offer(struct sc_video_buffer *vb, const AVFrame *frame) {
    bool previous_skipped;
    bool ok = sc_frame_buffer_push(&vb->fb, frame, &previous_skipped);
    if (!ok) {
        return false;
    }

    vb->cbs->on_new_frame(vb, previous_skipped, vb->cbs_userdata);  ///> Runs the on_new_frame callback, sc_video_buffer_on_new_frame()

    ///> Answer to the first question: when buffering is enabled, new frames are offered
    ///> by the run_buffering() thread once it takes them from the queue.
    return true;
}
```

```c
///> Frame buffer, producer side
bool
sc_frame_buffer_push(struct sc_frame_buffer *fb, const AVFrame *frame,
                     bool *previous_frame_skipped) {
    sc_mutex_lock(&fb->mutex);

    // Use a temporary frame to preserve pending_frame in case of error.
    // tmp_frame is an empty frame, no need to call av_frame_unref() beforehand.
    int r = av_frame_ref(fb->tmp_frame, frame);
    if (r) {
        sc_mutex_unlock(&fb->mutex);  // release the lock on the error path too
        LOGE("Could not ref frame: %d", r);
        return false;
    }

    // Now that av_frame_ref() succeeded, we can replace the previous
    // pending_frame
    swap_frames(&fb->pending_frame, &fb->tmp_frame);
    av_frame_unref(fb->tmp_frame);

    if (previous_frame_skipped) {
        *previous_frame_skipped = !fb->pending_frame_consumed;
    }
    fb->pending_frame_consumed = false;

    sc_mutex_unlock(&fb->mutex);

    return true;
}
```
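The `previous_skipped` flag follows from the frame buffer holding a single pending frame. The following model is single-threaded, uses an `int` instead of an `AVFrame`, and has no mutex; all names are hypothetical. It shows the semantics: if the producer pushes twice before the UI thread consumes, the first frame is overwritten and reported as skipped. That is why sc_video_buffer_on_new_frame() posts no second EVENT_NEW_FRAME in that case.

```c
#include <assert.h>
#include <stdbool.h>

// Simplified model of sc_frame_buffer: one pending slot.
struct frame_slot {
    int pending;
    bool consumed;
};

static void slot_init(struct frame_slot *s) {
    s->pending = 0;
    s->consumed = true;  // nothing to consume yet
}

// Producer side: overwrite the pending frame and report whether the frame
// being replaced was never consumed (i.e. it is dropped).
static void slot_push(struct frame_slot *s, int frame, bool *previous_skipped) {
    s->pending = frame;
    *previous_skipped = !s->consumed;
    s->consumed = false;
}

// Consumer side (the UI thread): take the latest frame.
static int slot_consume(struct frame_slot *s) {
    assert(!s->consumed);
    s->consumed = true;
    return s->pending;
}
```

The UI thread therefore always renders the most recent frame. Intermediate frames are dropped instead of queued, which keeps latency low when rendering falls behind.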

```c
///> 4>. SDL event type EVENT_NEW_FRAME
///> The function that handles this event type is screen_handle_event()
bool
screen_handle_event(struct screen *screen, SDL_Event *event) {
    switch (event->type) {
        case EVENT_NEW_FRAME:
            if (!screen->has_frame) {
                screen->has_frame = true;
                // this is the very first frame, show the window
                screen_show_window(screen);
            }
            bool ok = screen_update_frame(screen);  ///> A new frame arrived: refresh the screen
            if (!ok) {
                LOGW("Frame update failed\n");
            }
            return true;
        case SDL_WINDOWEVENT:
            if (!screen->has_frame) {
                // Do nothing
                return true;
            }
            switch (event->window.event) {
                case SDL_WINDOWEVENT_EXPOSED:
                    screen_render(screen, true);
                    break;
                case SDL_WINDOWEVENT_SIZE_CHANGED:
                    screen_render(screen, true);
                    break;
                case SDL_WINDOWEVENT_MAXIMIZED:
                    screen->maximized = true;
                    break;
                case SDL_WINDOWEVENT_RESTORED:
                    if (screen->fullscreen) {
                        // On Windows, in maximized+fullscreen, disabling
                        // fullscreen mode unexpectedly triggers the "restored"
                        // then "maximized" events, leaving the window in a
                        // weird state (maximized according to the events, but
                        // not maximized visually).
                        break;
                    }
                    screen->maximized = false;
                    apply_pending_resize(screen);
                    screen_render(screen, true);
                    break;
            }
            return true;
    }

    return false;
}
```

This answers the second question: the SDL event is delivered, through the main event loop, to screen_handle_event(), which does the screen refresh.

So far we have traced the whole video data path, "socket data ==> decoder ==> screen refresh", end to end.

The remaining question is where the video frame is actually rendered within this flow. Let's continue with screen_update_frame().

```c
static bool
screen_update_frame(struct screen *screen) {
    av_frame_unref(screen->frame);
    sc_video_buffer_consume(&screen->vb, screen->frame);  ///> Take the latest frame from the video buffer
    AVFrame *frame = screen->frame;

    fps_counter_add_rendered_frame(&screen->fps_counter);

    struct size new_frame_size = {frame->width, frame->height};
    if (!prepare_for_frame(screen, new_frame_size)) {  ///> Handles frame-size changes (resizes the window, recreates the texture)
        return false;
    }
    update_texture(screen, frame);  ///> Uploads the decoded YUV planes into the SDL texture
    screen_render(screen, false);   ///> Draws the texture into the window and presents it
    return true;
}
```

```c
///> screen_render(): draw the current texture and present it
void
screen_render(struct screen *screen, bool update_content_rect) {
    if (update_content_rect) {
        screen_update_content_rect(screen);
    }

    SDL_RenderClear(screen->renderer);
    if (screen->rotation == 0) {
        SDL_RenderCopy(screen->renderer, screen->texture, NULL, &screen->rect);
    } else {
        // rotation in RenderCopyEx() is clockwise, while screen->rotation is
        // counterclockwise (to be consistent with --lock-video-orientation)
        int cw_rotation = (4 - screen->rotation) % 4;
        double angle = 90 * cw_rotation;

        SDL_Rect *dstrect = NULL;
        SDL_Rect rect;
        if (screen->rotation & 1) {
            rect.x = screen->rect.x + (screen->rect.w - screen->rect.h) / 2;
            rect.y = screen->rect.y + (screen->rect.h - screen->rect.w) / 2;
            rect.w = screen->rect.h;
            rect.h = screen->rect.w;
            dstrect = &rect;
        } else {
            assert(screen->rotation == 2);
            dstrect = &screen->rect;
        }

        SDL_RenderCopyEx(screen->renderer, screen->texture, NULL, dstrect,
                         angle, NULL, 0);
    }
    SDL_RenderPresent(screen->renderer);  ///> Present the frame through the SDL_Render API
}
```
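The rotation branch of screen_render() is pure geometry, so it can be checked without SDL. This standalone sketch (with hypothetical `struct rect`, `clockwise_angle()` and `rotated_dst()`) reproduces the same arithmetic. For odd rotations, the destination rectangle swaps width and height and keeps the same centre. After `SDL_RenderCopyEx()` rotates it by 90° or 270°, it therefore covers the original content rectangle.

```c
#include <assert.h>

struct rect { int x, y, w, h; };

// screen->rotation counts quarter turns counterclockwise, while
// SDL_RenderCopyEx() expects a clockwise angle in degrees.
static double clockwise_angle(unsigned rotation) {
    unsigned cw_rotation = (4 - rotation) % 4;
    return 90.0 * cw_rotation;
}

// For 90/270 degree rotations, swap w/h and keep the centre unchanged.
static struct rect rotated_dst(struct rect content, unsigned rotation) {
    if (!(rotation & 1)) {
        return content;  // 0 or 180 degrees: same rectangle
    }
    struct rect r;
    r.x = content.x + (content.w - content.h) / 2;
    r.y = content.y + (content.h - content.w) / 2;
    r.w = content.h;
    r.h = content.w;
    return r;
}
```

Take a 400x800 content rectangle at (100, 0) with rotation 1. The angle is 270°, and the destination rectangle is 800x400 at (-100, 200). Both rectangles share the centre (300, 400).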

So far we have extended the flow to "socket data ==> decoder ==> screen refresh ==> rendering". Something still seems to be missing: how does a decoded frame get from the decoder into the video buffer?

```c
///> 5>. screen_frame_sink_push(), registered in screen_init():
static const struct sc_frame_sink_ops ops = {
    .open = screen_frame_sink_open,
    .close = screen_frame_sink_close,
    .push = screen_frame_sink_push,  ///> 5>. screen_frame_sink_push()
};
screen->frame_sink.ops = &ops;
```

```c
///> Recall 4.2: the main program calls decoder_add_sink(&s->decoder, &s->screen.frame_sink).
///> Without a flow chart this is a bit of a detour, so here it is again.
void
decoder_add_sink(struct decoder *decoder, struct sc_frame_sink *sink) {
    assert(decoder->sink_count < DECODER_MAX_SINKS);
    assert(sink);
    assert(sink->ops);
    decoder->sinks[decoder->sink_count++] = sink;  ///> Register the screen's frame sink with the decoder
}
```

This is where the screen and the decoder sink meet: screen->frame_sink is stored in the decoder's decoder->sinks[] array. Each time the decoder successfully decodes a frame, push_frame_to_sinks() calls decoder->sinks[i]->ops->push(), which for the screen sink is screen_frame_sink_push().

```c
static bool
screen_frame_sink_push(struct sc_frame_sink *sink, const AVFrame *frame) {
    struct screen *screen = DOWNCAST(sink);  ///> Recover the screen that embeds this frame_sink
    return sc_video_buffer_push(&screen->vb, frame);
}
```
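DOWNCAST(sink) works because `frame_sink` is embedded inside `struct screen`. The screen's address can therefore be recovered by subtracting the member's offset, which is the classic `container_of` idiom. As far as I can tell scrcpy defines DOWNCAST this way. The sketch below is only a self-contained illustration with hypothetical struct layouts, not scrcpy's definition.

```c
#include <assert.h>
#include <stddef.h>

// Recover a pointer to the enclosing struct from a pointer to one member.
#define container_of(ptr, type, member) \
    ((type *) ((char *) (ptr) - offsetof(type, member)))

struct frame_sink {
    const void *ops;  // placeholder for the ops table
};

// Hypothetical screen object: the sink is embedded, not pointed to.
struct screen {
    int frames_received;
    struct frame_sink frame_sink;
};

#define DOWNCAST(SINK) container_of(SINK, struct screen, frame_sink)

// A push callback only receives the embedded sink, yet reaches the screen.
static int screen_sink_push(struct frame_sink *sink) {
    struct screen *screen = DOWNCAST(sink);
    return ++screen->frames_received;
}
```

This lets the generic decoder code hold only `struct sc_frame_sink *` pointers while each callback recovers its concrete object without any extra userdata field.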

```c
bool
sc_video_buffer_push(struct sc_video_buffer *vb, const AVFrame *frame) {
    if (!vb->buffering_time) {
        // No buffering
        return sc_video_buffer_offer(vb, frame);  ///> Fast path: refresh the screen directly
    }

    sc_mutex_lock(&vb->b.mutex);

    sc_tick pts = SC_TICK_FROM_US(frame->pts);
    sc_clock_update(&vb->b.clock, sc_tick_now(), pts);
    sc_cond_signal(&vb->b.wait_cond);

    if (vb->b.clock.count == 1) {
        sc_mutex_unlock(&vb->b.mutex);
        // First frame, offer it immediately, for two reasons:
        //  - not to delay the opening of the scrcpy window
        //  - the buffering estimation needs at least two clock points, so it
        //    could not handle the first frame
        return sc_video_buffer_offer(vb, frame);  ///> Fast path: refresh the screen directly
    }

    struct sc_video_buffer_frame *vb_frame = sc_video_buffer_frame_new(frame);
    if (!vb_frame) {
        sc_mutex_unlock(&vb->b.mutex);
        LOGE("Could not allocate frame");
        return false;
    }

#ifndef SC_BUFFERING_NDEBUG
    vb_frame->push_date = sc_tick_now();
#endif
    sc_queue_push(&vb->b.queue, next, vb_frame);  ///> Queue vb_frame; the run_buffering thread will refresh the screen
    sc_cond_signal(&vb->b.queue_cond);            ///> Wake up run_buffering (signal queue_cond)

    sc_mutex_unlock(&vb->b.mutex);

    return true;
}

static bool
sc_video_buffer_offer(struct sc_video_buffer *vb, const AVFrame *frame) {
    bool previous_skipped;
    bool ok = sc_frame_buffer_push(&vb->fb, frame, &previous_skipped);
    if (!ok) {
        return false;
    }

    vb->cbs->on_new_frame(vb, previous_skipped, vb->cbs_userdata);
    return true;
}
```

In other words, after the decoder successfully decodes a frame, it triggers the screen refresh through this interface. For the first frame, or when buffering is disabled, the screen is refreshed immediately (the fast path).

Otherwise, the frame is put into the queue, and the run_buffering thread refreshes the screen once the frame's buffering delay has elapsed. This is how the display buffering parameter takes effect (buffering_time, set from options->display_buffer).

Next, I will refactor an Android open-source video player to verify the results of this code walkthrough. Until then, please judge the reasoning of this walkthrough for yourself. If anything is wrong, please leave a comment to correct it. Thank you.

边栏推荐

- Simple use of event bus

- MFS details (vii) - - MFS client and Web Monitoring installation configuration

- The title of the WebView page will be displayed in the top navigation bar of the app. How to customize

- App performance test: (IV) power

- Kotlin foundation extension

- 二分查找

- Dart class inherits and implements mixed operators

- Free screen recording software captura download and installation

- App performance test: (II) CPU

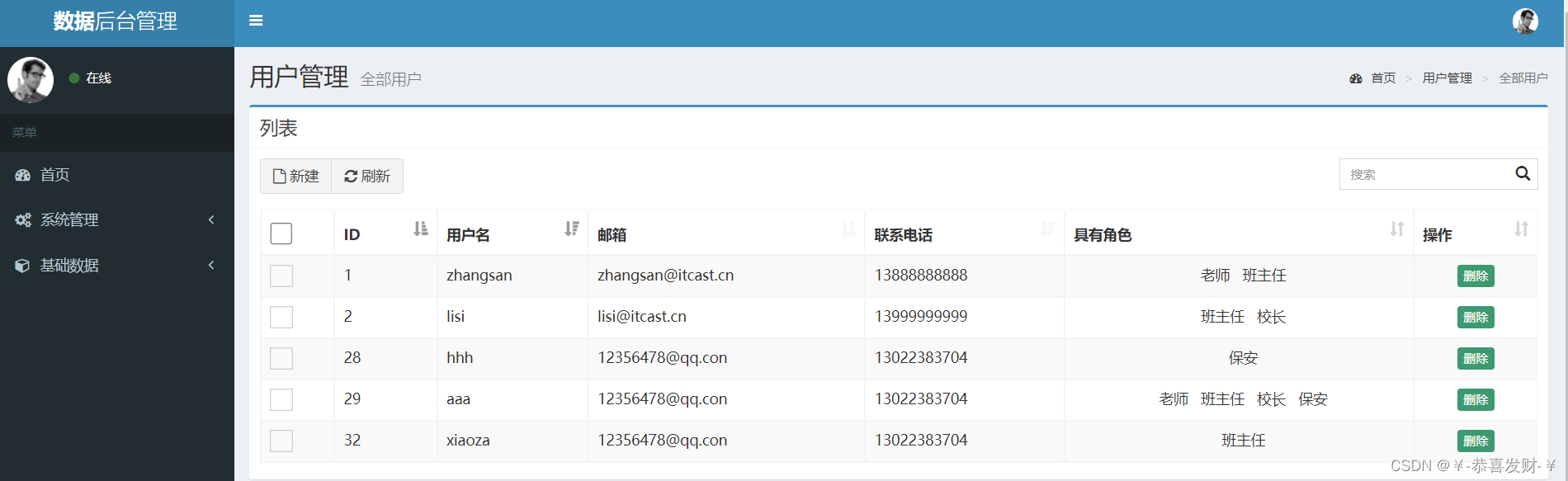

- SSM框架整合--->简单后台管理

猜你喜欢

JS convert text to language for playback

MFS详解(五)——MFS元数据日志服务器安装与配置

Echart line chart: different colors are displayed when the names of multiple line charts are the same

Wechat applet: click the event to obtain the current device information (basic)

SSM framework integration -- > simple background management

Echart histogram: stacked histogram displays value

The boys x pubgmobile linkage is coming! Check out the latest game posters

不在以下合法域名列表中,微信小程序解决办法

![[FAQs for novices on the road] understand program design step by step](/img/33/24ced00918bc7bd59f504cf1a73827.jpg)

[FAQs for novices on the road] understand program design step by step

【Kernel】驱动编译的两种方式:编译成模块、编译进内核(使用杂项设备驱动模板)

随机推荐

App performance test: (III) traffic monitoring

MFS explanation (V) -- MFS metadata log server installation and configuration

线程池学习

本地文件秒搜工具 Everything

Hbuilderx: installation of hbuilderx and its common plug-ins

Echart histogram: stack histogram value formatted display

MFS explanation (VI) -- MFS chunk server installation and configuration

本地文件秒搜工具 Everything

Echart折线图:当多条折线图的name一样时也显示不同的颜色

JetPack - - - LifeCycle、ViewModel、LiveData

synchronized浅析

推荐扩容工具,彻底解决C盘及其它磁盘空间不够的难题

Download and installation of universal player potplayer, live stream m3u8 import

ADB shell content command debug database

El form form verification

A brief analysis of the overall process of view drawing

MFS详解(六)——MFS Chunk Server服务器安装与配置

Recommend a capacity expansion tool to completely solve the problem of insufficient disk space in Disk C and other disks

杨辉三角形详解

[2022 college entrance examination season] what I want to say as a passer-by