
PRML reading notes: neural networks (1)

2022-07-05 12:39:00 【NLP journey】

Feed-forward neural networks

In both classification and regression problems, the model can ultimately be written in the form

$$y(\mathbf{x},\mathbf{w}) = f\left(\sum_{j=1}^{M} w_j \phi_j(\mathbf{x})\right) \tag{1}$$

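where $f(\cdot)$ is a nonlinear activation function in the case of classification and the identity in the case of regression. The goal now is to make the basis functions $\phi_j(\mathbf{x})$ depend on parameters and to adjust those parameters, along with the coefficients $\mathbf{w}$, during training so that the model adapts to the training data.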

Now consider a basic three-layer neural network model. First we form $M$ linear combinations of the input variables $x_1, \dots, x_D$:

$$a_j = \sum_{i=1}^{D} w_{ji}^{(1)} x_i + w_{j0}^{(1)} \tag{2}$$

where $j = 1, \dots, M$, and the superscript $(1)$ on $w$ indicates which layer of the network the parameter belongs to. Each $a_j$ is then passed through a nonlinear function $h(\cdot)$:

$$z_j = h(a_j) \tag{3}$$

Equation (3) has exactly the same form as (1). The $z_j$ are the outputs of the units in the hidden layer; these outputs then go through another linear combination:

$$a_k = \sum_{j=1}^{M} w_{kj}^{(2)} z_j + w_{k0}^{(2)} \tag{4}$$

where $k = 1, \dots, K$ and $K$ is the total number of outputs. For regression problems, $y_k = a_k$. For classification problems,

$$y_k = \sigma(a_k) \tag{5}$$

where $\sigma$ is the logistic sigmoid function:

$$\sigma(a) = \frac{1}{1 + \exp(-a)} \tag{6}$$

Combining all of the formulas above gives:

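$$y_k(\mathbf{x},\mathbf{w}) = \sigma\left(\sum_{j=1}^{M} w_{kj}^{(2)}\, h\left(\sum_{i=1}^{D} w_{ji}^{(1)} x_i + w_{j0}^{(1)}\right) + w_{k0}^{(2)}\right) \tag{7}$$

In essence, then, a neural network model is a nonlinear function of the input variables $\{x_i\}$, controlled by the parameter vector $\mathbf{w}$.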
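The forward pass described by equations (2) to (7) can be sketched in a few lines of plain Python. This is only an illustrative sketch: the function name `forward`, the choice of `tanh` for $h$, and the weight values are assumptions made for the example, not something fixed by the text.

```python
import math

def sigmoid(a):
    """Logistic sigmoid, equation (6)."""
    return 1.0 / (1.0 + math.exp(-a))

def forward(x, W1, b1, W2, b2, h=math.tanh, out=sigmoid):
    """Two-layer forward pass following equations (2)-(5).

    W1[j][i] plays the role of w_ji^(1), b1[j] of w_j0^(1),
    W2[k][j] of w_kj^(2) and b2[k] of w_k0^(2).
    h = tanh is an assumed choice of hidden nonlinearity.
    """
    # Equation (2): hidden activations a_j
    a_hidden = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, b1)]
    # Equation (3): hidden outputs z_j = h(a_j)
    z = [h(a) for a in a_hidden]
    # Equation (4): output activations a_k
    a_out = [sum(w * zj for w, zj in zip(row, z)) + b for row, b in zip(W2, b2)]
    # Equation (5): y_k = sigma(a_k); pass out=lambda a: a for regression
    return [out(a) for a in a_out]

# D = 2 inputs, M = 3 hidden units, K = 1 output (arbitrary example values)
W1 = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
b1 = [0.0, 0.1, -0.1]
W2 = [[1.0, -1.0, 0.5]]
b2 = [0.2]
y = forward([1.0, 2.0], W1, b1, W2, b2)
```

Passing `out=lambda a: a` gives the regression case $y_k = a_k$; the default gives the sigmoid output of equation (5).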

This network can be represented by the following diagram (Figure 5.1 in PRML):

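By introducing an extra input with index 0 whose value is fixed at $x_0 = 1$ (and, likewise, a hidden unit $z_0 = 1$), the biases can be absorbed into the weights, and equation (7) becomes: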

$$y_k(\mathbf{x},\mathbf{w}) = \sigma\left(\sum_{j=0}^{M} w_{kj}^{(2)}\, h\left(\sum_{i=0}^{D} w_{ji}^{(1)} x_i\right)\right) \tag{8}$$
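A small check can make the bias-absorption trick concrete. The sketch below evaluates the network once with explicit biases and once with the biases stored as the weights of a constant unit fixed at 1. The function names, the `tanh` hidden units, and the numbers are assumptions for illustration only.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def forward_with_bias(x, W1, b1, W2, b2):
    """Explicit bias terms, as in equation (7)."""
    z = [math.tanh(dot(row, x) + b) for row, b in zip(W1, b1)]
    return [sigmoid(dot(row, z) + b) for row, b in zip(W2, b2)]

def forward_absorbed(x, W1_aug, W2_aug):
    """Biases stored in column 0 of each weight matrix, as in equation (8)."""
    x_aug = [1.0] + list(x)                                          # x_0 = 1
    z_aug = [1.0] + [math.tanh(dot(row, x_aug)) for row in W1_aug]   # z_0 = 1
    return [sigmoid(dot(row, z_aug)) for row in W2_aug]

W1 = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
b1 = [0.05, 0.1, -0.1]
W2 = [[1.0, -1.0, 0.5]]
b2 = [0.2]

# Prepend each bias as the weight attached to the constant unit.
W1_aug = [[b] + row for row, b in zip(W1, b1)]
W2_aug = [[b] + row for row, b in zip(W2, b2)]

x = [1.0, 2.0]
y_bias = forward_with_bias(x, W1, b1, W2, b2)
y_absorbed = forward_absorbed(x, W1_aug, W2_aug)
```

The two results agree up to floating-point rounding, since the only difference is the order in which the terms are summed.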

Because a composition of linear transformations is itself a linear transformation, a network whose hidden units all have linear activation functions is always equivalent to some network with no hidden layer. If, in addition, the number of hidden units is smaller than the number of inputs or the number of outputs, information is lost on the way through the hidden layer, so such a network cannot represent the most general linear map from inputs to outputs.
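The collapse of a linear network into a single layer can be worked through numerically. In the sketch below, the helper names and all numbers are made up for illustration, and the hidden "activation" is the identity. With a single hidden unit between three inputs and three outputs, every row of the collapsed matrix is a multiple of the same vector, which is the information loss mentioned above.

```python
def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def linear_two_layer(x, W1, b1, W2, b2):
    """Two layers with identity activation in the hidden layer."""
    z = [a + b for a, b in zip(matvec(W1, x), b1)]
    return [a + b for a, b in zip(matvec(W2, z), b2)]

def collapse(W1, b1, W2, b2):
    """Equivalent single layer: W = W2 W1, b = W2 b1 + b2."""
    W = matmul(W2, W1)
    b = [a + c for a, c in zip(matvec(W2, b1), b2)]
    return W, b

# D = 3 inputs, M = 1 hidden unit, K = 3 outputs
W1 = [[1.0, 2.0, 3.0]]
b1 = [0.5]
W2 = [[1.0], [2.0], [-1.0]]
b2 = [0.0, 1.0, -1.0]

W, b = collapse(W1, b1, W2, b2)
x = [1.0, -1.0, 2.0]
y_two_layer = linear_two_layer(x, W1, b1, W2, b2)
y_one_layer = [a + c for a, c in zip(matvec(W, x), b)]
```

Here `W` is a 3 × 3 matrix of rank 1, so the network can only realise a restricted family of linear maps.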

The network structure in Figure 5.1 is the most commonly used one. Terminology varies: it may be called a three-layer neural network (counting layers of nodes), a single-hidden-layer neural network (counting hidden layers), or a two-layer neural network (counting layers of adaptive parameters, which is the name PRML recommends).

One way to extend the network is to add skip-layer connections, so that the output layer is connected not only to the hidden layer but also directly to the input layer. The book notes that a network with sigmoidal hidden units can achieve the same effect as one with skip-layer connections by adjusting its input and output weights, but in practice it may be more effective to include the skip-layer connections explicitly.
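One way to see how a sigmoidal hidden unit can stand in for a direct input-to-output link is to give it a very small incoming weight, so that it operates in its nearly linear range, and a correspondingly large outgoing weight. The sketch below illustrates this for a single `tanh` unit and bounded inputs; the function name and the value of `eps` are assumptions chosen for the example.

```python
import math

def hidden_unit_as_skip(x, eps=1e-3):
    """Pass x through one tanh hidden unit so that the result is close to x."""
    z = math.tanh(eps * x)      # tiny first-layer weight: tanh is almost linear here
    return (1.0 / eps) * z      # large second-layer weight undoes the scaling

xs = [-2.0, -0.5, 0.0, 1.0, 2.0]
approx = [hidden_unit_as_skip(x) for x in xs]
worst = max(abs(a - x) for a, x in zip(approx, xs))
```

The approximation only holds while `eps * x` stays small, which is why an explicit skip-layer connection can be the more practical choice.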

As long as the network remains feed-forward (that is, it contains no closed directed cycles, so no output can feed back into its own input), more complex architectures are possible. A unit may take its input from all units in the earlier layers, from a subset of them, or from other units in the same layer. An example is shown below:

In this example $z_1$, $z_2$ and $z_3$ are hidden units, and $z_1$ is also an input to $z_2$ and $z_3$.

The approximation properties of neural networks have been widely studied, and such networks are known to be universal approximators. For example, a two-layer network with linear outputs can approximate any continuous function on a compact input domain to arbitrary accuracy, provided the network has enough hidden units.
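The role of the number of hidden units can be illustrated with a hand-built one-dimensional example. The sketch below does not train anything: it sets the weights of a two-layer network (sigmoid hidden units, linear output) by hand so that the output is a smoothed staircase following $\sin(2\pi x)$ on $[0, 1]$. The target function, the `steepness` parameter, and all names are assumptions for illustration, and this is a demonstration for one function, not a proof of the general result.

```python
import math

def sigmoid(a):
    """Numerically stable logistic sigmoid."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def target(x):
    return math.sin(2.0 * math.pi * x)

def staircase_net(x, n_hidden, steepness=20.0):
    """Two-layer net: n_hidden sigmoid units, linear output, hand-set weights."""
    y = target(0.0)                                   # output bias
    for i in range(n_hidden):
        left, right = i / n_hidden, (i + 1) / n_hidden
        centre = 0.5 * (left + right)
        # Hidden unit i switches from 0 to 1 around the centre of interval i.
        z = sigmoid(steepness * n_hidden * (x - centre))
        # Its second-layer weight is the change of the target over that interval.
        y += (target(right) - target(left)) * z
    return y

def max_error(n_hidden, n_grid=201):
    xs = [i / (n_grid - 1) for i in range(n_grid)]
    return max(abs(staircase_net(x, n_hidden) - target(x)) for x in xs)

errors = {m: max_error(m) for m in (4, 16, 64)}
```

On this grid the maximum error shrinks as hidden units are added, which is the qualitative behaviour the approximation result describes.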

Symmetry of weight space

One property of feed-forward neural networks is that many different choices of the weight parameters give rise to exactly the same mapping from inputs to outputs.

First consider a two-layer network like the one in Figure 5.1, with $M$ hidden units, full connectivity (each unit is connected to every unit in the previous layer), and tanh activation functions. Take a single hidden unit. If we flip the sign of all the weights feeding into that unit, including its bias, the sign of its activation is flipped, and because tanh is an odd function, $\tanh(-a) = -\tanh(a)$, the sign of the unit's output flips as well. Flipping the sign of all the weights leading out of that unit then compensates exactly, so the values flowing into the output layer are unchanged. In other words, simultaneously changing the signs of a hidden unit's input weights and output weights leaves the input-output mapping of the whole network unaffected. For a hidden layer with $M$ units there are therefore $2^M$ sets of weights that produce the same input-output mapping (two choices per unit).
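The sign-flip symmetry is easy to check numerically. The sketch below assumes `tanh` hidden units and, for simplicity, linear outputs; the function names and weight values are made up for illustration. It applies every one of the $2^M$ sign patterns to a network with $M = 3$ hidden units and compares the outputs.

```python
import math
from itertools import product

def forward(x, W1, b1, W2, b2):
    """tanh hidden units, linear outputs (an assumed setup for the check)."""
    z = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    return [sum(w * zj for w, zj in zip(row, z)) + b for row, b in zip(W2, b2)]

def flip_signs(W1, b1, W2, signs):
    """Multiply unit j's incoming weights, bias and outgoing weights by signs[j]."""
    W1f = [[s * w for w in row] for row, s in zip(W1, signs)]
    b1f = [s * b for b, s in zip(b1, signs)]
    W2f = [[s * w for w, s in zip(row, signs)] for row in W2]
    return W1f, b1f, W2f

W1 = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
b1 = [0.05, 0.1, -0.1]
W2 = [[1.0, -1.5, 0.5]]
b2 = [0.2]
x = [0.3, -1.2]

reference = forward(x, W1, b1, W2, b2)
outputs = []
for signs in product((1, -1), repeat=len(W1)):       # all 2^M sign patterns
    W1f, b1f, W2f = flip_signs(W1, b1, W2, signs)
    outputs.append(forward(x, W1f, b1f, W2f, b2))
```

All `2 ** 3 = 8` flipped networks return the same output as the original; the output bias `b2` is left untouched because it does not pass through a hidden unit.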

Because the hidden units are interchangeable, we can also swap all the incoming and outgoing weights of any two hidden units without affecting the input-output mapping of the network. This gives $M!$ equivalent weight settings (the number of permutations of the $M$ hidden units).
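The interchange symmetry can be checked the same way. The sketch below reorders the hidden units of a small network in all $M!$ possible ways, moving each unit's incoming weights, bias and outgoing weights together. As before, the `tanh` hidden units, linear outputs, names and numbers are assumptions for illustration.

```python
import math
from itertools import permutations

def forward(x, W1, b1, W2, b2):
    """tanh hidden units, linear outputs (an assumed setup for the check)."""
    z = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    return [sum(w * zj for w, zj in zip(row, z)) + b for row, b in zip(W2, b2)]

def permute_hidden(W1, b1, W2, order):
    """Reorder hidden units: rows of W1, entries of b1, columns of W2."""
    W1p = [W1[j] for j in order]
    b1p = [b1[j] for j in order]
    W2p = [[row[j] for j in order] for row in W2]
    return W1p, b1p, W2p

W1 = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
b1 = [0.05, 0.1, -0.1]
W2 = [[1.0, -1.5, 0.5]]
b2 = [0.2]
x = [0.3, -1.2]

reference = forward(x, W1, b1, W2, b2)
outputs = []
for order in permutations(range(len(W1))):            # all M! orderings
    W1p, b1p, W2p = permute_hidden(W1, b1, W2, order)
    outputs.append(forward(x, W1p, b1p, W2p, b2))
```

The six reordered networks agree with the original up to floating-point rounding, since only the order of summation in the output layer changes.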

So for a two-layer network with $M$ hidden units, the total number of equivalent weight settings arising from these symmetries is $2^M \cdot M!$. These symmetries are not specific to the tanh activation function; they hold for a wide range of activation functions.
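The combined count can be confirmed by brute force for a small case. In the sketch below each hidden unit is represented by a tuple of its incoming weights, bias and outgoing weight (hypothetical values, chosen so that no two units are equal up to sign), and every combination of reordering and sign flip is enumerated and de-duplicated.

```python
from itertools import permutations, product
from math import factorial

# One tuple per hidden unit: (incoming weights..., bias, outgoing weight).
units = [
    (0.5, -0.2, 0.05, 1.0),
    (0.1, 0.4, 0.1, -1.5),
    (-0.3, 0.8, -0.1, 0.5),
]
M = len(units)

def equivalent_weight_vectors(units):
    """Enumerate every sign flip and reordering of the hidden units."""
    seen = set()
    for order in permutations(range(len(units))):
        for signs in product((1, -1), repeat=len(units)):
            # Flatten the reordered, sign-flipped units into one weight vector.
            flat = tuple(s * w for j, s in zip(order, signs) for w in units[j])
            seen.add(flat)
    return seen

count = len(equivalent_weight_vectors(units))
expected = 2 ** M * factorial(M)
```

For $M = 3$ both `count` and `expected` equal 48. The count can be smaller for special weight values, for example when two hidden units have identical weights.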

边栏推荐

- Volatile instruction rearrangement and why instruction rearrangement is prohibited

- Flutter2 heavy release supports web and desktop applications

- MySQL index - extended data

- Acid transaction theory

- Preliminary exploration of basic knowledge of MySQL

- Learning JVM garbage collection 06 - memory set and card table (hotspot)

- UNIX socket advanced learning diary - advanced i/o functions

- MySQL function

- Semantic segmentation experiment: UNET network /msrc2 dataset

- View and terminate the executing thread in MySQL

猜你喜欢

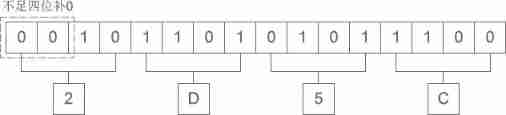

Hexadecimal conversion summary

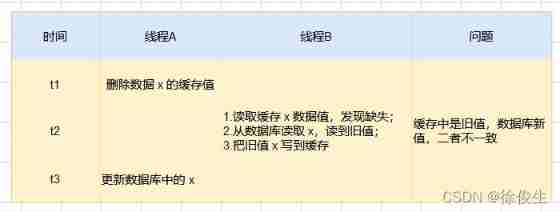

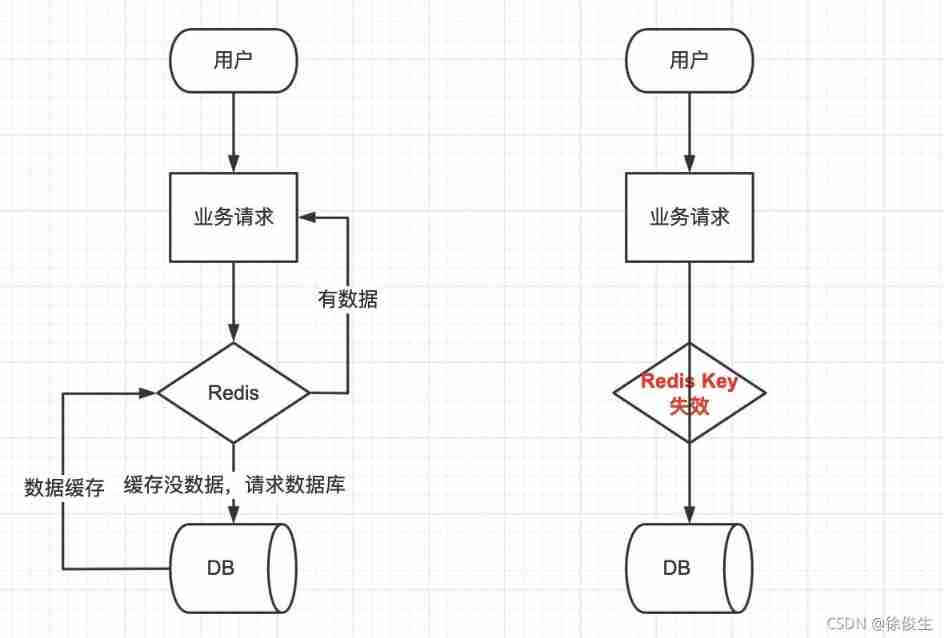

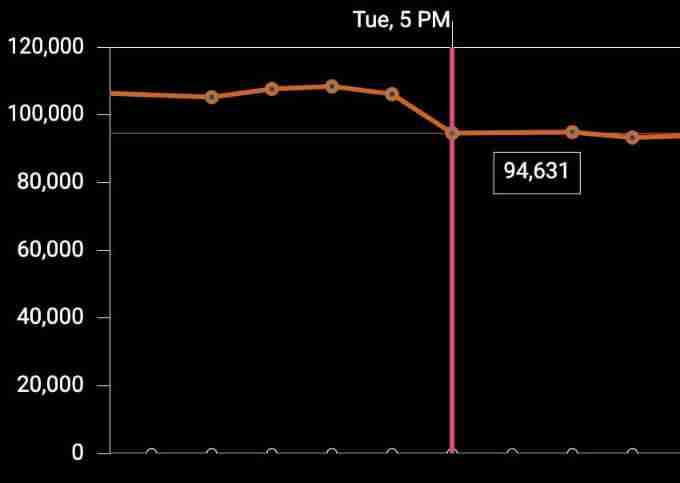

Solve the problem of cache and database double write data consistency

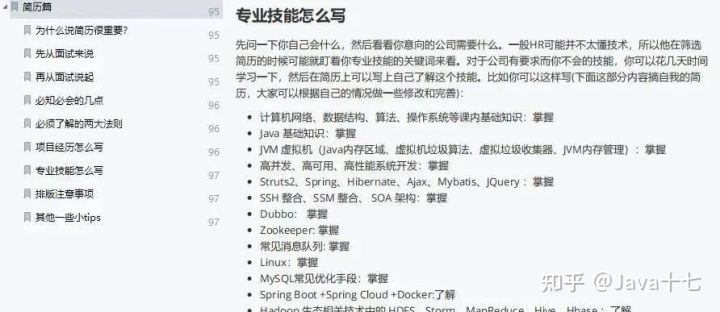

前几年外包干了四年,秋招感觉人生就这样了..

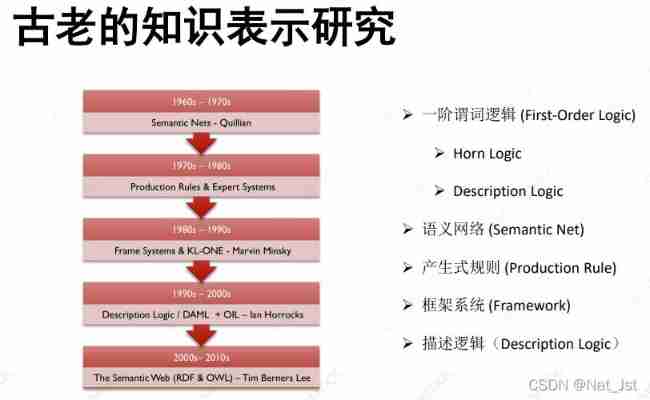

Knowledge representation (KR)

Preliminary exploration of basic knowledge of MySQL

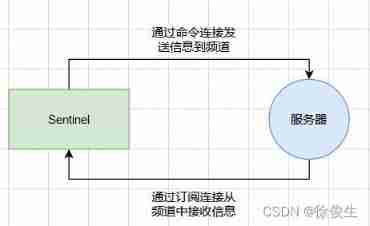

Redis highly available sentinel cluster

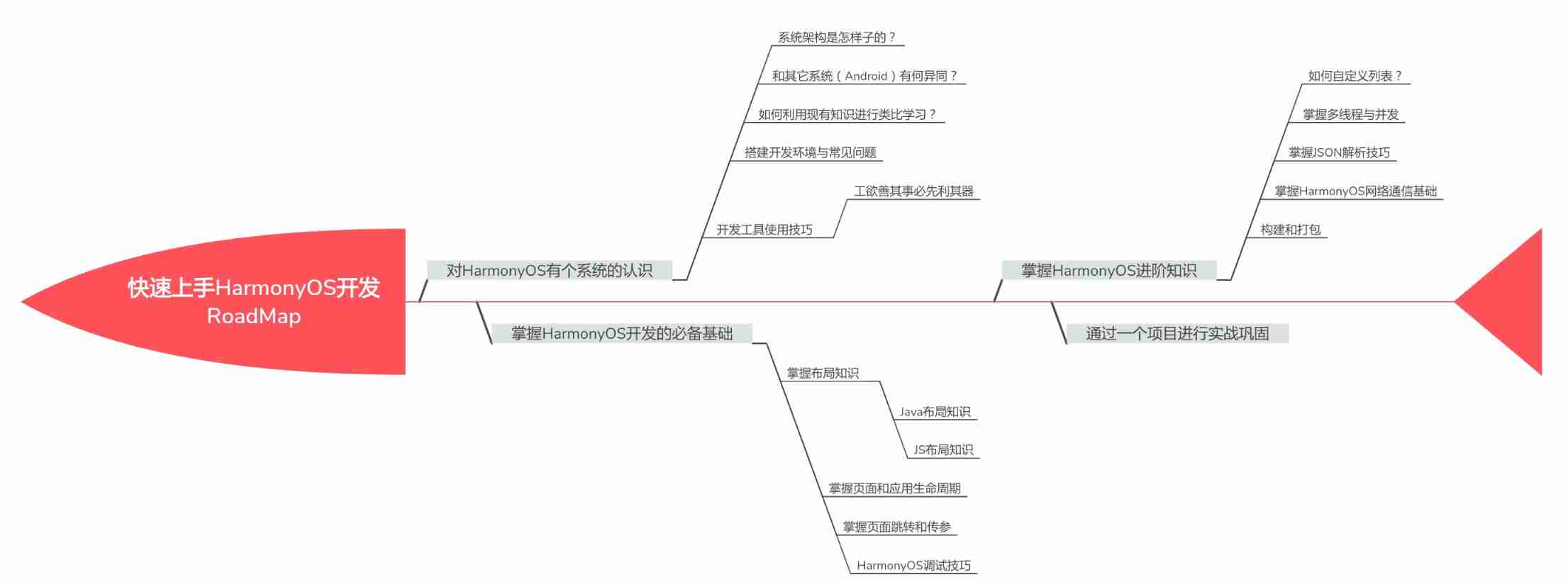

Why learn harmonyos and how to get started quickly?

About cache exceptions: solutions for cache avalanche, breakdown, and penetration

Master the new features of fluent 2.10

Detailed steps for upgrading window mysql5.5 to 5.7.36

随机推荐

Kotlin变量

Understanding the architecture type of mobile CPU

JDBC -- use JDBC connection to operate MySQL database

Redis master-slave configuration and sentinel mode

Pytoch counts the number of the same elements in the tensor

Learn the memory management of JVM 03 - Method area and meta space of JVM

Pytoch uses torchnet Classerrormeter in meter

Flutter2 heavy release supports web and desktop applications

MySQL function

Distributed solution - distributed session consistency problem

Hexadecimal conversion summary

[HDU 2096] 小明A+B

IPv6与IPv4的区别 网信办等三部推进IPv6规模部署

The evolution of mobile cross platform technology

Flume common commands and basic operations

Learn JVM garbage collection 02 - a brief introduction to the reference and recycling method area

MySQL log module of InnoDB engine

byte2String、string2Byte

PXE startup configuration and principle

Deep discussion on the decoding of sent protocol