Using GPU acceleration with TensorFlow

2022-06-27 15:33:00 【Peng Xiang】

After installing tensorflow-gpu, we can choose to run on the GPU, which will undoubtedly speed up training.

(Note: once tensorflow-gpu is installed, TensorFlow trains on the GPU by default.)
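As a minimal sketch of how that default can be overridden: CUDA honors the `CUDA_VISIBLE_DEVICES` environment variable, so setting it before TensorFlow is imported controls which GPUs the process can see. The helper name `select_device` is hypothetical and not part of TensorFlow; it only wraps the environment variable.

```python
import os


def select_device(use_gpu, gpu_index=0):
    """Restrict which CUDA devices this process can see.

    Hypothetical helper for illustration; call it before importing tensorflow.
    """
    # "-1" hides every GPU, so TensorFlow falls back to the CPU.
    value = str(gpu_index) if use_gpu else "-1"
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value


select_device(use_gpu=False)   # CPU-only run
# import tensorflow as tf      # import only after the variable is set
```

Switching between `select_device(True)` and `select_device(False)` is one way to run the same training script on either device for a timing comparison.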

The blogger has previously installed tensorflow-gpu in their own Python environment. For details, see:

Tensorflow install

With the installation done, we take a handwritten digit recognition project based on the BP neural network algorithm as an example.

First, a brief introduction to the principle of BP neural networks:

BP neural network for handwritten digit recognition
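For readers who want the principle in code first, here is a minimal NumPy sketch of backpropagation for a network with one ReLU hidden layer and a softmax output. It runs on made-up toy data and is not the TensorFlow script from this post; the names `forward` and `train_step`, the layer sizes, and the learning rate are all assumptions chosen for illustration.

```python
import numpy as np


def forward(x, w1, b1, w2, b2):
    """Forward pass: ReLU hidden layer, then softmax output."""
    h = np.maximum(0.0, x @ w1 + b1)
    logits = h @ w2 + b2
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)
    return h, p


def train_step(x, y, params, lr=0.1):
    """One gradient-descent step; returns the loss measured before the update."""
    w1, b1, w2, b2 = params
    n = x.shape[0]
    h, p = forward(x, w1, b1, w2, b2)
    loss = -np.log(p[np.arange(n), y]).mean()

    # Backward pass: propagate the error from the output back to the hidden layer.
    d_logits = p.copy()
    d_logits[np.arange(n), y] -= 1.0
    d_logits /= n
    d_w2 = h.T @ d_logits
    d_b2 = d_logits.sum(axis=0)
    d_h = d_logits @ w2.T
    d_h[h <= 0.0] = 0.0  # ReLU passes gradient only where it was active
    d_w1 = x.T @ d_h
    d_b1 = d_h.sum(axis=0)

    # Update in place so the arrays held by the caller change.
    for param, grad in zip(params, (d_w1, d_b1, d_w2, d_b2)):
        param -= lr * grad
    return loss


rng = np.random.default_rng(0)
x = rng.normal(size=(64, 8))      # toy inputs, not MNIST
y = (x[:, 0] > 0).astype(int)     # toy two-class labels
params = [rng.normal(scale=0.1, size=(8, 16)), np.zeros(16),
          rng.normal(scale=0.1, size=(16, 2)), np.zeros(2)]
losses = [train_step(x, y, params) for _ in range(200)]
```

The loss recorded at the last step should be lower than at the first, which is the whole point of the backward pass: each weight moves a little in the direction that reduces the error.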

# -*- coding: utf-8 -*-
"""Handwritten digit recognition with a BP neural network."""
# -------------------------------------------
# Parse the binary data files with Python.
# This script uses the TensorFlow 1.x graph API (tf.placeholder, tf.Session, tf.contrib).
import os

# "0" exposes the first GPU; set it to "-1" to force the CPU.
# Set this before importing tensorflow.
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

import struct
import time

import numpy as np
import tensorflow as tf
from sklearn.model_selection import train_test_split

T1 = time.perf_counter()  # start of wall-clock timing


class LoadData(object):
    def __init__(self, file1, file2):
        self.file1 = file1
        self.file2 = file2

    # Load the image set
    def loadImageSet(self):
        binfile = open(self.file1, 'rb')  # open in binary mode
        buffers = binfile.read()  # whole file as a bytes buffer
        head = struct.unpack_from('>IIII', buffers, 0)  # first 4 integers, returned as a tuple
        offset = struct.calcsize('>IIII')  # where the pixel data starts
        imgNum = head[1]  # number of images
        width = head[2]  # number of rows, 28
        height = head[3]  # number of columns, 28
        bits = imgNum*width*height  # 60000*28*28 pixel values in total
        bitsString = '>' + str(bits) + 'B'  # fmt string: '>47040000B'
        imgs = struct.unpack_from(bitsString, buffers, offset)  # pixel data, returned as a tuple
        binfile.close()
        imgs = np.reshape(imgs, [imgNum, width*height])
        return imgs, head

    # Load the label set
    def loadLabelSet(self):
        binfile = open(self.file2, 'rb')  # open in binary mode
        buffers = binfile.read()  # whole file as a bytes buffer
        head = struct.unpack_from('>II', buffers, 0)  # first 2 integers, returned as a tuple
        offset = struct.calcsize('>II')  # where the label data starts
        labelNum = head[1]  # number of labels
        numString = '>' + str(labelNum) + 'B'
        labels = struct.unpack_from(numString, buffers, offset)  # label data
        binfile.close()
        labels = np.reshape(labels, [labelNum])  # convert to a one-dimensional array
        return labels, head

    # Expand each label into a 10-dimensional one-hot vector
    def expand_lables(self):
        labels, head = self.loadLabelSet()
        expand_lables = []
        for label in labels:
            zero_vector = np.zeros((1, 10))
            zero_vector[0, label] = 1
            expand_lables.append(zero_vector)
        return expand_lables

    # Combine samples and labels into a list [[array(data), array(label)], ...]
    def loadData(self):
        imags, head = self.loadImageSet()
        expand_lables = self.expand_lables()
        data = []
        for i in range(imags.shape[0]):
            data.append([imags[i], expand_lables[i]])
        return data


file1 = r'train-images.idx3-ubyte'
file2 = r'train-labels.idx1-ubyte'
trainingData = LoadData(file1, file2)
training_data = trainingData.loadData()

file3 = r't10k-images.idx3-ubyte'
file4 = r't10k-labels.idx1-ubyte'
testData = LoadData(file3, file4)
test_data = testData.loadData()

X_train = [i[0] for i in training_data]
y_train = [i[1][0] for i in training_data]
X_test = [i[0] for i in test_data]
y_test = [i[1][0] for i in test_data]

X_train, X_validation, y_train, y_validation = train_test_split(X_train, y_train, test_size=0.1, random_state=7)

# print(np.array(X_test).shape)
# print(np.array(y_test).shape)
# print(np.array(X_train).shape)
# print(np.array(y_train).shape)

INUPUT_NODE = 784
OUTPUT_NODE = 10
LAYER1_NODE = 500
BATCH_SIZE = 200
LERANING_RATE_BASE = 0.005  # base learning rate
LERANING_RATE_DACAY = 0.99  # decay rate of the learning rate
REGULARZATION_RATE = 0.01  # coefficient of the regularization term in the loss function
TRAINING_STEPS = 30000
MOVING_AVERAGE_DECAY = 0.99  # moving-average decay rate


# Three-layer fully connected network; avg_class is the moving-average class (or None)
def inference(input_tensor, avg_class, weights1, biases1, weights2, biases2):
    if not avg_class:
        layer1 = tf.nn.relu(tf.matmul(input_tensor, weights1)+biases1)
        # No softmax layer on the output
        return tf.matmul(layer1, weights2)+biases2
    else:
        layer1 = tf.nn.relu(tf.matmul(input_tensor, avg_class.average(weights1)) +
                            avg_class.average(biases1))
        return tf.matmul(layer1, avg_class.average(weights2))+avg_class.average(biases2)


def train(X_train, X_validation, y_train, y_validation, X_test, y_test):
    x = tf.placeholder(tf.float32, [None, INUPUT_NODE], name="x-input")
    y_ = tf.placeholder(tf.float32, [None, OUTPUT_NODE], name="y-input")

    # Hidden layer parameters
    weights1 = tf.Variable(
        tf.truncated_normal([INUPUT_NODE, LAYER1_NODE], stddev=0.1))
    biases1 = tf.Variable(tf.constant(0.1, shape=[LAYER1_NODE]))

    # Output layer parameters
    weights2 = tf.Variable(
        tf.truncated_normal([LAYER1_NODE, OUTPUT_NODE], stddev=0.1))
    biases2 = tf.Variable(tf.constant(0.1, shape=[OUTPUT_NODE]))

    y = inference(x, None, weights1, biases1, weights2, biases2)

    global_step = tf.Variable(0, trainable=False)
    variable_averages = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)
    variable_averages_op = variable_averages.apply(tf.trainable_variables())
    average_y = inference(x, variable_averages, weights1, biases1, weights2, biases2)

    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1))
    cross_entropy_mean = tf.reduce_mean(cross_entropy)

    # L2 regularization loss
    regularizer = tf.contrib.layers.l2_regularizer(REGULARZATION_RATE)
    regularization = regularizer(weights1) + regularizer(weights2)
    loss = cross_entropy_mean + regularization

    # Exponentially decaying learning rate
    learning_rate = tf.train.exponential_decay(LERANING_RATE_BASE,
                                               global_step,
                                               len(X_train)/BATCH_SIZE,
                                               LERANING_RATE_DACAY)
    train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss, global_step=global_step)

    # Run the gradient step and the moving-average update together
    with tf.control_dependencies([train_step, variable_averages_op]):
        train_op = tf.no_op(name='train')

    correct_prediction = tf.equal(tf.argmax(average_y, 1), tf.argmax(y_, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    with tf.Session() as sess:
        init_op = tf.global_variables_initializer()
        sess.run(init_op)
        validation_feed = {x: X_validation, y_: y_validation}
        test_feed = {x: X_test, y_: y_test}

        for i in range(TRAINING_STEPS):
            # Report validation accuracy every 500 steps
            if i % 500 == 0:
                validate_acc = sess.run(accuracy, feed_dict=validation_feed)
                print("after %d training step(s), validation accuracy "
                      "using average model is %g" % (i, validate_acc))

            start = (i * BATCH_SIZE) % len(X_train)
            end = min(start + BATCH_SIZE, len(X_train))
            sess.run(train_op,
                     feed_dict={x: X_train[start:end], y_: y_train[start:end]})
            # print('loss:', sess.run(loss))

        test_acc = sess.run(accuracy, feed_dict=test_feed)
        print("after %d training step(s), test accuracy using "
              "average model is %g" % (TRAINING_STEPS, test_acc))


train(X_train, X_validation, y_train, y_validation, X_test, y_test)

T2 = time.perf_counter()
print('Program running time: %s ms' % ((T2 - T1)*1000))
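The image loader in the script above unpacks every pixel through `struct.unpack_from`, which builds a Python tuple of about 47 million integers for the training set before NumPy ever sees it. A possible alternative, sketched here under the assumption that the files follow the same IDX layout the script already reads (four big-endian integers, then one unsigned byte per pixel), is to let `np.frombuffer` view the bytes directly. The function name `parse_idx_images` is hypothetical.

```python
import struct

import numpy as np


def parse_idx_images(buffer):
    """Decode an IDX3 image buffer into an array of shape (count, rows * cols)."""
    magic, count, rows, cols = struct.unpack_from(">IIII", buffer, 0)
    offset = struct.calcsize(">IIII")
    # frombuffer reads the pixel bytes directly instead of building a huge tuple.
    pixels = np.frombuffer(buffer, dtype=np.uint8,
                           count=count * rows * cols, offset=offset)
    return pixels.reshape(count, rows * cols)


# Synthetic buffer with two 2x3 images so the sketch runs without the data files.
fake = struct.pack(">IIII", 2051, 2, 2, 3) + bytes(range(12))
images = parse_idx_images(fake)
```

The returned array is a read-only view of the buffer, so call `.copy()` or `.astype(np.float32)` on it if the pixels need to be modified or fed to a model as floats.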

GPU run results

CPU run results

Judging from the results, the running times of the two differ by a factor of two.
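To make such a comparison repeatable, the two runs can be timed the same way and reduced to a single ratio. The sketch below is only an illustration: `timed` and `speedup` are hypothetical helper names, and the numbers passed to `speedup` are placeholders, not measurements from this post.

```python
import time


def timed(fn, *args, **kwargs):
    """Run fn once and return (result, elapsed seconds) using a wall-clock timer."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def speedup(cpu_seconds, gpu_seconds):
    """How many times faster the GPU run was than the CPU run."""
    return cpu_seconds / gpu_seconds


# Placeholder workload and timings for illustration only, not measured results.
total, elapsed = timed(sum, range(100000))
ratio = speedup(cpu_seconds=120.0, gpu_seconds=60.0)
```

Wrapping the call to the training function in `timed` once with the GPU visible and once with it hidden gives the two values to pass to `speedup`.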

The blogger's graphics card is rather underpowered; in other people's tests the gap between the two is much larger. Still, at least it gives some speedup. Bye-bye!

边栏推荐

- 避孕套巨头过去两年销量下降40% ,下降原因是什么?

- E-week finance Q1 mobile banking has 650million active users; Layout of financial subsidiaries in emerging fields

- Cannot determine value type from string ‘<p>1</p>‘

- Lei Jun lost another great general, and liweixing, the founding employee of Xiaomi No. 12, left his post. He once had porridge to create Xiaomi; Intel's $5.4 billion acquisition of tower semiconductor

- Design and implementation of food recipe and ingredients website based on vue+node+mysql

- Piblup test report 1- pedigree based animal model

- [interview questions] common interview questions (I)

- E ModuleNotFoundError: No module named ‘psycopg2‘(已解决)

- AQS Abstract queue synchronizer

- Computer screen splitting method

猜你喜欢

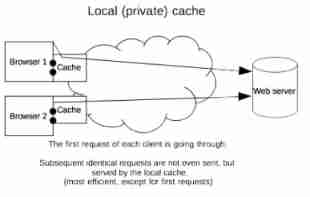

HTTP Caching Protocol practice

Unity3d best practices: folder structure and source control

Interview question: rendering 100000 data solutions

反射学习总结

PSS: you are only two convolution layers away from the NMS free+ point | 2021 paper

Référence forte, faible, douce et virtuelle de threadlocal

Is flutter easy to learn? How to learn? The most complete introduction and actual combat of flutter in history. Take it away without thanks~

基于WEB平台的阅读APP设计与实现

Leetcode 724. Find the central subscript of the array (yes, once)

![[high concurrency] deeply analyze the callable interface](/img/24/33c3011752c8f04937ad68d85d4ece.jpg)

[high concurrency] deeply analyze the callable interface

随机推荐

2022-2-16 learning the imitated Niuke project - Section 6 adding comments

QT 如何在背景图中将部分区域设置为透明

Use of abortcontroller

洛谷入门2【分支结构】题单题解

R language triple becomes matrix matrix becomes triple

Creation and use of static library (win10+vs2022

CAS之比较并交换

老师能给我说一下固收+产品主要投资于哪些方面?

FPGA based analog I ² C protocol system design (with main code)

ReentrantLock、ReentrantReadWriteLock、StampedLock

R language error

Pycharm安装与设置

[advanced mathematics] from normal vector to surface integral of the second kind

Great God developed the new H5 version of arXiv, saying goodbye to formula typography errors in one step, and the mobile phone can easily read literature

Massive data! Second level analysis! Flink+doris build a real-time data warehouse scheme

Knowledge map model

Programming skills: script scheduling

Maximum profit of stock (offer 63)

ReentrantLock、ReentrantReadWriteLock、StampedLock

ERROR L104: MULTIPLE PUBLIC DEFINITIONS