Surpassing the strongest ResNet variants! Google proposes a new convolution + attention network, CoAtNet, with 89.77% accuracy!

2022-06-13 02:32:00 【Prodigal son's private dishes】

Paper: https://arxiv.org/abs/2106.04803

Although Transformers have crossed over into computer vision with some good results, in most cases they still lag behind state-of-the-art convolutional networks.

Now Google has proposed a family of models called CoAtNets. As the name suggests, it combines Convolution and Attention.

The model reaches 86.0% top-1 accuracy on ImageNet, and 89.77% when the JFT dataset is also used, outperforming all existing convolutional networks and Transformers!

Convolution combined with self-attention: stronger generalization and higher model capacity

How did they decide to combine convolutional networks with Transformers into a new model?

First, the researchers observed that convolutional networks and Transformers each have their own strengths in two fundamental aspects of machine learning: generalization and model capacity.

Because convolutional layers have a strong inductive bias, convolutional networks generalize better and converge faster, while Transformers, with their attention mechanism, have higher model capacity and can benefit from large datasets.

Combining convolutional layers with attention layers could therefore yield better generalization and larger model capacity at the same time!
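To make the contrast concrete, here is a minimal toy sketch in pure Python, not the CoAtNet implementation. It assumes a 1-D sequence of scalar features, a hand-picked smoothing kernel, and a stripped-down self-attention with no learned projections (query, key, and value are all the raw input). The function names `conv1d` and `self_attention` are illustrative only. It shows one common reading of the inductive bias: a convolution mixes only a small local window with fixed weights, while attention mixes all positions with weights that depend on the input.

```python
import math

def conv1d(x, kernel):
    """Local mixing with fixed weights (zero padding at the borders)."""
    half = len(kernel) // 2
    n = len(x)
    out = []
    for i in range(n):
        total = 0.0
        for j, w in enumerate(kernel):
            idx = i + j - half
            if 0 <= idx < n:
                total += w * x[idx]
        out.append(total)
    return out

def self_attention(x):
    """Global mixing: softmax weights over all positions, computed from x itself.

    Simplifying assumption: query = key = value = x, with no learned projections.
    """
    n = len(x)
    out = []
    for i in range(n):
        scores = [x[i] * x[j] for j in range(n)]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        out.append(sum(e / z * x[j] for j, e in enumerate(exps)))
    return out

x = [1.0, 2.0, 0.0, 0.0, 0.0, 0.0]
y = list(x)
y[5] = 3.0  # change only a far-away position

kernel = [0.25, 0.5, 0.25]  # hypothetical fixed kernel
conv_same = conv1d(x, kernel)[0] == conv1d(y, kernel)[0]
attn_same = self_attention(x)[0] == self_attention(y)[0]
print("conv output at position 0 unchanged:", conv_same)
print("attention output at position 0 unchanged:", attn_same)
```

In this toy setting, the convolution output at position 0 ignores the distant change, while the attention output at position 0 responds to it. That restricted, fixed mixing is the kind of prior that can help generalization on less data, and the flexible, global mixing is what gives attention more capacity on large datasets.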

So here is the key question: how can they be combined effectively to achieve a better trade-off between accuracy and efficiency?

边栏推荐

- Mbedtls migration experience

- Understand HMM

- [learning notes] xr872 GUI littlevgl 8.0 migration (file system)

- Understanding and thinking about multi-core consistency

- [Dest0g3 520迎新赛] 拿到WP还整了很久的Dest0g3_heap

- Superficial understanding of conditional random fields

- [reading point paper] deeplobv3 rethinking atlas revolution for semantic image segmentation ASPP

- Number of special palindromes in basic exercise of test questions

- The precision of C language printf output floating point numbers

- Paipai loan parent company Xinye quarterly report diagram: revenue of RMB 2.4 billion, net profit of RMB 530million, a year-on-year decrease of 10%

猜你喜欢

Open source video recolor code

After idea uses c3p0 connection pool to connect to SQL database, database content cannot be displayed

智能安全配电装置如何减少电气火灾事故的发生?

Armv8-m learning notes - getting started

![How to solve the problem of obtaining the time through new date() and writing out the difference of 8 hours between the database and the current time [valid through personal test]](/img/c5/f17333cdb72a1ce09aa54e38dd0a8c.png)

How to solve the problem of obtaining the time through new date() and writing out the difference of 8 hours between the database and the current time [valid through personal test]

01 initial knowledge of wechat applet

Paper reading - jukebox: a generic model for music

![[reading papers] transformer miscellaneous notes, especially miscellaneous](/img/c3/7788b1bcd71b90c18cf66bb915db32.jpg)

[reading papers] transformer miscellaneous notes, especially miscellaneous

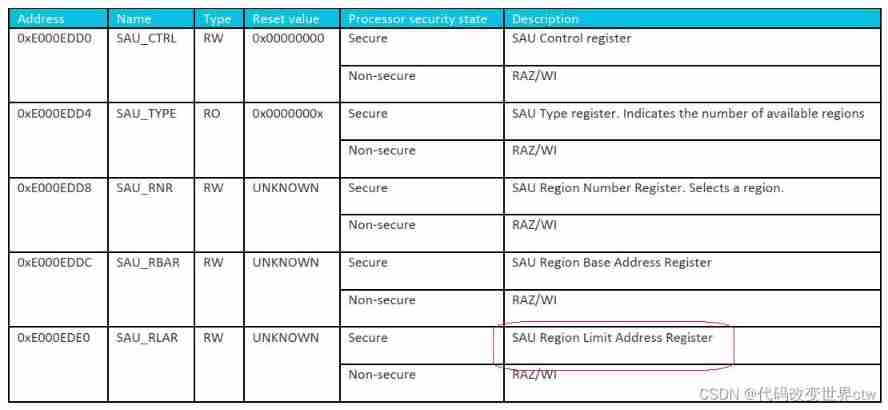

Armv8-m (Cortex-M) TrustZone summary and introduction

微信云开发粗糙理解

随机推荐

Exam23 named windows and simplified paths, grayscale conversion

02 优化微信开发者工具默认的结构

An image is word 16x16 words: transformers for image recognition at scale

AutoX. JS invitation code

About the fact that I gave up the course of "Guyue private room course ROS manipulator development from introduction to actual combat" halfway

[reading point paper] deeplobv3+ encoder decoder with Atlas separable revolution

Thinking back from the eight queens' question

Number of special palindromes in basic exercise of test questions

Introduction to armv8/armv9 - learning this article is enough

Automatic differential reference

Think: when do I need to disable mmu/i-cache/d-cache?

Opencv 08 demonstrates the effect of opening and closing operations of erode, dilate and morphological function morphologyex.

[pytorch] kaggle large image dataset data analysis + visualization

Hstack, vstack and dstack in numpy

【LeetCode-SQL】1532. Last three orders

智能安全配电装置如何减少电气火灾事故的发生?

A real-time target detection model Yolo

GMM Gaussian mixture model

ROS learning-7 error in custom message or service reference header file

[reading papers] comparison of deeplobv1-v3 series, brief review