Garbage collectors: serial, throughput-first, and response-time-first

2022-07-06 05:38:00 【Hey, fish bones ^o^】

4. Garbage collector

This article describes the serial, throughput-first, and response-time-first garbage collectors. G1, the default garbage collector since JDK 9, will be covered in the next article.

- Serial
  - Single-threaded
  - Suited to small heaps and personal computers
- Throughput first
  - Multi-threaded
  - Suited to large heaps and multi-core CPUs
  - Minimizes the total STW time per unit of time, e.g. 0.2 + 0.2 = 0.4
- Response time first
  - Multi-threaded
  - Suited to large heaps and multi-core CPUs
  - Keeps each individual STW pause as short as possible, e.g. 0.1 + 0.1 + 0.1 + 0.1 + 0.1 = 0.5

Next, let's look at garbage collectors. They fall into three categories: serial collectors, throughput-first collectors, and response-time-first collectors.

The serial collector, as the name suggests, is single-threaded. While garbage collection is in progress, all other threads are suspended and a single thread does all of the collection work. It fits scenarios where the heap is small, and extra CPU cores do not help because there is only one collector thread, so it suits personal computers. Think of a residential building with one cleaner, who plays the role of the single collector thread. If the building is a small three-story one, the cleaner may finish in a day. If it is a high-rise of more than 30 floors, the workload is so large that the job may take several days. That is the serial collector and its use case: a small heap and a machine with few CPU cores, such as a personal computer.

The throughput-first and response-time-first collectors are both multi-threaded. To continue the analogy: the building is tall, but I can hire more cleaners and give each of them one or more floors. Many hands make light work, so the cleaning can still be finished within the allotted time. These two kinds of collector therefore suit large heaps, and they generally need a multi-core CPU behind them. Why multi-core? Even with several threads, if there is only one core, the threads take turns competing for that core's time slices, and the result is no faster than a single thread. It is like having many cleaners but only one broom: they have to pass the broom around, which is no more efficient than one person cleaning alone. So these collectors suit large heaps, on the condition that a multi-core CPU is available for them to show their full strength. Multi-core machines with large heaps are typically servers, so both collectors are suited to servers.

Both are multi-threaded, so what is the difference between them? A response-time-first collector focuses on making each Stop The World pause as short as possible. As we know, garbage collection has to suspend the other threads, and they resume only after the garbage has been cleaned up. This period is called STW (stop the world), and the shorter it is the better. So the goal of a response-time-first collector is to keep each pause as short as possible. A throughput-first collector has a different goal: to minimize the total STW time per unit of time.

Both aim for the shortest STW, so how do they differ? Response-time-first minimizes the duration of a single STW pause. For example, suppose garbage collection is triggered five times in one hour and each collection takes only 0.1 s. The pauses add up to 0.5 s for the hour, but each one is very short. That is the goal of response-time-first. Throughput-first is different: a single collection may take longer, say 0.2 s, but it happens only twice in the hour, so the total is just 0.4 s. In terms of total time, it comes out ahead of response-time-first. Throughput is determined by the proportion of the program's running time that is spent on garbage collection: the lower that proportion, the higher the throughput.
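The arithmetic in the example above can be checked with a minimal sketch. The class and method names (`PauseComparison`, `totalPause`, `maxPause`, `throughput`) are made up for illustration, and the pause values are the ones from the example, not measurements.

```java
import java.util.Arrays;

public class PauseComparison {
    /** Total stop-the-world time: the sum of all pauses in the window. */
    public static double totalPause(double[] pausesSeconds) {
        return Arrays.stream(pausesSeconds).sum();
    }

    /** Longest single pause in the window (what response-time-first cares about). */
    public static double maxPause(double[] pausesSeconds) {
        return Arrays.stream(pausesSeconds).max().orElse(0.0);
    }

    /** Throughput: the fraction of the window NOT spent in garbage collection. */
    public static double throughput(double[] pausesSeconds, double windowSeconds) {
        return 1.0 - totalPause(pausesSeconds) / windowSeconds;
    }

    public static void main(String[] args) {
        double window = 3600.0; // one hour, as in the example
        double[] responseTimeFirst = {0.1, 0.1, 0.1, 0.1, 0.1};
        double[] throughputFirst = {0.2, 0.2};

        System.out.printf("response-time-first: total=%.1f s, max=%.1f s, throughput=%.6f%n",
                totalPause(responseTimeFirst), maxPause(responseTimeFirst),
                throughput(responseTimeFirst, window));
        System.out.printf("throughput-first:    total=%.1f s, max=%.1f s, throughput=%.6f%n",
                totalPause(throughputFirst), maxPause(throughputFirst),
                throughput(throughputFirst, window));
    }
}
```

The response-time-first run has the smaller maximum pause, while the throughput-first run has the smaller total, which is exactly the trade-off described above.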

Now let's learn about each garbage collector

4.1 Serial

-XX:+UseSerialGC = Serial + SerialOld

The first collector is the serial garbage collector. The VM parameter that enables it is:

-XX:+UseSerialGC = Serial + SerialOld

Note that the serial collector has two parts, Serial and SerialOld. Serial works in the young generation and uses the copying algorithm. SerialOld works in the old generation and uses mark-compact rather than copying. Compaction leaves no memory fragmentation, but it is less efficient. So this one JVM parameter turns on both a young-generation and an old-generation collector, and the two run separately. When the young generation runs out of space, Serial performs a Minor GC. Only when the old generation runs out of space is a Full GC triggered, in which Serial collects the young generation and SerialOld collects the old generation. So much for the JVM parameter. Now let's look at the collection process itself.

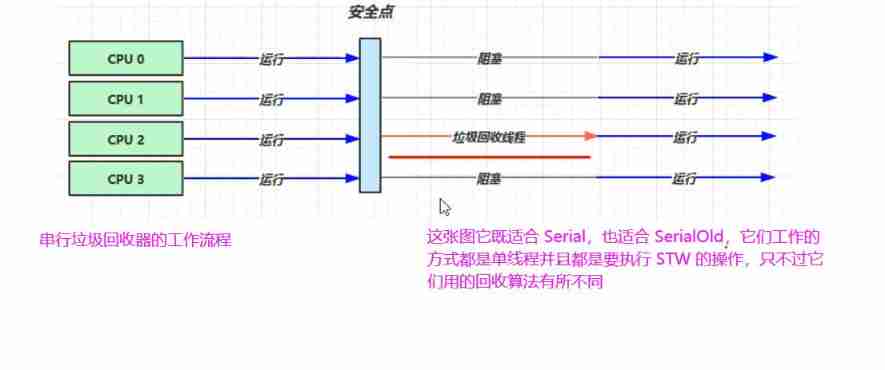

Suppose the CPU has four cores, numbered 0 to 3, and the user threads are all running. At some point the heap runs short of memory and a garbage collection is triggered. The first step is to have all of these threads stop at a safepoint. Why must they all stop? As explained earlier, objects may be moved during garbage collection, which changes their addresses. To keep object addresses safe to use, every running user thread has to reach a safepoint and pause, so that no other thread interferes while collection is under way. Otherwise, if an object has been moved and another thread looks for it, that thread may find the wrong address, and things go wrong.

Because Serial and SerialOld are both single-threaded collectors, only one garbage collection thread runs. While it runs, all user threads are blocked. Once the collection thread finishes, the user threads resume. That is how the serial collector works.

The figure applies to both Serial and SerialOld: each works on a single thread and requires an STW pause. They differ only in the collection algorithm they use.
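To see which young-generation and old-generation collectors a running JVM actually uses, the standard `java.lang.management` API can list them. This is a minimal sketch; the class name `ActiveCollectors` is made up for illustration. The reported names vary by collector and JVM build: on HotSpot builds I have seen, running with `-XX:+UseSerialGC` reports `Copy` and `MarkSweepCompact`, but treat those names as an observation rather than a guarantee.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

public class ActiveCollectors {
    /** Names of the collectors registered in this JVM (typically one young, one old). */
    public static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // getCollectionTime() is the approximate accumulated collection time in milliseconds.
            System.out.println(gc.getName()
                    + ": collections=" + gc.getCollectionCount()
                    + ", time=" + gc.getCollectionTime() + " ms");
        }
    }
}
```

Running it once with `-XX:+UseSerialGC` and once without makes the pairing of a young-generation and an old-generation collector visible.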

4.2 Throughput priority

-XX:+UseParallelGC ~ -XX:+UseParallelOldGC

These flags enable the throughput-first garbage collector, which is the default in JDK 1.8. The first flag is the young-generation throughput-first collector and the second is the old-generation one; enabling either automatically enables the other. The young-generation collector uses the copying algorithm and the old-generation collector uses mark-compact. Both are parallel collectors.

-XX:+UseAdaptiveSizePolicy

Dynamically adjusts the ratio of Eden to the survivor spaces, the heap size, the promotion threshold, and so on, in order to meet the goals below.

-XX:GCTimeRatio=ratio

A goal that the collector adjusts dynamically to meet. By the formula 1/(1+ratio), the share of total time taken by garbage collection must not exceed this value. The collector usually meets it by growing the heap, which lowers the frequency of collections and so reduces total collection time. Note: this goal concerns the proportion of total garbage collection time.

-XX:MaxGCPauseMillis=ms

The maximum time for any single collection. The collector usually meets it by shrinking the heap, because a smaller heap takes less time to collect. Since this means a smaller heap, it conflicts with the goal above. Note: this goal concerns the time of a single collection.

-XX:ParallelGCThreads=n

Controls the number of threads the throughput-first collector uses when it works.
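To check what values these flags have in a running JVM, HotSpot exposes them through `com.sun.management.HotSpotDiagnosticMXBean`. The sketch below is a minimal example that assumes a HotSpot-based JVM; the class name `ParallelGcFlags` and the `"unavailable"` fallback string are made up for illustration.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

public class ParallelGcFlags {
    /** Returns the current value of a VM flag, or "unavailable" if this JVM does not expose it. */
    public static String flagValue(String name) {
        try {
            HotSpotDiagnosticMXBean bean =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            return bean == null ? "unavailable" : bean.getVMOption(name).getValue();
        } catch (IllegalArgumentException e) {
            // Thrown when the flag (or the MXBean itself) does not exist on this JVM.
            return "unavailable";
        }
    }

    public static void main(String[] args) {
        String[] flags = {
            "UseAdaptiveSizePolicy", "GCTimeRatio", "MaxGCPauseMillis", "ParallelGCThreads"
        };
        for (String flag : flags) {
            System.out.println(flag + " = " + flagValue(flag));
        }
    }
}
```

Starting the program with, say, `-XX:+UseParallelGC -XX:GCTimeRatio=19` and comparing the output against a run without those options shows which values came from the command line and which are defaults.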

Next comes the throughput-first garbage collector. It is enabled with these two switches:

-XX:+UseParallelGC ~ -XX:+UseParallelOldGC

In JDK 1.8 they are already on by default; in other words, JDK 1.8 uses ParallelGC, a parallel garbage collector, out of the box. UseParallelGC selects the young-generation collector, which uses the copying algorithm. UseParallelOldGC, as its name suggests, is also a parallel collector, but it works in the old generation and uses mark-compact. In terms of algorithms alone, they match the serial collectors introduced earlier, and the old-generation collector likewise leaves no memory fragmentation. The main difference lies in the word Parallel: it indicates that both collectors (ParallelGC and ParallelOldGC) are multi-threaded. One interesting point about these two Parallel switches is that turning on either one automatically turns on the other.

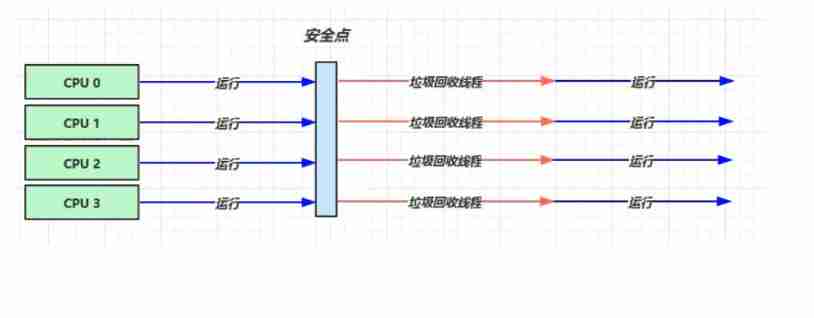

Then its working mode is like this . Is to assume that there are multiple cores cpu, Four core cpu, There are four threads running , At this time, there is insufficient memory

了 , Triggered a garbage collection , At this time, these user threads will run to a safe point and stop , This is the same as before

SerialGC It's similar , After stopping , The difference is that the garbage collector will start multiple threads for garbage collection ,

These multiple recycling threads swarmed , Let's clean up the garbage as soon as possible , The number of garbage collection threads here is by default

It's with you cpu The number of cores is related , As long as yours. cpu When the kernel number is less than a value , It's just like yours cpu The number of cores is exactly the same ,

For example, I now have four cores cpu, Then in the future, four garbage collection threads will be opened to complete the recycling work , Of course, after recycling , Restore others

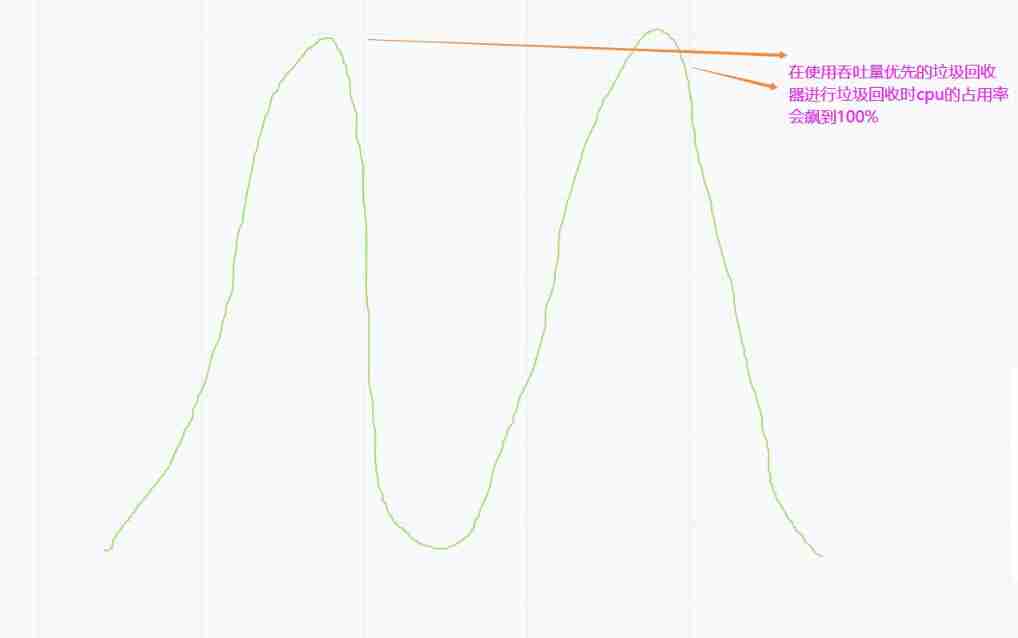

Thread running , When it works , One of them cpu The use curve of is probably like the figure above , Because four cores cpu Everyone is busy

Garbage collection , So in the process of garbage collection cpu The occupancy rate of will soar to 100%, If you encounter garbage collection next time , also

It's coming 100%, This is when its garbage collection occurs on cpu One effect of occupancy . But it also makes sense , Why? , Multicore cpu

I want to give full play to their performance , Let's go together , Finish the garbage collection work quickly , To resume the operation of the remaining user threads ,

Of course, the number of threads can be controlled by a parameter , Namely -XX:ParallelGCThreads=n You can control

ParallelGC One thread of it is counted at runtime .ParallelGC One of its characteristics is that it can be adjusted , According to a

Goal setting ParallelGC A way of working , There are three important parameters related to it , The first one is called

-XX:UseAdaptiveSizePolicy

, This means that I use an adaptive resizing strategy , Adjust whose size , It mainly refers to the adjustment of our new generation

A size , As for the new generation, we have said before , It is divided into Eden and two surviving areas , So if we turn this switch on , Then we

Of ParallelGC When working, it will dynamically adjust the proportion between our Eden and the surviving area , Including the size of the whole heap ,

It will be adjusted , Including the promotion threshold , It will also be affected by this switch , This is a switch , In addition to this switch ,

There are two more goals , The first goal -XX:GCTimeRatio=ratio and The second goal -XX:MaxGCPauseMillis=ms

They have different meanings and pull in opposite directions. ParallelGC is fairly smart: given a goal, it tries to resize the heap to meet it. The first goal, GCTimeRatio, is a throughput goal. It sets the ratio of garbage collection time to total time through the formula 1/(1 + ratio). Plugging in numbers makes this clear. The default value of ratio is 99, and 1/(1 + 99) = 1/100 = 0.01, which means garbage collection may take no more than 1% of total time. Put another way, out of 100 minutes of running, only 1 minute may go to garbage collection. If the goal is missed, ParallelGC tries to resize the heap to meet it, usually by growing it: a larger heap needs collecting less often, so total collection time drops and throughput rises.

The second parameter is MaxGCPauseMillis. Pause means a pause, Millis is the number of milliseconds, and Max in front makes it the maximum pause in milliseconds. A default of 200 ms is often quoted, but that is G1's default; the parallel collector has no maximum pause goal unless you set one.

Note that the two goals conflict. Pursuing GCTimeRatio generally makes the heap larger, which improves throughput. But with a larger heap, each collection may take longer, so the pause may no longer fit within MaxGCPauseMillis. Conversely, shortening the pause means shrinking the heap so that each collection is quick, but then throughput drops. You have to strike a compromise and pick reasonable values for your application. As for ratio, the default of 99 is hard to meet because, as just calculated, it allows only 1 minute of garbage collection pauses in 100 minutes. So ratio is commonly set to 19, that is, 1/(1 + 19) = 1/20 = 0.05, or 5 minutes of garbage collection in 100 minutes, which is much easier to reach.
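The formula 1/(1 + ratio) from the discussion above can be tried out with a few lines of code. This is only a sketch of the arithmetic; the class name `GcTimeRatio` and its methods are made up for illustration and are not part of any JVM API.

```java
public class GcTimeRatio {
    /** Largest share of total time that GC may take for a given -XX:GCTimeRatio value. */
    public static double maxGcFraction(int ratio) {
        return 1.0 / (1 + ratio);
    }

    /** GC time budget, in minutes, for a run of the given length. */
    public static double gcBudgetMinutes(int ratio, double runMinutes) {
        return runMinutes * maxGcFraction(ratio);
    }

    public static void main(String[] args) {
        for (int ratio : new int[] {99, 19}) {
            System.out.printf("ratio=%d -> GC share <= %.2f, budget in 100 min = %.0f min%n",
                    ratio, maxGcFraction(ratio), gcBudgetMinutes(ratio, 100.0));
        }
    }
}
```

With ratio 99 the budget is 1 minute in 100, and with ratio 19 it is 5 minutes in 100, matching the two cases worked through above.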

4.3 Response time first

-XX:+UseConcMarkSweepGC ~ -XX:+UseParNewGC ~ SerialOld

Enables the ConcMarkSweepGC garbage collector, CMS for short. It uses the mark-sweep algorithm, is a response-time-first collector, works in the old generation, and is concurrent. Because CMS uses mark-sweep, it produces memory fragmentation. When there are too many fragments, a concurrent failure occurs, and CMS degrades to SerialOld, the serial old-generation collector, which performs one collection using mark-compact. Because that collection is serial, the pause is long, and since CMS is meant to be a response-time-first collector, this gives users a bad experience.

-XX:ParallelGCThreads=n ~ -XX:ConcGCThreads

-XX:ParallelGCThreads=n sets the number of parallel garbage collection threads, which is usually the same as the number of CPU cores: with 4 cores, n is 4. The number of concurrent GC threads is different and is set with another parameter, -XX:ConcGCThreads. The usual recommendation is one quarter of the parallel thread count, so on a 4-core CPU its value is 1: one thread takes a core for garbage collection and the other three cores are left to user threads. These are the two thread-count settings.

-XX:CMSInitiatingOccupancyFraction=percent

Controls when CMS starts a collection. The long name roughly means the memory occupancy at which CMS collection is initiated, and percent is that occupancy. If it is set to 80, for example, CMS does not wait until memory runs out: it collects as soon as old-generation occupancy reaches 80%, which reserves some space for floating garbage. In early JVMs the default percent was around 65%. The smaller the value, the earlier CMS triggers a collection.

-XX:+CMSScavengeBeforeRemark

The remark phase has a special case: young-generation objects may reference old-generation objects. Remark therefore has to scan the whole heap, following references from the young generation into the old generation for reachability analysis. That hurts performance, because the young generation holds many objects and many of them are garbage themselves. Following references from objects that are about to be collected anyway is wasted work. With -XX:+CMSScavengeBeforeRemark, a young-generation collection (done by the ParNew collector that UseParNewGC enables) runs before remark. Fewer surviving young objects means fewer objects to scan, which takes pressure off the remark phase. It is a plain switch: + turns it on and - turns it off. In short: collect the young generation before remark to avoid useless scanning.
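The occupancy threshold described for -XX:CMSInitiatingOccupancyFraction can be illustrated with a small sketch. This is not HotSpot's actual trigger logic, which considers more than a single threshold; the class name `CmsTrigger` and the method `shouldStartCollection` are made up for illustration, and the numbers are the 80% and 65% values from the text.

```java
public class CmsTrigger {
    /**
     * Simplified model: start a CMS cycle once old-generation occupancy
     * reaches the configured percentage, instead of waiting for the heap to fill.
     */
    public static boolean shouldStartCollection(long usedOldBytes, long oldCapacityBytes, int percent) {
        // Integer arithmetic avoids floating-point rounding at the boundary.
        return usedOldBytes * 100 >= oldCapacityBytes * (long) percent;
    }

    public static void main(String[] args) {
        long capacity = 1_000L * 1024 * 1024; // hypothetical 1000 MiB old generation
        long used = 700L * 1024 * 1024;       // 70% occupied

        System.out.println("threshold 80: " + shouldStartCollection(used, capacity, 80));
        System.out.println("threshold 65: " + shouldStartCollection(used, capacity, 65));
    }
}
```

At 70% occupancy the cycle has already started under a threshold of 65 but not under 80, which is the sense in which a smaller value makes CMS trigger earlier and leaves more headroom for floating garbage.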

Next comes the response-time-first garbage collector, called CMS. The virtual machine parameter that enables it is

-XX:+UseConcMarkSweepGC ~ -XX:+UseParNewGC ~ SerialOld

Conc is short for Concurrent, Mark means mark, and Sweep means sweep away. If you recall the garbage collection algorithms introduced earlier, you can guess from the name that this is a collector based on the mark-sweep algorithm, and that it is concurrent. Note that Concurrent is not the same as the Parallel we met earlier, so let's explain the two terms briefly. Concurrent means the collector can work at the same time as the user threads: user threads and collection threads run concurrently and compete for the CPU. Parallel means that several collection threads run in parallel, but the user threads are not allowed to keep running while they do; in other words, it is STW. So CMS can, in some phases, collect while user threads keep working, which further reduces STW time. It still needs STW, but not in every phase of collection, and this is its biggest distinguishing feature.

CMS works in the old generation. Its partner is ParNewGC, a copying collector that works in the young generation; the two work as a pair. CMS sometimes suffers a concurrent failure, however. When that happens, a fallback kicks in: the old-generation collector degrades from the concurrent CMS to the single-threaded SerialOld, which we met with the serial collector and which uses mark-compact in the old generation. With its basic parameters covered, we can turn to how it works.

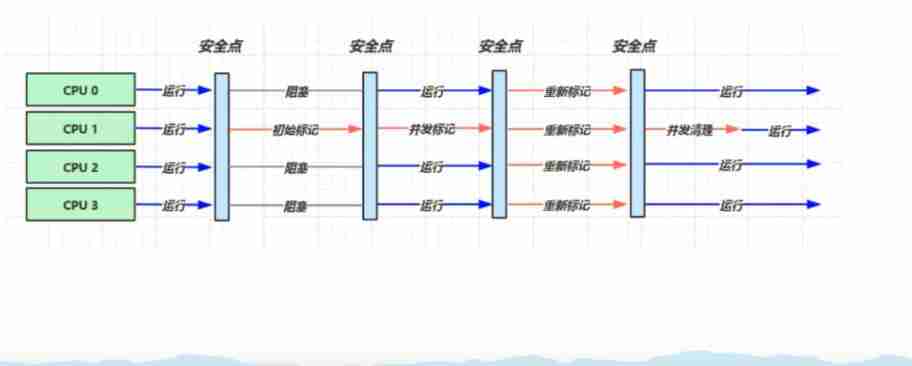

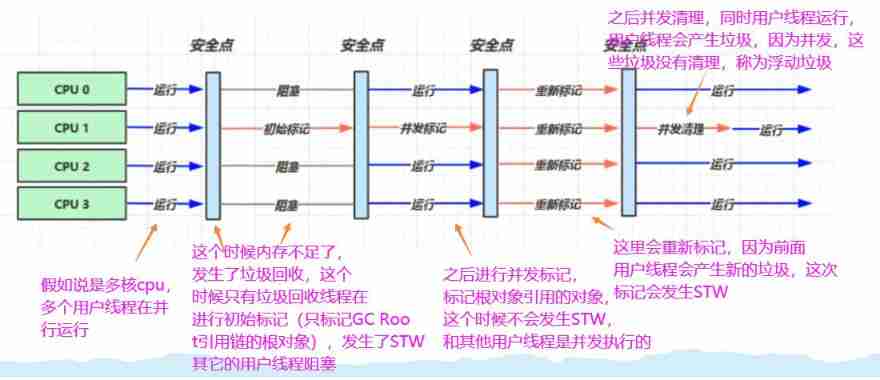

Let's take a look at its workflow, which is relatively complex. Several CPU cores start out running user threads in parallel. Then the old generation runs out of memory, and the threads reach a safepoint and pause. Now the CMS collector goes to work. It first performs an initial mark, which still requires STW, so the user threads are blocked. The initial mark is quick, though. Why? It marks only the root objects and does not traverse every object in the heap, so the pause is very short. Once the initial mark is over, the user threads resume, and at the same time the collection thread carries on with concurrent marking, tracing the remaining objects (the initial mark covered only the roots) to find the garbage. This runs concurrently with the user threads and needs no STW, so it hardly affects their work.

After concurrent marking comes one more step, called remark, which is STW again. Why? During concurrent marking the user threads are also working: they may create new objects or change references, which can interfere with marking. So once concurrent marking ends, the collector has to remark. When remark finishes, the user threads resume and the collection thread performs a concurrent sweep. Over the whole cycle, only the initial mark and remark phases cause STW; the other phases run concurrently. Response time is therefore very short, which fits the section title: an old-generation collector dedicated to response-time-first. A few details are worth noting.
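The four phases just described, and which of them stop the world, can be written down as a small model. This is only a summary of the text in code form, not how CMS is implemented; the names `CmsPhases`, `Phase`, and `stopTheWorldPhases` are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;

public class CmsPhases {
    /** The CMS phases in the order described in the text, tagged with whether they pause user threads. */
    public enum Phase {
        INITIAL_MARK(true),      // marks only the root objects, so the pause is short
        CONCURRENT_MARK(false),  // traces the rest of the heap alongside user threads
        REMARK(true),            // catches changes made by user threads during concurrent marking
        CONCURRENT_SWEEP(false); // reclaims garbage alongside user threads

        private final boolean stopTheWorld;

        Phase(boolean stopTheWorld) {
            this.stopTheWorld = stopTheWorld;
        }

        public boolean isStopTheWorld() {
            return stopTheWorld;
        }
    }

    /** The subset of phases that require an STW pause. */
    public static List<Phase> stopTheWorldPhases() {
        List<Phase> result = new ArrayList<>();
        for (Phase phase : Phase.values()) {
            if (phase.isStopTheWorld()) {
                result.add(phase);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        for (Phase phase : Phase.values()) {
            System.out.println(phase + (phase.isStopTheWorld() ? " (STW)" : " (concurrent)"));
        }
    }
}
```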

The first detail is thread counts, which are governed by two parameters. The first we have seen before: -XX:ParallelGCThreads=n, the number of parallel garbage collection threads, usually the same as the number of CPU cores, so n is 4 in our example. The number of concurrent GC threads is different and is set with another parameter, -XX:ConcGCThreads. The usual recommendation is one quarter of the parallel thread count, so on a 4-core CPU its value is 1: one thread takes a core for collection and the other three cores are left to user threads. Those are the two thread-count settings.

A word on CPU usage. CMS does not occupy the CPU as heavily as ParallelGC does: in the example, only one of the four cores is used for garbage collection. But the user threads are running too. They could have used all four cores, yet one is taken by the collection thread, so they get only 3/4 of the CPU. This affects the throughput of the whole application. A computation that took 1 s on four cores may take longer now that one core is given over to garbage collection. So although CMS achieves response-time-first, it takes up some CPU and thereby costs the application some throughput.
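The one-quarter recommendation and the resulting 3/4 CPU share for user threads can be worked through in code. This sketch implements the rule of thumb from the text, not HotSpot's own default calculation; the class `CmsThreadSplit` and its methods are made up for illustration.

```java
public class CmsThreadSplit {
    /** Rule of thumb from the text: a quarter of the parallel GC threads, but at least one. */
    public static int concurrentGcThreads(int parallelGcThreads) {
        return Math.max(1, parallelGcThreads / 4);
    }

    /** Share of the cores left to user threads while concurrent GC threads are running. */
    public static double userCpuShare(int cores, int concGcThreads) {
        return (double) (cores - concGcThreads) / cores;
    }

    public static void main(String[] args) {
        int cores = 4;                        // the four-core machine from the example
        int parallel = cores;                 // ParallelGCThreads usually matches the core count
        int concurrent = concurrentGcThreads(parallel);

        System.out.println("ParallelGCThreads = " + parallel);
        System.out.println("ConcGCThreads     = " + concurrent);
        System.out.println("user CPU share    = " + userCpuShare(cores, concurrent));
    }
}
```

On the four-core example this gives one concurrent GC thread and a user share of 0.75, which is the throughput cost discussed above.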

Now for the other parameters. While CMS performs its concurrent sweep, the user threads keep running and may produce new garbage. The sweep cannot clean up this new garbage as it goes, so it has to wait for the next collection. We call it floating garbage. This raises a problem: since new garbage can appear during collection, CMS cannot do what other collectors do and wait until the heap is out of memory before collecting, because there would be nowhere to put the new garbage. Some space has to be reserved for floating garbage. The parameter -XX:CMSInitiatingOccupancyFraction=percent controls when CMS starts a collection. The long name roughly means the memory occupancy at which CMS collection is initiated, and percent is that occupancy. If it is set to 80, for example, CMS does not wait until memory runs out: it collects as soon as old-generation occupancy reaches 80%, reserving some space for floating garbage. In early JVMs the default percent was around 65%. The smaller the value, the earlier CMS triggers a collection.

The last parameter is

-XX:+CMSScavengeBeforeRemark

The remark phase has a special case: some young-generation objects may reference old-generation objects. Remark therefore has to scan the whole heap, following references from the young generation into the old generation for reachability analysis. That hurts performance. Why? The young generation holds many newly created objects, and many of them are garbage themselves. If I follow references from the young generation into the old generation, the young-generation garbage I started from will be collected anyway, so I have done a lot of useless searching before the collection. To avoid this, -XX:+CMSScavengeBeforeRemark runs a young-generation collection before remark, done by the ParNew collector that UseParNewGC enables. After that collection, fewer objects survive in the young generation, so there are fewer objects to scan, which takes pressure off the remark phase. It is a plain switch: + turns it on and - turns it off.

One more point about CMS. Because it is based on mark-sweep, it can produce a lot of memory fragmentation. A later Minor GC may then find no room in the old generation when promoting objects, because the old generation is too fragmented, and the result is a concurrent failure. In other words, too many fragments cause a concurrent failure, and the CMS old-generation collector can no longer work normally. The collector then degrades to SerialOld and does a single-threaded serial collection that compacts the heap; only after the fragments are reduced can CMS carry on. Once a concurrent failure occurs, collection time suddenly soars. A collector that was supposed to put response time first ends up with a very long response time, which makes for a bad user experience. This is the biggest problem with the CMS collector.

This article is a wall of text and does not look pretty, but it explains the principles in detail and the explanation flows as one continuous piece, so I did not break it up. I think reading it patiently, and working to understand it as you go, is very rewarding.

边栏推荐

- Vulhub vulnerability recurrence 73_ Webmin

- js Array 列表 实战使用总结

- SQLite queries the maximum value and returns the whole row of data

- 类和对象(一)this指针详解

- Codeforces Round #804 (Div. 2) Editorial(A-B)

- Codeless June event 2022 codeless Explorer conference will be held soon; AI enhanced codeless tool launched

- B站刘二大人-多元逻辑回归 Lecture 7

- LeetCode_字符串反转_简单_557. 反转字符串中的单词 III

- 改善Jpopup以实现动态控制disable

- Graduation design game mall

猜你喜欢

![[experience] install Visio on win11](/img/f5/42bd597340d0aed9bfd13620bb0885.png)

[experience] install Visio on win11

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

![[untitled]](/img/7e/d0724193f2f2c8681a68bda9e08289.jpg)

[untitled]

Rustdesk builds its own remote desktop relay server

What is independent IP and how about independent IP host?

Codeforces Round #804 (Div. 2) Editorial(A-B)

Promise summary

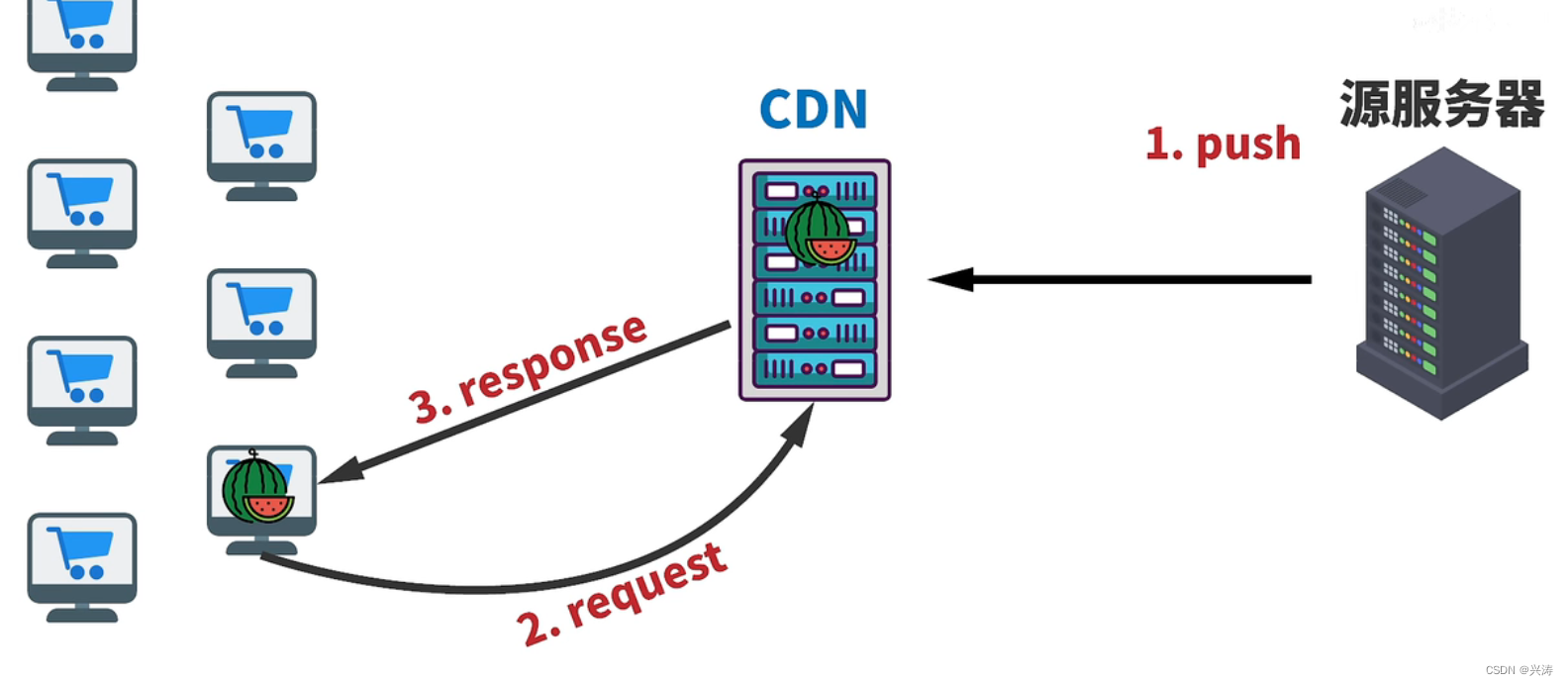

初识CDN

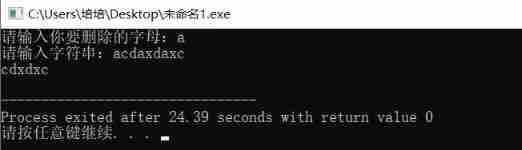

(column 22) typical column questions of C language: delete the specified letters in the string.

js Array 列表 实战使用总结

随机推荐

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

Web Security (V) what is a session? Why do I need a session?

Redis消息队列

What preparations should be made for website server migration?

[SQL Server fast track] - authentication and establishment and management of user accounts

巨杉数据库再次亮相金交会,共建数字经济新时代

ArcGIS应用基础4 专题图的制作

01. Project introduction of blog development project

Self built DNS server, the client opens the web page slowly, the solution

Codeforces Round #804 (Div. 2) Editorial(A-B)

PDK工艺库安装-CSMC

Promise summary

指針經典筆試題

【LeetCode】18、四数之和

Jvxetable用slot植入j-popup

【torch】|torch. nn. utils. clip_ grad_ norm_

Codeforces Round #804 (Div. 2) Editorial(A-B)

HAC cluster modifying administrator user password

js Array 列表 实战使用总结

注释、接续、转义等符号