
Paper reading (0): AlexNet for classification

2022-07-28 22:54:00 【leu_mon】

AlexNet

Authors: Alex Krizhevsky, Ilya Sutskever, Geoffrey E. Hinton

Institution: University of Toronto

Venue: NeurIPS 2012 (then called NIPS); winner of ILSVRC 2012

Title: ImageNet Classification with Deep Convolutional Neural Networks

Abstract

We trained a large, deep convolutional neural network to classify the 1.2 million high-resolution images of the ImageNet LSVRC-2010 contest into 1000 different classes. On the test data we achieved top-1 and top-5 error rates of 37.5% and 17.0%, considerably better than the previous state of the art. The network has 60 million parameters and 650,000 neurons. It consists of five convolutional layers (some of which are followed by max-pooling layers) and three fully connected layers, with a final 1000-way softmax. To make training faster, we used non-saturating neurons (ReLU) and a very efficient GPU implementation of the convolution operation. To reduce overfitting in the fully connected layers, we used a recently developed regularization method called dropout, which proved very effective. We also entered a variant of this model in the ILSVRC-2012 competition and won with a top-5 test error rate of 15.3%, compared with 26.2% for the second-best entry.
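The layer counts and the "60 million parameters" in the abstract can be checked with a little arithmetic. The sketch below walks a single-tower version of the network layer by layer and counts weights and biases. It rests on stated assumptions: the kernel sizes, strides, padding and channel counts follow the paper's description, but the two-GPU split is ignored (the original restricts some connections, so its real count is somewhat lower), and the input is taken as 227×227 rather than the stated 224×224, because 227 is what lets an 11×11, stride-4, unpadded convolution produce the 55×55 maps the paper reports. `LAYERS`, `out_size` and `walk` are hypothetical names.

```python
# Hypothetical single-tower AlexNet layout (the paper splits some layers across two GPUs).
# Each entry: (name, kind, channels_or_units, kernel, stride, padding)
LAYERS = [
    ("conv1", "conv", 96, 11, 4, 0),
    ("pool1", "pool", None, 3, 2, 0),
    ("conv2", "conv", 256, 5, 1, 2),
    ("pool2", "pool", None, 3, 2, 0),
    ("conv3", "conv", 384, 3, 1, 1),
    ("conv4", "conv", 384, 3, 1, 1),
    ("conv5", "conv", 256, 3, 1, 1),
    ("pool5", "pool", None, 3, 2, 0),
    ("fc6", "fc", 4096, None, None, None),
    ("fc7", "fc", 4096, None, None, None),
    ("fc8", "fc", 1000, None, None, None),
]


def out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def walk(input_size: int = 227, in_channels: int = 3):
    """Return per-layer (name, spatial size, channels, parameters) and the total."""
    size, channels, total, rows = input_size, in_channels, 0, []
    for name, kind, n, k, s, p in LAYERS:
        if kind == "conv":
            params = n * (k * k * channels + 1)  # weights plus one bias per kernel
            size, channels = out_size(size, k, s, p), n
        elif kind == "pool":
            params = 0  # max pooling has nothing to learn
            size = out_size(size, k, s, p)
        else:  # fully connected: the incoming feature map is flattened
            params = n * (size * size * channels + 1)
            size, channels = 1, n
        total += params
        rows.append((name, size, channels, params))
    return rows, total


rows, total = walk()
for name, size, channels, params in rows:
    print(f"{name:6s} {size:3d}x{size:<3d}x{channels:<5d} params={params:,}")
print(f"total parameters: {total:,}")
```

Under these assumptions the feature maps shrink 55 → 27 → 13 → 6, fc6 receives 6×6×256 = 9216 inputs, and the total comes to about 62.4 million, in line with the roughly 60 million quoted above. Most of it sits in the fully connected layers: fc6 alone holds about 37.8 million.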

Background

The immense complexity of object recognition means that the problem cannot be fully specified even by a dataset as large as ImageNet, so the model also needs a lot of prior knowledge. Convolutional neural networks (CNNs) provide it: their capacity can be controlled by varying their depth and breadth, and they make strong and mostly correct assumptions about the nature of images (namely, stationarity of statistics and locality of pixel dependencies).

Compared with standard feedforward neural networks with similarly sized layers, CNNs have far fewer connections and parameters and are therefore easier to train, but applying them at large scale to high-resolution images is still expensive.
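To make the "fewer parameters" claim concrete, compare a first convolutional layer with a fully connected layer that maps the same input to the same output size. This is a minimal sketch assuming a 227×227×3 input and a 55×55×96 output (the usual first-layer geometry with 96 kernels of size 11×11); the function names are illustrative, not from the paper.

```python
def conv_params(in_channels: int, out_channels: int, kernel: int) -> int:
    # One kernel per output channel, shared across every spatial position,
    # so the count does not depend on the image size.
    return out_channels * (kernel * kernel * in_channels + 1)


def dense_params(in_features: int, out_features: int) -> int:
    # Every input unit has its own weight to every output unit, plus one bias per output.
    return out_features * (in_features + 1)


# Assumed first-layer geometry: 227x227x3 input -> 55x55x96 output.
conv = conv_params(in_channels=3, out_channels=96, kernel=11)
dense = dense_params(in_features=227 * 227 * 3, out_features=55 * 55 * 96)
print(f"convolutional layer: {conv:,} parameters")
print(f"fully connected layer: {dense:,} parameters")
print(f"ratio: about {dense // conv:,}x")
```

Weight sharing makes the difference: the convolution reuses 96 small kernels at every position, while the dense layer would need more than a million times as many parameters for the same input and output sizes.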

Current GPUs, paired with a highly optimized implementation of 2D convolution, are powerful enough to train large CNNs, and recent datasets such as ImageNet contain enough labeled examples to train such models without severe overfitting.

Model

The first five layers of the network are convolutional and the remaining three are fully connected. The output of the last fully connected layer is fed to a 1000-way softmax, which produces a distribution over the 1000 class labels. The network maximizes the multinomial logistic regression objective, which is equivalent to maximizing the average, across training cases, of the log-probability of the correct label under the predicted distribution.
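The objective in the last sentence is short to state in code. A minimal sketch on plain Python lists: `softmax` turns the outputs of the last layer (1000 in the paper, 3 here) into a distribution, and `mean_log_prob` is the quantity training maximizes; minimizing the familiar cross-entropy loss is the same thing with the sign flipped. Function names and toy logits are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtracting the max avoids overflow and leaves the result unchanged.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mean_log_prob(batch_logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Average over the batch of log p(correct label); training maximizes this."""
    return sum(math.log(softmax(z)[y]) for z, y in zip(batch_logits, labels)) / len(labels)


def cross_entropy(batch_logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    # The usual cross-entropy loss is the negative of the objective above.
    return -mean_log_prob(batch_logits, labels)


# Toy batch: 2 samples, 3 classes (made-up logits).
batch_logits = [[2.0, 1.0, 0.1], [0.5, 2.5, 0.3]]
labels = [0, 1]
print(mean_log_prob(batch_logits, labels))
print(cross_entropy(batch_logits, labels))
```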

Results

The authors trained five similar CNNs on the ILSVRC-2012 training set; averaging their predictions gives a top-5 error rate of 16.4%. They also trained CNNs with an extra sixth convolutional layer on top of the last pooling layer, pre-trained on the entire ImageNet Fall 2011 release and then fine-tuned on the ILSVRC-2012 training set. Averaging the predictions of two such pre-trained CNNs with the five above (seven CNNs in total) gives a top-5 error rate of 15.3%. The second-best entry achieved 26.2%.
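Both the averaging over several networks and the top-k metric can be sketched in a few lines. This assumes that "averaging predictions" means averaging the softmax probability vectors of the individual models, which is the common reading but is not spelled out above. A top-k error counts an image as wrong only if its true label is not among the k most probable classes (ILSVRC reports k = 1 and k = 5). Helper names and toy logits are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # shift for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_predict(per_model_logits: Sequence[Sequence[float]]) -> List[float]:
    """Average the softmax distributions that several models produce for one image."""
    probs = [softmax(z) for z in per_model_logits]
    return [sum(p[c] for p in probs) / len(probs) for c in range(len(probs[0]))]


def top_k_error(prob_rows: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of images whose true label is not among the k most probable classes."""
    misses = 0
    for row, y in zip(prob_rows, labels):
        top = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if y not in top:
            misses += 1
    return misses / len(labels)


# Two images, two models, three classes (made-up logits).
logits = [
    [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0]],  # image A, true class 0: model 2 prefers class 1
    [[0.0, 0.0, 1.0], [0.0, 0.5, 1.5]],  # image B, true class 2: both models agree
]
labels = [0, 2]
ensemble = [ensemble_predict(per_model) for per_model in logits]
model2_only = [softmax(per_model[1]) for per_model in logits]
print(top_k_error(ensemble, labels, k=1))     # 0.0
print(top_k_error(model2_only, labels, k=1))  # 0.5
```

In this toy case the averaged distribution corrects model 2's mistake on image A, which is the effect the paper exploits when it averages five or seven networks.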

Figure 3 of the paper shows the 96 convolutional kernels of size 11×11×3 learned by the first convolutional layer, the one connected directly to the input image (48 kernels on each GPU). The network has learned a variety of frequency- and orientation-selective kernels, as well as various colored blobs.

(Left) The paper qualitatively evaluates what the network has learned through its top-5 predictions on eight test images. Note that even off-center objects, such as the mite in the top-left image, are recognized by the network. (Right) The first column shows five ILSVRC-2010 test images. The remaining columns show the six training images whose feature vectors in the last hidden layer have the smallest Euclidean distance to the feature vector of the test image.
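The right-hand panel uses the network as a feature extractor: each image is represented by its last-hidden-layer activation vector (4096-dimensional in the paper), and similar images are those at the smallest Euclidean distance. A brute-force sketch, with short made-up vectors standing in for real activations; `nearest_training_images` is a hypothetical helper, and `math.dist` requires Python 3.8 or later.

```python
import math
from typing import List, Sequence, Tuple


def nearest_training_images(
    query: Sequence[float], train_features: Sequence[Sequence[float]], k: int = 6
) -> List[Tuple[int, float]]:
    """Brute force: (index, Euclidean distance) of the k training vectors closest to query."""
    dists = [(i, math.dist(query, f)) for i, f in enumerate(train_features)]
    dists.sort(key=lambda pair: pair[1])
    return dists[:k]


# Made-up 3-D "features"; real ones would be last-hidden-layer activations.
train_features = [
    [0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 2.0, 0.0],
    [5.0, 5.0, 5.0],
    [0.0, 0.0, 0.5],
]
query = [0.0, 0.0, 0.1]
for idx, d in nearest_training_images(query, train_features, k=3):
    print(idx, round(d, 3))
```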

Training & testing

- Batch size 128, momentum 0.9, weight decay 0.0005

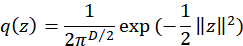

- The weights of every layer are initialized from a zero-mean Gaussian distribution with standard deviation 0.01

- The learning rate is initialized to 0.01 and divided by 10 whenever the validation error stops improving
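The list gives the hyperparameters but not the update itself. The paper's rule with momentum and weight decay is v ← 0.9·v − 0.0005·ε·w − ε·∂L/∂w, followed by w ← w + v, where ε is the learning rate and the gradient is averaged over a batch of 128 images. The sketch implements that rule on plain lists, together with the Gaussian initialization. The paper adjusted the learning rate by hand, so `PlateauSchedule` and its `patience` parameter are assumptions that automate the "divide by 10 when validation error stops improving" heuristic; all names, the toy loss and the validation errors are hypothetical.

```python
import random
from typing import List

MOMENTUM = 0.9
WEIGHT_DECAY = 0.0005


def init_weights(n: int, rng: random.Random) -> List[float]:
    # Zero-mean Gaussian with standard deviation 0.01.
    return [rng.gauss(0.0, 0.01) for _ in range(n)]


def sgd_step(w: List[float], v: List[float], grad: List[float], lr: float) -> None:
    """In-place update: v <- 0.9 v - 0.0005 lr w - lr grad;  w <- w + v."""
    for i in range(len(w)):
        v[i] = MOMENTUM * v[i] - WEIGHT_DECAY * lr * w[i] - lr * grad[i]
        w[i] += v[i]


class PlateauSchedule:
    """Divide the learning rate by 10 once validation error stops improving.

    Assumption: 'stops improving' means `patience` consecutive epochs without a new best.
    """

    def __init__(self, lr: float = 0.01, patience: int = 1):
        self.lr, self.patience = lr, patience
        self.best, self.bad_epochs = float("inf"), 0

    def step(self, val_error: float) -> float:
        if val_error < self.best:
            self.best, self.bad_epochs = val_error, 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr /= 10
                self.bad_epochs = 0
        return self.lr


# Toy run on the loss 0.5 * ||w||^2 (its gradient is w itself) with made-up validation errors.
rng = random.Random(0)
w = init_weights(5, rng)
v = [0.0] * len(w)
schedule = PlateauSchedule()
for epoch, val_err in enumerate([0.50, 0.45, 0.46, 0.44]):
    sgd_step(w, v, grad=list(w), lr=schedule.lr)
    print(epoch, schedule.step(val_err))
```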

Highlights of the article

Listed in the order of importance estimated by the authors

- ReLU activation: training is several times faster than with the equivalent tanh activation

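- Multi-GPU model-parallel training: the network is split across two GPUs that communicate only in certain layers; the connectivity pattern between them was chosen by cross-validation, so the amount of communication could be tuned to an acceptable fraction of the computation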

- Local response normalization: applied after the ReLU in some layers; it is rarely seen in later work

- Overlapping pooling: in earlier CNNs, neighboring pooling windows did not overlap; the authors observe that models with overlapping pooling are slightly more difficult to overfit

- Data augmentation: random translations and horizontal reflections of the images, plus altering the intensities of the RGB channels

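- Dropout: reduces overfitting. Combining the predictions of many models (model ensembling) is an effective way to reduce test error, but it is too expensive for large networks that already take days to train. Dropout instead sets the output of each hidden neuron to zero with probability 0.5, and it only roughly doubles the number of iterations needed to converge.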
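Several items in the list name a mechanism without showing it. A minimal sketch of four of them on plain Python lists, assuming the hyperparameters reported in the paper (LRN with k = 2, n = 5, α = 10⁻⁴, β = 0.75; pooling window z = 3 with stride s = 2; dropout probability 0.5, with outputs multiplied by 0.5 at test time). The 1-D pooling and the single-position LRN are simplifications, since real layers operate on 4-D tensors, and all function names are hypothetical.

```python
import random
from typing import List, Sequence


def relu(x: float) -> float:
    # Non-saturating: the gradient stays 1 for every positive input.
    return max(0.0, x)


def local_response_norm(a: Sequence[float], k: float = 2.0, n: int = 5,
                        alpha: float = 1e-4, beta: float = 0.75) -> List[float]:
    """LRN across kernels at one spatial position; a[i] is the ReLU output of kernel i."""
    N = len(a)
    out = []
    for i in range(N):
        lo, hi = max(0, i - n // 2), min(N - 1, i + n // 2)
        s = sum(a[j] ** 2 for j in range(lo, hi + 1))  # squares of n adjacent kernel outputs
        out.append(a[i] / (k + alpha * s) ** beta)
    return out


def max_pool_1d(xs: Sequence[float], z: int, s: int) -> List[float]:
    # Window size z, stride s; s < z makes neighboring windows overlap (paper: z=3, s=2).
    return [max(xs[i:i + z]) for i in range(0, len(xs) - z + 1, s)]


def dropout(h: Sequence[float], p: float, train: bool, rng: random.Random) -> List[float]:
    if train:
        # Each hidden unit's output is set to zero with probability p.
        return [0.0 if rng.random() < p else x for x in h]
    # Test time: keep every unit but scale outputs by (1 - p), i.e. by 0.5 when p = 0.5.
    return [x * (1 - p) for x in h]


xs = [1, 3, 2, 5, 4, 0, 6]
print(max_pool_1d(xs, z=3, s=2))  # overlapping windows: [3, 5, 6]
print(max_pool_1d(xs, z=2, s=2))  # non-overlapping windows: [3, 5, 4]
print(local_response_norm([1.0, 4.0, 2.0, 0.0, 3.0]))
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, train=True, rng=random.Random(0)))
```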

Authors' outlook

- Results could be improved simply by waiting for faster GPUs and larger datasets to become available

- Apply very large and deep convolutional networks to video sequences, whose temporal structure provides very helpful information that is missing or far less obvious in static images
