当前位置:网站首页>深度学习实验:Softmax实现手写数字识别

深度学习实验:Softmax实现手写数字识别

2022-07-26 16:56:00 【老师我作业忘带了】

文章相关知识点:AI遮天传 DL-回归与分类_老师我作业忘带了的博客-CSDN博客

MNIST数据集

MNIST手写数字数据集是机器学习领域中广泛使用的图像分类数据集。它包含60,000个训练样本和10,000个测试样本。这些数字已进行尺寸规格化,并在固定尺寸的图像中居中。每个样本都是一个784×1的矩阵,是从原始的28×28灰度图像转换而来的。MNIST中的数字范围是0到9。下面显示了一些示例。 注意:在训练期间,切勿以任何形式使用有关测试样本的信息。

代码清单

- data/ 文件夹:存放MNIST数据集。下载数据,解压后存放于该文件夹下。下载链接:MNIST handwritten digit database, Yann LeCun, Corinna Cortes and Chris Burges

- solver.py 这个文件中实现了训练和测试的流程。;

- dataloader.py 实现了数据加载器,可用于准备数据以进行训练和测试;

- visualize.py 实现了plot_loss_and_acc函数,该函数可用于绘制损失和准确率曲线;

- optimizer.py 实现带momentum的SGD优化器,可用于执行参数更新;

- loss.py 实现softmax_cross_entropy_loss,包含loss的计算和梯度计算;

- runner.ipynb 完成所有代码后的执行文件,执行训练和测试过程。

要求

- 记录训练和测试的准确率。画出训练损失和准确率曲线;

- 比较使用和不使用momentum结果的不同,可以从训练时间,收敛性和准确率等方面讨论差异;

- 调整其他超参数,如学习率,Batchsize等,观察这些超参数如何影响分类性能。写下观察结果并将这些新结果记录在报告中。

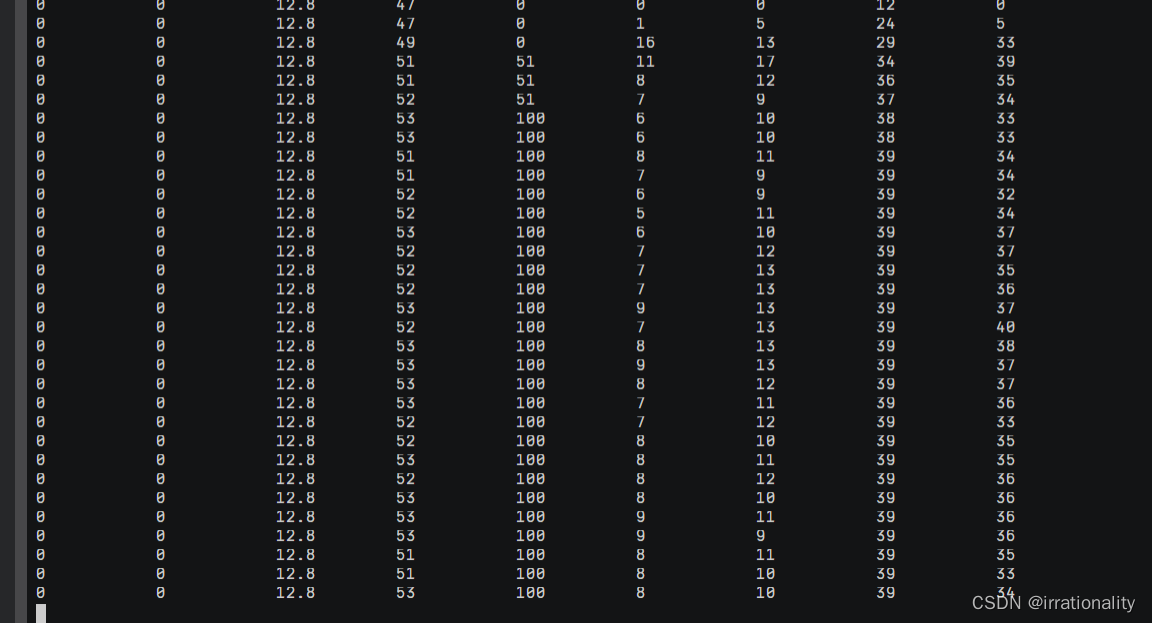

运行结果如下:

代码如下:

solver.py

import numpy as np

from layers import FCLayer

from dataloader import build_dataloader

from network import Network

from optimizer import SGD

from loss import SoftmaxCrossEntropyLoss

from visualize import plot_loss_and_acc

class Solver(object):

def __init__(self, cfg):

self.cfg = cfg

# build dataloader

train_loader, val_loader, test_loader = self.build_loader(cfg)

self.train_loader = train_loader

self.val_loader = val_loader

self.test_loader = test_loader

# build model

self.model = self.build_model(cfg)

# build optimizer

self.optimizer = self.build_optimizer(self.model, cfg)

# build evaluation criterion

self.criterion = SoftmaxCrossEntropyLoss()

@staticmethod

def build_loader(cfg):

train_loader = build_dataloader(

cfg['data_root'], cfg['max_epoch'], cfg['batch_size'], shuffle=True, mode='train')

val_loader = build_dataloader(

cfg['data_root'], 1, cfg['batch_size'], shuffle=False, mode='val')

test_loader = build_dataloader(

cfg['data_root'], 1, cfg['batch_size'], shuffle=False, mode='test')

return train_loader, val_loader, test_loader

@staticmethod

def build_model(cfg):

model = Network()

model.add(FCLayer(784, 10))

return model

@staticmethod

def build_optimizer(model, cfg):

return SGD(model, cfg['learning_rate'], cfg['momentum'])

def train(self):

max_epoch = self.cfg['max_epoch']

epoch_train_loss, epoch_train_acc = [], []

for epoch in range(max_epoch):

iteration_train_loss, iteration_train_acc = [], []

for iteration, (images, labels) in enumerate(self.train_loader):

# forward pass

logits = self.model.forward(images)

loss, acc = self.criterion.forward(logits, labels)

# backward_pass

delta = self.criterion.backward()

self.model.backward(delta)

# updata the model weights

self.optimizer.step()

# restore loss and accuracy

iteration_train_loss.append(loss)

iteration_train_acc.append(acc)

# display iteration training info

if iteration % self.cfg['display_freq'] == 0:

print("Epoch [{}][{}]\t Batch [{}][{}]\t Training Loss {:.4f}\t Accuracy {:.4f}".format(

epoch, max_epoch, iteration, len(self.train_loader), loss, acc))

avg_train_loss, avg_train_acc = np.mean(iteration_train_loss), np.mean(iteration_train_acc)

epoch_train_loss.append(avg_train_loss)

epoch_train_acc.append(avg_train_acc)

# validate

avg_val_loss, avg_val_acc = self.validate()

# display epoch training info

print('\nEpoch [{}]\t Average training loss {:.4f}\t Average training accuracy {:.4f}'.format(

epoch, avg_train_loss, avg_train_acc))

# display epoch valiation info

print('Epoch [{}]\t Average validation loss {:.4f}\t Average validation accuracy {:.4f}\n'.format(

epoch, avg_val_loss, avg_val_acc))

return epoch_train_loss, epoch_train_acc

def validate(self):

logits_set, labels_set = [], []

for images, labels in self.val_loader:

logits = self.model.forward(images)

logits_set.append(logits)

labels_set.append(labels)

logits = np.concatenate(logits_set)

labels = np.concatenate(labels_set)

loss, acc = self.criterion.forward(logits, labels)

return loss, acc

def test(self):

logits_set, labels_set = [], []

for images, labels in self.test_loader:

logits = self.model.forward(images)

logits_set.append(logits)

labels_set.append(labels)

logits = np.concatenate(logits_set)

labels = np.concatenate(labels_set)

loss, acc = self.criterion.forward(logits, labels)

return loss, acc

if __name__ == '__main__':

# You can modify the hyerparameters by yourself.

relu_cfg = {

'data_root': 'data',

'max_epoch': 10,

'batch_size': 100,

'learning_rate': 0.1,

'momentum': 0.9,

'display_freq': 50,

'activation_function': 'relu',

}

runner = Solver(relu_cfg)

relu_loss, relu_acc = runner.train()

test_loss, test_acc = runner.test()

print('Final test accuracy {:.4f}\n'.format(test_acc))

# You can modify the hyerparameters by yourself.

sigmoid_cfg = {

'data_root': 'data',

'max_epoch': 10,

'batch_size': 100,

'learning_rate': 0.1,

'momentum': 0.9,

'display_freq': 50,

'activation_function': 'sigmoid',

}

runner = Solver(sigmoid_cfg)

sigmoid_loss, sigmoid_acc = runner.train()

test_loss, test_acc = runner.test()

print('Final test accuracy {:.4f}\n'.format(test_acc))

plot_loss_and_acc({

"relu": [relu_loss, relu_acc],

"sigmoid": [sigmoid_loss, sigmoid_acc],

})

dataloader.py

import os

import struct

import numpy as np

class Dataset(object):

def __init__(self, data_root, mode='train', num_classes=10):

assert mode in ['train', 'val', 'test']

# load images and labels

kind = {'train': 'train', 'val': 'train', 'test': 't10k'}[mode]

labels_path = os.path.join(data_root, '{}-labels-idx1-ubyte'.format(kind))

images_path = os.path.join(data_root, '{}-images-idx3-ubyte'.format(kind))

with open(labels_path, 'rb') as lbpath:

magic, n = struct.unpack('>II', lbpath.read(8))

labels = np.fromfile(lbpath, dtype=np.uint8)

with open(images_path, 'rb') as imgpath:

magic, num, rows, cols = struct.unpack('>IIII', imgpath.read(16))

images = np.fromfile(imgpath, dtype=np.uint8).reshape(len(labels), 784)

if mode == 'train':

# training images and labels

self.images = images[:55000] # shape: (55000, 784)

self.labels = labels[:55000] # shape: (55000,)

elif mode == 'val':

# validation images and labels

self.images = images[55000:] # shape: (5000, 784)

self.labels = labels[55000:] # shape: (5000, )

else:

# test data

self.images = images # shape: (10000, 784)

self.labels = labels # shape: (10000, )

self.num_classes = 10

def __len__(self):

return len(self.images)

def __getitem__(self, idx):

image = self.images[idx]

label = self.labels[idx]

# Normalize from [0, 255.] to [0., 1.0], and then subtract by the mean value

image = image / 255.0

image = image - np.mean(image)

return image, label

class IterationBatchSampler(object):

def __init__(self, dataset, max_epoch, batch_size=2, shuffle=True):

self.dataset = dataset

self.batch_size = batch_size

self.shuffle = shuffle

def prepare_epoch_indices(self):

indices = np.arange(len(self.dataset))

if self.shuffle:

np.random.shuffle(indices)

num_iteration = len(indices) // self.batch_size + int(len(indices) % self.batch_size)

self.batch_indices = np.split(indices, num_iteration)

def __iter__(self):

return iter(self.batch_indices)

def __len__(self):

return len(self.batch_indices)

class Dataloader(object):

def __init__(self, dataset, sampler):

self.dataset = dataset

self.sampler = sampler

def __iter__(self):

self.sampler.prepare_epoch_indices()

for batch_indices in self.sampler:

batch_images = []

batch_labels = []

for idx in batch_indices:

img, label = self.dataset[idx]

batch_images.append(img)

batch_labels.append(label)

batch_images = np.stack(batch_images)

batch_labels = np.stack(batch_labels)

yield batch_images, batch_labels

def __len__(self):

return len(self.sampler)

def build_dataloader(data_root, max_epoch, batch_size, shuffle=False, mode='train'):

dataset = Dataset(data_root, mode)

sampler = IterationBatchSampler(dataset, max_epoch, batch_size, shuffle)

data_lodaer = Dataloader(dataset, sampler)

return data_lodaer

loss.py

import numpy as np

# a small number to prevent dividing by zero, maybe useful for you

EPS = 1e-11

class SoftmaxCrossEntropyLoss(object):

def forward(self, logits, labels):

"""

Inputs: (minibatch)

- logits: forward results from the last FCLayer, shape (batch_size, 10)

- labels: the ground truth label, shape (batch_size, )

"""

############################################################################

# TODO: Put your code here

# Calculate the average accuracy and loss over the minibatch

# Return the loss and acc, which will be used in solver.py

# Hint: Maybe you need to save some arrays for backward

self.one_hot_labels = np.zeros_like(logits)

self.one_hot_labels[np.arange(len(logits)), labels] = 1

self.prob = np.exp(logits) / (EPS + np.exp(logits).sum(axis=1, keepdims=True))

# calculate the accuracy

preds = np.argmax(self.prob, axis=1) # self.prob, not logits.

acc = np.mean(preds == labels)

# calculate the loss

loss = np.sum(-self.one_hot_labels * np.log(self.prob + EPS), axis=1)

loss = np.mean(loss)

############################################################################

return loss, acc

def backward(self):

############################################################################

# TODO: Put your code here

# Calculate and return the gradient (have the same shape as logits)

return self.prob - self.one_hot_labels

############################################################################

network.py

class Network(object):

def __init__(self):

self.layerList = []

self.numLayer = 0

def add(self, layer):

self.numLayer += 1

self.layerList.append(layer)

def forward(self, x):

# forward layer by layer

for i in range(self.numLayer):

x = self.layerList[i].forward(x)

return x

def backward(self, delta):

# backward layer by layer

for i in reversed(range(self.numLayer)): # reversed

delta = self.layerList[i].backward(delta)

optimizer.py

import numpy as np

class SGD(object):

def __init__(self, model, learning_rate, momentum=0.0):

self.model = model

self.learning_rate = learning_rate

self.momentum = momentum

def step(self):

"""One backpropagation step, update weights layer by layer"""

layers = self.model.layerList

for layer in layers:

if layer.trainable:

############################################################################

# TODO: Put your code here

# Calculate diff_W and diff_b using layer.grad_W and layer.grad_b.

# You need to add momentum to this.

# Weight update with momentum

if not hasattr(layer, 'diff_W'):

layer.diff_W = 0.0

layer.diff_W = layer.grad_W + self.momentum * layer.diff_W

layer.diff_b = layer.grad_b

layer.W += -self.learning_rate * layer.diff_W

layer.b += -self.learning_rate * layer.diff_b

# # Weight update without momentum

# layer.W += -self.learning_rate * layer.grad_W

# layer.b += -self.learning_rate * layer.grad_b

############################################################################

visualize.py

import matplotlib.pyplot as plt

import numpy as np

def plot_loss_and_acc(loss_and_acc_dict):

# visualize loss curve

plt.figure()

min_loss, max_loss = 100.0, 0.0

for key, (loss_list, acc_list) in loss_and_acc_dict.items():

min_loss = min(loss_list) if min(loss_list) < min_loss else min_loss

max_loss = max(loss_list) if max(loss_list) > max_loss else max_loss

num_epoch = len(loss_list)

plt.plot(range(1, 1 + num_epoch), loss_list, '-s', label=key)

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.legend()

plt.xticks(range(0, num_epoch + 1, 2))

plt.axis([0, num_epoch + 1, min_loss - 0.1, max_loss + 0.1])

plt.show()

# visualize acc curve

plt.figure()

min_acc, max_acc = 1.0, 0.0

for key, (loss_list, acc_list) in loss_and_acc_dict.items():

min_acc = min(acc_list) if min(acc_list) < min_acc else min_acc

max_acc = max(acc_list) if max(acc_list) > max_acc else max_acc

num_epoch = len(acc_list)

plt.plot(range(1, 1 + num_epoch), acc_list, '-s', label=key)

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.legend()

plt.xticks(range(0, num_epoch + 1, 2))

plt.axis([0, num_epoch + 1, min_acc, 1.0])

plt.show()

边栏推荐

- What kind of product is the Jetson nano? (how about the performance of Jetson nano)

- SQL injection (mind map)

- Week 16 OJ practice 1 calculates the day of the year

- 使用 replace-regexp 在行首添加序号

- CCS tm4c123 new project

- PIP installation module, error

- 【虚拟机数据恢复】意外断电导致XenServer虚拟机不可用,虚拟磁盘文件丢失的数据恢复案例

- Application of machine vision in service robot

- SQL中去去重的三种方式

- 常用超好用正则表达式!

猜你喜欢

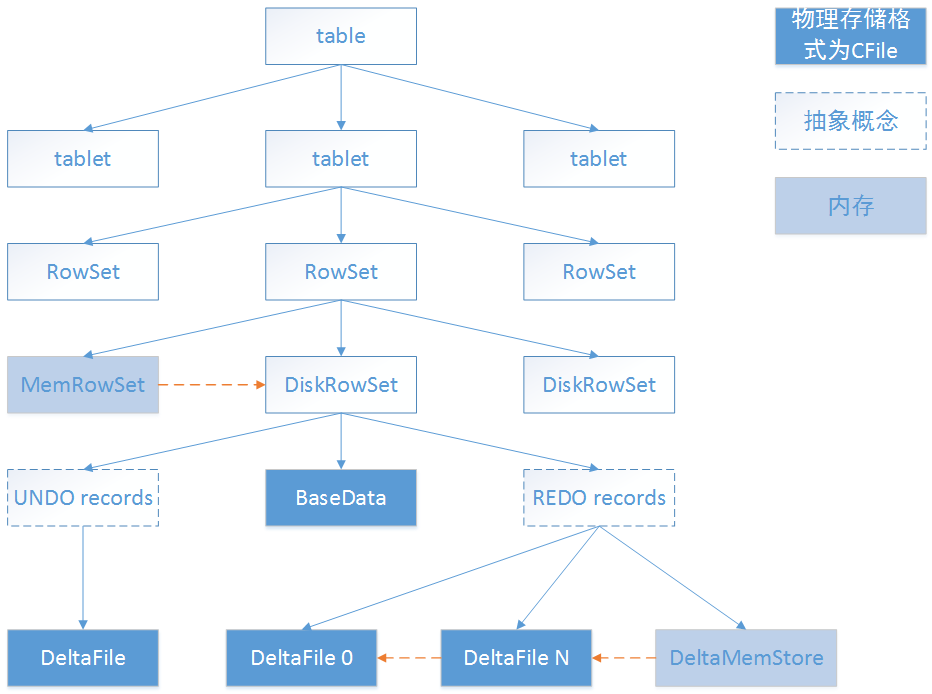

Kudu design tablet

The latest interface of Taobao / tmall keyword search

Spark统一内存划分

![Cloud rendering volume cloud [theoretical basis and implementation scheme]](/img/38/0e97d6f015f3cb51e872a8d3ce584a.png)

Cloud rendering volume cloud [theoretical basis and implementation scheme]

Everything is available Cassandra: the fairy database behind Huawei tag

#夏日挑战赛# OpenHarmony基于JS实现的贪吃蛇

来吧开发者!不只为了 20 万奖金,试试用最好的“积木”来一场头脑风暴吧!

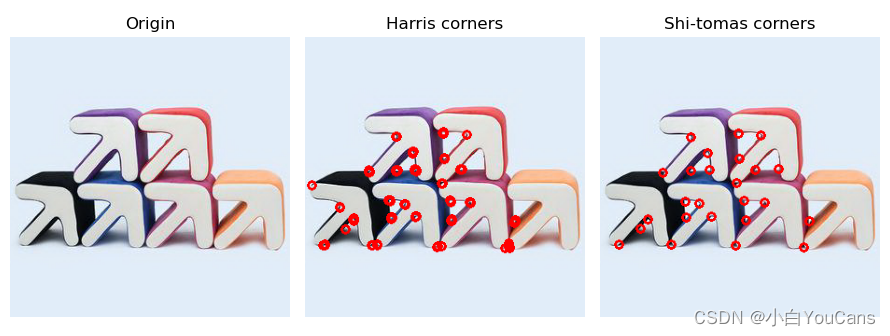

【OpenCV 例程 300篇】240. OpenCV 中的 Shi-Tomas 角点检测

Ascend target detection and recognition - customize your own AI application

Pytorch中的tensor操作

随机推荐

uni-app

国际大咖 VS 本土开源新星 | ApacheCon Asia 主题演讲议程全览

[machine learning] principle and code of mean shift

Comparison between agile development and Devops

Common super easy to use regular expressions!

Tupu 3D visual national style design | collision between technology and culture "cool" spark“

On the growth of data technicians

After vs code is formatted, the function name will be automatically followed by a space

如何使用 align-regexp 对齐 userscript 元信息

机器学习-什么是机器学习、监督学习和无监督学习

236. 二叉树的最近公共祖先

Everything is available Cassandra: the fairy database behind Huawei tag

一文详解吞吐量、QPS、TPS、并发数等高并发指标

Is it safe for Huishang futures to open an account online? What is the account opening process?

Environment setup mongodb

Basic select statement

RedisDesktopManager去除升级提示

简述CUDA镜像构建

ASEMI整流桥KBPC2510,KBPC2510参数,KBPC2510规格书

树形dp问题