
Introduction to convolutional neural networks

2022-06-13 01:07:00 【dddd_ jj】

If you are interested in convolutional neural networks, you can watch Professor Li Hongyi's lecture videos on Bilibili. Every time I watch them, I come away with something new.

Below is my own understanding of convolutional neural networks.

First, you need to understand what the input to your task is. A convolutional neural network can then be viewed as a function that processes this input and produces the output you want. Viewed as a function, it is built from operations such as convolution, pooling, flattening (tiling), and fully connected layers.

Convolution operation:

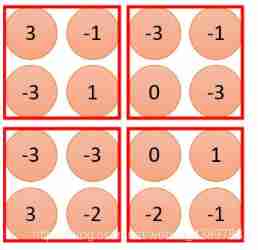

In the figure above, the 6×6 matrix is the input data and the 3×3 Filter 1 is the convolution kernel. We first align the kernel with the 3×3 patch in the top-left corner of the input matrix, multiply each pair of corresponding elements, and add up the products. This is the convolution operation.

For example, the first result is 3, computed as 1 * 1 + (-1) * 0 + (-1) * 0 + (-1) * 0 + 1 * 1 + (-1) * 0 + (-1) * 0 + (-1) * 0 + 1 * 1 = 3. The kernel then moves to the next patch of the input matrix and repeats the operation, which produces another number. In the figure the stride is 1. This means the kernel shifts one element to the right at each step. After it reaches the end of a row, it moves down one row and starts again from the left.
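Below is a minimal pure-Python sketch of this sliding-window computation. It assumes a single-channel input, no padding, and a configurable stride. Like deep-learning libraries, it does not flip the kernel. Strictly speaking this is cross-correlation, which is what CNNs call convolution. Filter 1 is taken from the products worked out above. The 6×6 `image` is a hypothetical input, chosen so that its top-left patch reproduces the first result 3. It is not guaranteed to be the exact matrix in the figure. The function name `conv2d` is illustrative.

```python
def conv2d(matrix, kernel, stride=1):
    """Slide `kernel` over `matrix` (no padding) and return the map of summed products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(matrix) - kh) // stride + 1
    out_w = (len(matrix[0]) - kw) // stride + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Multiply the kernel element-wise with the patch under it and add the products.
            total = sum(
                kernel[a][b] * matrix[top + a][left + b]
                for a in range(kh)
                for b in range(kw)
            )
            row.append(total)
        output.append(row)
    return output


# Hypothetical 6x6 input whose top-left 3x3 patch matches the worked example.
image = [
    [1, 0, 0, 0, 0, 1],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 1, 0, 0],
    [1, 0, 0, 0, 1, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 1, 0],
]
# Filter 1, read off from the products in the text.
filter1 = [
    [1, -1, -1],
    [-1, 1, -1],
    [-1, -1, 1],
]

feature_map = conv2d(image, filter1)
print(feature_map[0][0])  # 3
for row in feature_map:
    print(row)
```

With stride 1, a 6×6 input and a 3×3 kernel give a (6 - 3) / 1 + 1 = 4, so a 4×4 output. Calling `conv2d(image, filter1, stride=2)` instead skips every other position and yields a 2×2 output.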


Figure 1 shows the result of applying this convolution kernel to the whole input matrix. Try computing it yourself and check whether you get the same values. If you do, you have understood the convolution operation.

Pooling operation:

Common pooling operations include max pooling and average pooling.

Max pooling splits the current matrix into blocks (for example, non-overlapping 2×2 blocks) and takes the maximum value in each block. These maxima are then put together to form the final result.

Figure 2 shows max pooling applied to the convolution result.

The difference is that max pooling keeps the maximum value of each block, while average pooling keeps the average value of each block.

Figure 1: result of the convolution operation

Figure 2: max pooling
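Both pooling variants can be sketched in a few lines of Python. The sketch assumes square, non-overlapping blocks with the stride equal to the block size. The 4×4 `feature_map` below is hypothetical example data, of the kind a 3×3 kernel produces on a 6×6 input. The function name `pool2d` and its `mode` parameter are illustrative, not a library API.

```python
def pool2d(matrix, size=2, mode="max"):
    """Split `matrix` into non-overlapping size x size blocks and reduce each block to one value."""
    pooled = []
    for top in range(0, len(matrix) - size + 1, size):
        row = []
        for left in range(0, len(matrix[0]) - size + 1, size):
            block = [matrix[top + a][left + b] for a in range(size) for b in range(size)]
            if mode == "max":
                row.append(max(block))  # max pooling keeps the largest value in the block
            elif mode == "avg":
                row.append(sum(block) / len(block))  # average pooling keeps the mean
            else:
                raise ValueError(f"unknown pooling mode: {mode}")
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map.
feature_map = [
    [3, -1, -3, -1],
    [-3, 1, 0, -3],
    [-3, -3, 0, 1],
    [3, -2, -2, -1],
]
print(pool2d(feature_map, 2, "max"))  # [[3, 0], [3, 1]]
print(pool2d(feature_map, 2, "avg"))  # [[0.0, -1.75], [-1.25, -0.5]]
```

Neither mode has anything to learn. Pooling only shrinks the matrix, here from 4×4 to 2×2.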

Flattening (tiling) operation:

The matrix produced by convolution, pooling, and similar operations is flattened into a one-dimensional sequence. Convolution and pooling can be applied many times before this step. For example, flattening the pooled matrix from above row by row turns it into a single sequence of numbers.
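A minimal sketch of flattening, assuming row-major order (row by row). A layer with several filters produces several feature maps, so the sketch also concatenates multiple maps. The function names and the two pooled maps are hypothetical.

```python
def flatten(matrix):
    """Concatenate the rows of a 2-D matrix into one 1-D list (row-major order)."""
    return [value for row in matrix for value in row]


def flatten_maps(maps):
    """Flatten several feature maps (one per filter) and join them into one sequence."""
    return [value for m in maps for value in flatten(m)]


pooled_a = [[3, 0], [3, 1]]  # hypothetical pooled output of one filter
pooled_b = [[1, 2], [0, 4]]  # hypothetical pooled output of a second filter

print(flatten(pooled_a))                   # [3, 0, 3, 1]
print(flatten_maps([pooled_a, pooled_b]))  # [3, 0, 3, 1, 1, 2, 0, 4]
```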

Fully connected operation:

After flattening, the data is a sequence of elements, and you can choose how many output elements you want. "Fully connected" means that every input element is connected to every output element. I recall the idea of a complete connection from discrete mathematics or a similar course, and that is how I understand the term. The formula is as follows:

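$$y_j = f\left(\sum_i w_{ji}\, x_i + b_j\right)$$

Here $x_i$ are the input elements and $w_{ji}$ is the weight (coefficient) connecting input $i$ to output $j$. $b_j$ is the bias of output $j$, and $f$ is the activation function.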
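The formula translates directly into a loop over outputs. This is a sketch under stated assumptions. ReLU is used as the activation function, although the text does not name one. The 4-element input, the 2×4 weight matrix, and the biases are hypothetical values. `fully_connected` and `relu` are illustrative names.

```python
def relu(x):
    """Assumed activation function: pass positive values through, clamp negatives to 0."""
    return max(0.0, x)


def fully_connected(inputs, weights, biases, activation=relu):
    """weights[j][i] connects input i to output j, so every input reaches every output."""
    outputs = []
    for w_row, b in zip(weights, biases):
        # Weighted sum of all inputs plus this output's bias, then the activation.
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z))
    return outputs


inputs = [3, 0, 3, 1]              # hypothetical flattened sequence
weights = [
    [0.5, -1.0, 0.0, 1.0],         # weights for output 0
    [-0.5, 0.25, 0.0, 0.0],        # weights for output 1
]
biases = [0.5, -1.0]

print(fully_connected(inputs, weights, biases))  # [3.0, 0.0]
```

Output 1 has a pre-activation value of -2.5, which ReLU clamps to 0.0. The number of rows in `weights` is the number of output elements you choose.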

Second, you need to define the model's loss function.

The loss function measures how good or bad your model is, and you can look up the common loss functions yourself. A common choice is MSE (mean squared error).
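MSE averages the squared differences between the model's predictions and the targets. The sketch below uses hypothetical prediction and target values, and the function name `mse` is illustrative.

```python
def mse(predictions, targets):
    """Mean squared error: the average of (prediction - target) ** 2."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


print(mse([3.0, 0.0], [1.0, 0.0]))  # 2.0
```

The smaller the value, the closer the predictions are to the targets. A perfect model scores 0.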

Third, find the optimal parameters of the model.

Training a convolutional neural network means finding its optimal parameters, so let's first look at which parameters need to be optimized.

1. Convolution layer

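The values of the convolution kernels and their biases (a bias is added after the convolution)

2. Pooling layer

No trainable parameters: max and average pooling only take a maximum or a mean

3. Fully connected layer

The weights and biases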
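The list above also tells us how many numbers training has to adjust. The counting sketch assumes one bias per filter in the convolution layer and one bias per output in the fully connected layer. The network sizes are hypothetical: a 6×6 single-channel input, two 3×3 filters, 2×2 max pooling, 8 flattened values, and 2 outputs.

```python
def conv_params(kernel_size, in_channels, out_channels):
    # Each filter has kernel_size * kernel_size * in_channels weights plus one bias.
    return kernel_size * kernel_size * in_channels * out_channels + out_channels


def pool_params():
    # Max and average pooling only compute a max or a mean: nothing to learn.
    return 0


def fc_params(n_in, n_out):
    # One weight per (input, output) pair plus one bias per output.
    return n_in * n_out + n_out


# Two 3x3 filters on a 6x6 input give two 4x4 maps.
# 2x2 pooling shrinks them to two 2x2 maps, i.e. 8 values after flattening.
total = conv_params(3, 1, 2) + pool_params() + fc_params(8, 2)
print(conv_params(3, 1, 2), pool_params(), fc_params(8, 2), total)  # 20 0 18 38
```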

All of the parameters above are first randomly initialized. After each batch of training, we adjust them to make the loss function as small as possible. The crudest approach would be to try every possible combination of parameter values, but that would take forever. Instead, we use methods that find good parameters quickly. Commonly used methods include gradient descent and Adam, which move the parameters toward values that minimize the loss. In practice this is usually a good local minimum rather than a guaranteed global optimum.
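The following sketch shows the core idea of gradient descent on a toy problem instead of a full CNN. It fits a single weight `w` and bias `b` to data that follow y = 2x + 1, using the MSE loss. The gradients are derived by hand. The learning rate, step count, data, and the name `train_linear` are assumptions for illustration. The sketch uses plain full-batch gradient descent, and Adam is not shown.

```python
import random


def train_linear(xs, ys, lr=0.05, steps=2000, seed=0):
    """Fit y ~ w * x + b by plain gradient descent on the MSE loss."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # random initialization
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of mean((w*x + b - y)^2) with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step against the gradient, which lowers the loss for a small enough lr.
        w -= lr * grad_w
        b -= lr * grad_b
    loss = sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n
    return w, b, loss


w, b, loss = train_linear([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
print(round(w, 4), round(b, 4))  # 2.0 1.0
```

A CNN is trained the same way, only with far more parameters. In that case the gradients are computed automatically by backpropagation rather than by hand.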

For the details of gradient descent, look up blog posts on the topic or watch Professor Li Hongyi's videos.

Those are the basic concepts of CNNs. Everything here reflects my personal understanding. If you spot a mistake, please point it out in the comments.
