
TensorFlow loss functions

2022-07-27 08:51:00 【qq_27390…】

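A loss function (also called a cost function or objective function) measures the difference between the true values and the predicted values. The goal of model optimization is to minimize this difference. In Keras, the loss is passed to `model.compile()`, either as a loss object or by its string name: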

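```python
from tensorflow import keras
from tensorflow.keras import layers

model = keras.Sequential()
model.add(layers.Dense(64, kernel_initializer='uniform', input_shape=(10,)))
model.add(layers.Activation('softmax'))

# Option 1: pass a loss object. The softmax layer already outputs probabilities,
# so the loss must be told that its inputs are NOT logits (from_logits=False).
loss_function = keras.losses.SparseCategoricalCrossentropy(from_logits=False)
model.compile(loss=loss_function, optimizer='adam')

# Option 2 (equivalent): pass the loss by its string name.
model.compile(loss='sparse_categorical_crossentropy', optimizer='adam')
```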
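A toy setting shows why minimizing the loss drives predictions toward the true values. The sketch below is plain Python (no TensorFlow), and everything in it is an illustrative assumption: a one-weight model `y_pred = w * x`, made-up data generated by `y = 2x`, and plain gradient descent with a hand-picked learning rate. Keras optimizers such as Adam use more refined update rules, but they share the same goal of reducing the loss step by step.

```python
def mse(w, xs, ys):
    """Mean squared error of the toy model y_pred = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(w, xs, ys):
    """Derivative of the MSE above with respect to the single weight w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def fit(xs, ys, lr=0.05, steps=200):
    """Plain gradient descent on w; lr and steps are arbitrary illustrative choices."""
    w = 0.0  # arbitrary starting point
    for _ in range(steps):
        w -= lr * mse_grad(w, xs, ys)  # step against the gradient to lower the loss
    return w


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # generated by y = 2x, so the loss is smallest at w = 2
w = fit(xs, ys)
print(w, mse(w, xs, ys))
```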

### 1. Binary cross entropy (BinaryCrossentropy)

```python
# Targets lie between 0 and 1; the output layer typically uses a sigmoid activation.
import tensorflow as tf

y_true = [[0., 1.], [0.2, 0.8], [0.3, 0.7], [0.4, 0.6]]
y_pred = [[0.6, 0.4], [0.4, 0.6], [0.6, 0.4], [0.8, 0.2]]

# Mean of the per-sample losses over the batch (the default behavior)
bce = tf.keras.losses.BinaryCrossentropy(reduction='sum_over_batch_size')
print(bce(y_true, y_pred).numpy())  # 0.839445

# Sum of the per-sample losses
bce = tf.keras.losses.BinaryCrossentropy(reduction='sum')
print(bce(y_true, y_pred).numpy())  # 3.35778

# No reduction: one loss value per sample
bce = tf.keras.losses.BinaryCrossentropy(reduction='none')
print(bce(y_true, y_pred).numpy())  # [0.9162905 0.5919184 0.79465103 1.0549198 ]
```
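The plain-Python sketch below shows where the printed numbers come from. It computes the binary cross-entropy formula, -[y*log(p) + (1-y)*log(1-p)], averaged over the last axis of each sample, and then applies the three reductions used above. The helper names are hypothetical. Unlike Keras, the sketch does not clip `p` away from 0 and 1, so the last digits can differ slightly.

```python
import math


def binary_cross_entropy(y_true_row, y_pred_row):
    """Per-sample BCE: mean over the last axis of -[y*log(p) + (1-y)*log(1-p)].

    Unlike Keras, this sketch does not clip p to [eps, 1 - eps].
    """
    terms = [-(y * math.log(p) + (1 - y) * math.log(1 - p))
             for y, p in zip(y_true_row, y_pred_row)]
    return sum(terms) / len(terms)


def apply_reduction(per_sample, reduction):
    """Combine per-sample losses the way the three `reduction` options above do."""
    if reduction == 'none':
        return per_sample
    if reduction == 'sum':
        return sum(per_sample)
    if reduction == 'sum_over_batch_size':
        return sum(per_sample) / len(per_sample)
    raise ValueError(f'unknown reduction: {reduction}')


y_true = [[0., 1.], [0.2, 0.8], [0.3, 0.7], [0.4, 0.6]]
y_pred = [[0.6, 0.4], [0.4, 0.6], [0.6, 0.4], [0.8, 0.2]]
per_sample = [binary_cross_entropy(t, p) for t, p in zip(y_true, y_pred)]
for mode in ('sum_over_batch_size', 'sum', 'none'):
    print(mode, apply_reduction(per_sample, mode))
```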

### 2. Categorical cross entropy (CategoricalCrossentropy)

```python
# Labels are one-hot encoded; the output layer typically uses a softmax activation.
y_true = [[0, 1, 0], [0, 0, 1]]
y_pred = [[0.05, 0.95, 0], [0.1, 0.8, 0.1]]

cce = tf.keras.losses.CategoricalCrossentropy()
print(cce(y_true, y_pred).numpy())  # ≈ 1.177

# Weight the second sample more heavily than the first
print(cce(y_true, y_pred, sample_weight=tf.constant([0.3, 0.7])).numpy())  # ≈ 0.814

cce = tf.keras.losses.CategoricalCrossentropy(reduction='sum')
print(cce(y_true, y_pred).numpy())  # ≈ 2.354

cce = tf.keras.losses.CategoricalCrossentropy(reduction='none')
print(cce(y_true, y_pred).numpy())  # ≈ [0.0513 2.303]
```
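Here is a plain-Python sketch of the same computation. For one sample, categorical cross entropy is -sum(y * log(p)) over the classes. With `sample_weight`, the default reduction divides the weighted sum by the batch size, not by the sum of the weights, which is how weights of 0.3 and 0.7 give roughly 0.814. The helper names are hypothetical. Keras also clips the probabilities; this sketch avoids log(0) by skipping classes whose label is 0.

```python
import math


def categorical_cross_entropy(y_true_row, y_pred_row):
    """Per-sample loss: -sum(y * log(p)); classes with y == 0 contribute nothing and are skipped."""
    return -sum(y * math.log(p) for y, p in zip(y_true_row, y_pred_row) if y > 0)


def weighted_batch_mean(per_sample, sample_weight):
    """Default reduction with weights: sum(w_i * loss_i) divided by the batch size."""
    return sum(w * l for w, l in zip(sample_weight, per_sample)) / len(per_sample)


y_true = [[0, 1, 0], [0, 0, 1]]
y_pred = [[0.05, 0.95, 0], [0.1, 0.8, 0.1]]
per_sample = [categorical_cross_entropy(t, p) for t, p in zip(y_true, y_pred)]

print(per_sample)                                   # -log(0.95) and -log(0.1)
print(weighted_batch_mean(per_sample, [1.0, 1.0]))  # unweighted mean
print(weighted_batch_mean(per_sample, [0.3, 0.7]))  # weighted mean
```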

### 3. Sparse categorical cross entropy (SparseCategoricalCrossentropy)

```python
# Labels are integer class indices (not one-hot); the output layer typically uses softmax.
# For example, when the MNIST labels y are used without one-hot conversion,
# fit the model with sparse_categorical_crossentropy as the loss.

# Other discrete class labels can first be converted into integer indices:
from sklearn import preprocessing

enc = preprocessing.OrdinalEncoder()
X = [['class1'], ['class2'], ['class3']]
enc.fit(X)
X_transform = enc.transform(X)
print(X_transform)  # one column: class1 -> 0., class2 -> 1., class3 -> 2.

y_true = [1, 2, 2]
y_pred = [[0.05, 0.95, 0], [0.1, 0.8, 0.1], [0.05, 0.05, 0.9]]

# Uses the default 'auto'/'sum_over_batch_size' reduction.
scce = tf.keras.losses.SparseCategoricalCrossentropy()
print(scce(y_true, y_pred).numpy())  # ≈ 0.8197
```
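With integer labels, the per-sample loss reduces to -log(p[label]), the negative log of the probability assigned to the true class. This is exactly what categorical cross entropy gives on the equivalent one-hot labels. The plain-Python sketch below checks this on the data above and also mimics the label-to-index step. The helper names are hypothetical. The sorted-order mapping is an assumption chosen to match what `OrdinalEncoder` does with its default `categories='auto'`.

```python
import math


def to_indices(labels):
    """Map string labels to integer indices, assigning indices in sorted label order."""
    mapping = {name: i for i, name in enumerate(sorted(set(labels)))}
    return [mapping[name] for name in labels]


def sparse_cross_entropy(label, probs):
    """Per-sample sparse categorical cross entropy: -log(probability of the true class)."""
    return -math.log(probs[label])


def one_hot_cross_entropy(label, probs):
    """Same loss computed the categorical way, on a one-hot version of the label."""
    one_hot = [1.0 if i == label else 0.0 for i in range(len(probs))]
    return -sum(y * math.log(p) for y, p in zip(one_hot, probs) if y > 0)


print(to_indices(['class1', 'class2', 'class3']))  # [0, 1, 2]

y_true = [1, 2, 2]
y_pred = [[0.05, 0.95, 0], [0.1, 0.8, 0.1], [0.05, 0.05, 0.9]]
sparse = [sparse_cross_entropy(t, p) for t, p in zip(y_true, y_pred)]
dense = [one_hot_cross_entropy(t, p) for t, p in zip(y_true, y_pred)]
print(sum(sparse) / len(sparse))  # mean over the batch
print(sparse == dense)            # True: both formulations agree
```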

### 4. KL divergence (KLDivergence)

```python
# Relative entropy, also known as KL divergence, is a useful distance measure for
# continuous distributions, and is often used when regressing directly over the
# space of (discretely sampled) continuous output distributions.
# loss = y_true * log(y_true / y_pred), summed over the last axis
y_true = [[0, 1], [0, 0]]
y_pred = [[0.6, 0.4], [0.4, 0.6]]

kl = tf.keras.losses.KLDivergence()
print(kl(y_true, y_pred).numpy())  # ≈ 0.458

print(kl(y_true, y_pred, sample_weight=[0.8, 0.2]).numpy())  # ≈ 0.366

kl = tf.keras.losses.KLDivergence(reduction='sum')
print(kl(y_true, y_pred).numpy())  # ≈ 0.916

kl = tf.keras.losses.KLDivergence(reduction='none')
print(kl(y_true, y_pred).numpy())  # ≈ [0.916 -3.08e-06]
```
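Here is a plain-Python sketch of the per-sample KL divergence, sum(y * log(y / p)). Both inputs are clipped to [eps, 1] with eps = 1e-7. This is an assumption intended to mirror Keras' default epsilon, and it is why the all-zero second row yields a tiny negative number instead of 0. The last line shows that KL divergence is not symmetric, so it is not a true distance metric. The helper name is hypothetical.

```python
import math

EPS = 1e-7  # assumed to mirror Keras' default backend epsilon


def kl_divergence(y_true_row, y_pred_row, eps=EPS):
    """Per-sample KL divergence: sum(y * log(y / p)), both inputs clipped to [eps, 1]."""
    total = 0.0
    for y, p in zip(y_true_row, y_pred_row):
        y = min(max(y, eps), 1.0)
        p = min(max(p, eps), 1.0)
        total += y * math.log(y / p)
    return total


y_true = [[0, 1], [0, 0]]
y_pred = [[0.6, 0.4], [0.4, 0.6]]
per_sample = [kl_divergence(t, p) for t, p in zip(y_true, y_pred)]
print(per_sample)                         # about 0.916 and a tiny negative value
print(sum(per_sample) / len(per_sample))  # mean over the batch

# Not symmetric: swapping the two distributions changes the result
print(kl_divergence([0.5, 0.5], [0.9, 0.1]), kl_divergence([0.9, 0.1], [0.5, 0.5]))
```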

### 5. Poisson loss (Poisson)

```python
# Poisson loss suits datasets whose targets follow a Poisson distribution (e.g. counts).
y_true = [[0., 1.], [0., 0.]]
y_pred = [[1., 1.], [0., 0.]]

p = tf.keras.losses.Poisson()
print(p(y_true, y_pred).numpy())  # mean over the batch: ≈ 0.5

print(p(y_true, y_pred, sample_weight=[0.8, 0.2]).numpy())  # with sample weights: ≈ 0.4

p = tf.keras.losses.Poisson(reduction='sum')  # sum over the batch
print(p(y_true, y_pred).numpy())  # ≈ 1.0

p = tf.keras.losses.Poisson(reduction='none')  # one loss value per sample
print(p(y_true, y_pred).numpy())  # ≈ [1. 0.]
```
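Keras documents the Poisson loss as `y_pred - y_true * log(y_pred)`, and the implementation averages it over the last axis. The plain-Python sketch below reproduces the values above. The small `eps` inside the log (assumed here to be 1e-7) keeps `log(0)` from failing on the all-zero second row. The helper name is hypothetical.

```python
import math

EPS = 1e-7  # assumed small constant that keeps log() finite when y_pred == 0


def poisson_loss(y_true_row, y_pred_row, eps=EPS):
    """Per-sample Poisson loss: mean of y_pred - y_true * log(y_pred + eps) over the last axis."""
    terms = [p - y * math.log(p + eps) for y, p in zip(y_true_row, y_pred_row)]
    return sum(terms) / len(terms)


y_true = [[0., 1.], [0., 0.]]
y_pred = [[1., 1.], [0., 0.]]
per_sample = [poisson_loss(t, p) for t, p in zip(y_true, y_pred)]
sample_weight = [0.8, 0.2]

print(per_sample)                         # close to [1.0, 0.0]
print(sum(per_sample) / len(per_sample))  # mean over the batch, close to 0.5
print(sum(w * l for w, l in zip(sample_weight, per_sample)) / len(per_sample))  # close to 0.4
```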

### 6. Mean squared error (MeanSquaredError)

```python
# For regression problems: MeanSquaredError computes the mean of the squared
# differences between the true and predicted values.
y_true = [[0., 1.], [0., 0.]]
y_pred = [[1., 1.], [1., 0.]]

mse = tf.keras.losses.MeanSquaredError()
print(mse(y_true, y_pred).numpy())  # ≈ 0.5

print(mse(y_true, y_pred, sample_weight=[0.8, 0.2]).numpy())  # ≈ 0.25

mse = tf.keras.losses.MeanSquaredError(reduction='sum')
print(mse(y_true, y_pred).numpy())  # ≈ 1.0

mse = tf.keras.losses.MeanSquaredError(reduction='none')
print(mse(y_true, y_pred).numpy())  # ≈ [0.5 0.5]
```
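This plain-Python sketch reproduces the values above from the per-sample squared errors. The helper name is hypothetical.

```python
def mean_squared_error(y_true_row, y_pred_row):
    """Per-sample MSE: mean of (y_true - y_pred) ** 2 over the last axis."""
    return sum((t - p) ** 2 for t, p in zip(y_true_row, y_pred_row)) / len(y_true_row)


y_true = [[0., 1.], [0., 0.]]
y_pred = [[1., 1.], [1., 0.]]
per_sample = [mean_squared_error(t, p) for t, p in zip(y_true, y_pred)]
sample_weight = [0.8, 0.2]

print(per_sample)                         # [0.5, 0.5]  (like reduction='none')
print(sum(per_sample))                    # 1.0         (like reduction='sum')
print(sum(per_sample) / len(per_sample))  # 0.5         (default mean over the batch)
print(sum(w * l for w, l in zip(sample_weight, per_sample)) / len(per_sample))  # weighted
```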

### 7. Mean squared logarithmic error (MeanSquaredLogarithmicError)

```python
# loss = square(log(y_true + 1.) - log(y_pred + 1.)), averaged over the last axis
y_true = [[0., 1.], [0., 0.]]
y_pred = [[1., 1.], [1., 0.]]

msle = tf.keras.losses.MeanSquaredLogarithmicError()
print(msle(y_true, y_pred).numpy())  # ≈ 0.240

print(msle(y_true, y_pred, sample_weight=[0.7, 0.3]).numpy())  # ≈ 0.120

msle = tf.keras.losses.MeanSquaredLogarithmicError(reduction='sum')
print(msle(y_true, y_pred).numpy())  # ≈ 0.480

msle = tf.keras.losses.MeanSquaredLogarithmicError(reduction='none')
print(msle(y_true, y_pred).numpy())  # ≈ [0.240 0.240]
```
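Here is a plain-Python sketch of MSLE using `math.log1p`, which computes log(1 + x). Unlike Keras, it does not clip negative inputs, and the helper name is hypothetical. The last line illustrates a common reason to choose MSLE. An error of the same relative size (10% here) gives a similar loss whether the target is 100 or 1000, whereas plain MSE would differ by a factor of 100.

```python
import math


def msle(y_true_row, y_pred_row):
    """Per-sample MSLE: mean of (log(1 + y_true) - log(1 + y_pred)) ** 2 over the last axis."""
    return sum((math.log1p(t) - math.log1p(p)) ** 2
               for t, p in zip(y_true_row, y_pred_row)) / len(y_true_row)


y_true = [[0., 1.], [0., 0.]]
y_pred = [[1., 1.], [1., 0.]]
per_sample = [msle(t, p) for t, p in zip(y_true, y_pred)]
print(per_sample)                         # each entry is log(2) ** 2 / 2
print(sum(per_sample) / len(per_sample))  # default mean over the batch

# Same 10% relative error at two different scales
print(msle([100.0], [110.0]), msle([1000.0], [1100.0]))
```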

### 8. Custom loss function
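In Keras, a custom loss is any callable with the signature `fn(y_true, y_pred)`. It typically returns one loss value per sample, and Keras then applies the reduction: by default, the mean over the batch, optionally weighted by `sample_weight`. The plain-Python sketch below imitates that contract without TensorFlow. The example loss (mean absolute error) and the helper names `custom_loss` and `reduce_mean_over_batch` are hypothetical, chosen only for illustration.

```python
def custom_loss(y_true_row, y_pred_row):
    """Hypothetical custom loss: mean absolute error over the last axis (one value per sample)."""
    return sum(abs(t - p) for t, p in zip(y_true_row, y_pred_row)) / len(y_true_row)


def reduce_mean_over_batch(loss_fn, y_true, y_pred, sample_weight=None):
    """Imitate the default reduction: weighted sum of per-sample losses / batch size."""
    per_sample = [loss_fn(t, p) for t, p in zip(y_true, y_pred)]
    if sample_weight is None:
        sample_weight = [1.0] * len(per_sample)
    return sum(w * l for w, l in zip(sample_weight, per_sample)) / len(per_sample)


y_true = [[0., 1.], [0., 0.]]
y_pred = [[1., 1.], [1., 0.]]
print(reduce_mean_over_batch(custom_loss, y_true, y_pred))              # 0.5
print(reduce_mean_over_batch(custom_loss, y_true, y_pred, [0.8, 0.2]))  # weighted
```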

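```python
def get_loss(y_true, y_pred):
    # Keras calls a custom loss as fn(y_true, y_pred); this MSE body is only an example
    y_true = tf.cast(y_true, y_pred.dtype)
    return tf.reduce_mean(tf.square(y_true - y_pred), axis=-1)
```

Reference:

https://tensorflow.google.cn/api_docs/python/tf/keras/losses/Loss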

边栏推荐

- Login to homepage function implementation

- 2040: [Blue Bridge Cup 2022 preliminary] bamboo cutting (priority queue)

- Full Permutation (depth first, permutation tree)

- Day5 - Flame restful request response and Sqlalchemy Foundation

- 4276. Good at C

- Matlab 利用M文件产生模糊控制器

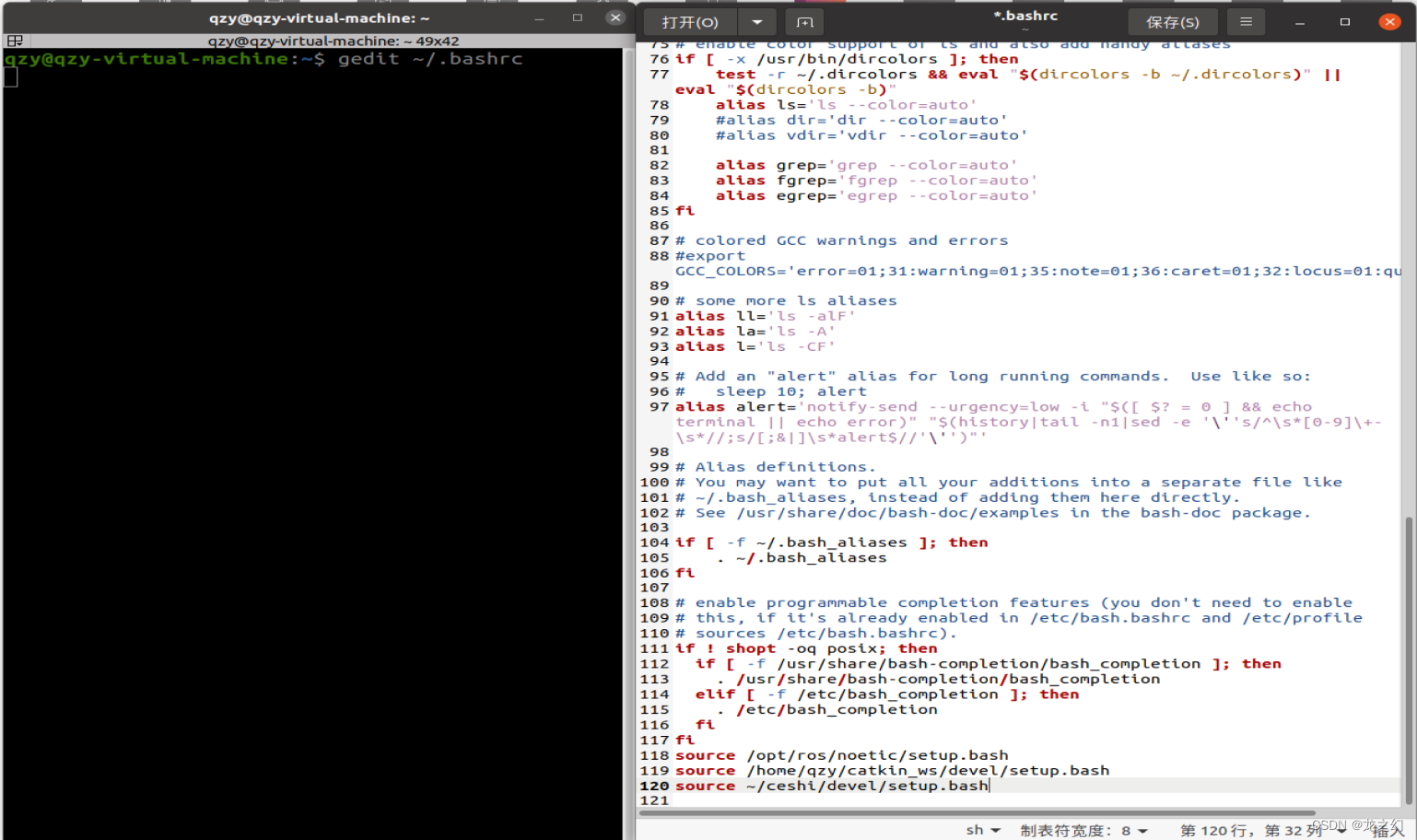

- Connection failed during installation of ros2 [ip: 91.189.91.39 80]

- 杭州电子商务研究院发布“数字化存在”新名词解释

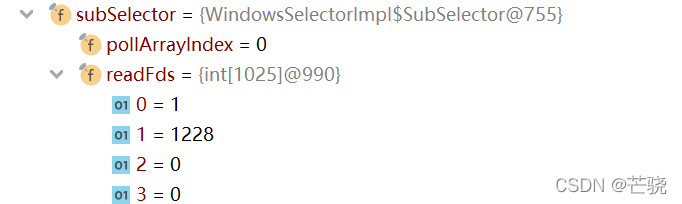

- 网络IO总结文

- 03. Use quotation marks to listen for changes in nested values of objects

猜你喜欢

网络IO总结文

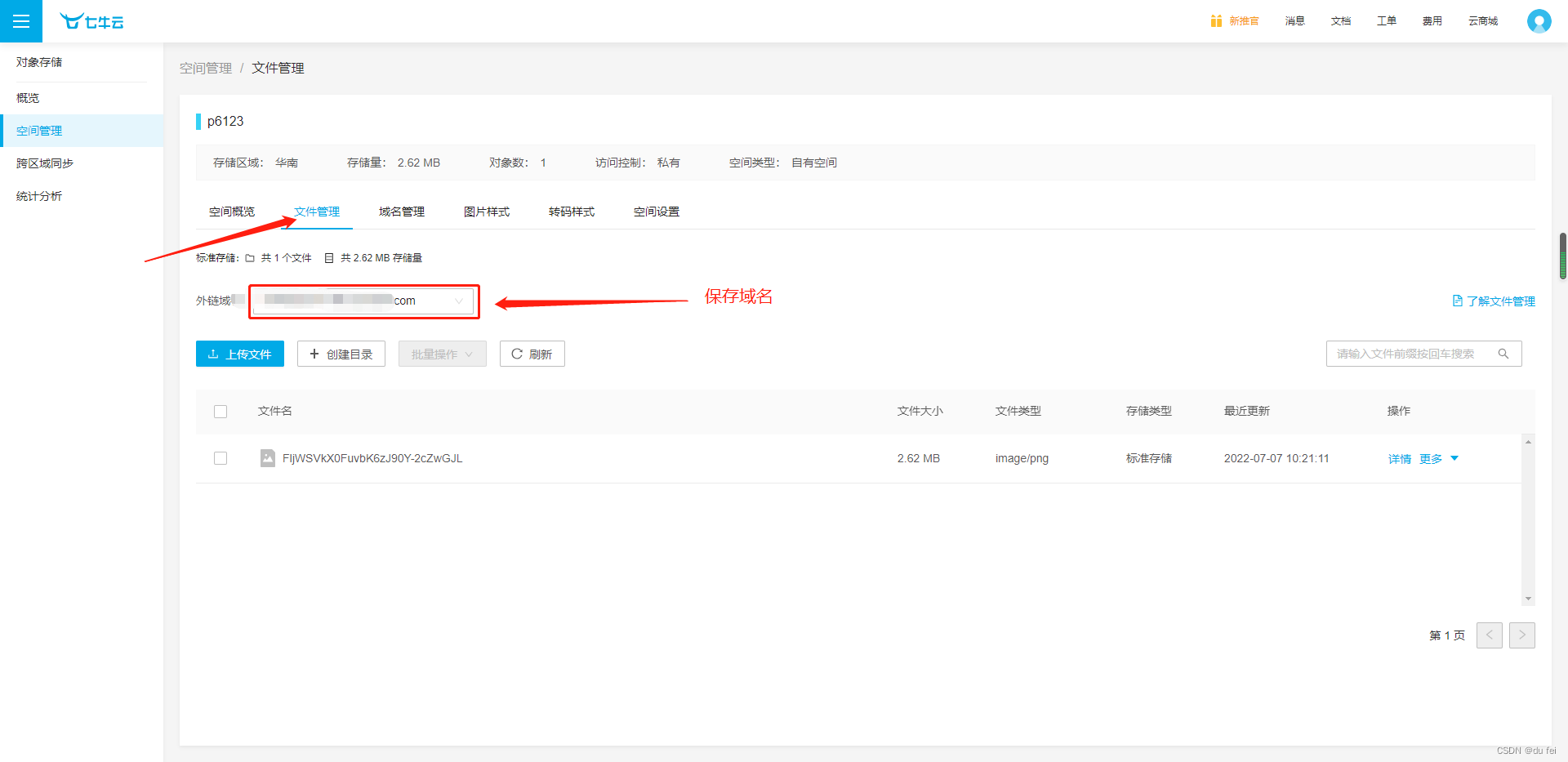

How to upload qiniu cloud

E. Split into two sets

【Flutter -- GetX】准备篇

The shelf life you filled in has been less than 10 days until now, and it is not allowed to publish. If the actual shelf life is more than 10 days, please truthfully fill in the production date and pu

NiO Summary - read and understand the whole NiO process

说透缓存一致性与内存屏障

How to permanently set source

User management - restrictions

微信安装包从0.5M暴涨到260M,为什么我们的程序越来越大?

随机推荐

[penetration test tool sharing] [dnslog server building guidance]

Realization of specification management and specification option management functions

Minio 安装与使用

3311. 最长算术

String type and bitmap of redis

List delete collection elements

Openresty + keepalived 实现负载均衡 + IPV6 验证

4277. Block reversal

Solve the problem of Chinese garbled code on the jupyter console

接口测试工具-Postman使用详解

Matlab求解微分代数方程 (DAE)

Realization of backstage brand management function

3428. Put apples

Background image related applications - full, adaptive

Flink1.15 source code reading Flink clients client execution process (reading is boring)

How to upload qiniu cloud

693. 行程排序

N queen problem (backtracking, permutation tree)

四个开源的人脸识别项目分享

Network IO summary