Share | Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

2022-06-11 04:54:00 【Shenlan Shenyan AI】

Vision-language pre-training (VLP) has improved performance on many vision-language tasks. However, most existing pre-trained models excel at either understanding-based tasks or generation-based tasks, but not both. Moreover, much of the performance gain has come from scaling up datasets of noisy image-text pairs collected from the web, which is a suboptimal source of supervision.

In this paper, we propose BLIP, a new VLP framework that transfers flexibly to both vision-language understanding and generation tasks. BLIP makes effective use of noisy web data by bootstrapping the captions: a captioner generates synthetic captions, and a filter removes the noisy ones. We achieve state-of-the-art results on several vision-language tasks, such as image-text retrieval (+2.7% average recall@1), image captioning (+2.8% CIDEr), and VQA (+1.6% VQA score). BLIP also shows strong generalization in the zero-shot setting.

Two main limitations

Model perspective: most methods adopt either an encoder-based model or an encoder-decoder model. However, encoder-based models are not easy to transfer directly to text generation tasks, while encoder-decoder models have not been successfully applied to image-text retrieval.

Data perspective: most state-of-the-art methods (e.g., CLIP, ALBEF, SimVLM) pre-train on image-text pairs collected from the web. Despite the performance gained by scaling up the dataset, our paper shows that noisy web text is suboptimal for vision-language learning.

Two contributions

Multimodal mixture of encoder-decoder (MED): a new model architecture for effective multi-task pre-training and flexible transfer learning. MED can operate as a unimodal encoder, an image-grounded text encoder, or an image-grounded text decoder. The model is pre-trained with three objectives: image-text contrastive learning, image-text matching, and image-conditioned language modeling.

Captioning and Filtering (CapFilt): we fine-tune the pre-trained MED into two modules: a captioner that produces synthetic captions for images, and a filter that removes noisy captions.

Two key findings

We found that the captioner and the filter work together to significantly improve performance across various downstream tasks by bootstrapping the captions. We also found that more diverse captions yield larger gains.

BLIP achieves state-of-the-art performance on multiple vision-language tasks, including image-text retrieval, image captioning, and VQA. It also achieves the best zero-shot results when the model is transferred to two video-language tasks: text-to-video retrieval and video QA.

Model structure

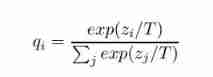

The unimodal encoders are trained with an image-text contrastive (ITC) loss to align the visual and language representations.
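To make the contrastive objective concrete, here is a minimal pure-Python sketch of a symmetric image-text contrastive loss. It is an illustration under stated assumptions, not the paper's implementation: the function names, the temperature value of 0.07, and the use of plain lists as embeddings are all hypothetical, and refinements such as momentum encoders or soft labels are left out.

```python
import math

def log_softmax(row):
    """Numerically stable log-softmax over a list of floats."""
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def itc_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss over a batch of image-text pairs.

    Assumption: image_embs[i] and text_embs[i] form a positive pair, and
    every other combination in the batch is treated as a negative.
    """
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # sim[i][j]: temperature-scaled cosine similarity of image i and text j
    sim = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
            for j in range(n)] for i in range(n)]
    # Image-to-text direction: each row should concentrate on its diagonal entry
    i2t = -sum(log_softmax(sim[i])[i] for i in range(n)) / n
    # Text-to-image direction: same computation on the transposed matrix
    sim_t = [list(col) for col in zip(*sim)]
    t2i = -sum(log_softmax(sim_t[j])[j] for j in range(n)) / n
    return (i2t + t2i) / 2
```

Calling `itc_loss` on a batch where matched pairs point in the same direction gives a loss near zero, while swapping the captions between images makes the loss much larger, which is the pressure that pulls the two representation spaces into alignment.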

The image-grounded text encoder uses additional cross-attention layers to model vision-language interactions, and is trained with an image-text matching (ITM) loss to distinguish positive from negative image-text pairs.
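The matching objective can be read as binary classification over image-text pairs. The sketch below is a simplified illustration: it assumes the multimodal encoder has already produced one match logit per pair, and it picks the most similar non-matching caption as a hard negative. Both helper names are hypothetical, and using a single logit (rather than a two-class head) and an argmax (rather than sampling) are simplifications chosen for brevity.

```python
import math

def hardest_negative(sim_row, positive_idx):
    """Return the index of the most similar non-matching candidate.

    sim_row holds similarity scores between one image and every text in the
    batch; positive_idx is the index of its true caption.
    """
    candidates = [j for j in range(len(sim_row)) if j != positive_idx]
    return max(candidates, key=lambda j: sim_row[j])

def itm_loss(match_logits, labels):
    """Mean binary cross-entropy; label 1 = matched pair, 0 = unmatched pair.

    Uses the numerically stable form max(z, 0) - z*y + log(1 + exp(-|z|)).
    """
    total = 0.0
    for z, y in zip(match_logits, labels):
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(labels)
```

Hard negatives matter because randomly chosen mismatches are usually easy to reject; a caption that is similar to the true one forces the encoder to attend to fine-grained details.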

The image-grounded text decoder replaces the bidirectional self-attention layers with causal self-attention layers, and shares the same cross-attention layers and feed-forward networks as the encoder. The decoder is trained with a language modeling (LM) loss to generate image captions.
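The difference between bidirectional and causal self-attention comes down to which positions each token may attend to. The following sketch builds both masks as boolean matrices; the function names are illustrative and not taken from any library.

```python
def bidirectional_mask(n):
    """Encoder-style mask: every token may attend to every other token."""
    return [[True] * n for _ in range(n)]

def causal_mask(n):
    """Decoder-style mask: token i may attend only to positions 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]

if __name__ == "__main__":
    # Print the causal mask for a 4-token sequence, 1 = allowed, 0 = blocked
    for row in causal_mask(4):
        print("".join("1" if allowed else "0" for allowed in row))
```

With the causal mask, a token never sees the tokens that follow it, so the model can be trained to predict the next word and then used to generate a caption one token at a time.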

The training process

We use a captioner to generate captions and a filter to remove noisy image-text pairs. The captioner and the filter are initialized from the same pre-trained model and fine-tuned on a small-scale human-annotated dataset. The bootstrapped dataset is then used to pre-train a new model.
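The data flow of this bootstrapping step can be sketched as follows. This is a hedged outline rather than the authors' code: `captioner` and `is_match` are hypothetical callables standing in for the fine-tuned captioner and filter, images are represented by string identifiers, and the sketch assumes the filter is applied to both the original web text and the synthetic caption while human-annotated pairs are kept unchanged.

```python
from typing import Callable, Iterable, List, Tuple

Pair = Tuple[str, str]  # (image identifier, caption text)

def bootstrap_dataset(
    web_pairs: Iterable[Pair],
    human_pairs: Iterable[Pair],
    captioner: Callable[[str], str],
    is_match: Callable[[str, str], bool],
) -> List[Pair]:
    """Build a cleaned dataset from noisy web pairs plus human-annotated pairs."""
    # Human-annotated pairs are assumed clean and are kept as-is
    bootstrapped = list(human_pairs)
    for image_id, web_text in web_pairs:
        # Keep the original web text only if the filter judges it a match
        if is_match(image_id, web_text):
            bootstrapped.append((image_id, web_text))
        # Generate a synthetic caption and pass it through the same filter
        synthetic = captioner(image_id)
        if is_match(image_id, synthetic):
            bootstrapped.append((image_id, synthetic))
    return bootstrapped
```

In this outline an image whose web text is rejected can still contribute a training pair through its synthetic caption, so noisy text is replaced rather than the image being discarded.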

Author: Howard
