This article is from OPPO Internet technology team , Reprint please note the author . Meanwhile, welcome to our official account :OPPO_tech, Share with you OPPO Cutting edge Internet technology and activities .

One 、 The problem of resource preemption

With the adjustment of storage architecture , Many application services will run in the same resource pool , Provide unified storage capacity to the outside world . There may be multiple types of resource pool traffic , Such as those in the upper business IO Traffic 、 Data migration within storage 、 Repair 、 Compression etc. , Different traffic through competition to determine the way to the hardware IO The order , So there's no way to ensure some kind of traffic IO Service quality , For example, the internal data migration traffic may take up too much bandwidth and affect the business traffic reading and writing , As a result, the quality of service provided by storage to the outside world is reduced , Due to the uncertainty of the result of resource competition, it is impossible to guarantee that storage can provide a stable cluster environment .

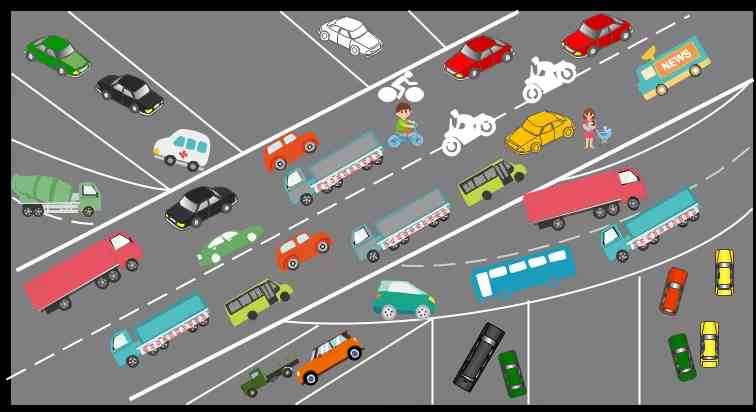

As shown in the traffic map below , The car is retrograde 、 Gaster is at his pleasure , Pedestrians cross 、 There is no scruple in chatting , Finally, there are traffic jams and even safety accidents .

Two 、 How to solve the problem of resource preemption

Compare to the last traffic map , How to avoid this phenomenon, we may have their own views , Two nouns are introduced here

- QoS, The quality of service , Provide end-to-end quality of service according to different requirements of different service types .

- Storage QoS, In the guarantee of service bandwidth and IOPS Under the circumstances , Reasonable allocation of storage resources , Effectively alleviate or control the preemption of resources by application services , To achieve traffic monitoring 、 Rational allocation of resources 、 Important service quality assurance and internal traffic avoidance , Is an essential key technology in the storage field .

that QoS How to do it ? The following is an example of traffic .

2.1 Traffic classification

From the picture above, we can see that no matter what car it is , It's all self-centered , Free from any restrictions , The first way we can get there first is to classify the roads , For example, it is divided into bus lanes 、 Small car lanes 、 Truck Lane 、 Non motorized vehicle lanes and crosswalks, etc , Under normal circumstances, only buses are allowed in the bus lane , And motor vehicles are not allowed on non motorized lanes , In this way, we can ensure that there is no restricted interference between lanes .

Again , There will also be a lot of traffic inside the storage , We can assign different traffic types different “ Vehicle Lane ”, For example, we divide the traffic lanes wider , And the lanes for internal traffic compression can be narrower , This leads to QoS One of the more important overviews is Traffic classification , According to the classification results, more accurate and personalized current limiting control can be carried out .

2.2 Traffic priority

It doesn't work just by classification , Because there are always special circumstances , For example, ambulance rescue 、 Police car arrest, etc , We can't say that this lane can only run ordinary private cars , Some special vehicles ( ambulance , Fire engines and police cars ) They should have priority access .

For storage, business traffic is our Special vehicles , We need to ensure the stability of business flow , For example, the bandwidth of business traffic and IOPS There is no limit on the , And internal traffic such as migration 、 Repair requires limiting the bandwidth or IOPS, Its distribution is fixed “ Vehicle Lane ”. With sufficient resources , Internal traffic can be quiet in their own lanes , But when resources are tight , Such as sudden increase of business flow or continuous high flow level , At this time, we need to limit the width of internal flow , In extreme cases, you can pause . Of course , If the internal traffic stops, it still can't meet the reading and writing requirements of normal business traffic , At this time, we need to consider expansion .

QoS Another important concept is that Prioritization , Execute the pre allocation strategy when resources are sufficient , When resources are tight, dynamically adjust the service resources with low priority , Make appropriate evasion or suspension , To a certain extent, it can make up for the deficiency of the pre allocation scheme .

2.3 Traffic monitoring

As mentioned earlier, when resources are insufficient , We can dynamically adjust other traffic thresholds , So how do we know we're short of resources ? At this time, we need to have a flow monitoring component .

We often use maps when we travel , Get to your destination as quickly as possible by choosing the right route . Generally, lines will be marked with different colors for congestion , For example, red means traffic jam 、 Green means unblocked .

There are two ways for storage to know the current traffic situation of the machine or disk :

- Count the machine load , For example, we often go to the machine and pass through iostat Name to see the disk's io situation , This approach is decoupled from the application on the machine , Just focus on the machine itself

- Count the read and write traffic of each application , For example, a storage node application is deployed on a certain machine , Then we can count the read / write bandwidth and IOPS

Compared with the first method, the second method can achieve more detailed traffic classification within the application , For example, the storage application node mentioned above , It contains a variety of traffic , We can't limit all the flow through the granularity of the machine .

3、 ... and 、 common QoS Current limiting algorithm

3.1 Fixed window algorithm

- It is divided into several current limiting windows according to time , such as 1 Second is the size of a current limiting window ;

- Each window has a counter , The counter is incremented by one for each request passed ;

- When the counter size exceeds the limit size ( For example, you can only pass through in one second 100 A request ), Other requests in the window will be discarded or queued , Wait until the next time node counter is cleared before processing the request .

The ideal flow control effect of fixed window algorithm is shown in the figure on the left , Suppose you set 1 The maximum number of requests allowed per second is 100, that 1 The maximum number of requests per second will not exceed 100.

But most of the time we get the graph on the right , That is, the effect of doubling the flow rate may occur . Like before T1\~T2 Time period no request ,T2\~T3 coming 100 A request , All pass . Next current limiting window counter is cleared , then T3T4 Time has come 100 A request , All processed successfully , This time period T4T5 Even if there is a request in the time period, it can't be processed , So the set threshold is exceeded , Final T2~T4 The request processed in this second is 200 individual , So the traffic doubled .

Summary

- The algorithm is easy to understand , Implement a simple ;

- The flow control is not fine enough , It is easy to double the flow rate ;

- It is suitable for the model with smooth traffic and double traffic .

3.2 Sliding window algorithm

As mentioned above, the fixed window algorithm is prone to uncontrollable traffic ( Traffic doubled ), Sliding window can be considered as an upgrade of fixed window , It can avoid double traffic caused by fixed window .

- The time window is subdivided into several cells , Like a window a second ago ( Maximum allowed to pass through 60 A request ), Now a second is divided into 3 Between communities , The maximum allowed passage between each cell 20 A request ;

- Each interval has a separate counter , It can be understood that an interval is a current limiting window in the fixed window algorithm ;

- When an interval runs out of time , Slide the window back one partition , Old partition (T1~T2) To be discarded , New partition (T4~T5) Add sliding window , As shown in the figure .

Summary

- More accurate flow control , It solves the problem of traffic doubling caused by fixed window algorithm ;

- The granularity of interval partition is not easy to determine , Too small granularity will increase computing resources , If the particle size is too large, the overall flow curve will not be smooth enough , It makes the system load up and down ;

- Suitable for more stable flow , There's no massive traffic surge model .

3.3 Funnel algorithm

- All the water drops ( request ) It's going to pass first “ funnel ” Store it ( Waiting in line );

- When the funnel is full , The excess water will be discarded or put into a waiting queue ;

- The other end of the funnel will discharge the water droplets at a fixed rate .

For the funnel , He didn't know the water drop ( request ) When will it flow into , But it can always ensure that the speed of water will not exceed the set threshold , Requests are always processed at a relatively smooth speed , As shown in the figure , After the system is limited by funnel algorithm , The flow can be guaranteed under a constant threshold .

Summary

- Stable processing speed , It can achieve the effect of rectification , It mainly protects the downstream system ;

- Unable to cope with the sudden increase in traffic , All requests are slowed down through the funnel , Therefore, it is not suitable for the current limiting scenario with traffic burst ;

- It is suitable for models without sudden increase of traffic or want to achieve traffic integration at a fixed rate .

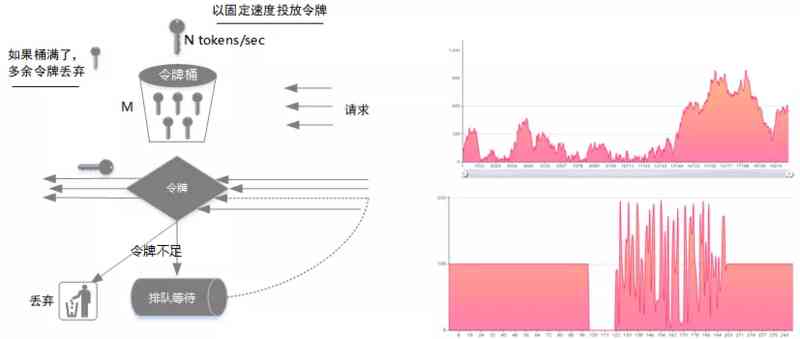

3.4 Token bucket algorithm

Token bucket algorithm is an improvement of funnel algorithm , The main solution is that funnel algorithm can't deal with traffic burst scenarios

- The token is generated at a fixed rate and put into the bucket , For example, one second delivery N A token ;

- If the number of tokens in the token bucket is greater than the token bucket size M, The extra token will be discarded ;

- When all requests arrive , The token is taken from the token first , Get the token and execute the request , If no token is obtained, the request will be discarded or queued for the next attempt to obtain the token .

As shown in the figure , Suppose that the token delivery rate is 100/s, The maximum number of tokens that a bucket can hold 200, When the request speed is greater than the other delivery rate , Requests will be limited to 100/s. If there is no request for a certain period of time , At this point, the number of tokens in the token bucket will slowly increase until 200 individual , This is a request that can be executed at one time 200, That is, the traffic within the set threshold is allowed to be concurrent .

Summary

- The flow is smooth ;

- Allow traffic within a specific threshold to be concurrent ;

- A model suitable for rectifying and allowing a certain degree of sudden increase in flow .

In terms of algorithm alone , No algorithm is the best or the worst , It is necessary to select the most appropriate algorithm according to the actual traffic characteristics and system requirements .

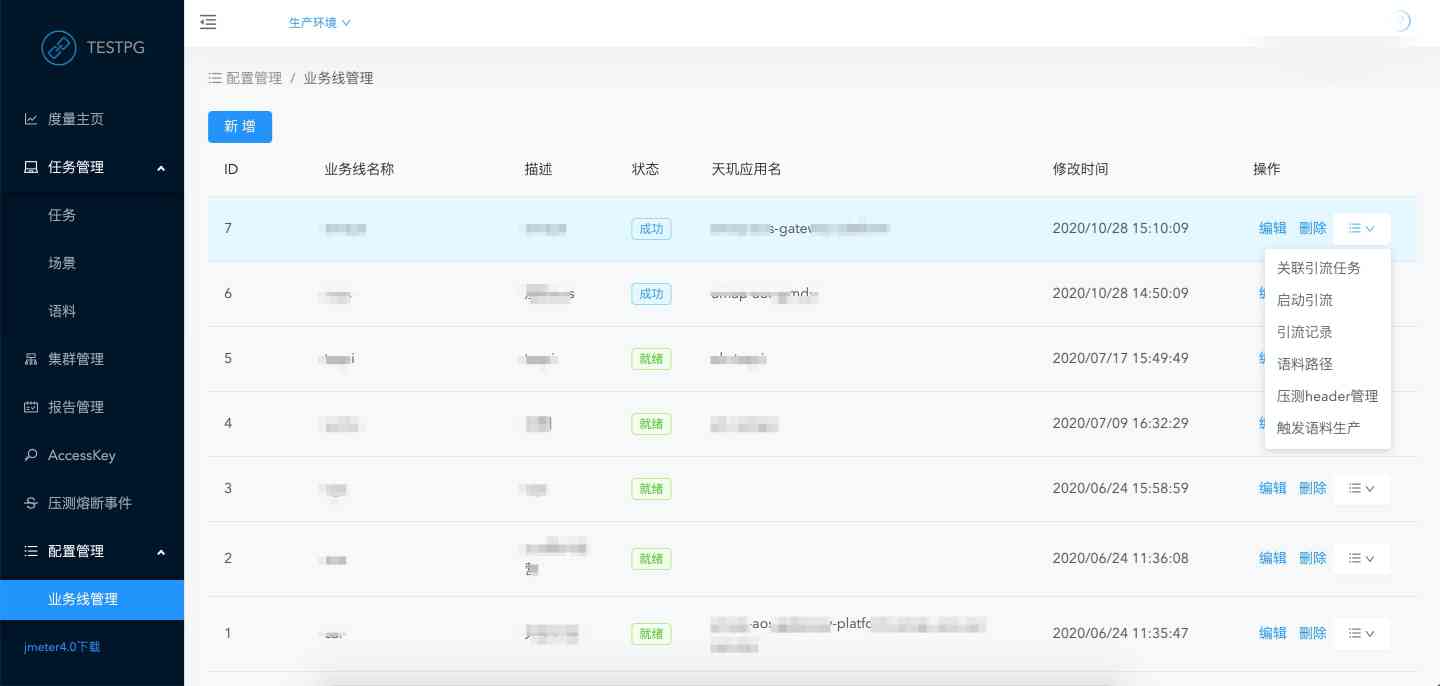

Four 、 Storage QoS Design and Implementation

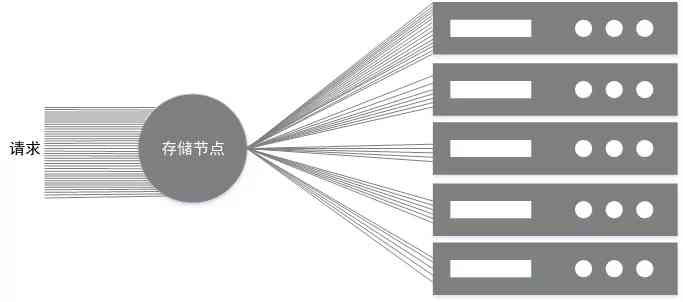

4.1 demand

Generally speaking, a machine will deploy at least one storage node , The node is responsible for reading and writing requests of multiple disks , Storage requests are divided into many types , For example, normal business read and write traffic 、 Disk damage repair traffic 、 The space compression traffic after data hole appears in data deletion and erasure code to reduce the storage cost of multiple copies (EC) Migration traffic and so on , Different traffic in the same storage node will compete with each other to seize system resources , In order to better ensure the quality of business services , Need bandwidth for traffic and IOPS Limit control , For example, you need to meet the following conditions :

- You can limit the bandwidth of traffic at the same time IOPS, Separate bandwidth or IOPS The limitation will lead to another parameter out of control and affect the stability of the system , For example, only the bandwidth is controlled , But there is no limit IOPS, For a large number of small IO The scene will lead to the machine ioutil Too high ;

- It can realize the limitation of disk granularity , Avoid disk traffic overload caused by machine granularity limit , As shown in the picture ,ec The maximum bandwidth of the traffic limiting node is 10Mbps, The expected effect is to allocate 2Mbps, But it's very likely that 10Mbps All assigned to the first disk ;

- Can support traffic classification control , Set different current limiting parameters according to different flow characteristics , For example, business traffic is what we need to focus on protecting , Therefore, the traffic flow cannot be limited , and EC、 Other traffic such as compression is internal flow , The appropriate current limiting threshold can be configured according to its characteristics ;

- It can support dynamic adaptation of current limiting threshold , Because the business flow cannot be controlled , It's like a horse to the system “ Runaway Mustang ”, There may be a sudden increase in 、 A sudden decrease or sustained peak , For the scenario of sudden increase or continuous peak, the system needs to allocate resources as much as possible , This means that the flow limiting threshold of internal flow needs to be set dynamically, which is pause avoidance .

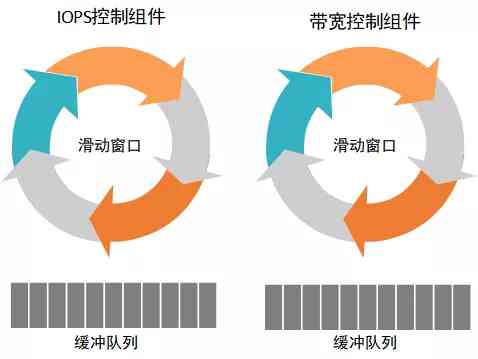

4.2 Algorithm to choose

As mentioned earlier QoS There are a lot of algorithms for , Here we choose the sliding window algorithm according to the actual needs , There are mainly the following reasons :

- The system needs to control the internal flow, which is relatively stable and gentle ;

- It can avoid traffic burst and affect business traffic ;

QoS Components in addition to the sliding window , You also need to add a Cache queue , When a request is limited, it cannot be discarded , It needs to be added to the cache queue , Wait for the next time window to execute , As shown in the figure below .

4.3 Bandwidth and IOPS At the same time limit

In order to achieve bandwidth and IOPS Control at the same time ,QoS The component will consist of two parts :IOPS The control component is responsible for controlling the reading and writing of IOPS, The bandwidth control component is responsible for controlling the bandwidth of read and write , Bandwidth control and IOPS Control is like , For example, the bandwidth limit threshold is 1Mbps, So it means that you can only read and write in one second 1048576Bytes Size data ; Assume IOPS Limit to 20iops, It can only be sent within one second 20 Second read / write requests , As for the size of each read and write request, it doesn't matter .

The two components are isolated from each other , On the whole, they influence each other , For example, when IOPS When control is low , The corresponding bandwidth may also be smaller , When the bandwidth control is very small IOPS It's also smaller .

Let's take repairing traffic as an example , Test in three groups

- The first group :20iops-1Mbps

- The second group :40iops-2Mbps

- The third group :80iops-4Mbps

The test results are shown in the figure above , As you can see from the diagram qos The module can control the bandwidth of traffic and iops Keep within the set threshold .

4.4 Traffic classification restrictions

In order to distinguish between different traffic , We label traffic , And initialize one for different traffic on different disks QoS Components ,QoS Components are independent of each other and do not affect each other , Finally, it can achieve the bandwidth of disk granularity and IOPS control .

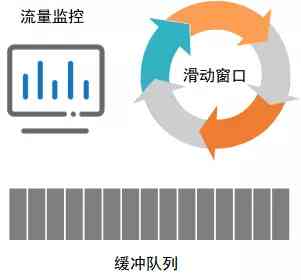

4.5 Dynamic threshold adjustment

aforementioned QoS Current limiting scheme , Although it can well control the internal traffic bandwidth or IOPS Within the threshold range , But there are some shortcomings

- Not aware of the status quo of business traffic , When the business flow suddenly increases or continues to peak , There will still be resource preemption in internal traffic and business traffic , Unable to achieve traffic avoidance or pause effect .

- The current limiting of different traffic on the disk is independent of each other , When the overall traffic bandwidth of the disk or IOPS Overload , The internal traffic threshold can not be dynamically lowered, which will also affect the quality of service of business traffic .

So you need to QoS Some improvements have been made to the components , increase Flow monitoring components , The monitoring component mainly monitors the bandwidth of different traffic types and IOPS, dynamic QoS The current limiting scheme supports the following functions :

- Get the growth rate of traffic through monitoring components , If there is a sudden increase in flow , Then the sliding window threshold is dynamically lowered to reduce the internal traffic ; When traffic returns to flat , Restore the initial threshold of sliding window to make full use of system resources .

- Get the overall disk traffic through monitoring components , When the overall flow size exceeds the set threshold , Then dynamically reduce the size of the sliding window ; When the overall traffic size is lower than the set threshold , Then restore the sliding window to the initial threshold .

Set the overall disk traffic threshold below 2Mbps-40iops,ec The threshold of traffic is 10Mbps-600iops

When the overall disk traffic reaches the disk threshold, the threshold of other internal traffic will be adjusted dynamically , As you can see from the test results ec There are some fluctuations in the dynamic threshold adjustment , After the overall disk traffic goes down ec The traffic threshold will return to the original threshold (10Mbps-600iops), But you can see that the overall disk traffic is not controlled in 2Mbps-40iops following , It's going up and down in this range , Therefore, we need to ensure that the internal traffic threshold is less than the overall traffic threshold of the disk during initialization , Only in this way can we achieve a relatively stable internal flow control effect .

4.6 Pseudo code implementation

I mentioned storage QoS It mainly limits the bandwidth of reading and writing and IOPS, How to realize it ?IO Reading and writing mainly involves the following interfaces

Read(p []byte) (n int, err error)

ReadAt(p []byte, off int64) (n int, err error)

Write(p []byte) (written int, err error)

WriteAt(p []byte, off int64) (written int, err error)So we need to encapsulate the above interfaces again , It is mainly to add current limiting components

Bandwidth control components to achieve

Read Realization

// Assume c Flow limiting components

func (self *bpsReader) Read(p []byte) (n int, err error) {

size := len(p)

size = self.c.assign(size) // Request to read file size

n, err = self.underlying.Read(p[:size]) // Read the corresponding size data according to the application size

self.c.fill(size - n) // If the read data size is smaller than the application size , Fill the unused count into the current limiting window

return

}Read The following will happen after current limiting

- Read size n<len(p) And err=nil, For example, you need to read 4K size , But the current time window can only be read 3K, This is allowed

Here you might think ,Read How can the realization of current limiting not make a loop ? For example, it is not returned until the specified size data is read . We need to refer to the standard for the implementation here IO The definition of the read interface of , It says In the process of reading, if the prepared data is insufficient len(p) size , Here we return the ready data directly , Instead of waiting , In other words, the standard semantics is to support read-only parts of the prepared data , So the current limiting implementation here is consistent .

// Reader is the interface that wraps the basic Read method.

//

// Read reads up to len(p) bytes into p. It returns the number of bytes

// read (0 <= n <= len(p)) and any error encountered. Even if Read

// returns n < len(p), it may use all of p as scratch space during the call.

// If some data is available but not len(p) bytes, Read conventionally

// returns what is available instead of waiting for more.

// Omit

//

// Implementations must not retain p.

type Reader interface {

Read(p []byte) (n int, err error)

}ReadAt Realization

Here's how ReadAt The implementation of the , From the definition of the interface , May feel that ReadAt And Read Little difference , It just specifies the starting position of data reading , Careful partners may find that we have a layer of loop when we implement it here , Need to read the specified size data or error to return , comparison Read for ReadAt It's not allowed to appear \n<len(p) And err==nil\ The situation of .

func (self *bpsReaderAt) ReadAt(p []byte, off int64) (n int, err error) {

for n < len(p) && err == nil {

var nn int

nn, err = self.readAt(p[n:], off)

off += int64(nn)

n += nn

}

return

}

func (self *bpsReaderAt) readAt(p []byte, off int64) (n int, err error) {

size := len(p)

size = self.c.assign(size)

n, err = self.underlying.ReadAt(p[:size], off)

self.c.fill(size - n)

return

}// ReaderAt is the interface that wraps the basic ReadAt method.

//

// ReadAt reads len(p) bytes into p starting at offset off in the

// underlying input source. It returns the number of bytes

// read (0 <= n <= len(p)) and any error encountered.

//

// When ReadAt returns n < len(p), it returns a non-nil error

// explaining why more bytes were not returned. In this respect,

// ReadAt is stricter than Read.

//

// Even if ReadAt returns n < len(p), it may use all of p as scratch

// space during the call. If some data is available but not len(p) bytes,

// ReadAt blocks until either all the data is available or an error occurs.

// In this respect ReadAt is different from Read.

// Omit

//

// Implementations must not retain p.

type ReaderAt interface {

ReadAt(p []byte, off int64) (n int, err error)

}Write Realization

Write The implementation of the interface is relatively simple , Loop until the data is written or an error occurs

func (self *bpsWriter) Write(p []byte) (written int, err error) {

size := 0

for size != len(p) {

p = p[size:]

size = self.c.assign(len(p))

n, err := self.underlying.Write(p[:size])

self.c.fill(size - n)

written += n

if err != nil {

return written, err

}

}

return

}// Writer is the interface that wraps the basic Write method.

//

// Write writes len(p) bytes from p to the underlying data stream.

// It returns the number of bytes written from p (0 <= n <= len(p))

// and any error encountered that caused the write to stop early.

// Write must return a non-nil error if it returns n < len(p).

// Write must not modify the slice data, even temporarily.

//

// Implementations must not retain p.

type Writer interface {

Write(p []byte) (n int, err error)

}WriteAt Realization

The implementation here follows Write similar

func (self *bpsWriterAt) WriteAt(p []byte, off int64) (written int, err error) {

size := 0

for size != len(p) {

p = p[size:]

size = self.c.assign(len(p))

n, err := self.underlying.WriteAt(p[:size], off)

self.c.fill(size - n)

off += int64(n)

written += n

if err != nil {

return written, err

}

}

return

}// WriterAt is the interface that wraps the basic WriteAt method.

//

// WriteAt writes len(p) bytes from p to the underlying data stream

// at offset off. It returns the number of bytes written from p (0 <= n <= len(p))

// and any error encountered that caused the write to stop early.

// WriteAt must return a non-nil error if it returns n < len(p).

//

// If WriteAt is writing to a destination with a seek offset,

// WriteAt should not affect nor be affected by the underlying

// seek offset.

//

// Clients of WriteAt can execute parallel WriteAt calls on the same

// destination if the ranges do not overlap.

//

// Implementations must not retain p.

type WriterAt interface {

WriteAt(p []byte, off int64) (n int, err error)

}IOPS The control component implements

IOPS The implementation of the control component is similar to bandwidth , I won't go into details here

Read Interface implementation

func (self *iopsReader) Read(p []byte) (n int, err error) {

self.c.assign(1) // Here you just need to get a count , If there is none in the current window , You will wait until you get one and wake up to perform the next step

n, err = self.underlying.Read(p)

return

}ReadAt Interface implementation

func (self *iopsReaderAt) ReadAt(p []byte, off int64) (n int, err error) {

self.c.assign(1)

n, err = self.underlying.ReadAt(p, off)

return

}Think about the ReadAt Why don't you need to cycle like bandwidth ?

Write Interface implementation

func (self *iopsWriter) Write(p []byte) (written int, err error) {

self.c.assign(1)

written, err = self.underlying.Write(p)

return

}WriteAt

func (self *iopsWriterAt) WriteAt(p []byte, off int64) (n int, err error) {

self.c.assign(1)

n, err = self.underlying.WriteAt(p, off)

return

}

![What is website [new four modernizations]?](/img/3b/00bc81122d330c9d59909994e61027.jpg)