
Calling classic architectures and building models based on them

2022-07-01 01:22:00 【L_ bloomer】

1 Calling classic architectures

Most of the time, we don't create our own architecture from scratch. Instead, we choose a suitable architecture or idea from the classic architectures and customize it according to the needs of our data (of course, we can only do this with architectures we have studied and whose principles we understand; otherwise we won't know where to start when modifying them). In PyTorch, almost all classic architectures are already implemented, so we can import them directly. Unfortunately, most of the models imported directly cannot meet our specific needs, but we can still use PyTorch's implementations as the basis of our own architectures.

PyTorch has a very important and easy-to-use companion package: torchvision. It consists mainly of 3 subpackages: torchvision.datasets, torchvision.models, and torchvision.transforms. Complete model architectures are called from torchvision.models, the module of common computer vision (CV) models. In torchvision.models, architectures/models are divided into 4 broad types: classification, semantic segmentation, object detection/instance segmentation and keypoint detection, and video classification (the last three live in the submodules torchvision.models.segmentation, torchvision.models.detection, and torchvision.models.video). For each architecture, models contains at least one parent class that implements the architecture itself (named in CamelCase) and a function named in all lowercase that builds the model and can load pretrained weights. Architectures that come in different depths or structures may have several lowercase builders. (The relationship is like parent and child: the basic skeleton versus a concrete implementation.)

For AlexNet, which has only one architecture and no variants of different depth, the two have the same structure:


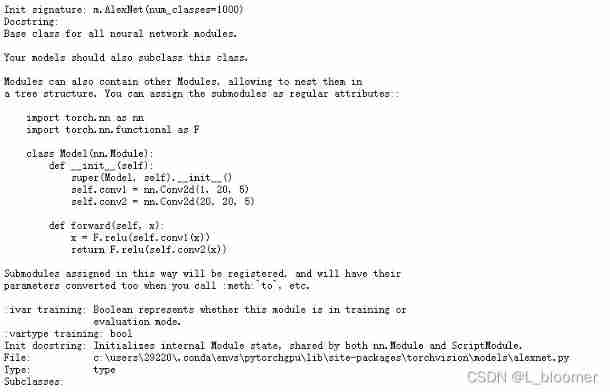

```python
import torchvision.models as m

m.AlexNet()  # parent class: inspect which parameters it accepts, e.g. help(m.AlexNet)
m.alexnet()  # lowercase builder: returns an AlexNet instance and can load pretrained weights
```

For residual networks, the parent class implements the general architecture, while each lowercase version has the required parameters already filled in:

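```python
import torchvision.models as m
from torchvision.models.resnet import Bottleneck

# Parent class: the block type and blocks per stage set the depth (this is the ResNet-152 configuration).
m.ResNet(Bottleneck, [3, 8, 36, 3])
m.resnet152()  # depth and parameters are fixed; this builder can load pretrained weights
```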

When actually using a model, we almost always call the lowercase version directly, unless we want to modify the internal architecture on a large scale. Calling one is very simple.

Because network architectures differ, different architecture classes do not share the same layer attributes (for example, ResNet exposes its final layer as `fc`, while AlexNet and VGG use `classifier`), so we need to inspect each architecture ourselves before calling it. We can then modify the hyperparameter settings of some layers according to our needs.

If we want to modify a classic architecture, we have to do it layer by layer. Changing one layer of a convolutional network may affect all subsequent layers, so we usually only fine-tune the input and output layers and leave the middle layers of the architecture unchanged. However, most of the time, applying a classic architecture wholesale does not meet our modeling needs, so we need to build our own architecture on top of a classic one.

2 Self-built architectures based on classic architectures

Almost all modern classic architectures were built for the 224x224, 1000-class ImageNet dataset, so almost all of them downsample 5 times (via pooling layers or convolutions with stride 2), taking 224x224 down to 7x7. When our dataset is large enough, we prefer to run a classic architecture on it directly. But when our images are small, we don't have to enlarge them to 224x224 to fit a classic architecture (this can be done with transforms.Resize, but predictions on upscaled images may not be very good). Upscaling not only increases compute requirements and training time, but may also fail to achieve good results. Where possible, we prefer to keep small images at their original size to control the overall amount of computation.

For convolutional architectures, changing the number of input and output channels of the feature maps only affects one or two layers, whereas changing the spatial size of the feature maps affects the whole architecture. Therefore, we usually "extract" parts of a classic architecture to use, and only occasionally build a new architecture from scratch. Suppose we are using a 28x28 dataset similar in size to Fashion-MNIST. On such a dataset, we can realistically downsample only 2 times: once from 28x28 to 14x14, and once from 14x14 to 7x7. Such a dataset is not well suited to classic architectures with tens or hundreds of millions of parameters. In this case, the built-in architectures in torchvision.models cannot flexibly meet our requirements, so instead of using them directly, we often rebuild an architecture on their basis.

Consider, for example, a self-built architecture based on VGG and residual networks.

There are many different ways to build an architecture. The most common is to deepen the network in the VGG style; another is to deepen it with blocks from classic networks (such as the classic residual unit in residual networks or the inception structure in GoogLeNet). Experience shows that adding some ordinary convolutional layers before inception or residual units works well, so here I first use the VGG idea and then residual units. You can freely combine whatever structures you like.

边栏推荐

- Installing mongodb database in Windows Environment

- Oracle table creation and management

- 用Steam教育启发学生多元化思维

- 双位置继电器DLS-5/2 DC220V

- What is the difference between Pipeline and Release Pipeline in azure devops?

- Shift operators

- Hoo research | coinwave production - nym: building the next generation privacy infrastructure

- 双位置继电器ST2-2L/AC220V

- Vnctf 2022 cm CM1 re reproduction

- 【学习笔记】简单dp

猜你喜欢

Analysis of blocktoken principle

The quantity and quality of the devil's cold rice 101; Employee management; College entrance examination voluntary filling; Game architecture design

ORB-SLAM2源码学习(二)地图初始化

2021电赛F题openmv和K210调用openmv api巡线,完全开源。

uniapp官方组件点击item无效,解决方案

![[learning notes] double + two points](/img/d4/1ef449e3ef326a91966da11b3c8210.png)

[learning notes] double + two points

Dls-20 double position relay 220VDC

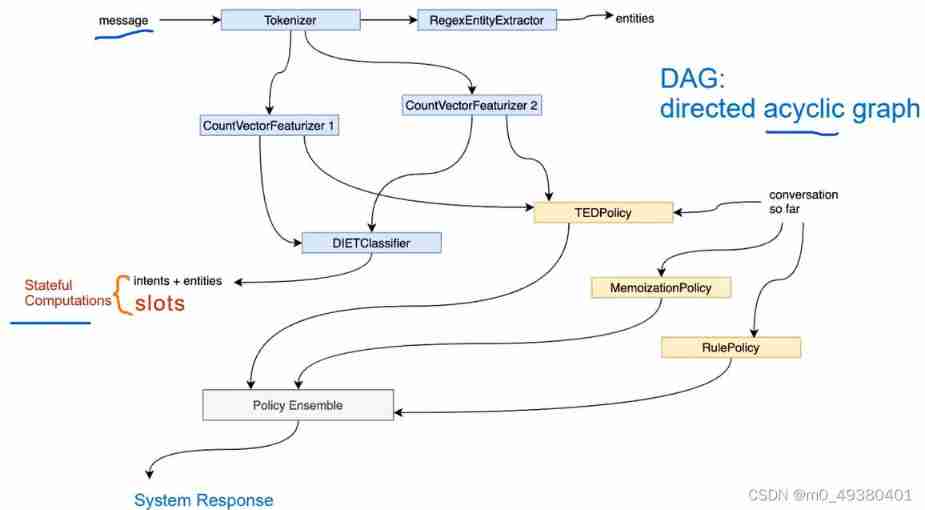

Gavin's insight on the transformer live broadcast course - rasa project's actual banking financial BOT Intelligent Business Dialogue robot system startup, language understanding, dialogue decision-mak

Kongyiji's first question: how much do you know about service communication?

解析融合学科本质的创客教育路径

随机推荐

Green, green the reed. dew and frost gleam.

K210工地安全帽

[LeetCode] 爬楼梯【70】

The question of IBL precomputation is finally solved

ORB-SLAM2源码学习(二)地图初始化

个人博客搭建与美化

Chromatic judgement bipartite graph

Xjy-220/43ac220v static signal relay

Metauniverse and virtual reality (II)

Impact relay zc-23/dc220v

Share your own terminal DIY display banner

Two-stage RO: part 1

解析创客教育实践中的智慧原理

DX-11Q信号继电器

5. TPM module initialization

JS方法大全的一个小文档

js中把数字转换成汉字输出

解析融合学科本质的创客教育路径

Mustache syntax

Openmv and k210 of the f question of the 2021 video game call the openmv API for line patrol, which is completely open source.