
A Summary of Activation Functions in Deep Learning: Types and Usage

2022-08-03 13:45:00 【vcsir】


Introduction

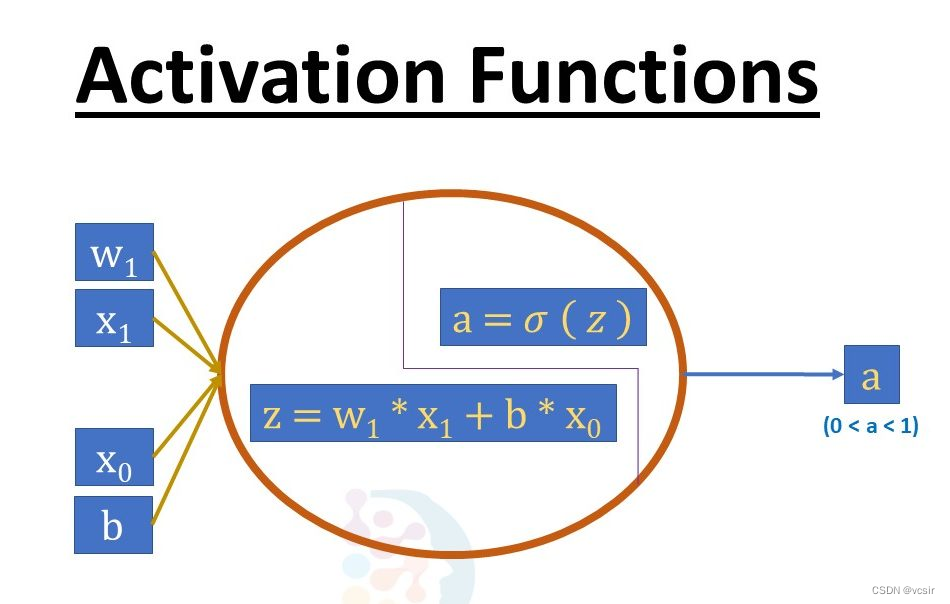

An activation function decides whether a neuron should be activated. It takes the weighted sum of the neuron's inputs, adds the bias, and transforms the result. Its purpose is to introduce nonlinearity into the neuron's output. A neural network without activation functions is essentially a linear regression model. The nonlinear transformation each layer applies to its input is what lets the network learn and perform more complex tasks. To choose an activation function that gives a particular neural network model the nonlinearity and accuracy it needs, you have to understand how each function and its derivative are defined and applied, and what each one's advantages and disadvantages are.

Neurons in a neural network operate according to their weights, biases, and activation function. The network's weights and biases are adjusted according to the error of its output, a process called backpropagation. Gradients and the error together determine how the weights and biases are updated, so an activation function must support backpropagation. In practice, this means it needs a usable derivative.
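The following is a minimal sketch of the two ideas above: a single neuron computing "weighted sum plus bias, then activation", followed by one backpropagation (gradient descent) step. It assumes a sigmoid activation, a squared-error loss, and a learning rate of 0.5. These choices, along with the input values and the helper names `neuron_forward` and `sgd_step`, are hypothetical and only for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def neuron_forward(x, w, b):
    """Weighted sum of the inputs plus the bias, then a nonlinear activation."""
    z = np.dot(w, x) + b
    return sigmoid(z)


def sgd_step(x, y, w, b, lr=0.5):
    """One backpropagation step for a single sigmoid neuron,
    assuming the squared-error loss L = 0.5 * (a - y) ** 2."""
    a = neuron_forward(x, w, b)
    # Chain rule: dL/dz = (a - y) * sigmoid'(z), where sigmoid'(z) = a * (1 - a)
    delta = (a - y) * a * (1 - a)
    w_new = w - lr * delta * x  # dL/dw = delta * x
    b_new = b - lr * delta      # dL/db = delta
    return w_new, b_new


x = np.array([1.0, 2.0])   # hypothetical input
w = np.array([0.1, -0.2])  # hypothetical initial weights
b = 0.0
y = 1.0                    # hypothetical target

loss_before = 0.5 * (neuron_forward(x, w, b) - y) ** 2
w, b = sgd_step(x, y, w, b)
loss_after = 0.5 * (neuron_forward(x, w, b) - y) ** 2
print(loss_before, loss_after)
```

The derivative of the activation, `a * (1 - a)`, appears directly in the update. This is why the text says the activation function must support backpropagation.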

Why do we need it?

Nonlinear activation functions: without an activation function, a neural network is just a linear regression model. The activation function transforms its input nonlinearly, which allows the network to learn and complete more complex tasks.

Types of activation functions

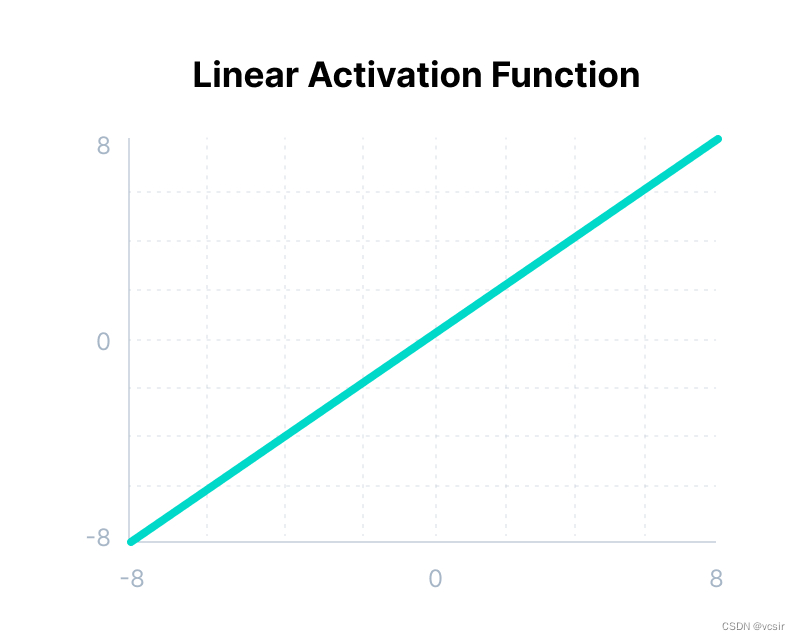

1) Linear activation function

• Equation: $y = ax$, which is the familiar equation of a straight line.

• Range: -inf to +inf

• Application: the linear activation function is typically used in only one place, the output layer.

• Problem: the derivative of a linear function is a constant that no longer depends on the input x. The gradient therefore carries no information about the input, and the network cannot learn any nonlinear behavior.
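The problem described above can be checked numerically. In the sketch below, two layers with a linear (identity) activation collapse into a single linear layer, and the numerical slope of $y = ax$ is the same constant at every input. The weights, their shapes, the value `a = 3.0`, and the step size `h` are arbitrary assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed; the weights themselves are arbitrary

# Two stacked layers with a linear (identity) activation
W1, b1 = rng.normal(size=(4, 3)), rng.normal(size=4)
W2, b2 = rng.normal(size=(2, 4)), rng.normal(size=2)


def two_linear_layers(x):
    return W2 @ (W1 @ x + b1) + b2


# The same mapping written as ONE linear layer: W = W2 W1, b = W2 b1 + b2
W = W2 @ W1
b = W2 @ b1 + b2

x = rng.normal(size=3)
same = np.allclose(two_linear_layers(x), W @ x + b)
print(same)  # True


# The slope of f(x) = a * x is the constant a, whatever x is
a = 3.0


def linear(x):
    return a * x


h = 1e-6  # step for a central finite difference
slopes = [(linear(x0 + h) - linear(x0 - h)) / (2 * h) for x0 in (-10.0, 0.0, 10.0)]
print(slopes)  # each value is (numerically) 3.0
```

No matter how many linear layers are stacked, the result is still one linear map, so depth adds no expressive power without a nonlinear activation.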

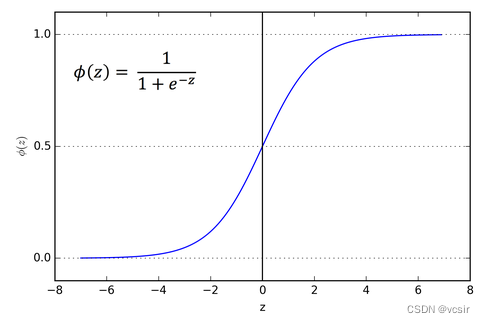

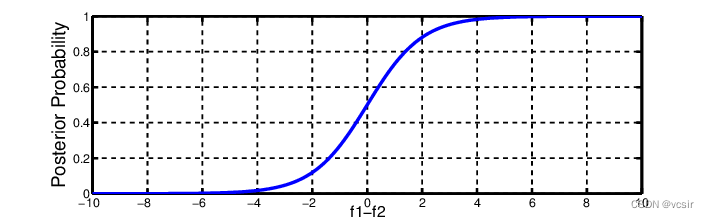

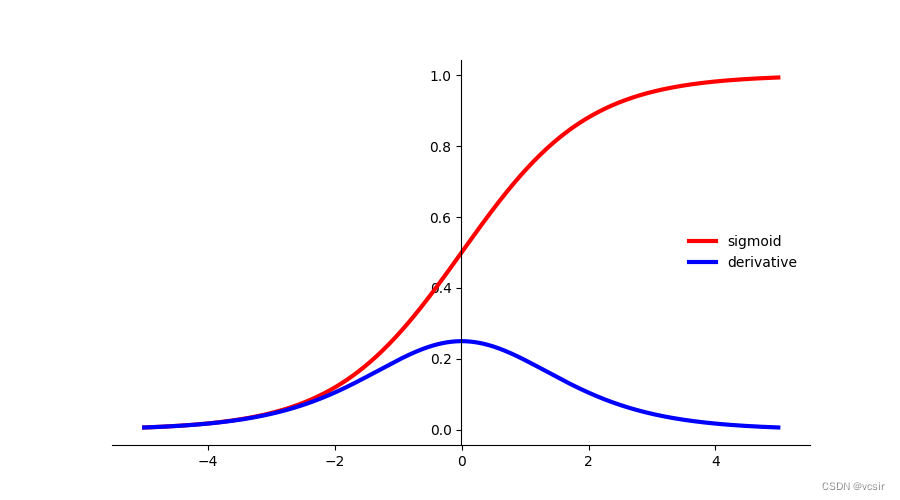

2) Sigmoid activation function:

• It is an S-shaped function.

• Formula: $A = \frac{1}{1 + e^{-x}}$

• Nonlinear. For x between -2 and 2 the curve is steep, which means small changes in x produce comparatively large changes in the output.

• Range: 0 to 1
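Below is a minimal sketch of the sigmoid and its derivative $\sigma'(x) = \sigma(x)(1 - \sigma(x))$. It uses a split formulation so that `np.exp` never receives a large positive argument, which avoids overflow for large |x|. That stabilization is an implementation choice on our part and is not something the article describes. The function assumes NumPy array inputs, and the sample points are arbitrary.

```python
import numpy as np


def sigmoid(x):
    """Sigmoid 1 / (1 + exp(-x)), computed without overflow for large |x|."""
    x = np.asarray(x, dtype=float)
    out = np.empty_like(x)
    pos = x >= 0
    # For x >= 0, exp(-x) <= 1, so the usual formula is safe
    out[pos] = 1.0 / (1.0 + np.exp(-x[pos]))
    # For x < 0, rewrite as exp(x) / (1 + exp(x)) so exp() stays <= 1
    ex = np.exp(x[~pos])
    out[~pos] = ex / (1.0 + ex)
    return out


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)


xs = np.array([-5.0, -2.0, 0.0, 2.0, 5.0])
print(sigmoid(xs))       # sigmoid(0) = 0.5, values approach 0 and 1 at the ends
print(sigmoid_grad(xs))  # the slope peaks at 0.25 when x = 0
```

The derivative is largest around x = 0 and becomes very small for |x| of about 5 or more. This matches the "steep in the middle, flat at the ends" shape described above.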

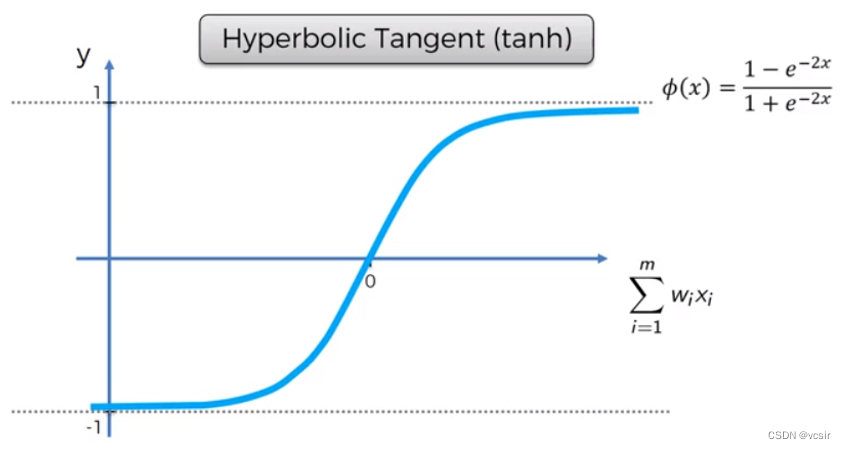

3) Tanh activation function:

The tanh function, also known as the hyperbolic tangent function, almost always works better than the sigmoid function. It is essentially a rescaled and shifted sigmoid: the two are closely related, and each can be derived from the other.

• Equation: $f(x) = \tanh(x) = \frac{2}{1 + e^{-2x}} - 1$, or equivalently $\tanh(x) = 2\,\sigma(2x) - 1$, where $\sigma$ is the sigmoid function

• Range: -1 to +1

• Usage: usually used in the hidden layers of a neural network. Because its values range from -1 to 1, the mean output of a hidden layer is 0 or very close to it. This centers the data, which makes learning in the next layer easier.
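The following sketch checks the identity $\tanh(x) = 2\,\sigma(2x) - 1$ against NumPy's built-in `np.tanh`. It also compares the mean outputs of tanh and sigmoid for inputs that are symmetric around 0. The input grid is an arbitrary assumption.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def tanh_via_sigmoid(x):
    # tanh(x) = 2 * sigmoid(2x) - 1
    return 2.0 * sigmoid(2.0 * x) - 1.0


x = np.linspace(-3, 3, 601)  # inputs symmetric around 0
print(np.allclose(tanh_via_sigmoid(x), np.tanh(x)))  # True

# Mean activation for zero-centred inputs: tanh stays near 0, sigmoid near 0.5
tanh_mean = np.tanh(x).mean()
sigmoid_mean = sigmoid(x).mean()
print(tanh_mean, sigmoid_mean)
```

Tanh outputs average out to about 0, while sigmoid outputs average about 0.5. This is the centering effect the usage note above refers to.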

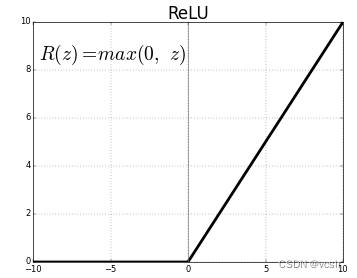

4) ReLU activation function:

This is the most commonly used activation function, mainly in the hidden layers of neural networks.

• Formula: $A(x) = \max(0, x)$. If x is positive, it returns x; otherwise, it returns 0.

• Range: [0, +inf)

• Nonlinear in nature, which means errors can easily be backpropagated and multiple layers of neurons can be activated by the ReLU function.

• Application: because it involves simpler math, ReLU is cheaper to compute than tanh and sigmoid. Only a fraction of the neurons are active at any time, which makes the network sparse and computationally efficient.

In short, networks using ReLU typically learn much faster than those using sigmoid or tanh.
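Below is a minimal sketch of ReLU, its derivative, and the sparsity it produces. ReLU has no derivative at x = 0, so the sketch uses 0 at that point; this is a common convention, not something the article specifies. The pre-activations are hypothetical standard-normal samples, and under that assumption about half of them are zeroed out.

```python
import numpy as np


def relu(x):
    return np.maximum(0.0, x)


def relu_grad(x):
    # 1 for x > 0, 0 for x < 0; at x == 0 we use 0 (a convention)
    return (x > 0).astype(float)


rng = np.random.default_rng(42)
pre_activations = rng.normal(size=10_000)  # hypothetical zero-mean inputs
acts = relu(pre_activations)

sparsity = np.mean(acts == 0.0)  # fraction of inactive (zero) units
print(f"fraction of inactive units: {sparsity:.2f}")
```

The cost per element is a single comparison, with no exponentials. This is the reason ReLU is cheaper to compute than sigmoid or tanh.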

5) Softmax activation function

The softmax function generalizes the sigmoid function to multiple classes and comes in handy for classification problems.

• Nonlinear in nature

• Usage: typically used for multi-class classification. Softmax exponentiates each output and divides it by the sum of all the exponentiated outputs. This squeezes each class's output into the range 0 to 1 and makes the outputs sum to 1.

• Output: softmax works best in the output layer of a classifier, where we want the probability that the input belongs to each category.
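The following is a minimal sketch of softmax, $\mathrm{softmax}(z)_i = e^{z_i} / \sum_j e^{z_j}$. Before exponentiating, it subtracts the largest logit. This is a common numerical-stability trick that cancels out in the ratio, and it is our addition rather than part of the article. The logits are made-up example values. The last lines show the link to sigmoid: with two classes and logits `[z, 0]`, softmax reduces to the sigmoid of `z`.

```python
import numpy as np


def softmax(z, axis=-1):
    """Exponentiate and normalise so the outputs along `axis` sum to 1."""
    z = np.asarray(z, dtype=float)
    # Subtracting the max leaves the result unchanged but prevents overflow
    shifted = z - np.max(z, axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / np.sum(e, axis=axis, keepdims=True)


logits = np.array([2.0, 1.0, 0.1])  # hypothetical classifier outputs
p = softmax(logits)
print(p, p.sum())  # probabilities for each class; they sum to 1

# With two classes, softmax([z, 0])[0] equals sigmoid(z)
z = 1.7
two_class = softmax(np.array([z, 0.0]))[0]
print(np.isclose(two_class, 1.0 / (1.0 + np.exp(-z))))  # True
```

The `axis` parameter lets the same function normalise each row of a batch of logits independently.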

Choosing the right activation function

If you are not sure which activation function to use, just choose ReLU. It is widely used and is currently the default choice in most cases. If the output layer performs binary classification (for example, recognition or detection), the sigmoid function is the right choice.
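The advice above can be sketched as a tiny two-layer network: ReLU in the hidden layer and sigmoid at a single output unit for binary classification. The layer sizes (4 inputs, 8 hidden units), the random untrained weights, the 0.5 decision threshold, and the name `predict_proba` are all hypothetical choices made for illustration.

```python
import numpy as np


def relu(x):
    return np.maximum(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


rng = np.random.default_rng(0)
# Hypothetical sizes: 4 input features, 8 hidden units, 1 output; weights are untrained
W1, b1 = rng.normal(scale=0.5, size=(8, 4)), np.zeros(8)
W2, b2 = rng.normal(scale=0.5, size=(1, 8)), np.zeros(1)


def predict_proba(X):
    """X has shape (n_samples, 4); returns P(class = 1) for each sample."""
    hidden = relu(X @ W1.T + b1)               # ReLU in the hidden layer
    return sigmoid(hidden @ W2.T + b2)[:, 0]   # sigmoid at the binary output


X = rng.normal(size=(5, 4))  # hypothetical batch of 5 samples
probs = predict_proba(X)
labels = (probs >= 0.5).astype(int)  # threshold the probabilities at 0.5
print(probs, labels)
```

For a multi-class problem, the same structure would typically swap the sigmoid output for a softmax over several output units.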

Python code implementation

```python
import numpy as np
import matplotlib.pyplot as plt


# Implement the sigmoid function and its derivative: s'(x) = s(x) * (1 - s(x))
def sigmoid(x):
    s = 1 / (1 + np.exp(-x))
    ds = s * (1 - s)
    return s, ds


if __name__ == '__main__':
    x = np.arange(-5, 5, 0.01)
    s, ds = sigmoid(x)  # compute once and reuse for both curves

    fig, ax = plt.subplots(figsize=(9, 5))
    # Put the y-axis at the horizontal centre of the plot (x = 0 here)
    # and hide the top and right spines
    ax.spines['left'].set_position('center')
    ax.spines['right'].set_color('none')
    ax.spines['top'].set_color('none')
    ax.xaxis.set_ticks_position('bottom')
    ax.yaxis.set_ticks_position('left')

    ax.plot(x, s, color='#ff0000', linewidth=3, label='sigmoid')
    ax.plot(x, ds, color='#0000ff', linewidth=3, label='derivative')
    ax.legend(loc="center right", frameon=False)

    plt.show()  # blocks until the window is closed
```

Conclusion

In this article, I have sorted out and summarized the different types of activation functions used in deep learning for your reference and study. If anything is incorrect, please point it out. Thank you!

边栏推荐

猜你喜欢

随机推荐

JS get browser type

Nanoprobes 金纳米颗粒标记试剂丨1.4 nm Nanogold 标记试剂

Petri网-2、有向网

类和对象(中上)

Golang strings

Classes and Objects (lower middle)

农产品企业如何进行全网营销?

【OpenCV】 书本视图矫正 + 广告屏幕切换 透视变换图像处理

Multithreading in Redis 6

MySQL数据表操作实战

GameFi industry down but not out | June Report

软件测试面试(四)

客户:我们系统太多,能不能实现多账号互通?

力扣刷题 每日两题(一)

保健用品行业B2B电子商务系统:供采交易全链路数字化,助推企业管理精细化

OpenCV 透视变换

BOM系列之sessionStorage

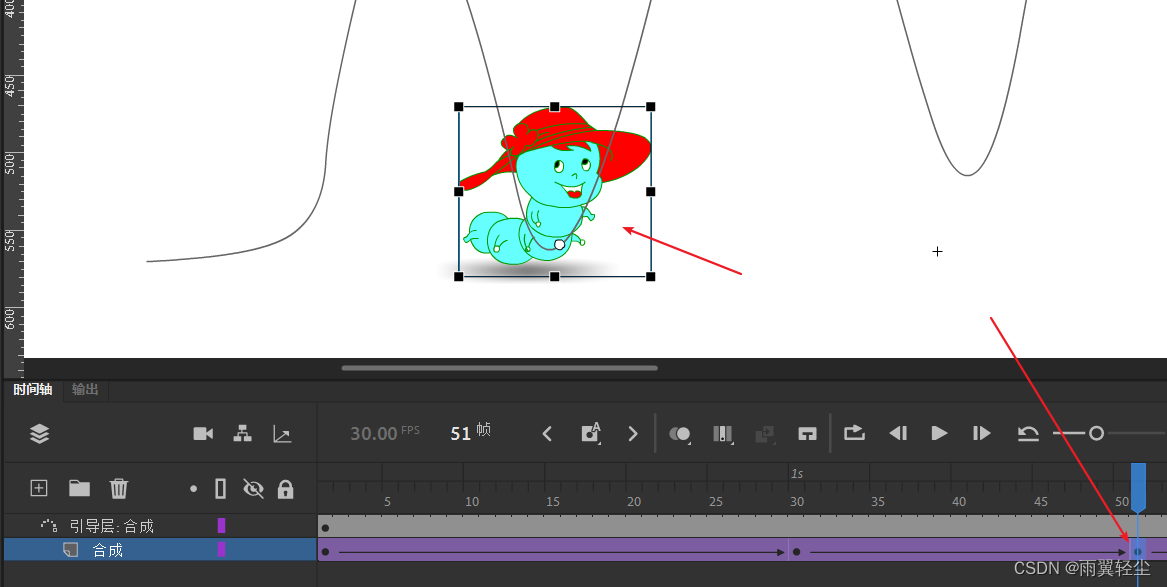

The components of the basis of An animation movie clip animation between traditional filling

TensorFlow离线安装包

Notepad++ install jsonview plugin