
Troubleshooting a slow query behind an online interface

2022-07-01 06:41:00 【Cold tea ice】


Problem description

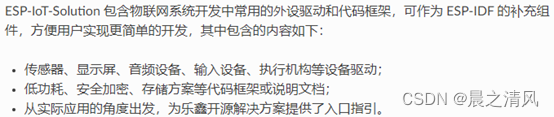

There is an interface for text classification prediction. Its main business logic is simple: the caller submits a piece of text, and the interface internally calls a model to predict the text's category. The model data is held directly in memory, so the prediction itself is very fast. After the prediction completes, a row is written to the prediction record table. Other applications later correct these records and judge whether each prediction was right, which feeds the subsequent self-learning.

When the interface first went live it was well received: both intelligent dispatch and case-routing (circulation) prediction worked very well, and with the self-learning function added, the application was expected to perform even better. But two days ago the customer suddenly reported that the system had become very slow: intelligent dispatch and routing took three or four seconds, sometimes five or six, to return results.

At first I assumed there were too many classification models on site and the model tree was fairly deep, so multi-level prediction would inevitably take longer. However, verification showed that the deepest model tree had only four levels, and calling a single model directly returned predictions within milliseconds, yet calling the interface was slow. Since the cause was neither the algorithm model nor machine resources, it had to be somewhere in the code logic, and it was becoming more and more obvious as the data volume surged.
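One way to confirm where the time goes, rather than guessing, is to time each stage of a request separately. The helper below is a hypothetical sketch (StageTimer is not part of the original project); wrapping the model prediction and the record write in separate stages would show which of the two grows with the data volume.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical helper: accumulates wall-clock time per named stage of one request.
// Not thread-safe; intended as one instance per request.
class StageTimer {

    private final Map<String, Long> nanosByStage = new LinkedHashMap<>();

    // Runs the work, adds its elapsed time to the stage, and returns the work's result.
    <T> T time(String stage, Supplier<T> work) {
        long start = System.nanoTime();
        try {
            return work.get();
        } finally {
            nanosByStage.merge(stage, System.nanoTime() - start, Long::sum);
        }
    }

    // Total milliseconds spent in a stage (0 if the stage never ran).
    long millis(String stage) {
        return nanosByStage.getOrDefault(stage, 0L) / 1_000_000;
    }

    // Stage name -> total milliseconds, in the order the stages first ran.
    Map<String, Long> reportMillis() {
        Map<String, Long> out = new LinkedHashMap<>();
        nanosByStage.forEach((stage, nanos) -> out.put(stage, nanos / 1_000_000));
        return out;
    }
}
```

In the interface, the prediction call and the record write would each be wrapped, e.g. `timer.time("predict", () -> model.predict(text))`, where `model.predict` stands in for whatever the real prediction call is, and the report logged at the end of the request.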

After a simple investigation, the cause turned out to be very basic. The prediction record table had grown to nearly 1 million rows, yet it had no index apart from the auto-increment primary key. Because the business logic requires it, every call to the prediction interface first queries this table and then updates or inserts a record, and it does so synchronously. Without an index on the queried column, each of those queries has to scan the whole table, so the interface response time (RT) was bound to grow as the data volume increased.
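To make the effect of the missing index concrete, here is an in-memory analogy. It is not a model of MySQL internals (InnoDB indexes are B+ trees, not hash maps), and Row and the `flag-N` values are made up; the point is only that a lookup by flag_value without an index examines every row, while an indexed lookup does not depend on table size.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// In-memory analogy for the missing index: find prediction records by flag_value
// with a full scan versus through a hash "index". Row is a hypothetical, simplified row.
class ScanVsIndexDemo {

    record Row(long id, String flagValue) {}

    record ScanResult(List<Row> matches, int rowsExamined) {}

    // No index: every row must be examined, because a query without LIMIT
    // has to find all matching rows, not just the first one.
    static ScanResult fullScan(List<Row> table, String flagValue) {
        List<Row> matches = new ArrayList<>();
        int examined = 0;
        for (Row row : table) {
            examined++;
            if (row.flagValue().equals(flagValue)) {
                matches.add(row);
            }
        }
        return new ScanResult(matches, examined);
    }

    // With an index on flag_value: one lookup, roughly independent of table size.
    static Row lookupByIndex(Map<String, Row> index, String flagValue) {
        return index.get(flagValue);
    }

    // Rows get ids 1..size and flag values flag-0..flag-(size-1).
    static List<Row> buildTable(int size) {
        List<Row> table = new ArrayList<>(size);
        for (int i = 0; i < size; i++) {
            table.add(new Row(i + 1, "flag-" + i));
        }
        return table;
    }

    static Map<String, Row> buildIndex(List<Row> table) {
        Map<String, Row> index = new HashMap<>();
        for (Row row : table) {
            index.put(row.flagValue(), row);
        }
        return index;
    }
}
```

With a table near 1 million rows, every call to the interface pays for a full pass over those rows, and the cost keeps rising as records accumulate.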

Solution

The problem itself is a small one. The main reason is that the interface design was not thought through at the start: the code was nicely written but never tested under realistic data volumes, handsome on the outside but hollow inside, like an embroidered pillow. For this kind of problem the most direct fix is decoupling: make the prediction and the writing of the prediction record asynchronous. Of course, if the business scenario strictly requires a synchronous write, you can instead speed up processing through optimizations at the code and database level.

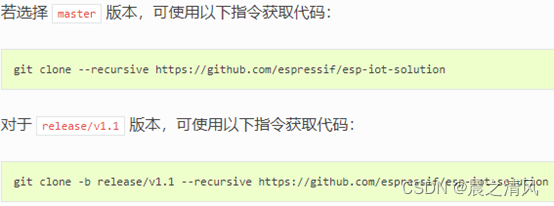

1 Message middleware

(1) Introduce Kafka or RocketMQ for decoupling. This is the safe option: messages are persisted, so data is not lost, and the core functions are decoupled from the non-core ones. It is the mainstream solution and also supports horizontal scaling and distributed deployment.

(2) If you don't want to introduce an MQ, you can use Redis directly, since Redis can also implement a message queue (see reference 【1】).

2 Code and database optimization

If the write must stay synchronous, you need to optimize at the code and database level. The main ideas are:

(1) Add an index on the queried field(s), so that lookups no longer scan the whole table and the database does far less I/O.

(2) Use partitioned tables, or split the data into daily (or monthly) tables, to reduce the amount of data in any single table.

(3) Optimize the code: merge the query followed by an update or insert into a single SQL operation.
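For point (2), one common way to split data by time in application code is to route each write to a table whose name carries the month or day. The naming scheme below (`nlp_sub_predict_results_yyyyMM`) is a hypothetical convention, not something from the original project. The trade-off: this article's code looks records up by flag_value, so with per-period tables the lookup has to know, or search, which period a record belongs to. MySQL's native partitioning (`PARTITION BY RANGE`) keeps that out of application code, but every unique key on a partitioned table must include the partitioning columns.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Hypothetical helper: derives a per-month or per-day table name from the record date.
// The "<base>_yyyyMM" / "<base>_yyyyMMdd" convention is an assumption for illustration.
class ShardTableRouter {

    private static final DateTimeFormatter MONTH = DateTimeFormatter.ofPattern("yyyyMM");
    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyyMMdd");

    // e.g. nlp_sub_predict_results + 2022-07-01 -> nlp_sub_predict_results_202207
    static String monthlyTable(String baseTable, LocalDate date) {
        return baseTable + "_" + date.format(MONTH);
    }

    // e.g. nlp_sub_predict_results + 2022-05-30 -> nlp_sub_predict_results_20220530
    static String dailyTable(String baseTable, LocalDate date) {
        return baseTable + "_" + date.format(DAY);
    }

    // Current and previous month, newest first: candidate tables for a lookup of a
    // record that may have been written just before a month boundary.
    static String[] recentMonthlyTables(String baseTable, LocalDate date) {
        return new String[] {
            monthlyTable(baseTable, date),
            monthlyTable(baseTable, date.minusMonths(1))
        };
    }
}
```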

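For point (3), insertOrUpdate is implemented with MySQL's ON DUPLICATE KEY UPDATE. This clause only takes effect when the new row conflicts with a PRIMARY KEY or UNIQUE index, so the column that identifies a record (here flag_value) needs a unique index; with only the auto-increment primary key, every call would simply insert a new row.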

With ON DUPLICATE KEY UPDATE, the affected-rows value per row is 1 if the row is inserted as a new row, 2 if an existing row is updated, and 0 if an existing row is set to its current values. (If the client sets the CLIENT_FOUND_ROWS flag when connecting, an unchanged row reports 1 instead of 0.)

See the official MySQL documentation for INSERT ... ON DUPLICATE KEY UPDATE.
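A sketch of point (3): building a single INSERT ... ON DUPLICATE KEY UPDATE statement for the prediction record and classifying the affected-rows value described above. The UpsertSql class is hypothetical, and it assumes flag_value carries a UNIQUE index. `VALUES(col)` works in MySQL 5.7 and 8.0, but it is deprecated as of 8.0.20 in favor of row aliases.

```java
import java.util.List;
import java.util.StringJoiner;

// Hypothetical helper: one upsert statement instead of select-then-update/insert.
class UpsertSql {

    enum Outcome { INSERTED, UPDATED, UNCHANGED }

    // INSERT INTO t (c1, c2, ...) VALUES (?, ?, ...) ON DUPLICATE KEY UPDATE u1 = VALUES(u1), ...
    static String build(String table, List<String> insertColumns, List<String> updateColumns) {
        StringJoiner cols = new StringJoiner(", ");
        StringJoiner marks = new StringJoiner(", ");
        for (String c : insertColumns) {
            cols.add(c);
            marks.add("?");
        }
        StringJoiner updates = new StringJoiner(", ");
        for (String c : updateColumns) {
            updates.add(c + " = VALUES(" + c + ")");
        }
        return "INSERT INTO " + table + " (" + cols + ") VALUES (" + marks + ")"
                + " ON DUPLICATE KEY UPDATE " + updates;
    }

    // Classifies the per-row affected-rows value (server default, no CLIENT_FOUND_ROWS).
    static Outcome interpret(int affectedRows) {
        switch (affectedRows) {
            case 1: return Outcome.INSERTED;
            case 2: return Outcome.UPDATED;
            case 0: return Outcome.UNCHANGED;
            default: throw new IllegalArgumentException("unexpected affected rows: " + affectedRows);
        }
    }
}
```

For this table, the insert columns would be everything the original code sets on insert, and the update columns only predict_result, start_time, end_time, content and updated_at, mirroring its update branch. The statement would be run through a JDBC PreparedStatement, whose executeUpdate() returns the affected-rows value. Note that MySQL Connector/J sets CLIENT_FOUND_ROWS by default (useAffectedRows=false), so an unchanged row reports 1; check the driver documentation before relying on the 0 case.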

As for the asynchronous decoupling approach: if the application did not already use MQ or Redis, installing that middleware just to solve this problem adds system complexity and extra operations and maintenance work. Instead, we can use Java's own multithreading to achieve asynchrony. The basic idea is to start a new thread wherever an I/O operation is performed and let that thread do the I/O.

But under heavy request volume, this means threads are constantly created and released. A thread pool avoids that churn, but requests may still block once the pool's resources are exhausted, so it does not fundamentally remove the synchronous bottleneck.
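A sketch of the thread-pool variant and its limit: a fixed pool with a bounded work queue stops the create-and-destroy churn, but once the queue is full something has to give. With `ThreadPoolExecutor.CallerRunsPolicy` the request thread performs the write itself, which is exactly the blocking described above; `DiscardPolicy` would silently drop the record, and the default `AbortPolicy` throws `RejectedExecutionException`. RecordWriterPool and its sizes (2 threads, 100 pending writes) are illustrative assumptions.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch: a small fixed pool with a bounded backlog for record writes.
class RecordWriterPool {

    private final ThreadPoolExecutor executor = new ThreadPoolExecutor(
            2, 2,                                        // fixed number of writer threads
            0L, TimeUnit.MILLISECONDS,                   // keep-alive (unused for core threads)
            new ArrayBlockingQueue<>(100),               // at most 100 pending writes
            new ThreadPoolExecutor.CallerRunsPolicy());  // when full, the caller runs the write itself

    private final AtomicInteger written = new AtomicInteger();

    // Called from the request thread.
    void submitWrite(String predictionRecord) {
        executor.execute(() -> {
            // Placeholder for the real insert/update of predictionRecord.
            written.incrementAndGet();
        });
    }

    // Stops accepting work, waits for pending writes, and returns how many ran.
    int shutdownAndCount() {
        executor.shutdown();
        try {
            executor.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return written.get();
    }
}
```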

Another option is to simulate a message queue with Java threads: in the business code that needs the I/O operation, wrap the business data in a BO (business object) and put it into a queue, and let an independent thread consume and process that queue.
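The "simulated message queue" can be sketched with a bounded LinkedBlockingQueue and one dedicated consumer thread. PredictRecord, the POISON stop marker and the list standing in for the database table are hypothetical; a real consumer would perform the insert or update instead of adding to a list.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical sketch: request threads publish, one consumer thread writes.
class InProcessRecordQueue {

    record PredictRecord(String flagValue, String predictResult) {}

    // Compared by identity, so a real record with the same fields is never mistaken for it.
    private static final PredictRecord POISON = new PredictRecord("__stop__", "");

    private final BlockingQueue<PredictRecord> queue = new LinkedBlockingQueue<>(10_000);
    private final List<PredictRecord> stored = new ArrayList<>(); // stands in for the table; consumer-only
    private final Thread consumer = new Thread(this::consumeLoop, "predict-record-consumer");

    void start() {
        consumer.start();
    }

    // Called from the request thread: returns false instead of blocking when the queue is full.
    boolean publish(PredictRecord r) {
        return queue.offer(r);
    }

    private void consumeLoop() {
        try {
            while (true) {
                PredictRecord r = queue.take(); // blocks until a record is available
                if (r == POISON) {
                    return;
                }
                stored.add(r); // real code would insert/update the prediction record here
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Processes everything already queued, stops the consumer, then returns what was stored.
    List<PredictRecord> stopAndGetStored() {
        try {
            queue.put(POISON);
            consumer.join(); // join() makes the consumer's writes to 'stored' visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return stored;
    }
}
```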

I mainly used ConcurrentLinkedQueue to solve this problem. The basic idea: the business code wraps the business data in a BO and puts it into the queue, and a scheduled task runs periodically (every 10 seconds, per the cron expression in the code below), drains the data in the ConcurrentLinkedQueue, and writes it to the database.
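Without Spring, the same pattern (request threads enqueue, a periodic task drains) can be sketched with a ScheduledExecutorService. This version also hands records to the writer in batches, which would let the write side use one multi-row statement per batch instead of one statement per record; the batch size and the batchWriter callback are assumptions, not part of the original code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Plain-JDK sketch: request threads enqueue, one scheduled thread drains in batches.
class ScheduledDrainer<T> {

    private final ConcurrentLinkedQueue<T> queue = new ConcurrentLinkedQueue<>();
    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
    private final int maxBatch;
    private final Consumer<List<T>> batchWriter; // e.g. one multi-row INSERT per batch (assumption)

    ScheduledDrainer(int maxBatch, Consumer<List<T>> batchWriter) {
        this.maxBatch = maxBatch;
        this.batchWriter = batchWriter;
    }

    // Fixed delay: the next drain starts only after the previous one has finished.
    void start(long periodSeconds) {
        scheduler.scheduleWithFixedDelay(this::drainAll, periodSeconds, periodSeconds, TimeUnit.SECONDS);
    }

    // Non-blocking; safe to call from many request threads.
    void enqueue(T item) {
        queue.add(item);
    }

    // Drains everything currently queued, passing it on in chunks of at most maxBatch.
    void drainAll() {
        List<T> batch = new ArrayList<>(maxBatch);
        T item;
        while ((item = queue.poll()) != null) {
            batch.add(item);
            if (batch.size() == maxBatch) {
                writeSafely(batch);
                batch = new ArrayList<>(maxBatch);
            }
        }
        if (!batch.isEmpty()) {
            writeSafely(batch);
        }
    }

    // An exception escaping a scheduled task suppresses all later runs, so catch it here.
    private void writeSafely(List<T> batch) {
        try {
            batchWriter.accept(batch);
        } catch (RuntimeException e) {
            System.err.println("batch write failed, " + batch.size() + " records dropped: " + e);
        }
    }

    // Stops the schedule, waits for a running drain, then flushes whatever is left.
    void stop() {
        scheduler.shutdown();
        try {
            scheduler.awaitTermination(30, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        drainAll();
    }
}
```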

3 ConcurrentLinkedQueue solution

Scheduled task

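```java
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

@Component
public class TaskJob {

    private static final Logger logger = LoggerFactory.getLogger(TaskJob.class);

    // Fires every 10 seconds (Spring cron: second minute hour day-of-month month day-of-week)
    // and writes the queued prediction results to the database asynchronously.
    // @Scheduled only takes effect when scheduling is enabled, e.g. with @EnableScheduling.
    @Scheduled(cron = "*/10 * * * * ?")
    public void execuPredictResult() throws Exception {
        SyncSavePredictResultService syncSavePredictResultService = new SyncSavePredictResultService();
        syncSavePredictResultService.consumeData();
    }
}
```

Asynchronous processing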

```java
import java.util.Date;
import java.util.concurrent.ConcurrentLinkedQueue;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class SyncSavePredictResultService {

    private static final Logger logger = LoggerFactory.getLogger(SyncSavePredictResultService.class);

    // In-memory message queue for prediction records; the database write happens asynchronously
    public static final ConcurrentLinkedQueue<PredictResultBo> RESULT_BO = new ConcurrentLinkedQueue<>();

    public void consumeData() {
        PredictResultBo resultBo = RESULT_BO.poll();
        while (resultBo != null) {
            try {
                // Business logic: update the existing record or insert a new one
                excuSubPdResult(resultBo.getContent(), resultBo.getBegin(), resultBo.getEnd(),
                        resultBo.getmId(), resultBo.getFlagValue(), resultBo.getPredictResult(),
                        resultBo.getRootPid(), resultBo.getD());
                logger.info("Wrote prediction record asynchronously, object: {}", resultBo);
            } catch (RuntimeException e) {
                // Log and keep draining, so one failing record does not stop the rest
                logger.error("Failed to write prediction record: {}", resultBo, e);
            }
            // Take the next element
            resultBo = RESULT_BO.poll();
        }
    }

    private void excuSubPdResult(String pstr, long begin, long end, Integer mId, String flagValue,
                                 String result, Integer rootPid, Date d) {
        // Query-then-write: this lookup needs an index on flag_value to stay fast
        NlpSubPredictResults nResult = NlpSubPredictResults.GetInstance()
                .findFirst("select * from nlp_sub_predict_results where flag_value=?", flagValue);
        if (nResult != null) {
            nResult.set("predict_result", result)
                    .set("start_time", begin)
                    .set("end_time", end)
                    .set("content", pstr)
                    .set("updated_at", d)
                    .update();
        } else {
            nResult = NlpSubPredictResults.GetInstance();
            nResult.set("flag_value", flagValue)
                    .set("root_p_id", rootPid)
                    .set("m_id", mId)
                    .set("predict_result", result)
                    .set("start_time", begin)
                    .set("end_time", end)
                    .set("content", pstr)
                    .set("created_at", d)
                    .set("updated_at", d)
                    .save();
        }
    }
}
```

Business code (producer side)

```java
// Imports assumed for this method: java.util.Date, java.util.HashMap, java.util.Map,
// and com.alibaba.fastjson.JSON (fastjson).
private void excuSubPdResult(String pstr, long begin, long end, Integer mId, String flagValue,
                             String typeId, String typeName, Integer rootPid) {
    Map<String, String> rMap = new HashMap<>();
    rMap.put("name", typeName);
    rMap.put("id", typeId);
    String result = JSON.toJSONString(rMap);
    Date d = new Date();
    // Enqueue instead of writing: the scheduled task drains RESULT_BO and does the database write
    PredictResultBo predictResultBo = new PredictResultBo(mId, rootPid, flagValue, result, begin, end, pstr, d);
    SyncSavePredictResultService.RESULT_BO.add(predictResultBo);

    /*
    // 2022-05-30: changed to asynchronous; the previous synchronous write is kept for reference
    NlpSubPredictResults nResult = NlpSubPredictResults.GetInstance().findFirst(
            "select * from nlp_sub_predict_results where m_id=? and flag_value=? and root_p_id=?",
            mId, flagValue, rootPid);
    if (nResult != null) {
        nResult.set("predict_result", result)
                .set("start_time", begin)
                .set("end_time", end)
                .set("content", pstr)
                .set("updated_at", d)
                .update();
    } else {
        nResult = NlpSubPredictResults.GetInstance();
        nResult.set("flag_value", flagValue)
                .set("root_p_id", rootPid)
                .set("m_id", mId)
                .set("predict_result", result)
                .set("start_time", begin)
                .set("end_time", end)
                .set("content", pstr)
                .set("created_at", d)
                .set("updated_at", d)
                .save();
    }
    */
}
```

Other considerations

The approach above does have hidden risks and drawbacks:

(1) If the interface is called heavily, a backlog of records inevitably builds up in memory; if the node goes down at that point, that data is lost.

(2) If a large backlog accumulates, it may exhaust memory, which disrupts the normal operation of the application and can also cause data loss.

(3) It cannot support multiple consumers, because the queue lives inside a single JVM.

(4) The consume-and-store logic has nothing to do with the core prediction function, yet it runs in the same process, so when there is a lot of data to consume it inevitably affects prediction. From a software-architecture standpoint this is unreasonable.
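Risk (2) can at least be contained by capping the backlog. A minimal sketch, assuming the unbounded ConcurrentLinkedQueue is swapped for a bounded ArrayBlockingQueue: `offer()` rejects new records when the buffer is full instead of growing the heap, and rejections are counted so the loss shows up in monitoring. This trades memory exhaustion for explicit, bounded data loss; it does nothing for risks (1) and (3). The class name and capacity are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical sketch: a capped in-memory buffer for prediction records.
class BoundedRecordBuffer<T> {

    private final BlockingQueue<T> queue;
    private final AtomicLong dropped = new AtomicLong();

    BoundedRecordBuffer(int capacity) {
        this.queue = new ArrayBlockingQueue<>(capacity);
    }

    // Never blocks the request thread: returns false and counts a drop when full.
    boolean offer(T item) {
        boolean accepted = queue.offer(item);
        if (!accepted) {
            dropped.incrementAndGet();
        }
        return accepted;
    }

    // Removes up to maxItems records in FIFO order for the consumer to write.
    List<T> drain(int maxItems) {
        List<T> out = new ArrayList<>();
        queue.drainTo(out, maxItems);
        return out;
    }

    int size() {
        return queue.size();
    }

    long droppedCount() {
        return dropped.get();
    }
}
```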

All of these problems are solved well by MQ or Redis.

However, nothing is absolute. Often we need to adapt to local conditions and choose a solution based on actual business requirements, data requirements, project urgency, cost budget, and so on.

For example: suppose single-node multithreading with ConcurrentLinkedQueue solves 99% of the problem at a cost of 1 and a development cycle of 1 day, while MQ solves 99.9% at a cost of 10 and a development cycle of 7 days. If the customer tolerates a 5% failure rate, there is clearly no need for the MQ solution; it would add nothing.

What I really want to say is that nothing is absolute. We should approach problems with an open mind, and there is no need to invest 99% extra for a 0.1% advantage.

References

【1】Implementing a message queue with Redis: Java code implementation
