Code implementation of sorting and serializing cases in MapReduce

2022-06-10 16:00:00 【QYHuiiQ】

The case implemented here sorts student records by name first (lexicographic order); when names are duplicated, the records are then sorted by age (ascending).

- Upload the original data file to HDFS

[[email protected] test_data]# hdfs dfs -mkdir /test_comparation_input

[[email protected] test_data]# hdfs dfs -put test_comparation.txt /test_comparation_input

Here the 5 rows of the original data are sorted lexicographically by name (by ASCII value). For the duplicate name Bob, which appears on rows 2 and 4, the two Bob rows are then sorted by age as a secondary key.
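The ordering described above can be sketched in plain Java, without Hadoop. This is only an illustration: the five records are hypothetical sample data (the original input file is not reproduced here), `SortOrderSketch` and `sortLines` are names invented for this sketch, and each line is assumed to have the form `name,age`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Illustrative only: mimics the name-then-age ordering outside of MapReduce.
class SortOrderSketch {

    // Sorts "name,age" lines by name (String.compareTo order), then by age ascending.
    static List<String> sortLines(List<String> lines) {
        List<String[]> rows = new ArrayList<>();
        for (String line : lines) {
            rows.add(line.split(","));
        }
        rows.sort(Comparator
                .comparing((String[] r) -> r[0])
                .thenComparingInt(r -> Integer.parseInt(r[1].trim())));
        List<String> result = new ArrayList<>();
        for (String[] r : rows) {
            result.add(r[0] + "\t" + r[1].trim());
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical input: five rows, with Bob appearing on rows 2 and 4.
        List<String> input = Arrays.asList("Tom,21", "Bob,25", "Alice,19", "Bob,18", "Jack,20");
        for (String line : sortLines(input)) {
            System.out.println(line);
        }
    }
}
```

With this hypothetical input, Alice comes first, the two Bob rows follow with age 18 before age 25, and Jack and Tom come last. Note that `String.compareTo` compares character codes, so uppercase letters sort before lowercase ones.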

Create a new project:

- Add the pom dependencies

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>wyh.test</groupId>

<artifactId>test_comparation</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

</properties>

<packaging>jar</packaging>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.5</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.7.5</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.7.5</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>2.7.5</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>RELEASE</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.1</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

<encoding>UTF-8</encoding>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.4.3</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<minimizeJar>true</minimizeJar>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

- Define a custom Bean class that implements sorting and serialization

package wyh.test.comparation;

import org.apache.hadoop.io.WritableComparable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

public class ComparationBean implements WritableComparable<ComparationBean> {

// Properties held by the Bean object

private String studentName;

private int age;

public String getStudentName() {

return studentName;

}

public void setStudentName(String studentName) {

this.studentName = studentName;

}

@Override

public String toString() {

// Override the default toString() format with our own: name, a tab, then age

return studentName + "\t" + age;

}

public int getAge() {

return age;

}

public void setAge(int age) {

this.age = age;

}

// Define the sort order. We only define the comparison rule; the framework decides when to call it

@Override

public int compareTo(ComparationBean comparationBean) {

// First compare studentName using String.compareTo()

int compareResult = this.studentName.compareTo(comparationBean.getStudentName());

// A result of 0 means the names are identical, so fall back to comparing age

if(compareResult == 0){

// Integer.compare avoids the overflow that subtraction can cause for extreme values

return Integer.compare(this.age, comparationBean.getAge());

}

return compareResult;

}

// This method implements serialization: it converts the object's fields into a byte stream

@Override

public void write(DataOutput dataOutput) throws IOException {

// Serialize the first property of the Bean; strings are written with writeUTF()

dataOutput.writeUTF(studentName);

// Serialize the second property of the Bean; int values are written with writeInt()

dataOutput.writeInt(age);

}

// This method is used to implement deserialization

@Override

public void readFields(DataInput dataInput) throws IOException {

// Assign the deserialized values to the member variables, reading them in the same order they were written

this.studentName = dataInput.readUTF();

this.age = dataInput.readInt();

}

}
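The write/read pairing in the bean above can be checked with a small round trip. The sketch below is a minimal illustration that runs with the JDK alone: `StudentRecordSketch` and `roundTrip` are hypothetical names, and the class is a stand-in that copies the `write`/`readFields` logic without implementing Hadoop's `WritableComparable`.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Stand-in for ComparationBean: same field order in write/readFields, no Hadoop interface.
class StudentRecordSketch {
    String studentName;
    int age;

    void write(DataOutput out) throws IOException {
        out.writeUTF(studentName);
        out.writeInt(age);
    }

    void readFields(DataInput in) throws IOException {
        // Fields must be read in exactly the order they were written.
        studentName = in.readUTF();
        age = in.readInt();
    }

    // Serializes a record to bytes and reads it back into a fresh object.
    static StudentRecordSketch roundTrip(String name, int age) {
        StudentRecordSketch original = new StudentRecordSketch();
        original.studentName = name;
        original.age = age;
        try {
            ByteArrayOutputStream buffer = new ByteArrayOutputStream();
            try (DataOutputStream out = new DataOutputStream(buffer)) {
                original.write(out);
            }
            StudentRecordSketch copy = new StudentRecordSketch();
            try (DataInputStream in = new DataInputStream(
                    new ByteArrayInputStream(buffer.toByteArray()))) {
                copy.readFields(in);
            }
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        StudentRecordSketch copy = roundTrip("Bob", 18);
        System.out.println(copy.studentName + "\t" + copy.age);
    }
}
```

If the read order were swapped (for example `readInt()` before `readUTF()`), the bytes would be misinterpreted, which is why the two methods have to mirror each other.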

- Define a custom Mapper class

package wyh.test.comparation;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

// K2 is our custom Bean type (because it must support sorting and serialization); the Bean's properties are taken from V1.

public class ComparationMapper extends Mapper<LongWritable, Text, ComparationBean, NullWritable> {

@Override

protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, ComparationBean, NullWritable>.Context context) throws IOException, InterruptedException {

// Split the V1 line on commas into the two properties and assign them to the custom Bean object

String[] split = value.toString().split(",");

ComparationBean comparationBean = new ComparationBean();

comparationBean.setStudentName(split[0]);

comparationBean.setAge(Integer.parseInt(split[1]));

// Write K2 and V2 to the context object

context.write(comparationBean, NullWritable.get());

}

}

- Define a custom Reducer class

package wyh.test.comparation;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

// In this case reduce() does no further processing of K2 and V2, so they are passed through unchanged as K3 and V3

public class ComparationReducer extends Reducer<ComparationBean, NullWritable, ComparationBean, NullWritable> {

@Override

protected void reduce(ComparationBean key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {

context.write(key, NullWritable.get());

}

}
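One property of the reducer above is worth illustrating: `reduce()` is called once per group of keys that compare as equal, and it writes the key once, so two input rows with the same name and the same age would appear only once in the output. The sketch below simulates this with plain collections rather than Hadoop. `GroupingSketch`, its `reduce` method, and the sample keys are all hypothetical, and string keys stand in for the bean.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Illustrative simulation of reduce-side grouping, using plain collections instead of Hadoop.
class GroupingSketch {

    // Groups equal keys (sorted, one group per distinct key), then emits each key
    // either once per group or once per value in the group.
    static List<String> reduce(List<String> mapOutputKeys, boolean oncePerValue) {
        Map<String, Integer> groups = new TreeMap<>();
        for (String key : mapOutputKeys) {
            groups.merge(key, 1, Integer::sum);
        }
        List<String> output = new ArrayList<>();
        for (Map.Entry<String, Integer> group : groups.entrySet()) {
            int copies = oncePerValue ? group.getValue() : 1;
            for (int i = 0; i < copies; i++) {
                output.add(group.getKey());
            }
        }
        return output;
    }

    public static void main(String[] args) {
        // Hypothetical map output containing one exact duplicate record.
        List<String> keys = Arrays.asList("Bob\t18", "Alice\t19", "Bob\t18");
        System.out.println(reduce(keys, false).size()); // one write per group
        System.out.println(reduce(keys, true).size());  // one write per value
    }
}
```

If exact duplicates need to be preserved in a real job, the corresponding change in the Reducer would be to iterate over `values` and call `context.write(key, NullWritable.get())` once per element.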

- Define a custom JobMain class

package wyh.test.comparation;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.OutputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

public class ComparationJobMain extends Configured implements Tool {

@Override

public int run(String[] strings) throws Exception {

Job job = Job.getInstance(super.getConf(), "test_comparation_job");

job.setJarByClass(ComparationJobMain.class);

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job, new Path("hdfs://192.168.126.132:8020/test_comparation_input"));

job.setMapperClass(ComparationMapper.class);

job.setMapOutputKeyClass(ComparationBean.class);

job.setMapOutputValueClass(NullWritable.class);

job.setReducerClass(ComparationReducer.class);

job.setOutputKeyClass(ComparationBean.class);

job.setOutputValueClass(NullWritable.class);

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path("hdfs://192.168.126.132:8020/test_comparation_output"));

boolean status = job.waitForCompletion(true);

return status?0:1;

}

public static void main(String[] args) throws Exception {

Configuration configuration = new Configuration();

// Launch the job

int runStatus = ToolRunner.run(configuration, new ComparationJobMain(), args);

System.exit(runStatus);

}

}

- Package the project

Run the Maven clean goal, then package.

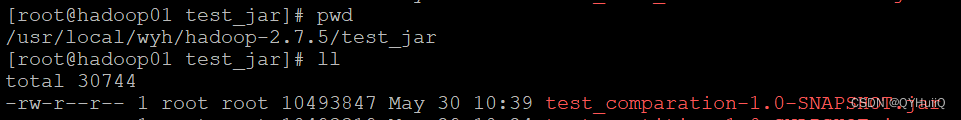

- Upload the jar to the server

- Run the jar

[[email protected] test_jar]# hadoop jar test_comparation-1.0-SNAPSHOT.jar wyh.test.comparation.ComparationJobMain

- View the HDFS directory tree

- View the output result file

[[email protected] test_jar]# hdfs dfs -cat /test_comparation_output/part-r-00000

You can see that the records are sorted by name first, and that the two records sharing the name Bob are then sorted by age in ascending order:

This is how sorting and serialization can be implemented in MapReduce with a custom WritableComparable key.
