Hadoop = HDFS (distributed file system) + MapReduce (distributed computing framework) + Yarn (resource coordination framework) + Common (shared utility modules)

HDFS

HDFS (Hadoop Distributed File System) is a highly reliable, high-throughput distributed file system.

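Its core idea is "divide and conquer": a large file is cut into blocks, and the blocks are spread across many machines.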
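The "divide" step can be sketched as cutting a file into fixed-size blocks. This is a minimal sketch with two assumptions. First, it uses the 128 MiB default block size of Hadoop 2.x and later, which is configurable through `dfs.blocksize`. Second, `split_into_blocks` is a hypothetical helper for illustration, not part of any Hadoop API.

```python
# Hypothetical helper: cut a file into fixed-size blocks, the way HDFS divides large files.
# 128 MiB is the default dfs.blocksize in Hadoop 2.x/3.x (assumed here).
BLOCK_SIZE = 128 * 1024 * 1024


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[tuple[int, int]]:
    """Return (offset, length) pairs; only the last block may be shorter than block_size."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


if __name__ == "__main__":
    mib = 1024 * 1024
    # A 300 MiB file becomes two full 128 MiB blocks plus one 44 MiB block.
    for offset, length in split_into_blocks(300 * mib):
        print(offset // mib, length // mib)
```

The short last block only occupies its actual size on a DataNode's disk. It does not take up a full block.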

- NameNode (nn): stores file metadata. This includes file names, the directory structure, file attributes (creation time, replication factor, permissions), and each file's list of blocks together with the DataNodes that hold them.
- SecondaryNameNode (2nn): a background helper daemon that assists the NameNode and monitors HDFS state. It periodically takes a snapshot of the HDFS metadata.
- DataNode (dn): stores file block data on its local file system and verifies block data with checksums.
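The division of labor between NameNode and DataNode can be modeled in a few lines. In this minimal sketch, the NameNode keeps only metadata: per-file attributes and a block-to-DataNode map. The DataNode keeps the bytes plus a checksum it can re-verify.

Everything here is a hypothetical toy (`ToyNameNode`, `ToyDataNode`, `FileMeta`), not the real HDFS API. It simplifies HDFS in three assumed ways:

- Replicas are placed round-robin, whereas HDFS uses a rack-aware policy.
- One CRC32 is computed per block, whereas HDFS checksums small chunks of each block.
- Python's `zlib.crc32` stands in for HDFS's checksum type.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class FileMeta:
    """Simplified per-file metadata a NameNode keeps."""
    path: str
    replication: int
    permission: str
    block_ids: list[int] = field(default_factory=list)


class ToyNameNode:
    """Hypothetical in-memory model of NameNode metadata, not the real HDFS API."""

    def __init__(self) -> None:
        self.files: dict[str, FileMeta] = {}
        # block id -> names of the DataNodes holding a replica of that block
        self.block_locations: dict[int, list[str]] = {}
        self._next_block_id = 0

    def add_file(self, path: str, num_blocks: int, replication: int,
                 datanodes: list[str], permission: str = "rw-r--r--") -> FileMeta:
        if replication > len(datanodes):
            raise ValueError("replication cannot exceed the number of DataNodes")
        meta = FileMeta(path, replication, permission)
        for _ in range(num_blocks):
            block_id = self._next_block_id
            self._next_block_id += 1
            # Naive round-robin placement (assumption); real HDFS is rack-aware.
            self.block_locations[block_id] = [
                datanodes[(block_id + r) % len(datanodes)] for r in range(replication)
            ]
            meta.block_ids.append(block_id)
        self.files[path] = meta
        return meta

    def locate(self, path: str) -> list[tuple[int, list[str]]]:
        """Which blocks make up this file, and which DataNodes hold each one?"""
        return [(b, self.block_locations[b]) for b in self.files[path].block_ids]


class ToyDataNode:
    """Hypothetical DataNode that stores block bytes plus one checksum per block."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.blocks: dict[int, tuple[bytes, int]] = {}  # block id -> (data, crc32)

    def store(self, block_id: int, data: bytes) -> None:
        self.blocks[block_id] = (data, zlib.crc32(data))

    def verify(self, block_id: int) -> bool:
        """Recompute the checksum and compare it with the one saved at write time."""
        data, expected = self.blocks[block_id]
        return zlib.crc32(data) == expected
```

Consider a file with 2 blocks and replication 3 on DataNodes `dn1` to `dn4`. Calling `locate` returns block 0 on `dn1, dn2, dn3` and block 1 on `dn2, dn3, dn4`. A client would use this list to read the bytes directly from the DataNodes.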

NN, 2NN, and DN are role names. They are also the names of the corresponding processes, and they are used to refer to the machines (nodes) that run those processes.

MapReduce

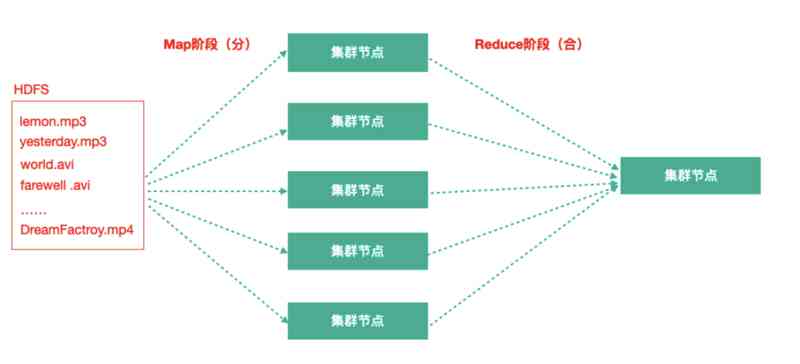

A MapReduce computation = Map stage + Reduce stage

The Map stage is the "divide" stage: it processes the input data in parallel.

The Reduce stage is the "combine" stage: it aggregates the results of the Map stage.
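The two stages can be illustrated with the classic word-count example. This is a single-process sketch, not the Hadoop MapReduce API.

- `map_phase` emits `(word, 1)` pairs for each line independently.
- `shuffle` groups values by key. The framework performs this step between the stages.
- `reduce_phase` sums each group.

A thread pool stands in for the parallel map tasks. That substitution is an assumption for illustration: in Hadoop, map tasks run in separate containers across machines. Python threads also do not give true CPU parallelism.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(line: str) -> list[tuple[str, int]]:
    """Map: turn one input record into intermediate (key, value) pairs."""
    return [(word, 1) for word in line.split()]


def shuffle(mapped: list[list[tuple[str, int]]]) -> dict[str, list[int]]:
    """Group every intermediate value by key (done by the framework between stages)."""
    groups: dict[str, list[int]] = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: summarize all values that share one key."""
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    # Each line is mapped independently, so the map calls can run concurrently.
    with ThreadPoolExecutor() as pool:
        mapped = list(pool.map(map_phase, lines))
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, vs) for k, vs in grouped.items())


if __name__ == "__main__":
    print(word_count(["hello hadoop", "hello yarn"]))
```

For the two input lines above, `word_count` returns `{'hello': 2, 'hadoop': 1, 'yarn': 1}`.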

Yarn

Yarn is a framework for job scheduling and cluster resource management.

- ResourceManager (rm): handles client requests, starts and monitors ApplicationMasters, monitors NodeManagers, and performs resource allocation and scheduling.
- NodeManager (nm): manages the resources on a single node. It executes commands from the ResourceManager and from the ApplicationMaster.
- ApplicationMaster (am): splits the input data, requests resources for its application, and assigns them to the application's internal tasks. It is also responsible for task monitoring and fault tolerance.
- Container: the abstraction of a task's runtime environment. It encapsulates multi-dimensional resources such as CPU and memory, together with environment variables, the launch command, and other information needed to run the task.
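The way these roles cooperate can be sketched as follows. NodeManagers report free memory and vcores, and the ResourceManager carves those resources into containers. An ApplicationMaster then asks for one container per task.

This is a minimal sketch under stated assumptions. `ToyResourceManager` and `request_containers` are hypothetical names, and the first-fit policy is a simplification: real Yarn delegates allocation to a pluggable scheduler such as the Capacity Scheduler or the Fair Scheduler.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Container:
    """A granted slice of one node's resources in which a task can run."""
    container_id: int
    node: str
    memory_mb: int
    vcores: int


@dataclass
class NodeManager:
    """Tracks the free resources on a single node."""
    name: str
    free_memory_mb: int
    free_vcores: int


class ToyResourceManager:
    """Hypothetical first-fit allocator (real Yarn uses a pluggable scheduler)."""

    def __init__(self, nodes: list[NodeManager]) -> None:
        self.nodes = nodes
        self._next_id = 0

    def allocate(self, memory_mb: int, vcores: int) -> Optional[Container]:
        # Give the request to the first node that still has enough memory and vcores.
        for node in self.nodes:
            if node.free_memory_mb >= memory_mb and node.free_vcores >= vcores:
                node.free_memory_mb -= memory_mb
                node.free_vcores -= vcores
                container = Container(self._next_id, node.name, memory_mb, vcores)
                self._next_id += 1
                return container
        return None  # no node has room right now

    def release(self, container: Container) -> None:
        # Return a finished container's resources to its node.
        for node in self.nodes:
            if node.name == container.node:
                node.free_memory_mb += container.memory_mb
                node.free_vcores += container.vcores
                return


def request_containers(rm: ToyResourceManager, num_tasks: int,
                       memory_mb: int = 1024, vcores: int = 1) -> list[Container]:
    """Hypothetical ApplicationMaster step: ask the RM for one container per task."""
    granted = []
    for _ in range(num_tasks):
        container = rm.allocate(memory_mb, vcores)
        if container is None:
            break  # a real AM would keep the request pending and retry later
        granted.append(container)
    return granted
```

Take two nodes: `nm1` with 4096 MB and 4 vcores, and `nm2` with 2048 MB and 2 vcores. A request for 7 tasks of 1024 MB and 1 vcore each is granted 6 containers, 4 on `nm1` and 2 on `nm2`. The seventh task has to wait until a container is released.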

In short, the ResourceManager is the boss, the NodeManagers are its subordinates, and each ApplicationMaster is the dedicated manager of one computing job.
