
Batch (batch size, full batch, mini batch, online learning), iterations and epochs in deep learning

2022-07-31 18:25:00 【Full stack programmer webmaster】

Hello everyone, we meet again. I'm your friend Quanstack Jun.

Concept introduction

We know that in gradient descent we need to process all samples before taking a single step, so if the sample size is very large, training is inefficient. With 5 million or even 50 million samples (in our business scenarios there are generally tens of millions of rows, and some big-data sets reach 1 billion rows), a single iteration becomes very time-consuming. Because gradient descent in this case uses all of the sample data, it is called full batch.
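A minimal Python sketch of full-batch gradient descent may help here. It assumes a toy linear model `y_hat = w*x + b` trained with mean squared error on hypothetical synthetic data drawn from `y = 3x + 2` plus Gaussian noise; `make_data` and `full_batch_step` are illustrative names, not library functions, and the learning rate of 0.5 is an arbitrary choice. Every update sums over all N samples, so one epoch produces exactly one update.

```python
import random


def make_data(n, seed=0):
    """Hypothetical toy data: y = 3x + 2 plus small Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    ys = [3.0 * x + 2.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys


def full_batch_step(w, b, xs, ys, lr):
    """One gradient descent step on the MSE loss, using ALL samples."""
    n = len(xs)
    # The gradient is averaged over the whole dataset before w and b move.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return w - lr * grad_w, b - lr * grad_b


xs, ys = make_data(1000)
w, b = 0.0, 0.0
updates = 0
for epoch in range(200):
    # Full batch: one pass over every sample produces exactly one update.
    w, b = full_batch_step(w, b, xs, ys, lr=0.5)
    updates += 1

print(f"w={w:.3f}, b={b:.3f}, updates={updates}")
```

Each call to `full_batch_step` loops over every sample, so its cost grows linearly with N; at tens of millions of rows every single step becomes expensive, which is the inefficiency described above.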

To improve efficiency, we can divide the samples into equal subsets. For example, we split 5 million samples into 1000 subsets of 5000 samples each; these subsets are called mini batches. We then loop over the 1000 subsets: for each subset we run one gradient descent step, update the parameters w and b, and move on to the next subset. Traversing all mini batches therefore amounts to 1000 gradient descent iterations. One complete pass over all the samples is called an epoch (a "generation"). Gradient descent under mini batch works exactly as under full batch, except that each step is trained on a subset rather than on all samples. So with mini batch we perform 1000 gradient descent steps per epoch, compared with only one under full batch. This greatly increases the speed of our algorithm (and the number of gradient descent iterations).
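The mini-batch loop described above can be sketched in Python under the same hypothetical assumptions (toy linear model, MSE loss, synthetic data, helper names chosen for illustration). The sizes are scaled down so the pure-Python code runs quickly: 5,000 samples split into 1,000 equal subsets of 5, which keeps the text's 1,000 updates per epoch. Reshuffling the samples before each epoch is a common practice that the text does not mention; treat it as an assumption.

```python
import random


def make_data(n, seed=0):
    """Hypothetical toy data: y = 3x + 2 plus small Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    ys = [3.0 * x + 2.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys


def mse_gradient(w, b, xs, ys):
    """Gradient of the mean squared error over the given samples only."""
    n = len(xs)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return grad_w, grad_b


def train_mini_batch(xs, ys, num_batches, epochs, lr, seed=0):
    n = len(xs)
    if n % num_batches != 0:
        raise ValueError("this sketch assumes equal-size subsets")
    batch_size = n // num_batches
    rng = random.Random(seed)
    order = list(range(n))
    w, b, updates = 0.0, 0.0, 0
    for _ in range(epochs):
        rng.shuffle(order)  # assumption: reshuffle before each epoch
        for k in range(num_batches):
            idx = order[k * batch_size:(k + 1) * batch_size]
            grad_w, grad_b = mse_gradient(
                w, b, [xs[i] for i in idx], [ys[i] for i in idx]
            )
            # Update w and b immediately, then move on to the next subset.
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1
    return w, b, updates


# Scaled down from the text: 5,000 samples in 1,000 subsets of 5 samples each.
xs, ys = make_data(5000)
w, b, updates = train_mini_batch(xs, ys, num_batches=1000, epochs=3, lr=0.1)
print(updates)  # 3 epochs x 1000 mini batches = 3000
```

Passing `num_batches=1` instead turns the same loop into full batch, with one update per epoch.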

- Batch: a batch is a group of samples. In deep learning, the loss used for each parameter update is not computed from a single {data: label} pair but as a weighted combination over a group of samples; the number of samples in that group is the batch size.

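- The maximum batch size is the total number of samples N, which is full batch learning. If the dataset is small, full batch learning (using the whole dataset) can be used, which has two obvious advantages: 1. the direction computed from the full dataset better represents the sample population, so it heads more accurately toward the extremum; 2. because the gradient values of different weights differ greatly, choosing a single global learning rate is difficult, and full batch learning makes it possible to use methods such as Rprop, which update each weight separately based only on the sign of its gradient.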

- The minimum batch size is 1, meaning each update uses only one sample; this is online learning (Online Learning).

- When the batch size is neither the maximum N nor the minimum 1, it is a batch in the usual sense (some frameworks, such as Keras, also call it a mini batch).

- Epoch ("generation"): when training in batches, one complete pass over all the training data (all subsets), with one forward pass and one backpropagation (BP) pass, is called an epoch.

- Iterations: one iteration is one parameter update on one batch. For example, with 1000 samples and a batch size of 50, there are 20 iterations, and these 20 iterations make up one epoch.
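The relationship between N, batch size, iterations and epochs in the list above can be written as two small Python helpers. Both functions are hypothetical; `drop_last` is an assumed option, similar to the `drop_last` flag of PyTorch's `DataLoader`, for the case where N is not a multiple of the batch size.

```python
def iterations_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of parameter updates needed to see every sample once."""
    if not 1 <= batch_size <= num_samples:
        raise ValueError("batch size must be between 1 and N")
    if drop_last:
        # Hypothetical option: discard a final, incomplete batch.
        return num_samples // batch_size
    return -(-num_samples // batch_size)  # ceiling division


def regime(num_samples, batch_size):
    """Name the training regime by batch size, following the list above."""
    if batch_size == num_samples:
        return "full batch"
    if batch_size == 1:
        return "online learning"
    return "mini batch"


N = 1000
for batch_size in (N, 1, 50):
    print(batch_size, regime(N, batch_size), iterations_per_epoch(N, batch_size))
# 1000 full batch 1
# 1 online learning 1000
# 50 mini batch 20
```

With a batch size of 300, the last batch holds only 100 samples: the default counts it (4 iterations), while `drop_last=True` skips it (3 iterations).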

Batch: pros and cons

The idea of batching has at least two benefits. First, it copes better with non-convex loss functions. With a non-convex loss, even computing the gradient over the entire sample can leave optimization stuck in a local optimum. A batch is a partial sample of the whole dataset, which amounts to deliberately adding sampling noise to the gradient; this noise makes it more likely that the search finds a better optimum along another path when one path is blocked. Second, it makes reasonable use of memory capacity.
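The "sampling noise" argument can be checked numerically. This sketch (hypothetical synthetic data, a linear model with MSE loss, and an illustrative helper `grad_w`) computes the gradient with respect to w on the full dataset and on 20 equal-size mini batches of 50. Each mini-batch gradient deviates from the full gradient, which is the noise, but with equal-size batches their average equals the full-batch gradient. It does not demonstrate escaping local optima, since this model is convex.

```python
import random
import statistics


def grad_w(w, b, pairs):
    """d(MSE)/dw for y_hat = w*x + b over the given (x, y) pairs."""
    return sum(2 * (w * x + b - y) * x for x, y in pairs) / len(pairs)


rng = random.Random(1)
data = []
for _ in range(1000):
    x = rng.random()
    data.append((x, 3.0 * x + 2.0 + rng.gauss(0.0, 0.5)))  # hypothetical toy data

w, b = 0.0, 0.0  # any fixed parameter values will do
full_grad = grad_w(w, b, data)

rng.shuffle(data)
batch_size = 50
batch_grads = [grad_w(w, b, data[i:i + batch_size])
               for i in range(0, len(data), batch_size)]

print(f"full-batch gradient:           {full_grad:.4f}")
print(f"mean of mini-batch gradients:  {statistics.mean(batch_grads):.4f}")
print(f"spread (stdev) across batches: {statistics.stdev(batch_grads):.4f}")
```

Each individual update in mini-batch training follows one of these noisy estimates rather than the exact full-batch direction.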

The advantages of batch: 1. less memory usage; 2. faster training.

The disadvantages of batch: 1. lower accuracy; 2. during iteration, the loss fluctuates up and down (although the overall trend is downward).

In the figure above, the left panel shows gradient descent with full batch. The cost function decreases at every iteration, which is a good sign: our settings of w and b keep reducing the error, so we can keep iterating until we reach the optimal solution. The right panel shows gradient descent with mini batch: the cost fluctuates, sometimes higher and sometimes lower, but the overall trend is still downward. This is also normal, because each gradient descent step runs on a mini batch rather than on the entire dataset, and differences between batches can produce this effect (some batches may fit particularly well and others poorly). That is fine, because the overall trend is downward.

Think of the figure above as a view of the gradient descent space: the blue path at the bottom is full batch and the path at the top is mini batch. As mentioned above, mini batch does not reduce the loss at every iteration, so it appears to take many detours, yet as a whole it still moves toward the optimal solution. And since mini batch takes 1000 steps per epoch (1000 gradient descent updates in the example above) while full batch takes only one step per epoch, mini batch is still much faster despite the detours.
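The figure itself is not reproduced here, but the behavior it describes can be approximated with a small simulation. This sketch assumes a toy linear model, MSE cost, hypothetical synthetic data, a learning rate of 0.1 and a mini-batch size of 4, all arbitrary choices; `loss_history` and `count_rises` are illustrative names. It records the full-dataset cost after every update and counts how often the cost went up.

```python
import random


def make_data(n, seed=0):
    """Hypothetical toy data: y = 3x + 2 plus Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    ys = [3.0 * x + 2.0 + rng.gauss(0.0, 0.3) for x in xs]
    return xs, ys


def mse(w, b, xs, ys):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def loss_history(xs, ys, batch_size, steps, lr, seed=0):
    """Train y_hat = w*x + b; record the full-dataset cost after each update."""
    rng = random.Random(seed)
    n = len(xs)
    order = list(range(n))
    pos = n  # forces a shuffle before the first batch
    w = b = 0.0
    history = [mse(w, b, xs, ys)]
    for _ in range(steps):
        if pos + batch_size > n:  # start a new pass over the data
            rng.shuffle(order)
            pos = 0
        idx = order[pos:pos + batch_size]
        pos += batch_size
        grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in idx) / batch_size
        grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in idx) / batch_size
        w -= lr * grad_w
        b -= lr * grad_b
        history.append(mse(w, b, xs, ys))
    return history


def count_rises(history):
    """How many updates increased the cost compared with the previous one."""
    return sum(1 for prev, cur in zip(history, history[1:]) if cur > prev)


xs, ys = make_data(1000)
full = loss_history(xs, ys, batch_size=len(xs), steps=200, lr=0.1)
mini = loss_history(xs, ys, batch_size=4, steps=200, lr=0.1)

print("full batch: cost rose", count_rises(full), "times")
print("mini batch: cost rose", count_rises(mini), "times")
```

With a learning rate this small, the full-batch cost should never rise on this convex problem, while the mini-batch count is typically nonzero even though both curves end far below where they started.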

Batch size rule of thumb

Mini batch introduces a hyperparameter, the batch size (the block size), which is the number of samples in each mini batch. We generally set it to a power of 2, such as 64, 128, 512 or 1024, and usually stay within this range. It should not be too large, because training then behaves more and more like full batch and becomes slow. It should not be too small either, because with very small batches the algorithm may never converge. Of course, if the dataset is relatively small, there is no need for mini batch at all; full batch works best in that case.
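A quick calculation shows how the batch size trades off against the number of updates per epoch. This sketch reuses the 5-million-sample figure from the example earlier in the article; `is_power_of_two` and `updates_per_epoch` are illustrative helpers, not library functions.

```python
def is_power_of_two(k):
    """True for 1, 2, 4, 8, ...: a positive integer with a single set bit."""
    return k > 0 and (k & (k - 1)) == 0


def updates_per_epoch(num_samples, batch_size):
    """Ceiling division: a final, smaller batch still counts as one update."""
    return -(-num_samples // batch_size)


N = 5_000_000
for batch_size in (64, 128, 512, 1024, N):
    print(batch_size, is_power_of_two(batch_size), updates_per_epoch(N, batch_size))
# 64 True 78125
# 128 True 39063
# 512 True 9766
# 1024 True 4883
# 5000000 False 1
```

Each doubling of the batch size roughly halves the number of updates per epoch; at the extreme, a batch size of N leaves a single full-batch update.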

Publisher: Full-stack programmer. Please indicate the source when reposting: https://javaforall.cn/127491.html

Original link: https://javaforall.cn

边栏推荐

猜你喜欢

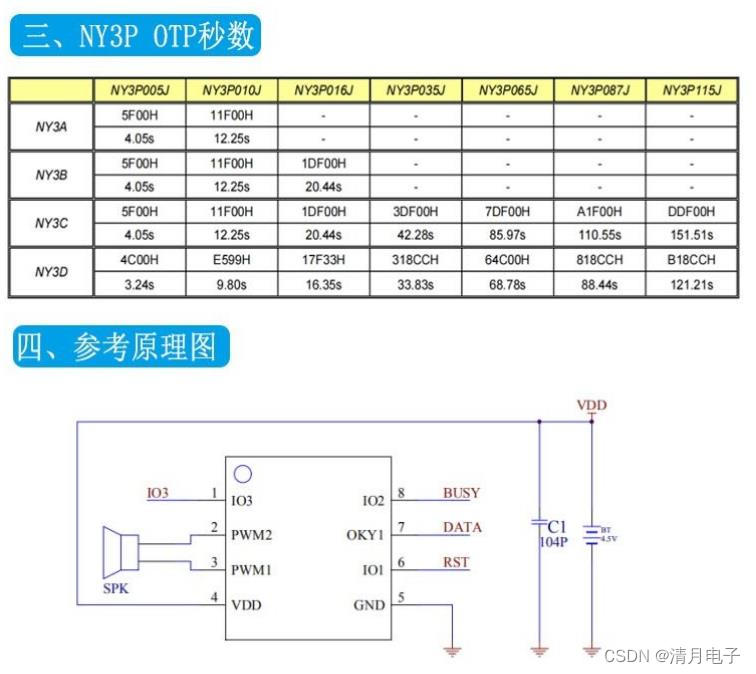

Jiuqi ny3p series voice chip replaces the domestic solution KT148A, which is more cost-effective and has a length of 420 seconds

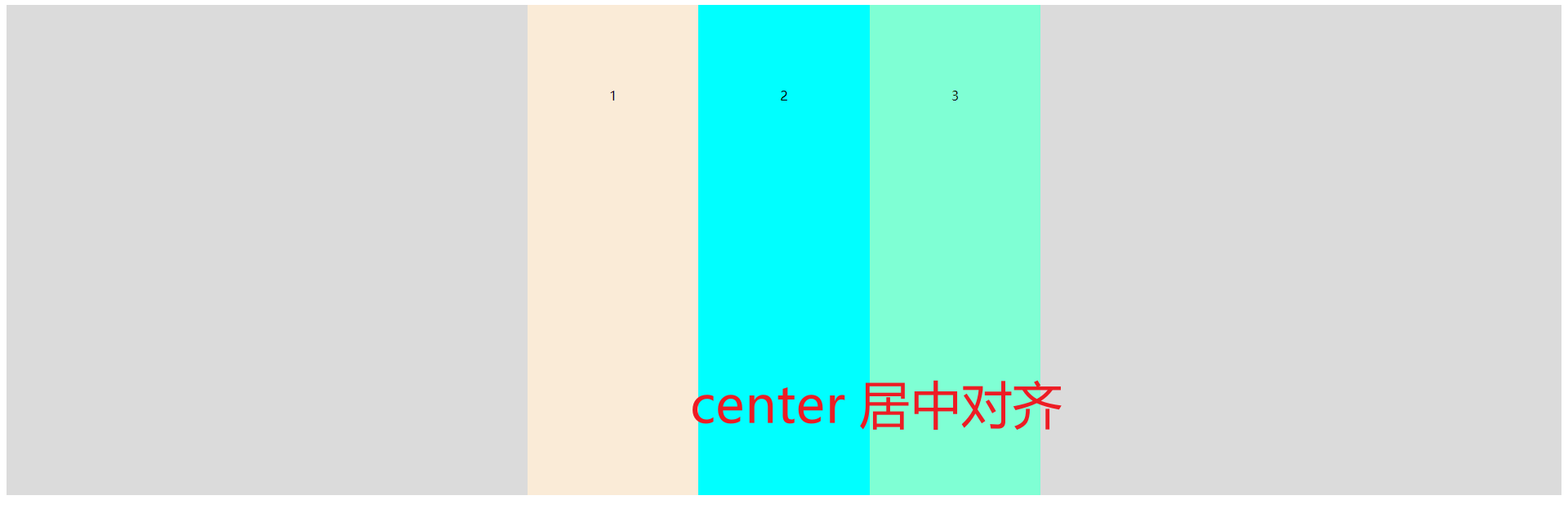

Flex布局详解

AcWing 1282. Search Keyword Problem Solution ((AC Automata) Trie+KMP)+bfs)

如何识别假爬虫?

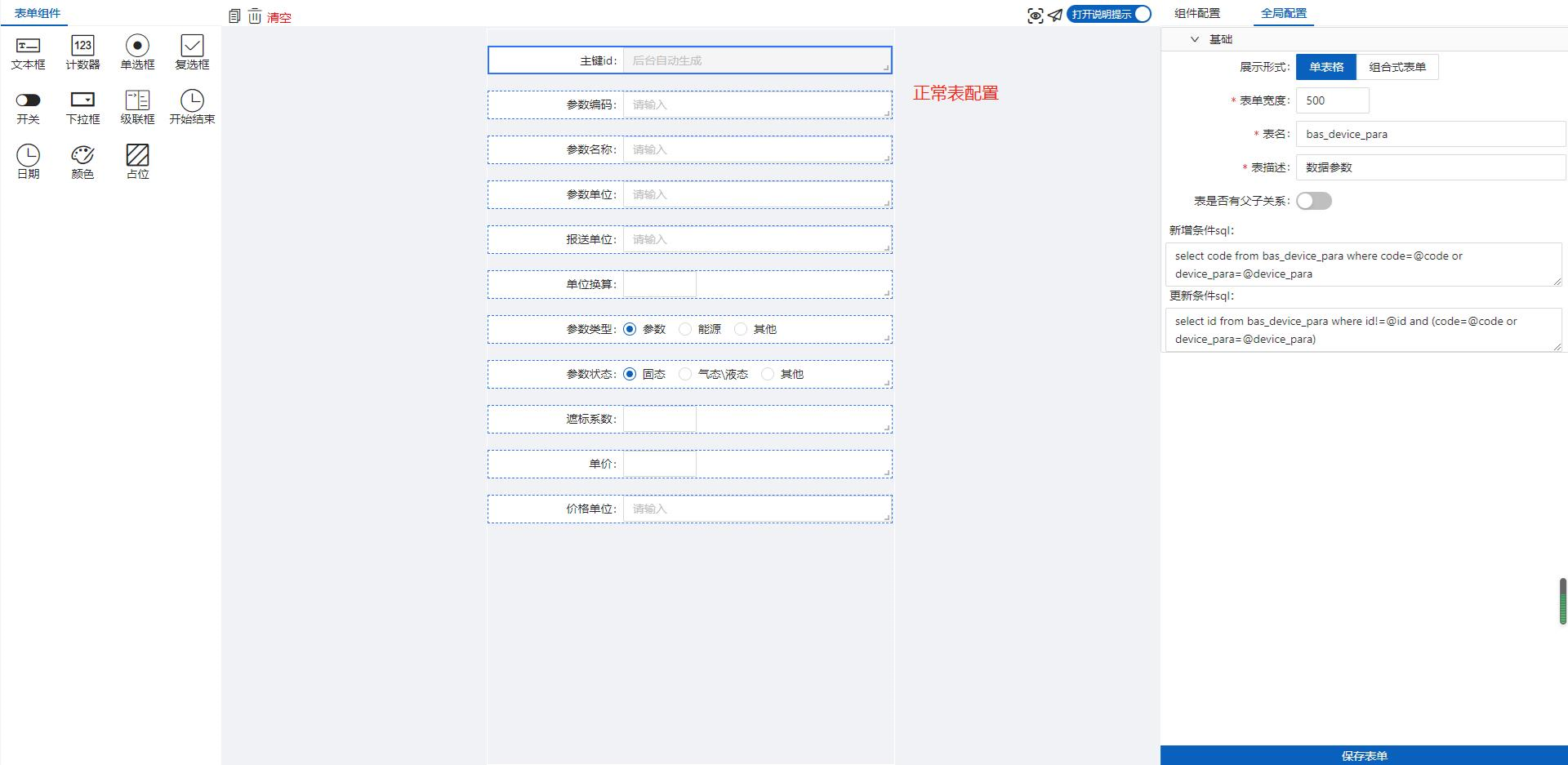

iNeuOS工业互联网操作系统,设备运维业务和“低代码”表单开发工具

MySQL - multi-table query

2022 Android interview summary (with interview questions | source code | interview materials)

自动化测试—web自动化—selenium初识

Intelligent bin (9) - vibration sensor (raspberries pie pico implementation)

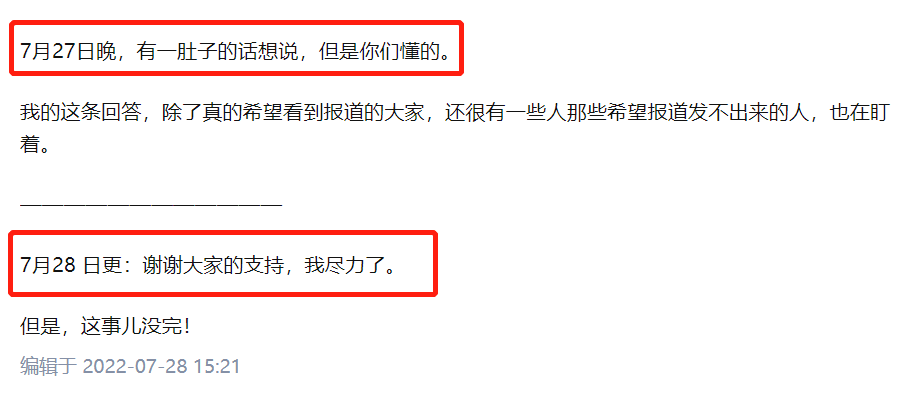

这位985教授火了!当了10年博导,竟无一博士毕业!

随机推荐

移动web开发02

组合学笔记(六)局部有限偏序集的关联代数,Möbius反演公式

Handling Write Conflicts under Multi-Master Replication (1)-Synchronous and Asynchronous Conflict Detection and Conflict Avoidance

MySQL---operator

Bika LIMS 开源LIMS集—— SENAITE的使用(检测流程)

A common method and the use of selenium

Introduction of Jerry voice chip ic toy chip ic_AD14NAD15N full series development

MySQL---Create and manage databases and data tables

Bika LIMS 开源LIMS集—— SENAITE的使用(检测流程)

MySQL---单行函数

Tkinter 入门之旅

Taobao/Tmall get Taobao password real url API

Apache EventMesh 分布式事件驱动多运行时

ECCV 2022 华科&ETH提出首个用于伪装实例分割的一阶段Transformer的框架OSFormer!代码已开源!...

MySQL common statements

Masterless replication system (2) - read and write quorum

Flink_CDC搭建及简单使用

rj45对接头千兆(百兆以太网接口定义)

go记录之——slice

Huawei mobile phone one-click to open "maintenance mode" to hide all data and make mobile phone privacy more secure