[Deep learning] Detailed explanation of Transformer model

2022-07-31 00:15:00 【One poor and two white to an annual salary of one million】

Foreword

This article is a learning record; most of its content and figures are borrowed from other articles. Links to the related blog posts are listed in the References section.

Overall Architecture

Encoder

Decoder

References

[1] Self-Attention and Transformer

[3] Highly recommended! NTU Li Hongyi's self-attention mechanism and Transformer explained in detail!

[4] The Illustrated Transformer

[5] Understanding of Q, K, V in Transformer

[6] Why is the V in the (KQV) of the transformer's self_attention also multiplied by a Wv matrix?

[9] The Annotated Transformer

边栏推荐

- 数据清洗-使用es的ingest

- transition transition && animation animation

- asser利用蚁剑登录

- 封装、获取系统用户信息、角色及权限控制

- Linux 部署mysql 5.7全程跟踪 完整步骤 django部署

- In MySQL, the stored procedure cannot realize the problem of migrating and copying the data in the table

- flex布局父项常见属性flex-wrap

- 【唐宇迪 深度学习-3D点云实战系列】学习笔记

- xss靶机训练【实现弹窗即成功】

- Unity 加载读取PPT

猜你喜欢

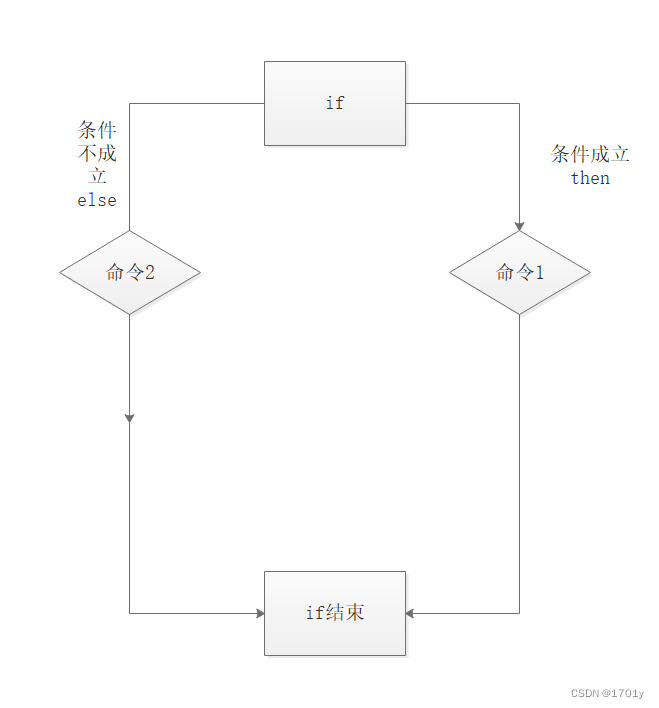

Shell script if statement

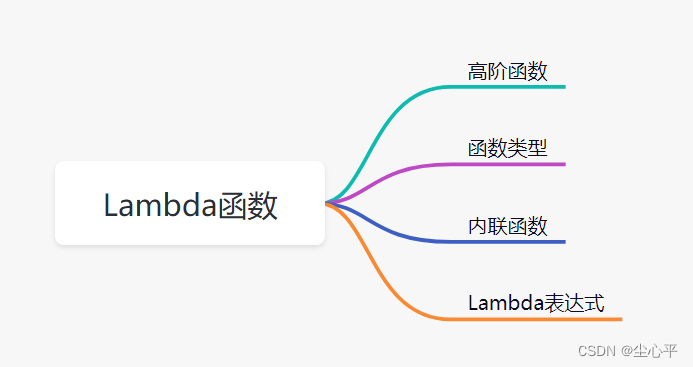

Lambda表达式

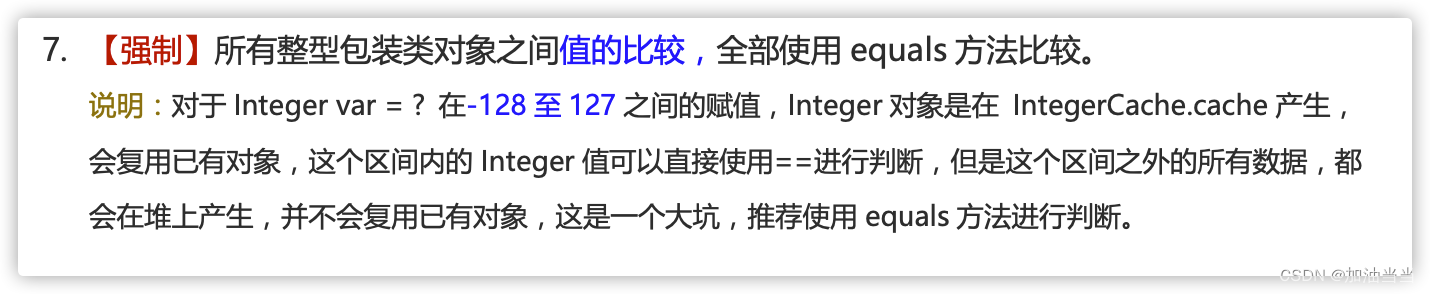

从笔试包装类型的11个常见判断是否相等的例子理解:包装类型、自动装箱与拆箱的原理、装箱拆箱的发生时机、包装类型的常量池技术

web漏洞之需要准备的工作

Steven Giesel 最近发布了一个由5部分内容组成的系列,记录了他首次使用 Uno Platform 构建应用程序的经验。

.NET 跨平台应用开发动手教程 |用 Uno Platform 构建一个 Kanban-style Todo App

Chevrolet Trailblazer, the first choice for safety and warmth for your family travel

Shell编程条件语句 test命令 整数值,字符串比较 逻辑测试 文件测试

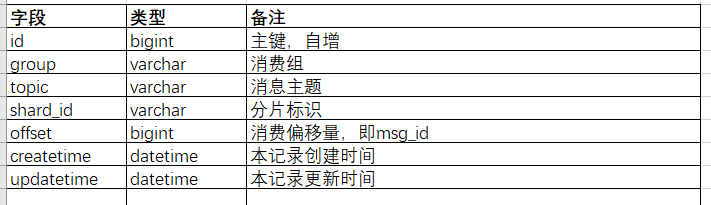

消息队列存储消息数据的MySQL表设计

数据库的严格模式

随机推荐

网络常用的状态码

从编译的角度来学作用域!

HCIP第十六天笔记

如何在WordPress网站上添加导航菜单

作业:iptables防止nmap扫描以及binlog

H5跳转微信公众号解决方案

软考学习计划

HCIP第十五天笔记

[In-depth and easy-to-follow FPGA learning 15---------- Timing analysis basics]

Shell programming conditional statement test command Integer value, string comparison Logical test File test

oracle数据库版本问题咨询(就是对比从数据库查询出来的版本,和docker里面的oracle版本)?

Necessary artifacts - AKShare quants

Point Cloud Scene Reconstruction with Depth Estimation

joiplay模拟器如何导入游戏存档

A Brief Talk About MPI

Jetpack Compose学习(8)——State及remeber

神经网络(ANN)

@requestmapping注解的作用及用法

joiplay模拟器如何使用

怎么开通代付通道接口?