ATSS: automatically selecting samples to eliminate the difference between anchor-based and anchor-free object detection methods

2022-07-01 15:43:00 【Xiaobai learns vision】

Reading guide

This paper argues that the essential difference between anchor-based and anchor-free object detection methods lies in how training samples are selected. The idea is verified experimentally, and a method for selecting samples automatically is proposed. It improves accuracy without adding any computation and can be regarded as nearly free of hyperparameters.

Paper: https://arxiv.org/abs/1912.02424

Code: https://github.com/sfzhang15/ATSS

Abstract

The article points out that the essential difference between anchor-based and anchor-free methods is how positive and negative samples are defined. It therefore proposes an adaptive sample selection method that chooses positive and negative samples according to the statistical characteristics of each object. This improves performance and significantly narrows the gap between anchor-based and anchor-free methods.

1. Introduction

Anchor-free methods generally fall into two groups. The first locates several predefined keypoints and uses them to bound the object; these are called keypoint-based methods. The second uses the center point or center region of the object to define positive samples and then predicts the four distances from each positive point to the sides of the object; these are called center-based methods. Keypoint-based methods follow the keypoint detection pipeline and differ greatly from anchor-based methods. Center-based methods treat points as samples, which is very similar to anchor-based methods treating anchor boxes as samples.

Take RetinaNet and FCOS as examples and compare the two methods: (1) The number of samples per spatial location differs: RetinaNet tiles several anchor boxes at each location, whereas FCOS has only one anchor point per location. (2) Positive and negative samples are defined differently: RetinaNet uses IoU, whereas FCOS uses spatial and scale constraints. (3) The starting state of regression differs: RetinaNet regresses from a preset anchor box, whereas FCOS regresses from an anchor point. The FCOS paper reports that FCOS performs considerably better than RetinaNet, so it is worth studying which of these three differences is decisive.

The contributions of this paper:

It points out that the essential difference between anchor-based and anchor-free methods lies in how positive and negative samples are selected.

It proposes an adaptive method that selects positive and negative samples based on the statistical characteristics of each object.

It shows that tiling multiple anchors per location is unnecessary for object detection.

It reaches state-of-the-art results on MS COCO at no additional cost.

2. Difference analysis of anchor-based and anchor-free methods

2.1 Removing irrelevant differences

We run the experiments on MS COCO. RetinaNet with a single square anchor box per location is denoted RetinaNet (#A=1), which is very close to the anchor-free FCOS. However, the FCOS paper reports that FCOS is far better than RetinaNet (#A=1): 37.1% versus 32.5% AP. FCOS also adopts some further improvements, including moving the centerness prediction to the regression branch, using the GIoU loss, and normalizing the regression targets by the corresponding stride, which raise FCOS from 37.1% to 37.8%. Some of the techniques used in FCOS can also be applied to RetinaNet, for example adding GroupNorm, using the GIoU loss, restricting positive samples to lie inside the ground-truth box, introducing the centerness branch, and adding a trainable scalar for each level of the feature pyramid. None of these is a fundamental difference between the two detectors. Adding them to RetinaNet (#A=1) raises its performance to 37.0% (Table 1), which still leaves a gap of 0.8%. With these irrelevant differences removed, we can explore the essential difference between the two.

2.2 The essential difference

At this point only two differences remain between RetinaNet (#A=1) and FCOS. One concerns the classification subtask: how positive and negative samples are defined. The other concerns the regression subtask: whether regression starts from an anchor box or from an anchor point.

Classification

As shown in Figure 1(a), RetinaNet uses IoU to divide the anchor boxes from different pyramid levels into positive and negative samples. For each object, the best-matching anchor box and all anchor boxes with IoU > θp are labeled positive, all anchor boxes with IoU < θn are labeled negative, and the rest are ignored during training. As shown in Figure 1(b), FCOS uses spatial and scale constraints to divide the anchor points from different pyramid levels. It first takes all anchor points inside the ground-truth box as candidates, then selects the final positive samples among them according to the scale range predefined for each pyramid level. The unselected points are negative samples.

These two schemes lead to different sets of positive and negative samples. As Table 2 shows, if RetinaNet (#A=1) selects samples with the spatial and scale constraints instead of IoU, its performance rises to 37.8%. Conversely, if FCOS selects samples with the IoU strategy, its performance drops to 36.9%. This shows that the definition of positive and negative samples is the essential difference between the two methods.
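The two assignment rules can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function names are hypothetical, the thresholds 0.5 and 0.4 stand in for θp and θn, and the scale range passed to the FCOS-style rule is a placeholder for whatever range a given pyramid level uses.

```python
import numpy as np

def iou_matrix(anchors, gts):
    """Pairwise IoU between anchors (N, 4) and ground-truth boxes (M, 4), as x1, y1, x2, y2."""
    x1 = np.maximum(anchors[:, None, 0], gts[None, :, 0])
    y1 = np.maximum(anchors[:, None, 1], gts[None, :, 1])
    x2 = np.minimum(anchors[:, None, 2], gts[None, :, 2])
    y2 = np.minimum(anchors[:, None, 3], gts[None, :, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_a = (anchors[:, 2] - anchors[:, 0]) * (anchors[:, 3] - anchors[:, 1])
    area_g = (gts[:, 2] - gts[:, 0]) * (gts[:, 3] - gts[:, 1])
    return inter / (area_a[:, None] + area_g[None, :] - inter)

def assign_by_iou(anchors, gts, pos_thr=0.5, neg_thr=0.4):
    """IoU-based labels: 1 = positive, 0 = negative, -1 = ignored.
    pos_thr and neg_thr are assumed values for theta_p and theta_n."""
    iou = iou_matrix(anchors, gts)
    best = iou.max(axis=1)
    labels = np.full(len(anchors), -1)
    labels[best < neg_thr] = 0
    labels[best > pos_thr] = 1
    labels[iou.argmax(axis=0)] = 1  # the best anchor of each object is positive
    return labels

def assign_by_point(points, gts, scale_range):
    """Spatial + scale constraint for one pyramid level: a point is positive if it
    lies inside a box and its largest side distance falls in scale_range."""
    lo, hi = scale_range
    left = points[:, None, 0] - gts[None, :, 0]
    top = points[:, None, 1] - gts[None, :, 1]
    right = gts[None, :, 2] - points[:, None, 0]
    bottom = gts[None, :, 3] - points[:, None, 1]
    dist = np.stack([left, top, right, bottom], axis=-1)
    inside = dist.min(axis=-1) > 0
    in_range = (dist.max(axis=-1) >= lo) & (dist.max(axis=-1) <= hi)
    return (inside & in_range).any(axis=1).astype(int)
```

Note that the first rule needs two thresholds and the second needs a scale range per level; these are the hyperparameters the adaptive method later removes.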

Regression

Once the positive and negative samples are determined, the object location is regressed from the positive samples. As shown in Figure 2, RetinaNet regresses four offsets between the anchor box and the ground-truth box, whereas FCOS regresses four distances from the anchor point to the sides of the object. In other words, RetinaNet starts regression from a box and FCOS starts from a point. Table 2 shows that when RetinaNet and FCOS use the same sample selection strategy, there is no obvious difference between them, so the starting state of regression is not the essential difference between the two methods.

Conclusion: from the experiments above, the essential difference between one-stage anchor-based detectors and center-based anchor-free detectors is the strategy for selecting positive and negative samples.
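To make the two regression starting points concrete, here is a small sketch. The center/size delta encoding used for the box case is an assumption (it is a common choice, but the text above only says "four offsets"), and both function names are hypothetical.

```python
import math

def box_offsets(anchor, gt):
    """Offsets from an anchor box to a ground-truth box, both (x1, y1, x2, y2).
    Assumes the common center/size delta encoding."""
    ax, ay = (anchor[0] + anchor[2]) / 2, (anchor[1] + anchor[3]) / 2
    aw, ah = anchor[2] - anchor[0], anchor[3] - anchor[1]
    gx, gy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    gw, gh = gt[2] - gt[0], gt[3] - gt[1]
    return ((gx - ax) / aw, (gy - ay) / ah, math.log(gw / aw), math.log(gh / ah))

def point_distances(point, gt):
    """Distances from an anchor point to the left, top, right and bottom sides of the box."""
    x, y = point
    return (x - gt[0], y - gt[1], gt[2] - x, gt[3] - y)
```

Both functions describe the same ground-truth box; only the reference they measure from differs, which fits the finding that this choice has little effect on accuracy.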

3. Adaptive Training Sample Selection (ATSS)

3.1 Description

Previous sample selection strategies rely on sensitive hyperparameters, such as the IoU thresholds in anchor-based methods and the scale ranges in anchor-free methods. Our adaptive method divides positive and negative samples automatically according to the statistical characteristics of each object, with almost no hyperparameters.

For each ground-truth box g, we first find its candidate positive samples. On every pyramid level, we select the k anchor boxes whose centers are closest to the center of g. With L pyramid levels, g obtains k×L candidate positive samples. We then compute the IoU between these candidates and g, and denote the mean and standard deviation of these IoUs as mg and vg, which gives the IoU threshold tg = mg + vg. Among the candidates, those whose IoU is greater than or equal to tg become the final positive samples. We additionally require the center of a positive sample to lie inside the object. If an anchor box is assigned to more than one ground-truth box, the one with the highest IoU is kept. All remaining anchors are negative samples. The motivation for each step is explained below.

Selecting candidates based on center distance

For RetinaNet, the closer the center of an anchor box is to the center of the ground-truth box, the larger the IoU. For FCOS, the closer an anchor point is to the center of the object, the higher the quality of the resulting detection. Anchors closer to the object center are therefore better candidates.

Using the sum of mean and standard deviation as the IoU threshold

The IoU mean mg measures how well the preset anchors fit this object. A high mg means the candidates are of high quality, so the IoU threshold can be set higher. A low mg means most candidates are of low quality, so the threshold should be lower. The IoU standard deviation vg measures which pyramid levels are suitable for this object. A high vg means there is one level that fits the object particularly well, and the larger threshold selects positive samples only from that level. A low vg means several levels fit the object, and the smaller threshold selects positive samples from all of these levels. See Figure 3.
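A small numeric sketch may help here. The IoU values below are made up for illustration (one candidate per level, five levels), and using the population standard deviation from `np.std` is an assumption.

```python
import numpy as np

def atss_threshold(ious):
    """Threshold tg = mg + vg for the candidate IoUs of one object."""
    ious = np.asarray(ious, dtype=float)
    return ious.mean() + ious.std()

# Hypothetical candidate IoUs, one per pyramid level.
one_good_level = [0.06, 0.14, 0.84, 0.30, 0.08]       # high vg: one level fits best
several_good_levels = [0.10, 0.20, 0.36, 0.37, 0.15]  # low vg: two levels fit similarly

for ious in (one_good_level, several_good_levels):
    t = atss_threshold(ious)
    kept = [i for i, v in enumerate(ious) if v >= t]
    print(f"threshold={t:.3f}, levels kept={kept}")
```

In the first case the threshold is high and only the best level survives; in the second it is lower and two neighboring levels both contribute positives.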

Restricting the centers of positive samples

A candidate whose anchor center lies outside the object is a poor positive sample. It would be predicted from features outside the object, so it should be excluded during training.

Maintaining fairness between different objects

According to statistical theory, about 16% of samples fall in the interval [mg+vg, 1]. Although the IoUs of the candidates do not follow a standard normal distribution, the statistics show that each object ends up with about 0.2×kL positive samples, regardless of its scale, aspect ratio and location. In contrast, the strategies of RetinaNet and FCOS tend to give larger objects many more positive samples, which is unfair to objects of different scales.
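The 16% figure is the upper tail of a normal distribution beyond one standard deviation, which can be checked with the standard library. The helper names are hypothetical, and the values k=9 and L=5 in the usage line are assumptions for illustration.

```python
import math

def upper_tail_beyond_one_sigma():
    """P(X >= mu + sigma) for a normally distributed X, via the error function."""
    return 0.5 * (1.0 - math.erf(1.0 / math.sqrt(2.0)))

def expected_positives(k, num_levels, ratio=0.2):
    """Rough number of positives per object, using the empirical ratio quoted above."""
    return ratio * (k * num_levels)

print(round(upper_tail_beyond_one_sigma(), 4))  # about 0.16
print(expected_positives(k=9, num_levels=5))    # roughly 9 positives per object
```

The empirical ratio of 0.2 is slightly above the theoretical 16%, consistent with the remark that the candidate IoUs are not exactly normally distributed.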

Almost hyperparameter-free

The method has only one hyperparameter, k. Later experiments show that the results are insensitive to k, so the method can be considered almost hyperparameter-free.
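The selection procedure described in this section can be sketched in NumPy as follows. This is a minimal illustration, not the official implementation: the function names `pairwise_iou` and `atss_assign` are hypothetical, the population standard deviation is assumed, and the "center inside the object" test is a strict inequality chosen for simplicity.

```python
import numpy as np

def pairwise_iou(a, b):
    """IoU between boxes a (N, 4) and b (M, 4) in (x1, y1, x2, y2) format."""
    lt = np.maximum(a[:, None, :2], b[None, :, :2])
    rb = np.minimum(a[:, None, 2:], b[None, :, 2:])
    wh = np.clip(rb - lt, 0, None)
    inter = wh[..., 0] * wh[..., 1]
    area_a = (a[:, 2] - a[:, 0]) * (a[:, 3] - a[:, 1])
    area_b = (b[:, 2] - b[:, 0]) * (b[:, 3] - b[:, 1])
    return inter / (area_a[:, None] + area_b[None, :] - inter)

def atss_assign(anchors_per_level, gts, k=9):
    """For every anchor (levels concatenated), return the index of its assigned
    ground-truth box, or -1 if the anchor is a negative sample."""
    anchors = np.concatenate(anchors_per_level, axis=0)
    iou = pairwise_iou(anchors, gts)                                    # (N, M)
    centers = (anchors[:, :2] + anchors[:, 2:]) / 2
    gt_centers = (gts[:, :2] + gts[:, 2:]) / 2
    dist = np.linalg.norm(centers[:, None] - gt_centers[None], axis=-1)  # (N, M)

    # Step 1: on each level, the k anchors closest to the object center are candidates.
    candidate = np.zeros_like(iou, dtype=bool)
    start = 0
    for level in anchors_per_level:
        end = start + len(level)
        topk = min(k, len(level))
        idx = np.argsort(dist[start:end], axis=0)[:topk] + start        # (topk, M)
        candidate[idx, np.arange(len(gts))] = True
        start = end

    # Step 2: per-object threshold tg = mg + vg over that object's candidates.
    cand_iou = np.where(candidate, iou, np.nan)
    thr = np.nanmean(cand_iou, axis=0) + np.nanstd(cand_iou, axis=0)

    # Step 3: keep candidates with IoU >= tg whose center lies inside the object.
    cx, cy = centers[:, None, 0], centers[:, None, 1]
    inside = (cx > gts[None, :, 0]) & (cy > gts[None, :, 1]) & \
             (cx < gts[None, :, 2]) & (cy < gts[None, :, 3])
    positive = candidate & (iou >= thr[None, :]) & inside

    # Step 4: an anchor matched to several objects keeps the one with the highest IoU.
    masked = np.where(positive, iou, -1.0)
    assigned = masked.argmax(axis=1)
    assigned[masked.max(axis=1) < 0] = -1
    return assigned
```

The only tunable input is `k`; no IoU threshold or scale range appears anywhere, since the threshold is recomputed for each object from its own candidates.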

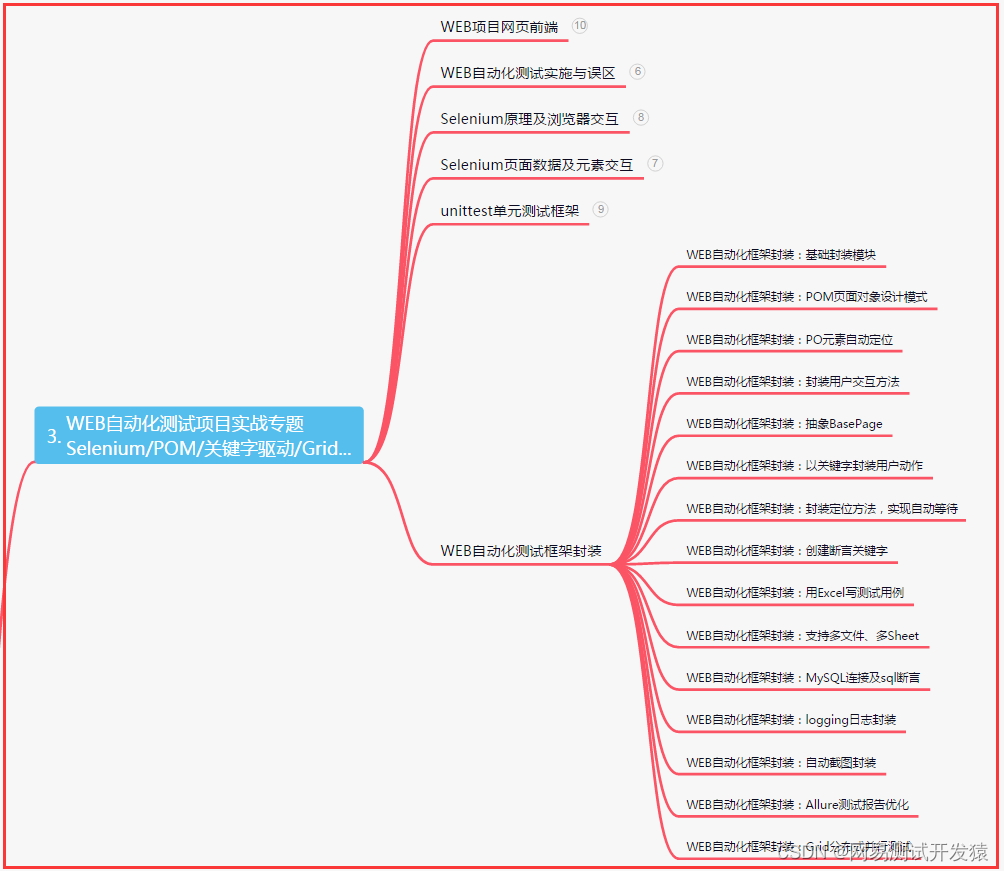

3.2 Verification

Anchor-based RetinaNet

To verify the effectiveness of ATSS, we use it to replace the sample selection strategy in RetinaNet (#A=1). The results are shown in Table 3.

Anchor-free FCOS

ATSS can be applied to FCOS in two versions, a lite version and a full version. In the lite version, we bring part of the ATSS idea into FCOS by replacing the way it selects candidate positive samples. FCOS treats the anchor points inside the object box as candidates, which produces a large number of low-quality positive samples. Our method instead selects the top k=9 candidates on each pyramid level for each ground-truth box. The lite version has been merged into the official FCOS code as center sampling, and it improves FCOS from 37.8% to 38.6%. However, the scale-range hyperparameters still remain.

In the full version, we turn the anchor points in FCOS into anchor boxes of scale 8S to define positive and negative samples, while still regressing from the anchor point to the object as FCOS does. The results are shown in Table 3. Both versions select candidate positive samples in the same way; they differ in how the final positive samples are selected along the scale dimension. Table 3 shows that the full version outperforms the lite version, which indicates that selecting the final positive samples adaptively is better than selecting them with a fixed rule.

3.3 Analysis

During training, the only settings that remain are the hyperparameter k and the associated anchor box configuration. We analyze them in turn:

Hyperparameter k

We ran several experiments to test the robustness of the hyperparameter k (Table 4). With k between 7 and 19 the results change very little. If k is too large, too many low-quality candidates are included and AP drops. If k is too small, AP also drops, because too few candidate positive samples make the statistics unstable. Overall, the method is quite robust to k.

Anchor size

The previous experiments use one square anchor of scale 8S (S is the stride of the pyramid level). We also tried square anchors of other scales (Table 5) and found little difference. In addition, we tried anchors with different aspect ratios (Table 6), which also made no noticeable difference. This shows that the method is insensitive to the anchor settings.
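As an illustration of the "one square 8S anchor per location" setting, the sketch below tiles such anchors over a feature map. The function name is hypothetical, and placing each anchor at the center of its stride cell (the 0.5 offset) is an assumption.

```python
import numpy as np

def square_anchors(feat_h, feat_w, stride, scale=8):
    """One square anchor of side scale * stride centered on every feature-map location.
    Returns an array of shape (feat_h * feat_w, 4) in (x1, y1, x2, y2) format."""
    half = scale * stride / 2
    xs = (np.arange(feat_w) + 0.5) * stride  # assumed: centers sit mid-cell
    ys = (np.arange(feat_h) + 0.5) * stride
    cx, cy = np.meshgrid(xs, ys)
    cx, cy = cx.ravel(), cy.ravel()
    return np.stack([cx - half, cy - half, cx + half, cy + half], axis=1)

anchors = square_anchors(feat_h=2, feat_w=3, stride=8)
print(anchors.shape)  # one anchor per location
```

Changing `scale` is the kind of variation the anchor-size experiment explores.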

3.4 Comparison

Comparison with other methods .

3.5 Discussion

The previous experiments use RetinaNet with only one anchor per location. There is in fact one more difference between anchor-based and anchor-free methods: the number of anchors tiled at each location. The original RetinaNet tiles 9 anchors per location, 3 scales by 3 aspect ratios, which we denote RetinaNet (#A=9). Table 7 shows that its AP is 36.3%. The general improvements can also be applied to RetinaNet (#A=9), raising its AP to 38.4%, which is better than RetinaNet (#A=1). This indicates that under the traditional IoU-based sample selection strategy, tiling more anchors per location gives better results.

However, once our method is used, RetinaNet (#A=9) and RetinaNet (#A=1) perform almost the same. In other words, as long as the positive samples are selected appropriately, the number of anchors tiled at each location does not matter. We therefore believe that tiling multiple anchors per location is unnecessary.
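For reference, a #A=9 configuration combines three scales with three aspect ratios at every location. The sketch below enumerates the resulting shapes; the base scale, the octave scales and the ratio values are assumptions chosen for illustration, and the function name is hypothetical.

```python
import math

def anchor_shapes(stride, base_scale=4.0,
                  octave_scales=(2 ** 0, 2 ** (1 / 3), 2 ** (2 / 3)),
                  ratios=(0.5, 1.0, 2.0)):
    """Return (width, height) for every scale/ratio combination at one location.
    All default values are assumed, not taken from the text above."""
    shapes = []
    for s in octave_scales:
        size = base_scale * stride * s       # side of the equal-area square
        for r in ratios:                     # r = height / width
            shapes.append((size / math.sqrt(r), size * math.sqrt(r)))
    return shapes

print(len(anchor_shapes(stride=8)))  # 3 scales x 3 ratios
```

With adaptive sample selection, a single entry from such a list performs about as well as all nine, which is the point of the discussion above.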
