[Paper Translation] Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

2022-07-30 13:50:00 【xiongxyowo】

Only the method section is translated.

III. Formulation

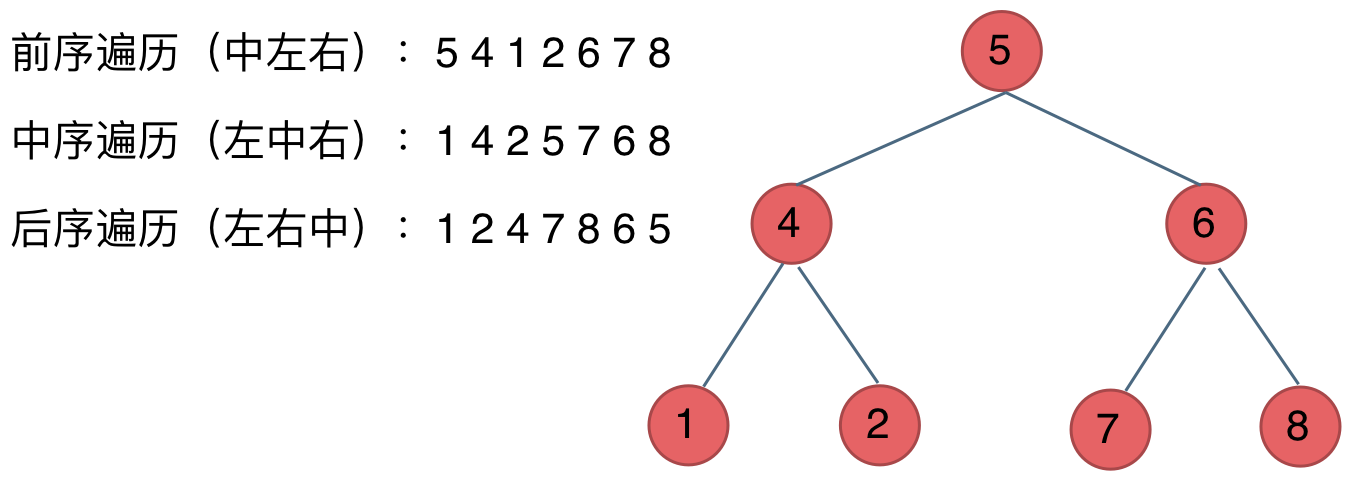

Our goal is to learn mapping functions between two domains $X$ and $Y$ given training samples $\{x_i\}_{i=1}^{N}$ with $x_i \in X$ and $\{y_j\}_{j=1}^{M}$ with $y_j \in Y$. As shown in Figure 3(a), our model includes two mappings $G: X \to Y$ and $F: Y \to X$. In addition, we introduce two adversarial discriminators $D_X$ and $D_Y$, where $D_X$ aims to distinguish between images $\{x\}$ and translated images $\{F(y)\}$; likewise, $D_Y$ aims to distinguish between $\{y\}$ and $\{G(x)\}$. Our objective contains two kinds of terms: an adversarial loss, which matches the distribution of generated images to the data distribution of the target domain, and a cycle consistency loss, which prevents the learned mappings $G$ and $F$ from contradicting each other.

Adversarial Loss

We apply adversarial losses to both mapping functions. For the mapping function $G: X \to Y$ and its discriminator $D_Y$, we express the objective as:

$$\mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_{\text{data}}(y)}[\log D_Y(y)] + \mathbb{E}_{x \sim p_{\text{data}}(x)}[\log(1 - D_Y(G(x)))]$$

where $G$ tries to generate images $G(x)$ that look similar to images from domain $Y$, while $D_Y$ aims to distinguish between translated samples $G(x)$ and real samples $y$. $G$ tries to minimize this objective while $D_Y$ tries to maximize it. We introduce a similar adversarial loss for the mapping function $F: Y \to X$ and its discriminator $D_X$, namely $\mathcal{L}_{\text{GAN}}(F, D_X, Y, X)$.
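As a rough illustration (not the authors' code), each expectation in $\mathcal{L}_{\text{GAN}}$ can be estimated on a finite batch by a sample mean. The sketch below assumes scalar "images", an arbitrary callable generator, and a discriminator that returns a probability in (0, 1); the function name `gan_loss` and the `eps` guard against `log(0)` are hypothetical choices.

```python
import math
from typing import Callable, Sequence

Fn = Callable[[float], float]  # hypothetical: scalar "images" for illustration


def gan_loss(G: Fn, D_Y: Fn, xs: Sequence[float], ys: Sequence[float],
             eps: float = 1e-12) -> float:
    """Sample-mean estimate of L_GAN(G, D_Y, X, Y)."""
    # E_{y ~ p_data(y)}[log D_Y(y)]: D_Y should score real target samples high.
    real_term = sum(math.log(D_Y(y) + eps) for y in ys) / len(ys)
    # E_{x ~ p_data(x)}[log(1 - D_Y(G(x)))]: D_Y should score translations low.
    fake_term = sum(math.log(1.0 - D_Y(G(x)) + eps) for x in xs) / len(xs)
    return real_term + fake_term
```

$D_Y$ is trained to increase this value and $G$ to decrease it. With a discriminator that outputs 0.5 everywhere, the estimate is $2\log 0.5 \approx -1.386$. The same function gives $\mathcal{L}_{\text{GAN}}(F, D_X, Y, X)$ by swapping the roles of the arguments: `gan_loss(F, D_X, ys, xs)`.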

Cycle Consistency Loss

In theory, adversarial training can learn mappings $G$ and $F$ that produce outputs distributed identically to the target domains $Y$ and $X$, respectively (strictly speaking, this requires $G$ and $F$ to be stochastic functions). However, with large enough capacity, a network can map the same set of input images to any random permutation of images in the target domain, and any of these learned mappings can induce an output distribution that matches the target distribution. To further reduce the space of possible mapping functions, we argue that the learned mapping functions should be cycle-consistent: as shown in Figure 3(b), for each image $x$ from domain $X$, the image translation cycle should be able to bring $x$ back to the original image, i.e. $x \to G(x) \to F(G(x)) \approx x$. We call this forward cycle consistency. Similarly, as shown in Figure 3(c), for each image $y$ from domain $Y$, $G$ and $F$ should also satisfy backward cycle consistency: $y \to F(y) \to G(F(y)) \approx y$. We incentivize this behavior using a cycle consistency loss:

$$\mathcal{L}_{\text{cyc}}(G, F) = \mathbb{E}_{x \sim p_{\text{data}}(x)}[\lVert F(G(x)) - x \rVert_1] + \mathbb{E}_{y \sim p_{\text{data}}(y)}[\lVert G(F(y)) - y \rVert_1].$$

In preliminary experiments, we also tried replacing the L1 norm in this loss with an adversarial loss between $F(G(x))$ and $x$, and between $G(F(y))$ and $y$, but did not observe improved performance. The behavior induced by the cycle consistency loss can be observed in the arXiv version.
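A minimal sketch of $\mathcal{L}_{\text{cyc}}$, assuming an image is a flat list of pixel values so that the L1 norm is the sum of absolute pixel differences; the names `Image`, `l1` and `cycle_loss` are hypothetical. It shows the point of the loss: a pair of mappings that undo each other scores zero, while a pair that does not is penalized even if each output could look plausible on its own.

```python
from typing import Callable, List, Sequence

Image = List[float]                 # hypothetical: a flattened image
Mapping = Callable[[Image], Image]  # stands in for G: X -> Y or F: Y -> X


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """L1 norm of the difference between two equally sized images."""
    return sum(abs(p - q) for p, q in zip(a, b))


def cycle_loss(G: Mapping, F: Mapping,
               xs: Sequence[Image], ys: Sequence[Image]) -> float:
    """Sample-mean estimate of L_cyc(G, F)."""
    # Forward cycle: x -> G(x) -> F(G(x)) should return to x.
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)
    # Backward cycle: y -> F(y) -> G(F(y)) should return to y.
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward
```

For example, with `G = lambda v: [2 * p for p in v]` and `F = lambda v: [p / 2 for p in v]`, the loss on `xs = [[1.0, 2.0]]` and `ys = [[4.0, -2.0]]` is `0.0`; replacing `F` with the identity gives `9.0` (3.0 from the forward cycle plus 6.0 from the backward cycle).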

Full Objective

Our full objective is:

$$\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\text{GAN}}(F, D_X, Y, X) + \lambda \mathcal{L}_{\text{cyc}}(G, F)$$

where $\lambda$ controls the relative importance of the two objectives. We aim to solve:

$$G^*, F^* = \arg\min_{G, F} \max_{D_X, D_Y} \mathcal{L}(G, F, D_X, D_Y).$$

Note that our model can be viewed as training two "autoencoders": we learn one autoencoder $F \circ G: X \to X$ jointly with another, $G \circ F: Y \to Y$. However, these autoencoders each have a special internal structure: they map an image to itself via an intermediate representation that is a translation of the image into another domain. Such a setup can also be seen as a special case of "adversarial autoencoders", which use an adversarial loss to train the bottleneck layer of an autoencoder to match an arbitrary target distribution. In our case, the target distribution for the $X \to X$ autoencoder is that of domain $Y$. In Section 5.1.3, we compare our method against ablations of the full objective and show empirically that both objectives play a key role in obtaining high-quality results.
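The full objective can be sketched by combining the two loss terms, again assuming scalar "images" and sample-mean estimates; `full_objective` and its helpers are hypothetical names, and `lam=10.0` is only an illustrative default for $\lambda$. The min-max optimization itself (alternating updates in which $D_X, D_Y$ increase this value and $G, F$ decrease it) is not shown.

```python
import math
from typing import Callable, Sequence

Fn = Callable[[float], float]  # hypothetical: scalar "images" for illustration


def gan_loss(gen: Fn, disc: Fn, src: Sequence[float], tgt: Sequence[float],
             eps: float = 1e-12) -> float:
    # E_tgt[log disc(t)] + E_src[log(1 - disc(gen(s)))]
    real = sum(math.log(disc(t) + eps) for t in tgt) / len(tgt)
    fake = sum(math.log(1.0 - disc(gen(s)) + eps) for s in src) / len(src)
    return real + fake


def cycle_loss(G: Fn, F: Fn, xs: Sequence[float], ys: Sequence[float]) -> float:
    # With scalar images the L1 norm reduces to an absolute value.
    forward = sum(abs(F(G(x)) - x) for x in xs) / len(xs)   # autoencoder F∘G: X -> X
    backward = sum(abs(G(F(y)) - y) for y in ys) / len(ys)  # autoencoder G∘F: Y -> Y
    return forward + backward


def full_objective(G: Fn, F: Fn, D_X: Fn, D_Y: Fn,
                   xs: Sequence[float], ys: Sequence[float],
                   lam: float = 10.0) -> float:
    return (gan_loss(G, D_Y, xs, ys)          # L_GAN(G, D_Y, X, Y)
            + gan_loss(F, D_X, ys, xs)        # L_GAN(F, D_X, Y, X)
            + lam * cycle_loss(G, F, xs, ys)) # lambda * L_cyc(G, F)
```

With `G = lambda x: x + 1`, `F = lambda y: y - 1` and both discriminators fixed at 0.5, the cycle term vanishes and the value is $4\log 0.5$; setting `F` to the identity leaves every cycle off by 1, adding $\lambda \cdot 2 = 20$. The forward term inside `cycle_loss` is exactly the reconstruction error of the autoencoder $F \circ G$.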
