
Data extraction 2

2022-07-27 07:59:00 【Horse sailing】

Regular expressions for data extraction

Goals: Master the common syntax of regular expressions

Master the common methods of the re module

Master the use of raw strings (the r prefix)

1. What is a regular expression

A regular expression is a rule string built from a set of predefined special characters and their combinations. The rule string expresses a filtering logic that is applied to strings.

2. Common syntax of regular expressions

Key points:

Characters in regular expressions

Predefined character sets

Quantifiers

Regular expression syntax is extensive and cannot all be reviewed here; look up other constructs as needed. For example, | expresses alternation (or).

Exercise: what does the code below print?

import re

str1 = '<meta http-equiv="content-type" content="text/html;charset=utf-8"/>adacc/sd/sdef/24'

result = re.findall(r'<.*>', str1)

print(result)

3. Common methods of the re module

pattern.match (match once, starting at the beginning of the string)

pattern.search (find the first match anywhere in the string)

pattern.findall (find all matches)

Greedy mode: provided the overall expression still matches, match as many characters as possible

Non-greedy mode: provided the overall expression still matches, match as few characters as possible

Returns a list; if nothing matches, the list is empty

re.findall(r"\d", "aef5teacher2") >>>> ['5', '2']
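The difference between greedy and non-greedy matching is easiest to see side by side. The sketch below uses a made-up sample string (an assumption, not taken from the exercise above) that contains several tags, so the two quantifiers give visibly different results.

```python
import re

sample = "<a>one</a><b>two</b>"  # hypothetical sample string

# Greedy: .* takes as much as it can, so the match runs to the last '>'
greedy = re.findall(r"<.*>", sample)

# Non-greedy: .*? stops at the first '>' that lets the match succeed
lazy = re.findall(r"<.*?>", sample)

print(greedy)  # ['<a>one</a><b>two</b>']
print(lazy)    # ['<a>', '</a>', '<b>', '</b>']
```

Adding ? after a quantifier (*?, +?, ??) is what switches it to non-greedy mode.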

pattern.sub (replace)

re.sub(r"\d", "_", "aef5teacher2") >>>> 'aef_teacher_'

re.compile (compile)

Returns a compiled pattern object p that offers the same methods as the re module; the only difference is that the pattern is no longer passed as an argument

Flags (the matching mode) must be passed to compile

p = re.compile(r"\d", re.S)

p.findall("aef_teacher")
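To tie the methods together, here is a minimal sketch that runs match, search, findall, and sub on one compiled pattern. The variable names are illustrative only; the sample string is the one used in the findall example.

```python
import re

text = "aef5teacher2"

pattern = re.compile(r"\d")  # compile once, reuse many times

first_at_start = pattern.match(text)   # None: the string does not start with a digit
first_anywhere = pattern.search(text)  # match object for the first digit
all_digits = pattern.findall(text)     # list of every digit
replaced = pattern.sub("_", text)      # new string with each digit replaced

# re.S (re.DOTALL) lets '.' match newline characters as well
dotall = re.compile(r"a.b", re.S)
multi = dotall.findall("a\nb")

print(first_at_start)          # None
print(first_anywhere.group())  # 5
print(all_digits)              # ['5', '2']
print(replaced)                # aef_teacher_
```

Note that match and search return a match object (or None), so call .group() to get the matched text, while findall returns plain strings.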

4. Using Python raw strings (the r prefix)

Definition: in a raw string every character is taken literally; a backslash does not start an escape sequence, so no special or non-printable character is produced. Raw strings matter mostly where special characters are involved. For example, the raw string r"\n" consists of the two characters \ and n, the same as "\\n"

Length of a raw string:

len('\n')

# result 1

len(r'\n')

# result 2

'\n'[0]

# result '\n'

r'\n'[0]

# result '\\'
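Raw strings matter most when writing patterns, because the regex engine has its own backslash escapes. The following sketch (the path and sentence are made-up sample data) shows the r prefix keeping a pattern readable.

```python
import re

path = r"C:\new\table"  # hypothetical path; \n and \t stay as two characters each

# In a pattern, r"\\" is the regex for one literal backslash
parts = re.split(r"\\", path)

# r"\d+" reaches the regex engine unchanged
numbers = re.findall(r"\d+", "page 12 of 340")

print(parts)    # ['C:', 'new', 'table']
print(numbers)  # ['12', '340']
```

Without the r prefix the same split pattern would have to be written as "\\\\".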

Data extraction with the lxml module and XPath

Goals: Understand what XPath is

Understand lxml

Master XPath syntax

lxml is a high-performance Python HTML/XML parser. With XPath we can quickly locate specific elements and read node information

1. Understanding the lxml module and XPath syntax

To extract specific content from HTML or XML text, we need to know how to use the lxml module and XPath syntax.

- The lxml module can use XPath rules to quickly locate specific elements in an HTML/XML document and read node information (text content, attribute values)

- XPath (XML Path Language) is a language for finding information in HTML/XML documents; it can traverse the elements and attributes of such documents.

- W3School documentation: http://www.w3school.com.cn/xpath/index.asp

- Extracting data from XML or HTML requires the lxml module together with XPath syntax

2. Installing and using the XPath Helper plug-in for Google Chrome

To extract data with the lxml module we need to master XPath syntax rules. The XPath Helper plug-in helps us practice XPath syntax **(the installation package is in the Tools folder of the courseware)**

Download the Chrome plug-in XPath Helper

- It can be downloaded from the Chrome Web Store

Extract the rar archive into the current folder

Open Google Chrome ----> the three dots in the upper right corner ----> More tools ----> Extensions

On the Extensions page, turn on the switch in the upper right corner to enter developer mode, then drag the xpath folder into the page and release the mouse

- Installation is complete; check that the plug-in works

3. xpath The node relationship of

![XPath nodes](/img/d6/60461d71cfb40ecce51a4ffb2ddec9.png)

![Relationships between nodes in XPath](/img/81/cdb49955f12a6a717579f9505197ab.png)

4. XPath syntax - basic node selection

- XPath uses path expressions to select a node or a set of nodes in an XML document.

- These path expressions look very similar to the paths we see in an ordinary computer file system.

- When a tag is selected with the Chrome plug-in, the attribute class="xh-highlight" is added to the selected tag

XPath syntax for locating nodes and extracting attributes or text content

| Expression | Description |
|---|---|
| nodename | Selects the element with that name. |
| / | Selects from the root node, or steps from an element to its child. |
| // | Selects matching nodes anywhere in the document below the current node, regardless of their position. |
| . | Selects the current node. |
| .. | Selects the parent of the current node. |
| @ | Selects attributes. |
| text() | Selects text. |
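The expressions in the table can be tried out directly with lxml. This is a minimal sketch on a made-up bookstore document (the XML and variable names are assumptions chosen for illustration).

```python
from lxml import etree

# Hypothetical sample document
xml = """
<bookstore>
  <book category="web">
    <title lang="en">Learning XML</title>
    <price>39.95</price>
  </book>
  <book category="children">
    <title lang="eng">Harry Potter</title>
    <price>29.99</price>
  </book>
</bookstore>
"""
root = etree.fromstring(xml)

titles = root.xpath("//title/text()")              # // and text(): text of every title
langs = root.xpath("/bookstore/book/title/@lang")  # / and @: attribute values along a path
first_title = root.xpath("//title")[0]
parent_tag = first_title.xpath("..")[0].tag        # .. selects the parent node
self_tag = first_title.xpath(".")[0].tag           # . selects the current node

print(titles)      # ['Learning XML', 'Harry Potter']
print(langs)       # ['en', 'eng']
print(parent_tag)  # book
print(self_tag)    # title
```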

5. XPath syntax - node modifiers (predicates)

Specific nodes can be selected by the attribute value of a tag, by index, and so on

5.1 Node modifier syntax

| Path expression | Result |
|---|---|
| //title[@lang="eng"] | Selects all title elements whose lang attribute is eng. |
| /bookstore/book[1] | Selects the first book element that is a child of bookstore. |
| /bookstore/book[last()] | Selects the last book element that is a child of bookstore. |
| /bookstore/book[last()-1] | Selects the second-to-last book element that is a child of bookstore. |
| /bookstore/book[position()>1] | Selects the book elements under bookstore from the second one onward. |
| //book/title[text()='Harry Potter'] | Selects the title elements under any book whose text is Harry Potter. |
| /bookstore/book[price>35.00]/title | Selects the title elements of the book elements in bookstore whose price element has a value greater than 35.00. |

5.2 About XPath indexes

- In XPath, the first element is at position 1

- The last element is at position last()

- The second-to-last element is at position last()-1
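The predicates from section 5 can be checked with a short script. The sketch below assumes a three-book sample document invented for this purpose.

```python
from lxml import etree

# Hypothetical sample document with three books
xml = """
<bookstore>
  <book><title lang="eng">Harry Potter</title><price>29.99</price></book>
  <book><title lang="en">Learning XML</title><price>39.95</price></book>
  <book><title lang="en">XQuery Kick Start</title><price>49.99</price></book>
</bookstore>
"""
root = etree.fromstring(xml)

first = root.xpath("/bookstore/book[1]/title/text()")               # positions start at 1
last = root.xpath("/bookstore/book[last()]/title/text()")
second_last = root.xpath("/bookstore/book[last()-1]/title/text()")
after_first = root.xpath("/bookstore/book[position()>1]/title/text()")
eng = root.xpath("//title[@lang='eng']/text()")
pricey = root.xpath("/bookstore/book[price>35.00]/title/text()")

print(first)        # ['Harry Potter']
print(last)         # ['XQuery Kick Start']
print(second_last)  # ['Learning XML']
print(after_first)  # ['Learning XML', 'XQuery Kick Start']
print(eng)          # ['Harry Potter']
print(pricey)       # ['Learning XML', 'XQuery Kick Start']
```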

6. XPath syntax - other common node selection syntax

Using //

- //a all a tags on the current HTML page

- bookstore//book all book elements under bookstore

Using @

- //a/@href the href of every a

- //title[@lang="eng"] the title tags with lang=eng

Using text()

- //a/text() the text directly under every a

- //a[text()='Next page'] the a tags whose text is "Next page"

- a//text() all text under a

Finding specific nodes with XPath

- //a[1] the first a

- //a[last()] the last one

- //a[position()<4] the first three

contains

- //a[contains(text(),"Next page")] the a tags whose text contains "Next page"

- //a[contains(@class,'n')] the a tags whose class contains n
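As a rough illustration of text(), @, and contains(), the sketch below runs several of these expressions on a made-up pagination snippet (the HTML and link targets are assumptions).

```python
from lxml import etree

# Hypothetical pagination snippet
page = """
<div>
  <a class="n prev" href="/page/1">Previous page</a>
  <a class="num" href="/page/2">2</a>
  <a class="n next" href="/page/3">Next page</a>
</div>
"""
html = etree.HTML(page)

all_hrefs = html.xpath("//a/@href")
exact = html.xpath("//a[text()='Next page']/@href")          # exact text match
partial = html.xpath("//a[contains(text(), 'page')]/@href")  # substring of the text
by_class = html.xpath("//a[contains(@class, 'n')]/@href")    # substring of the class value
first_three = html.xpath("//a[position()<4]/text()")

print(exact)     # ['/page/3']
print(partial)   # ['/page/1', '/page/3']
print(by_class)  # ['/page/1', '/page/2', '/page/3']
```

Because contains() is a plain substring test, contains(@class, 'n') also matches class="num"; choose a more specific substring when that matters.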

7. Installing and using the lxml module

lxml is a third-party module; install it before use

7.1 Installing the lxml module

It is used to extract data from the XML or HTML response content returned by a request

pip/pip3 install lxml

7.2 What a crawler extracts from HTML

- The text content of a tag

- The value of an attribute of a tag

- For example, extract the value of the href attribute of an a tag to obtain a URL, then continue by requesting it

7.3 Using the lxml module

Import the etree library from lxml

from lxml import etree

Use etree.HTML to turn an HTML string (bytes or str) into an Element object. The Element object has an xpath method that returns a list of results

html = etree.HTML(text)
ret_list = html.xpath("XPath rule string")

The xpath method returns one of three kinds of list

- An empty list: the XPath rule string located no elements

- A list of strings: the XPath rule string matched text content or attribute values

- A list of Element objects: the XPath rule string matched tags; each Element in the list can run xpath again
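The three kinds of result can be seen in a few lines. This is a minimal sketch on a one-item list invented for the purpose.

```python
from lxml import etree

html = etree.HTML('<ul><li class="item"><a href="link1.html">first</a></li></ul>')

empty = html.xpath("//span")       # nothing matches -> empty list
strings = html.xpath("//a/@href")  # attribute values -> list of strings
elements = html.xpath("//li")      # tags -> list of Element objects

# An Element from the result can run a further, relative XPath
nested = elements[0].xpath("./a/text()")

print(empty)    # []
print(strings)  # ['link1.html']
print(nested)   # ['first']
```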

Example of using the lxml module

Run the following code and look at the printed results

from lxml import etree

text = '''
<div>
    <ul>
        <li class="item-1"><a href="link1.html">first item</a></li>
        <li class="item-1"><a href="link2.html">second item</a></li>
        <li class="item-inactive"><a href="link3.html">third item</a></li>
        <li class="item-1"><a href="link4.html">fourth item</a></li>
        <li class="item-0"><a href="link5.html">fifth item</a>
    </ul>
</div>
'''

html = etree.HTML(text)

# Get the list of href values and the list of titles
href_list = html.xpath("//li[@class='item-1']/a/@href")
title_list = html.xpath("//li[@class='item-1']/a/text()")

# Assemble into dictionaries
for href in href_list:
    item = {}
    item["href"] = href
    item["title"] = title_list[href_list.index(href)]
    print(item)

Exercise

In the HTML string below, each li tag with class item-1 is one news item. Extract the text content and the link of the a tag and assemble them into a dictionary.

text = ''' <div> <ul> <li class="item-1"><a>first item</a></li> <li class="item-1"><a href="link2.html">second item</a></li> <li class="item-inactive"><a href="link3.html">third item</a></li> <li class="item-1"><a href="link4.html">fourth item</a></li> <li class="item-0"><a href="link5.html">fifth item</a> </ul> </div> '''

Notes:

Group first, then extract the data within each group; this avoids mismatched data

Check for empty results

Continue to extract data in each group

from lxml import etree

html = etree.HTML(text)
# Group first: one Element per news item
li_list = html.xpath("//li[@class='item-1']")
for li in li_list:
    item = {}
    item["href"] = li.xpath("./a/@href")[0] if len(li.xpath("./a/@href")) > 0 else None
    item["title"] = li.xpath("./a/text()")[0] if len(li.xpath("./a/text()")) > 0 else None
    print(item)
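The empty-result check in the loop can be factored into a small helper so each XPath expression is written only once. This is one possible sketch; first_or_none is a hypothetical helper name, not part of lxml, and the sample HTML is shortened.

```python
from lxml import etree

text = '''<ul>
<li class="item-1"><a>first item</a></li>
<li class="item-1"><a href="link2.html">second item</a></li>
</ul>'''


def first_or_none(values):
    """Return the first item of an XPath result list, or None if it is empty."""
    return values[0] if values else None


items = []
for li in etree.HTML(text).xpath("//li[@class='item-1']"):
    items.append({
        "href": first_or_none(li.xpath("./a/@href")),
        "title": first_or_none(li.xpath("./a/text()")),
    })

print(items)
```

The first li has no href attribute, so its dictionary carries None instead of raising an IndexError.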

##### Key point: use XPath syntax in the lxml module to locate elements and extract attribute values or text content

##### Using the etree.tostring function of the lxml module

Run the code below and compare the original HTML string with the printed result

from lxml import etree

html_str = ''' <div> <ul> <li class="item-1"><a href="link1.html">first item</a></li> <li class="item-1"><a href="link2.html">second item</a></li> <li class="item-inactive"><a href="link3.html">third item</a></li> <li class="item-1"><a href="link4.html">fourth item</a></li> <li class="item-0"><a href="link5.html">fifth item</a> </ul> </div> '''

html = etree.HTML(html_str)

handled_html_str = etree.tostring(html).decode()

print(handled_html_str)

Observations and conclusions

Comparing the printed result with the original:

- The missing li closing tag is completed automatically

- The html, body, and similar tags are added automatically

<html><body><div> <ul>

<li class="item-1"><a href="link1.html">first item</a></li>

<li class="item-1"><a href="link2.html">second item</a></li>

<li class="item-inactive"><a href="link3.html">third item</a></li>

<li class="item-1"><a href="link4.html">fourth item</a></li>

<li class="item-0"><a href="link5.html">fifth item</a>

</li></ul> </div> </body></html>

Conclusions:

lxml.etree.HTML(html_str) completes missing tags automatically

The lxml.etree.tostring function converts an Element object back into an HTML string

If a crawler uses lxml to extract data, it should take the output of lxml.etree.tostring as the basis for data extraction

Practice after class

Getting started

We use lxml to parse HTML code. A simple example:

# lxml_test.py
# Use the etree library of lxml
from lxml import etree

# Note: the closing </li> tag of the last item is missing
html = '''
<div>
    <ul>
        <li class="item-0"><a href="link1.html">first item</a></li>
        <li class="item-1"><a href="link2.html">second item</a></li>
        <li class="item-inactive"><a href="link3.html">third item</a></li>
        <li class="item-1"><a href="link4.html">fourth item</a></li>
        <li class="item-0"><a href="link5.html">fifth item</a>
    </ul>
</div>
'''

# Use etree.HTML to parse the string into an HTML document
xml_doc = etree.HTML(html)

# Serialize the HTML document to a string (tostring returns bytes)
html_doc = etree.tostring(xml_doc)

print(html_doc.decode())

Output results :

<html><body>

<div>

<ul>

<li class="item-0"><a href="link1.html">first item</a></li>

<li class="item-1"><a href="link2.html">second item</a></li>

<li class="item-inactive"><a href="link3.html">third item</a></li>

<li class="item-1"><a href="link4.html">fourth item</a></li>

<li class="item-0"><a href="link5.html">fifth item</a></li>

</ul>

</div>

</body></html>

lxml can correct HTML code automatically. In this example it not only completed the li tag but also added the body and html tags.

Reading from a file:

Besides reading strings directly, lxml also supports reading content from a file. Create a new file named hello.html:

<!-- hello.html -->

<div>

<ul>

<li class="item-0"><a href="link1.html">first item</a></li>

<li class="item-1"><a href="link2.html">second item</a></li>

<li class="item-inactive"><a href="link3.html"><span class="bold">third item</span></a></li>

<li class="item-1"><a href="link4.html">fourth item</a></li>

<li class="item-0"><a href="link5.html">fifth item</a></li>

</ul>

</div>

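Then use the etree.parse() method to read the file. etree.parse() uses an XML parser by default, so an HTML parser is passed explicitly here.

# lxml_parse.py
from lxml import etree

# Read the external file hello.html
html = etree.parse('./hello.html', etree.HTMLParser())
result = etree.tostring(html, pretty_print=True)
print(result.decode())

The output has the same structure as before: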

<html><body>

<div>

<ul>

<li class="item-0"><a href="link1.html">first item</a></li>

<li class="item-1"><a href="link2.html">second item</a></li>

<li class="item-inactive"><a href="link3.html"><span class="bold">third item</span></a></li>

<li class="item-1"><a href="link4.html">fourth item</a></li>

<li class="item-0"><a href="link5.html">fifth item</a></li>

</ul>

</div>

</body></html>

XPath examples

1. Get all <li> tags

# xpath_li.py
from lxml import etree

xml_doc = etree.parse('hello.html')
print(type(xml_doc))  # Show the type returned by etree.parse()

result = xml_doc.xpath('//li')
print(result)  # Print the list of <li> elements
print(len(result))
print(type(result))
print(type(result[0]))

Output:

<class 'lxml.etree._ElementTree'>

[<Element li at 0x1014e0e18>, <Element li at 0x1014e0ef0>, <Element li at 0x1014e0f38>, <Element li at 0x1014e0f80>, <Element li at 0x1014e0fc8>]

5

<class 'list'>

<class 'lxml.etree._Element'>

2. Next, get the class attribute of every <li> tag

# xpath_li.py
from lxml import etree

html = etree.parse('hello.html')
result = html.xpath('//li/@class')
print(result)

Running results

['item-0', 'item-1', 'item-inactive', 'item-1', 'item-0']

3. Next, get the <a> tag under <li> whose href is link1.html

# xpath_li.py
from lxml import etree

html = etree.parse('hello.html')
result = html.xpath('//li/a[@href="link1.html"]')
print(result)

Running results

[<Element a at 0x10ffaae18>]

4. Get all <span> tags under the <li> tags

# xpath_li.py
from lxml import etree

html = etree.parse('hello.html')

# result = html.xpath('//li/span')
# The line above is wrong: / selects child elements only, and <span> is not a
# direct child of <li>, so a double slash is needed
result = html.xpath('//li//span')
print(result)

Running results

[<Element span at 0x10d698e18>]

5. Get every class attribute inside the <a> tags under <li>

# xpath_li.py
from lxml import etree

html = etree.parse('hello.html')
result = html.xpath('//li/a//@class')
print(result)

Output:

['bold']

6. Get the href attribute value of the <a> inside the last <li>

# xpath_li.py
from lxml import etree

html = etree.parse('hello.html')

# The predicate [last()] selects the last element
result = html.xpath('//li[last()]/a/@href')
print(result)

Running results

['link5.html']

7. Get the content of the second-to-last element

# xpath_li.py
from lxml import etree

html = etree.parse('hello.html')
result = html.xpath('//li[last()-1]/a')

# The text attribute holds the element's text content
print(result[0].text)

Running results

fourth item

8. Get the tag name of the element whose class is bold

# xpath_li.py
from lxml import etree

html = etree.parse('hello.html')
result = html.xpath('//*[@class="bold"]')

# The tag attribute holds the tag name
print(result[0].tag)

Running results

span

Data extraction with the BeautifulSoup module and CSS selectors (extension)

BeautifulSoup is an efficient web page parsing library that extracts data from HTML or XML files. It supports different parsers, for example for HTML, XML, and HTML5. It is a powerful and convenient tool for crawlers: with it we can pull information out of web pages without writing regular expressions.

# Install: pip3 install beautifulsoup4

# Tag selectors

### Selecting by tag

#### .string -- get the text content of a node

html = """ <html> <head> <title>The Dormouse's story</title> </head> <body> <p class="title" name="dromouse"><b><span>The Dormouse's story</span></b></p> <p class="story">Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>, <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>; and they lived at the bottom of a well.</p> <p class="story">...</p> """

from bs4 import BeautifulSoup # import the package

soup = BeautifulSoup(html, 'lxml') # argument 1: the HTML to parse; argument 2: the parser

# print(soup.prettify()) # pretty-print, with missing tags completed

print(soup.html.head.title.string)

print(soup.title.string) # title is a node; the .string attribute returns its text

# Select the whole head, including the tag itself

print(soup.head) # everything in head, including the head tag

print(soup.p) # returns the first match

### Getting the name

#### .name -- get the name of the tag itself

html = """ <html><head><title>The Dormouse's story</title></head> <body> <p class="title" name="dromouse"><b>The Dormouse's story</b></p> <p class="story">Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>, <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>; and they lived at the bottom of a well.</p> <p class="story">...</p> """

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.title.name) # The result is the tag itself --> title

print(soup.p.name) # --> Get the tag name

### Getting attribute values

#### .attrs -- get attribute values through the attribute dictionary

html = """ <html><head><title>The Dormouse's story</title></head> <body> <p class="title asdas" name="abc" id = "qwe"><b>The Dormouse's story</b></p> <p class="story">Once upon a time there were three little sisters; and their names were <a href="http://example.com/123" class="sister" id="link1"><!-- Elsie --></a>, <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>; and they lived at the bottom of a well.</p> <p class="story">...</p> """

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.p.attrs['name']) # get the value of the name attribute of the p tag

print(soup.p.attrs['id']) # get the value of the id attribute of the p tag

print(soup.p['id']) # second form

print(soup.p['class']) # a multi-valued class is returned as a list

print(soup.a['href']) # again, only the first matching tag is used

### Nested selection

The tags must be in a parent-child relationship

html = """ <html><head><title>The Dormouse's story</title></head> <body> <p class="title" name="dromouse"><b>The abc Dormouse's story</b></p> <p class="story">Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>, <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>; and they lived at the bottom of a well.</p> <p class="story">...</p> """

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.body.p.b.string) # Look down layer by layer

### Child nodes and descendant nodes

html = """ <html> <head> <title>The Dormouse's story</title> </head> <body> <p class="story"> Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" id="link1"> <span>Elsie</span> </a> <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a> and they lived at the bottom of a well. </p> <p class="story">...</p> """

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

# A tag selector returns only the first match. How do we get everything?

# The .contents attribute returns the child nodes of a tag as a list

# print(soup.p.contents) # get all child nodes of the p tag as a list

for i in soup.p.contents:
    print(i)


html = """ <html> <head> <title>The Dormouse's story</title> </head> <body> <p class="story"> Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" id="link1"> <span>Elsie</span> </a> <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a> and they lived at the bottom of a well. </p> <p class="story">...</p> """

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

# .children is an iterator over the child nodes (not a list)

print(soup.p.children) # get the child nodes; returns an iterator

for i in soup.p.children:
    print(i)

for i, child in enumerate(soup.p.children):
    print(i, child)


html = """ <html> <head> <title>The Dormouse's story</title> </head> <body> <p class="story"> Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" id="link1"> <span>Elsie</span> </a> <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a> and they lived at the bottom of a well. </p> <p class="story">...</p> """

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.p.descendants) # get the descendant nodes; returns an iterator

for i, child in enumerate(soup.p.descendants):
    print(i, child)

### Parent node and ancestor nodes

html = """ <html> <head> <title>The Dormouse's story</title> </head> <body> <p class="story"> Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" id="link1"> <span>Elsie</span> </a> <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a> and they lived at the bottom of a well. </p> <p class="story">...</p> """

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.a.parent) # Get parent node


html = """ <html> <head> <title>The Dormouse's story</title> </head> <body> <p class="story"> Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" id="link1"> <span>Elsie</span> </a> <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a> and they lived at the bottom of a well. </p> <p class="story">...</p> """

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(list(enumerate(soup.a.parents))) # Get ancestor nodes

### Sibling nodes

html = """ <html> <head> <title>The Dormouse's story</title> </head> <body> <p class="story"> <span>abcqweasd</span> Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" class="sister" id="link1"> <span>Elsie</span> </a> <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a> and they lived at the bottom of a well. </p> <p class="story">...</p> """

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(list(enumerate(soup.a.next_siblings))) # all following sibling nodes

print('---'*15)

print(list(enumerate(soup.a.previous_siblings))) # all preceding sibling nodes

## Practical: standard selectors

### find_all( name , attrs , recursive , text , **kwargs )

Searches the document by tag name, attributes, or content

#### Searching by tag name with find_all

html=''' <div class="panel"> <div class="panel-heading"> <h4>Hello</h4> </div> <div class="panel-body"> <ul class="list" id="list-1"> <li class="element">Foo</li> <li class="element">Bar</li> <li class="element">Jay</li> </ul> <ul class="list list-small" id="list-2"> <li class="element">Foo-2</li> <li class="element">Bar-2</li> </ul> </div> </div> '''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.find_all('ul')) # get all ul tags and their contents

print(soup.find_all('ul')[0])

ul = soup.find_all('ul')

print(ul) # get all ul tags and their contents

print('____________'*10)

for ul in soup.find_all('ul'):
    # print(ul) # iterate over the ul tags
    for li in ul:
        # print(li) # iterate over the children of ul (li tags and whitespace text nodes)
        print(li.string) # get the text content of each child

#### Getting the text values

for ul in soup.find_all('ul'):
    for i in ul.find_all("li"):
        print(i.string)

#### Searching by attributes

html=''' <div class="panel"> <div class="panel-heading"> <h4>Hello</h4> </div> <div class="panel-body"> <ul class="list" id="list-1" name="elements"> <li class="element">Foo</li> <li class="element">Bar</li> <li class="element">Jay</li> </ul> <ul class="list list-small" id="list-2"> <li class="element">Foo</li> <li class="element">Bar</li> </ul> </div> </div> '''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

# First form: through attrs

# print(soup.find_all(attrs={'id': 'list-1'})) # by the id attribute

print("-----"*10)

# print(soup.find_all(attrs={'name': 'elements'})) # by the name attribute

for ul in soup.find_all(attrs={'name': 'elements'}):
    print(ul)
    print(ul.li.string) # only the first li is returned
    # print('-----')
    for li in ul:
        # print(li)
        print(li.string)

#### Searching by special attributes

html=''' <div class="panel"> <div class="panel-heading"> <h4>Hello</h4> </div> <div class="panel-body"> <ul class="list" id="list-1"> <li class="element">Foo</li> <li class="element">Bar</li> <li class="element">Jay</li> </ul> <ul class="list list-small" id="list-2"> <li class="element">Foo</li> <li class="element">Bar</li> </ul> </div> </div> '''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

# Second form

print(soup.find_all(id='list-1'))

print(soup.find_all(class_='element')) # class is a Python keyword, so a trailing underscore is added

# Recommended form: search li tags by their class attribute

print(soup.find_all('li', {'class': 'element'}))

#### Selecting by text value with text (newer versions of Beautiful Soup also accept string)

html=''' <div class="panel"> <div class="panel-heading"> <h4>Hello</h4> </div> <div class="panel-body"> <ul class="list" id="list-1"> <li class="element">Foo</li> <li class="element">Bar</li> <li class="element">Jay</li> </ul> <ul class="list list-small" id="list-2"> <li class="element">Foo</li> <li class="element">Bar</li> </ul> </div> </div> '''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.find_all(text='Foo')) # can be used to count occurrences of a text

print(soup.find_all(text='Bar'))

print(len(soup.find_all(text='Foo'))) # number of occurrences

### find( name , attrs , recursive , text , **kwargs )

find returns a single element; find_all returns all matching elements

html=''' <div class="panel"> <div class="panel-heading"> <h4>Hello</h4> </div> <div class="panel-body"> <ul class="list" id="list-1"> <li class="element">Foo</li> <li class="element">Bar</li> <li class="element">Jay</li> </ul> <ul class="list list-small" id="list-2"> <li class="element">Foo</li> <li class="element">Bar</li> </ul> </div> </div> '''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(soup.find('ul')) # Only the first one that matches is returned

print(soup.find('li'))

print(soup.find('page')) # If the tag does not exist, return None

### find_parents() find_parent()

find_parents() returns all ancestor nodes; find_parent() returns the direct parent.

### find_next_siblings() find_next_sibling()

find_next_siblings() returns all following sibling nodes; find_next_sibling() returns the first following sibling.

### find_previous_siblings() find_previous_sibling()

find_previous_siblings() returns all preceding sibling nodes; find_previous_sibling() returns the first preceding sibling.

### find_all_next() find_next()

find_all_next() returns all matching nodes after the node; find_next() returns the first matching node after it

### find_all_previous() and find_previous()

find_all_previous() returns all matching nodes before the node; find_previous() returns the first matching node before it
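These navigation methods have no example of their own, so here is a minimal sketch. It assumes a shortened version of the list used earlier and the built-in html.parser, so that it runs without lxml installed.

```python
from bs4 import BeautifulSoup

# Shortened sample list (an assumption for illustration)
html = """
<ul id="list-1">
  <li class="element">Foo</li>
  <li class="element">Bar</li>
  <li class="element">Jay</li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")
bar = soup.find_all("li")[1]  # start from the middle item

parent_id = bar.find_parent("ul")["id"]                      # direct parent matching ul
ancestors = [tag.name for tag in bar.find_parents()]         # every ancestor, nearest first
next_one = bar.find_next_sibling("li").string                # first following sibling
prev_one = bar.find_previous_sibling("li").string            # first preceding sibling
later = [li.string for li in bar.find_all_next("li")]        # matching nodes after bar
earlier = [li.string for li in bar.find_all_previous("li")]  # matching nodes before bar

print(parent_id, next_one, prev_one)  # list-1 Jay Foo
print(later, earlier)                 # ['Jay'] ['Foo']
```

Passing a tag name such as "li" filters out the whitespace text nodes that sit between the tags.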

## CSS selectors

Pass a CSS selector directly to select() to make the selection

If you are familiar with CSS selectors in HTML, consider this approach

Notes:

1. When writing CSS selectors, tag names are written as-is, class names are prefixed with . and id names are prefixed with #

2. The method is soup.select(); it returns a list

3. Multiple conditions are separated by spaces and are applied level by level, from left to right

html=''' <div class="pan">q321312321</div> <div class="panel"> <div class="panel-heading"> <h4>Hello</h4> </div> <div class="panel-body"> <ul class="list" id="list-1"> <li class="element">Foo</li> <li class="element">Bar</li> <li class="element">Jay</li> </ul> <ul class="list list-small" id="list-2"> <li class="element">Foo</li> <li class="element">Bar</li> </ul> </div> </div> '''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

# Hierarchy: ul li

print(soup.select('ul li')) # tag names are written as-is

print("----"*10)

print(soup.select('.panel .panel-heading')) # class names are prefixed with .

print("----"*10)

print(soup.select('#list-1 .element'))

print("----"*10)


html=''' <div class="panel"> <div class="panel-heading"> <h4>Hello</h4> </div> <div class="panel-body"> <ul class="list" id="list-1"> <li class="element">Foo</li> <li class="element">Bar</li> <li class="element">Jay</li> </ul> <ul class="list list-small" id="list-2"> <li class="element">Foo</li> <li class="element">Bar</li> </ul> </div> </div> '''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

for ul in soup.select('ul'):
    for i in ul.select('li'):
        print(i.string)

### Getting attributes

html=''' <div class="panel"> <div class="panel-heading"> <h4>Hello</h4> </div> <div class="panel-body"> <ul class="list" id="list-1"> <li class="element">Foo</li> <li class="element">Bar</li> <li class="element">Jay</li> </ul> <ul class="list list-small" id="list-2"> <li class="element">Foo</li> <li class="element">Bar</li> </ul> </div> </div> '''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

# ul['id'] reads the id attribute; ul.attrs['class'] reads the class attribute

for ul in soup.select('ul'):
    print(ul['id'])
    print(ul.attrs['class'])
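As a minimal sketch of what these two lookups return, the one-line markup below is an illustrative assumption. It also shows Tag.get(), which is not used in the original example, as a way to read an attribute that may be absent.

```python
from bs4 import BeautifulSoup

# Illustrative one-element document
html = '<ul class="list list-small" id="list-2"><li>Foo</li></ul>'
soup = BeautifulSoup(html, 'html.parser')
ul = soup.select('ul')[0]

ul_id = ul['id']                # single-valued attribute: a string
ul_classes = ul.attrs['class']  # class is multi-valued: a list of names
missing = ul.get('title')       # absent attribute: None, no KeyError

print(ul_id, ul_classes, missing)
```

Indexing with ul['title'] would raise KeyError here, so get() is the safer choice when an attribute is optional.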

### Get content

### get_text()

html=''' <div class="panel"> <div class="panel-heading"> <h4>Hello</h4> </div> <div class="panel-body"> <ul class="list" id="list-1"> <li class="element">Foo</li> <li class="element">Bar</li> <li class="element">Jay</li> </ul> <ul class="list list-small" id="list-2"> <li class="element2">Foo</li> <li class="element2">Bar</li> </ul> </div> </div> '''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

for li in soup.select('li'):
    print(li.string)
    print(li.get_text()) # Get the text content
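In the example above both calls print the same text, because each li holds a single string. The sketch below, which uses made-up markup with a nested tag, shows where the two differ: .string is None once an element has more than one child, while get_text() joins all nested text.

```python
from bs4 import BeautifulSoup

# Illustrative markup: the second <li> contains text plus a nested <b> tag
html = '<ul><li class="element">Foo</li><li class="element">Bar <b>Jay</b></li></ul>'
soup = BeautifulSoup(html, 'html.parser')
simple, nested = soup.select('li')

simple_string = simple.string    # one child string, so it is returned
nested_string = nested.string    # two children (text and <b>), so None
nested_text = nested.get_text()  # concatenates all descendant text

print(simple_string, nested_string, nested_text)
```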

* The lxml parser is recommended; use html.parser if necessary

* Selecting by tag name offers only weak filtering, but it is fast

* Use find() to match a single result and find_all() to match multiple results

* If you are familiar with CSS selectors, select() is recommended

* Remember the common ways to get attribute values and text values
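The query styles in this summary can be compared side by side. This is a sketch on illustrative markup; select_one() does not appear in the examples above and is included as the single-result counterpart of select().

```python
from bs4 import BeautifulSoup

# Illustrative markup
html = '''
<ul class="list" id="list-1">
  <li class="element">Foo</li>
  <li class="element">Bar</li>
</ul>
'''
soup = BeautifulSoup(html, 'html.parser')

# find() returns the first match, find_all() returns every match
first_found = soup.find('li', class_='element')
all_found = soup.find_all('li', class_='element')

# The CSS selector equivalents
first_selected = soup.select_one('li.element')
all_selected = soup.select('li.element')

found_texts = [li.get_text() for li in all_found]
selected_texts = [li.get_text() for li in all_selected]

print(first_found.get_text(), first_selected.get_text())
print(found_texts, selected_texts)
```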

Data extraction: CSS selectors

CSS syntax summary

Readers familiar with front-end development will already know CSS selectors. jQuery, for example, performs its DOM operations through CSS selector syntax.
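A few common selector forms can be sketched with BeautifulSoup's select(). The markup is an illustrative assumption, and the selection of forms shown here (child combinator, compound class, attribute selector, nth-of-type) is a sample rather than a complete syntax reference.

```python
from bs4 import BeautifulSoup

# Illustrative markup
html = '''
<div id="box">
  <ul id="list-1">
    <li class="element">Foo</li>
    <li class="element active">Bar</li>
  </ul>
  <a href="/about/">About</a>
</div>
'''
soup = BeautifulSoup(html, 'html.parser')

# "A > B": B must be a direct child of A
direct_lists = soup.select('#box > ul')

# "tag.a.b": the tag must carry both classes
active = [li.get_text() for li in soup.select('li.element.active')]

# "[attr=value]": match on an attribute value
about_links = [a.get_text() for a in soup.select('a[href="/about/"]')]

# ":nth-of-type(n)": the n-th sibling of that tag type
second = [li.get_text() for li in soup.select('li:nth-of-type(2)')]

print(len(direct_lists), active, about_links, second)
```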

Data extraction performance comparison

Using CSS selectors in a crawler: code walkthrough

>>> from requests_html import HTMLSession

>>> session = HTMLSession()

# Returns a Response object

>>> r = session.get('https://python.org/')

# Get all the links

>>> r.html.links

{'/users/membership/', '/about/gettingstarted/'}

# Get an element with a CSS selector

>>> about = r.html.find('#about')[0]

>>> print(about.text)

About

Applications

Quotes

Getting Started

Help

Python Brochure

边栏推荐

- 增强:BTE流程简介

- Modification case of Ruixin micro rk3399-i2c4 mounting EEPROM

- QingChuang technology joined dragon lizard community to build a new ecosystem of intelligent operation and maintenance platform

- 【pytorch】ResNet18、ResNet20、ResNet34、ResNet50网络结构与实现

- A quick overview of transformer quantitative papers in emnlp 2020

- SQL labs SQL injection platform - level 1 less-1 get - error based - Single Quotes - string (get single quote character injection based on errors)

- DEMO:PA30 银行国家码默认CN 增强

- Lua迭代器

- Installation and use of apifox

- 【Golang】golang开发微信公众号网页授权功能

猜你喜欢

【已解决】单点登录成功SSO转发,转发URL中带参数导致报错There was an unexpected error (type=Internal Server Error, status=500)

How to update PIP3? And running PIP as the 'root' user can result in broken permissions and conflicting behavior

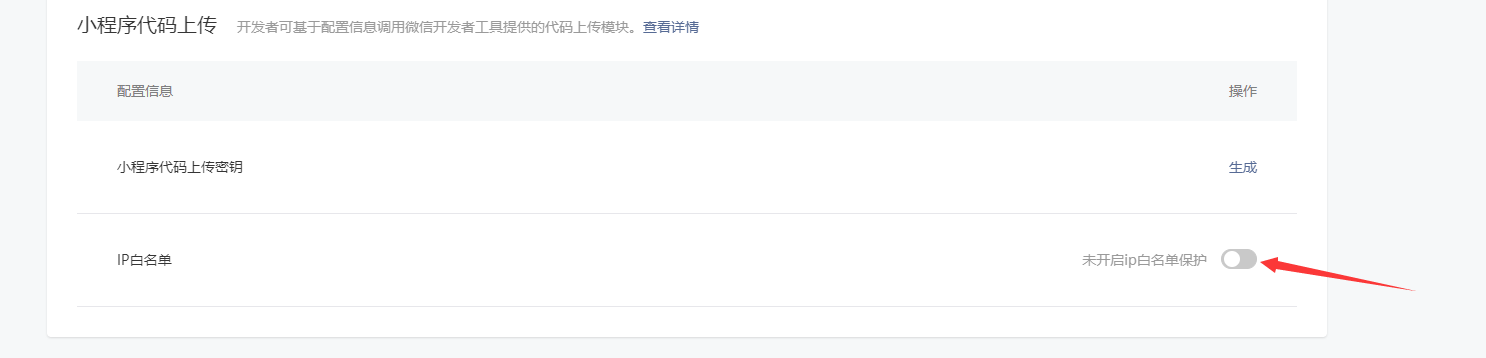

【小程序】uniapp发行微信小程序上传失败Error: Error: {'errCode':-10008,'errMsg':'invalid ip...

Do me a favor ~ don't pay attention, don't log in, a questionnaire in less than a minute

The seta 2020 international academic conference will be held soon. Welcome to attend!

Promise详解

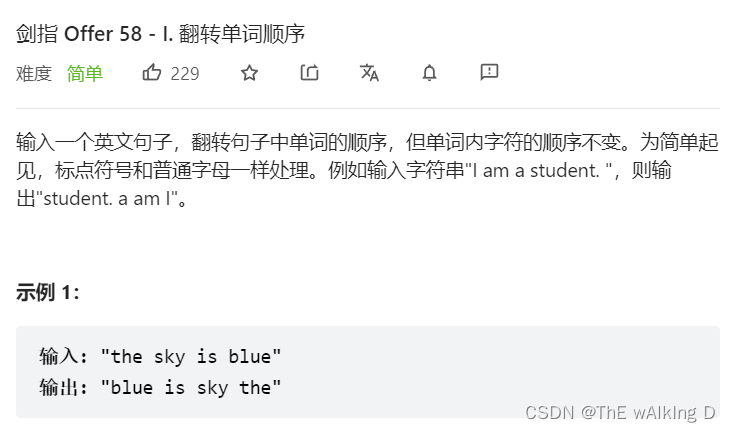

剑指 Offer 58 - I. 翻转单词顺序

Lu Xun: I don't remember saying it, or you can check it yourself!

![[resolved] SSO forwarding succeeded, and there was an unexpected error (type=internal server error, status=500) caused by parameters in the forwarding URL](/img/05/41f48160fa7895bc9e4f314ec570c5.png)

[resolved] SSO forwarding succeeded, and there was an unexpected error (type=internal server error, status=500) caused by parameters in the forwarding URL

How to analyze and locate problems in 60 seconds?

随机推荐

2020 International Machine Translation Competition: Volcano translation won five championships

为啥国内大厂都把云计算当成香饽饽,这个万亿市场你真的了解吗

JS存取cookie示例

Things come to conform, the future is not welcome, at that time is not miscellaneous, neither love

Modification case of Ruixin micro rk3399-i2c4 mounting EEPROM

SQL labs SQL injection platform - level 1 less-1 get - error based - Single Quotes - string (get single quote character injection based on errors)

Resttemplate connection pool configuration

鲁迅:我不记得说没说过,要不你自己查!

「翻译」SAP变式物料的采购如何玩转?看看这篇你就明白了

Comprehensive analysis of ADC noise-01-types of ADC noise and ADC characteristics

HU相关配置

杂谈:手里有竿儿,肩上有网,至于背篓里有多少鱼真的重要吗?

End of year summary

C#winform 窗体事件和委托结合用法

mqtt指令收发请求订阅

物联网工业级UART串口转WiFi转有线网口转以太网网关WiFi模块选型

[resolved] SSO forwarding succeeded, and there was an unexpected error (type=internal server error, status=500) caused by parameters in the forwarding URL

Gossip: is rotting meat in the pot to protect students' rights and interests?

Demo:pa30 Bank Country Code default CN enhancement

Happy holidays, everyone