
Statistical learning notes: maximum likelihood estimation (MLE) and maximum a posteriori estimation (MAP)

2022-06-12 07:30:00 【Turned_ MZ】


Likelihood function and probability function

The word “likelihood” means something close to “probability”. Consider the expression:

$$P(x \mid \theta)$$

It has two inputs: x denotes a specific data point, and θ denotes the parameters of the model.

If θ is known and fixed while x varies, the expression is called the probability function: it describes how probable each possible sample point x is.

If x is known and fixed while θ varies, the expression is called the likelihood function: for different values of the model parameters, it describes how probable it is to observe this particular sample point x.
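A minimal Python sketch of the two readings, assuming (for illustration only; the article does not fix a model) a single coin flip with P(x | θ) = θ^x (1 - θ)^(1 - x). With θ fixed, the values over x = 0, 1 sum to 1. With the observation x = 1 fixed, the same expression becomes L(θ) = θ, whose area over [0, 1] is 0.5, so the likelihood is not a probability distribution over θ. The helper name `bernoulli_pmf` is hypothetical.

```python
def bernoulli_pmf(x: int, theta: float) -> float:
    """P(x | theta) for one coin flip: x = 1 (heads) or x = 0 (tails)."""
    return theta ** x * (1 - theta) ** (1 - x)


# Probability function: theta is fixed, x varies over the possible outcomes.
theta = 0.7
probs = {x: bernoulli_pmf(x, theta) for x in (0, 1)}
total_prob = sum(probs.values())  # sums to 1 over x

# Likelihood function: the observation x = 1 is fixed, theta varies.
x_observed = 1
thetas = [0.1, 0.3, 0.5, 0.7, 0.9]
likelihoods = {t: bernoulli_pmf(x_observed, t) for t in thetas}

# Midpoint Riemann sum of L(theta) = theta over [0, 1]: about 0.5, not 1.
n_steps = 1000
area = sum(bernoulli_pmf(x_observed, (i + 0.5) / n_steps) for i in range(n_steps)) / n_steps

print("P(x | theta=0.7):", probs, "sum =", round(total_prob, 6))
print("L(theta | x=1):", likelihoods)
print("area under L(theta):", round(area, 6))
```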

Maximum likelihood estimation

Informally, maximum likelihood estimation uses the information in the observed samples to work backward to the model parameter values that are most likely (have the highest probability) to have produced those samples.

Characteristics: it is simple and widely applicable; it usually converges well as the number of training samples grows; it assumes that all samples are independent and identically distributed (i.i.d.).

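Under the i.i.d. assumption, the probability of the whole sample set X = {x_1, x_2, ..., x_n} is:

$$P(X \mid \theta) = \prod_{i=1}^{n} P(x_i \mid \theta)$$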

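The goal is to find the θ that maximizes this probability. To simplify the calculation, take the logarithm, which turns the product into a sum:

$$L(\theta) = \log P(X \mid \theta) = \sum_{i=1}^{n} \log P(x_i \mid \theta), \qquad \hat{\theta}_{\mathrm{MLE}} = \arg\max_{\theta} L(\theta)$$

Because the logarithm is strictly increasing, maximizing L(θ) gives the same θ as maximizing the original probability.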
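The logarithm also helps numerically, not just algebraically. A minimal sketch, assuming 2,000 hypothetical i.i.d. samples that each have probability 0.5 under the model: the raw product underflows to 0.0 in double-precision floating point, while the log-likelihood stays a finite number that can still be compared across candidate values of θ.

```python
import math

# Hypothetical per-sample probabilities P(x_i | theta) for 2,000 i.i.d. samples.
per_sample = [0.5] * 2000

# Direct product: 0.5 ** 2000 is about 1e-602, below the smallest positive
# double (about 5e-324), so the running product underflows to 0.0.
product = 1.0
for p in per_sample:
    product *= p

# Log-likelihood: a sum of logs, which stays in a comfortable numeric range.
log_likelihood = sum(math.log(p) for p in per_sample)

print(product)         # 0.0
print(log_likelihood)  # about -1386.29 (= 2000 * log 0.5)
```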

To maximize L, take its derivative with respect to θ, set the derivative to 0, and solve for θ (when no closed-form solution exists, the maximum is found numerically instead).
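As a worked instance of “differentiate and set to zero”, consider k heads in n i.i.d. coin flips with P(x_i = 1 | θ) = θ (an illustrative model, not one specified in the article). Then L(θ) = k log θ + (n - k) log(1 - θ), dL/dθ = k/θ - (n - k)/(1 - θ), and setting it to 0 gives θ = k/n. The sketch below checks that closed form against a brute-force grid search; the data and the function name `log_likelihood` are hypothetical.

```python
import math


def log_likelihood(theta: float, data: list[int]) -> float:
    """L(theta) = sum_i log P(x_i | theta) for i.i.d. Bernoulli samples."""
    return sum(math.log(theta) if x == 1 else math.log(1 - theta) for x in data)


data = [1, 0, 1, 1, 0, 1, 1, 0, 1, 1]  # hypothetical flips: 7 heads, 3 tails
k, n = sum(data), len(data)

# Closed form from dL/dtheta = k/theta - (n - k)/(1 - theta) = 0.
theta_closed_form = k / n

# Brute-force check: evaluate L on a grid strictly inside (0, 1), take the argmax.
grid = [i / 1000 for i in range(1, 1000)]
theta_grid = max(grid, key=lambda t: log_likelihood(t, data))

print(theta_closed_form, theta_grid)  # 0.7 0.7
```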

Maximum a posteriori estimation

Maximum a posteriori (MAP) estimation seeks the parameter vector θ that maximizes P(X|θ)P(θ). It is a point estimate of an unobserved quantity obtained from empirical data. It is similar to maximum likelihood estimation, but the key difference is that MAP incorporates a prior distribution over the quantity being estimated, so it can be regarded as regularized maximum likelihood estimation. Maximum likelihood estimation can be understood as the MAP estimator whose prior P(θ) is uniform. (A drawback of MAP: if we apply an arbitrary nonlinear transformation to the parameter space, such as θ → log θ, the probability density P(θ) changes accordingly, so the MAP estimate in the new parameterization generally does not correspond to the original one; MAP is not invariant under reparameterization.)
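The “regularized maximum likelihood” view can be checked on a small case. Assume, for illustration only, samples x_i ~ N(μ, σ²) with known σ and a Gaussian prior μ ~ N(0, τ²). Up to constants, the negative log posterior is Σ(x_i - μ)²/(2σ²) + μ²/(2τ²): a squared-error term plus an L2 penalty with strength λ = σ²/τ², minimized at μ = Σx_i / (n + σ²/τ²). The sketch compares that closed form with a grid search; the data, σ, τ, and the function name `neg_log_posterior` are hypothetical.

```python
def neg_log_posterior(mu: float, data: list[float], sigma: float, tau: float) -> float:
    """-log P(X | mu) - log P(mu), dropping constants, for a N(mu, sigma^2)
    likelihood and a N(0, tau^2) prior."""
    data_term = sum((x - mu) ** 2 for x in data) / (2 * sigma ** 2)  # likelihood part
    penalty = mu ** 2 / (2 * tau ** 2)                               # L2 penalty from the prior
    return data_term + penalty


data = [2.1, 1.7, 2.6, 2.2]  # hypothetical observations
sigma, tau = 1.0, 0.5        # assumed known noise std and prior std

mu_mle = sum(data) / len(data)                            # sample mean (no prior)
mu_map = sum(data) / (len(data) + sigma ** 2 / tau ** 2)  # closed form, lambda = sigma^2 / tau^2

# Brute-force check of the closed form on a grid with step 0.001.
grid = [i / 1000 for i in range(-3000, 3001)]
mu_grid = min(grid, key=lambda m: neg_log_posterior(m, data, sigma, tau))

print(f"MLE={mu_mle:.3f}  MAP={mu_map:.3f}  grid={mu_grid:.3f}")
```

Here the prior, centred at 0, shrinks the estimate from the sample mean toward 0; a larger τ (a weaker prior) makes λ smaller, and the MAP estimate then approaches the MLE.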

By Bayes' theorem:

$$P(\theta \mid X) = \frac{P(X \mid \theta)\, P(\theta)}{P(X)}$$

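P(X) depends only on the data that has already been observed, not on θ, so it is a constant. Maximizing the posterior probability P(θ|X) is therefore equivalent to maximizing P(X|θ)P(θ), or, after taking logarithms as in MLE:

$$\hat{\theta}_{\mathrm{MAP}} = \arg\max_{\theta} P(X \mid \theta)\, P(\theta) = \arg\max_{\theta} \left[ \log P(X \mid \theta) + \log P(\theta) \right]$$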

This objective is solved the same way as in maximum likelihood estimation: take its derivative with respect to θ, set the derivative to 0, and solve.
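Continuing with a coin-flip model (an assumed example, not the article's), put a Beta(α, β) prior on θ. Dropping constants, the log posterior is (k + α - 1) log θ + (n - k + β - 1) log(1 - θ); setting its derivative to 0 gives θ = (k + α - 1)/(n + α + β - 2), assuming both exponents are positive so the maximum is interior. With α = β = 1 (a uniform prior) this reduces to k/n, the MLE. The function names and data are hypothetical.

```python
import math


def map_estimate(k: int, n: int, alpha: float, beta: float) -> float:
    """Closed-form MAP for a Bernoulli parameter under a Beta(alpha, beta) prior.

    Assumes k + alpha - 1 > 0 and n - k + beta - 1 > 0 (interior maximum).
    """
    return (k + alpha - 1) / (n + alpha + beta - 2)


def log_posterior(theta: float, k: int, n: int, alpha: float, beta: float) -> float:
    """log P(X | theta) + log P(theta), dropping terms that do not depend on theta."""
    return (k + alpha - 1) * math.log(theta) + (n - k + beta - 1) * math.log(1 - theta)


k, n = 7, 10  # hypothetical data: 7 heads in 10 flips

# Uniform prior Beta(1, 1): MAP coincides with the MLE k / n.
print(map_estimate(k, n, 1, 1))  # 0.7

# Beta(5, 5) prior centred at 0.5: the estimate moves toward 0.5.
theta_map = map_estimate(k, n, 5, 5)
grid = [i / 1000 for i in range(1, 1000)]
theta_grid = max(grid, key=lambda t: log_posterior(t, k, n, 5, 5))
print(theta_map, theta_grid)
```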

The difference between MAP and MLE

The biggest difference between MAP and MLE is that MAP adds a probability distribution over the model parameters themselves; put differently, MLE treats the probability of the model parameters as uniform, that is, as a constant. MAP lets us bring prior knowledge into the estimate, which is useful when there are very few samples: with few samples, the observations are likely to be skewed, and the prior then “pulls” the estimate toward the prior value, so the resulting posterior peaks near the prior. By adjusting the parameters of the prior distribution, for example the parameters of a Beta distribution, we can also control how strongly the estimate is pulled toward the prior: the larger these parameters, the sharper the peak and the stronger the pull. Parameters like these are what we call the model's “hyperparameters”.
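The pull toward the prior, and how the hyperparameters control it, can be seen with the Beta-Bernoulli MAP formula (an assumed model chosen for illustration) under a symmetric Beta(a, a) prior centred at 0.5. With only 3 flips, all heads, the MLE is the extreme value 1.0, and larger a pulls the estimate harder toward 0.5; with 300 flips the data dominate and every estimate stays close to the MLE. The counts and function names are hypothetical.

```python
def map_estimate(k: int, n: int, a: float, b: float) -> float:
    """Mode of the Beta(k + a, n - k + b) posterior (assumes an interior maximum)."""
    return (k + a - 1) / (n + a + b - 2)


def mle(k: int, n: int) -> float:
    """Maximum likelihood estimate of a Bernoulli parameter: fraction of heads."""
    return k / n


for k, n in [(3, 3), (210, 300)]:  # hypothetical (heads, flips): few vs many samples
    print(f"n={n}: MLE={mle(k, n):.3f}")
    for a in (1, 2, 10, 50):  # symmetric Beta(a, a) prior; larger a = stronger prior
        print(f"  Beta({a},{a}) prior -> MAP={map_estimate(k, n, a, a):.3f}")
```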

边栏推荐

- ROS dynamic parameter configuration: use of dynparam command line tool (example + code)

- Scons compiling imgui

- Explain in detail the use of dynamic parameter adjustment and topic communication in ROS (principle + code + example)

- Summary of software testing tools in 2021 - unit testing tools

- How to stop MySQL service under Linux

- RT thread studio learning (I) new project

- AI狂想|来这场大会,一起盘盘 AI 的新工具!

- 2022R2移动式压力容器充装试题模拟考试平台操作

- QT realization tray

- Understanding management - four dimensions of executive power

猜你喜欢

modelarts二

Introduction to JDE object management platform and use of from

Kotlin插件 kotlin-android-extensions

Detailed explanation of addressing mode in 8086

Principle and application of PWM

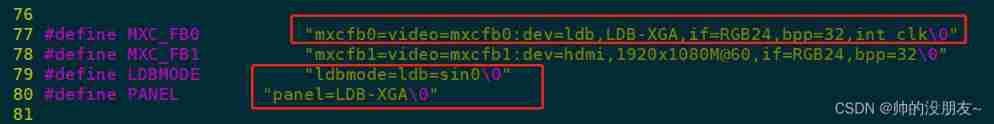

LVDS drive adapter

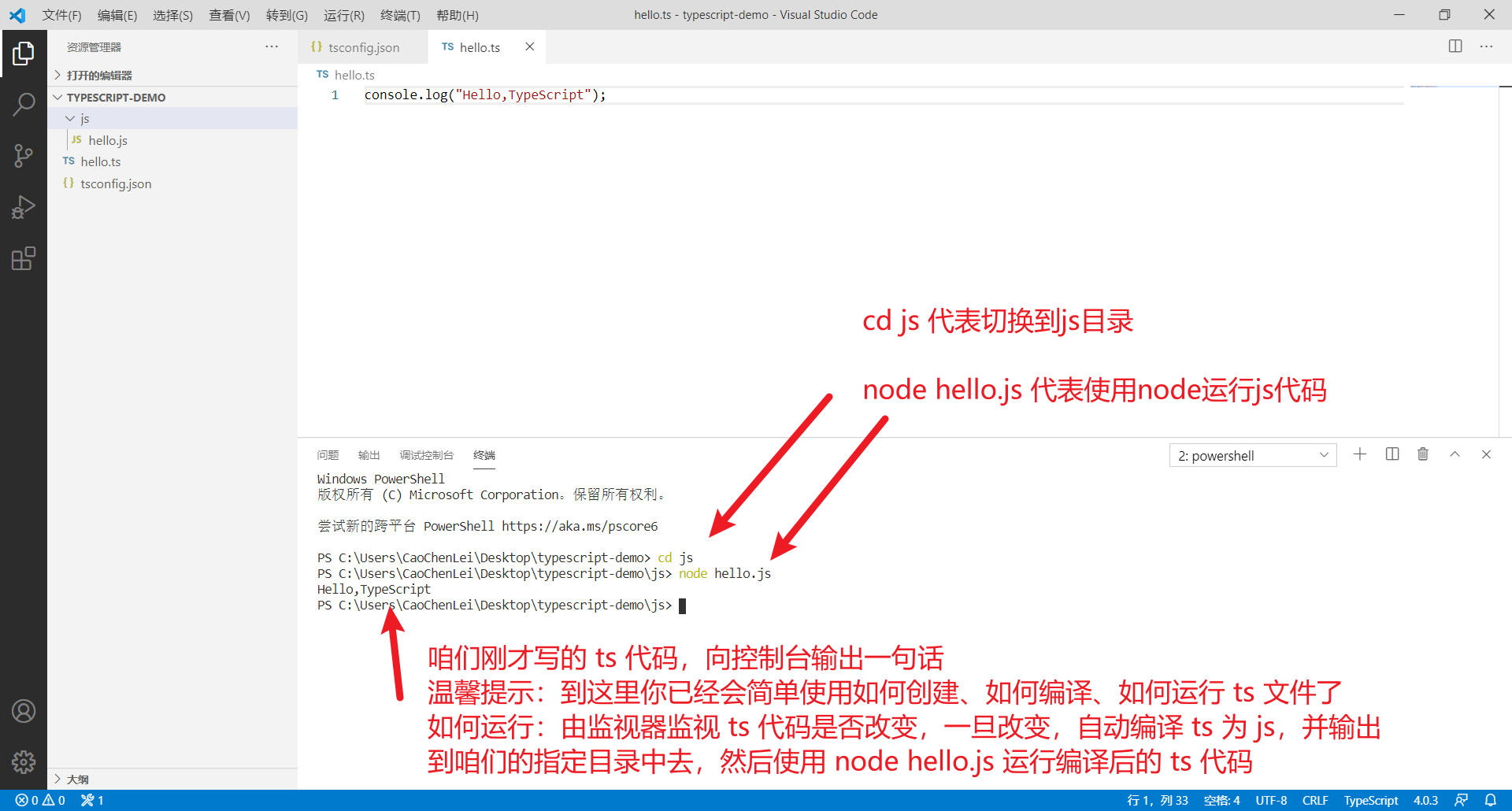

TypeScript基础知识全集

Pyhon的第五天

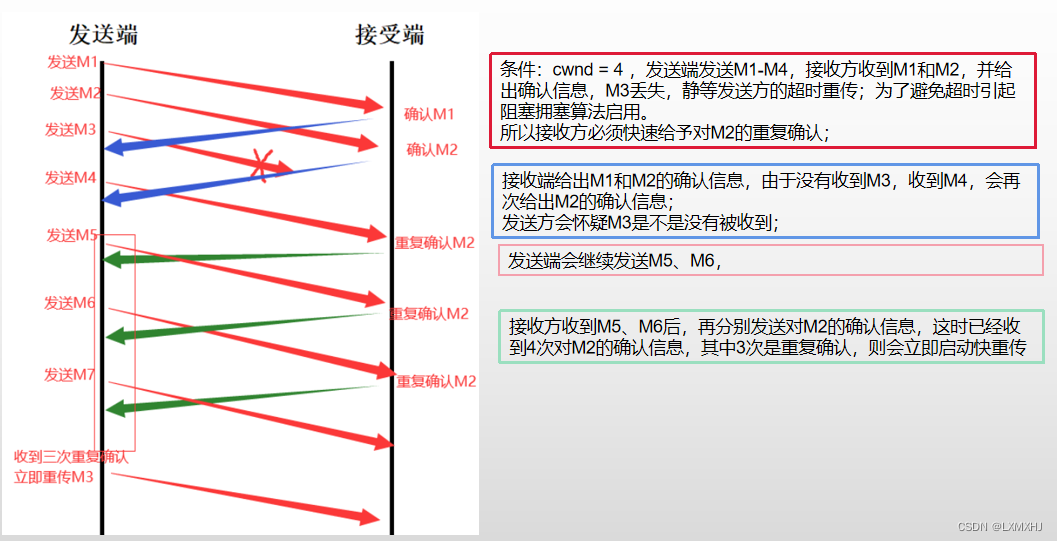

Interview computer network - transport layer

New knowledge: monkey improved app crawler

随机推荐

linux下怎么停止mysql服务

How to stop MySQL service under Linux

Missing getting in online continuous learning with neuron calibration thesis analysis + code reading

Expansion of D @nogc

Detailed explanation of addressing mode in 8086

Fcpx plug-in: simple line outgoing text title introduction animation call outs with photo placeholders for fcpx

C language sizeof strlen

Knife4j first use

Kotlin plug-ins kotlin Android extensions

Nine project management issues that PM should understand

tmux 和 vim 的快捷键修改

Question bank and answers of special operation certificate examination for safety management personnel of hazardous chemical business units in 2022

R语言使用RStudio将可视化结果保存为pdf文件(export--Save as PDF)

2022起重机械指挥考试题模拟考试平台操作

Federated meta learning with fast convergence and effective communication

Static coordinate transformation in ROS (analysis + example)

[college entrance examination] prospective college students look at it, choose the direction and future, and grasp it by themselves

Construction of running water lamp experiment with simulation software proteus

The first demand in my life - batch uploading of Excel data to the database

New knowledge: monkey improved app crawler