
Federated meta learning with fast convergence and effective communication

2022-06-12 07:16:00 【Programmer long】

The paper can be found here.

1. Introduction

Data in federated learning is not independent and identically distributed (non-IID). Building on the success of the FedAvg algorithm, the authors observed that the meta-learning algorithm MAML suits scenarios where each client has little data and the data are unevenly distributed across clients. They therefore proposed the FedMeta framework as a bridge between meta-learning and federated learning.

In meta-learning, a parameterized algorithm is learned slowly from a large number of tasks during meta-training. Within meta-training, the algorithm quickly trains a task-specific model for each task. A task consists of a support set and a query set, and the two are disjoint. The task-specific model is trained on the support set and then tested on the query set, and the test results are used to update the algorithm.

In FedMeta, the algorithm is maintained on the server and distributed to the clients for training. After training, each client uploads its test results on the query set to the server, which uses them to update the algorithm.
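The task structure can be made concrete with a minimal sketch that splits one client's local data into a disjoint support set and query set. The function name `split_support_query`, the 80/20 ratio and the toy samples are assumptions for illustration only. They are not taken from the paper or its code.

```python
import random

def split_support_query(samples, support_fraction=0.8, seed=0):
    """Split one client's samples into disjoint support and query sets (hypothetical helper)."""
    if not 0 < support_fraction < 1:
        raise ValueError("support_fraction must be in (0, 1)")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # shuffle a copy with a fixed seed
    cut = int(len(shuffled) * support_fraction)
    return shuffled[:cut], shuffled[cut:]  # support set, query set

# Hypothetical client data: 10 labelled samples (feature, label)
client_samples = [(i, i % 2) for i in range(10)]
support, query = split_support_query(client_samples, support_fraction=0.8)
print(len(support), len(query))  # 8 2
```

Every sample lands in exactly one of the two sets, which is the disjointness the description above requires.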

2. Algorithm

First, some definitions:

- $D_S^T$: support set
- $D_Q^T$: query set
- $A$: meta-learning algorithm
- $\phi$: meta-learning parameters
- $\theta_T$: model parameters

Following the idea of meta-learning, we first use algorithm $A$ to train the model $f$ on $D_S^T$, which outputs the updated model parameters $\theta_T$. This step is called the inner update. The trained $\theta_T$ is then evaluated on the query set $D_Q^T$, giving the test loss $L_{D_Q^T}(\theta_T)$. This loss reflects how well algorithm $A_\phi$ trains models. Finally, we update the parameters $\phi$ by minimizing this test loss. This step is called the outer update. Formally, the algorithm $A_\phi$ is obtained by optimizing the following objective:

$$\min_\phi \mathbb{E}_{T}\left[L_{D_Q^T}(\theta_T)\right]=\min_\phi \mathbb{E}_{T}\left[L_{D_Q^T}\left(A_\phi(D_S^T)\right)\right]$$

Taking MAML as an example, we start with $\phi=\theta$, so the meta-parameters are the initial model parameters.

We then run a few steps of gradient descent on $D_S^T$ using the support loss $L_{D_S^T}(\theta)=\frac{1}{|D_S^T|}\sum_{(x,y)\in D_S^T} l(f_\theta(x),y)$, which turns $\theta$ into $\theta_T$.

Next, $f_{\theta_T}$ is tested on $D_Q^T$, giving the test loss $L_{D_Q^T}(\theta_T)=\frac{1}{|D_Q^T|}\sum_{(x',y')\in D_Q^T} l(f_{\theta_T}(x'),y')$.

With a single gradient step and inner learning rate $\alpha$, we have $\theta_T=\theta-\alpha\nabla L_{D_S^T}(\theta)$. The minimization objective defined above therefore becomes:

$$\min_\theta \mathbb{E}_{T}\left[L_{D_Q^T}\left(\theta-\alpha\nabla L_{D_S^T}(\theta)\right)\right]$$
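As a sanity check of this one-step objective, here is a minimal pure-Python sketch on a hypothetical 1-D linear model $f_w(x)=w\cdot x$ with squared loss. For this model the gradient through the inner step can be written by hand.

The following values are made up for illustration:
- the two tasks;
- $\alpha$;
- the outer learning rate `beta`;
- the number of steps.

Practical MAML implementations compute these derivatives with automatic differentiation instead.

```python
def mse(w, data):
    """Mean squared error of the 1-D linear model f_w(x) = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def mse_grad(w, data):
    """dL/dw of the mean squared error above."""
    return sum(2 * x * (w * x - y) for x, y in data) / len(data)

def mse_curvature(data):
    """d^2L/dw^2 of the mean squared error (constant because the model is linear in w)."""
    return sum(2 * x * x for x, _ in data) / len(data)

def maml_step(theta, support, query, alpha):
    """Return theta_T, the query loss L_Q(theta_T) and its exact gradient w.r.t. theta."""
    theta_t = theta - alpha * mse_grad(theta, support)  # inner update on the support set
    query_loss = mse(theta_t, query)
    # Chain rule through the inner step: d(theta_T)/d(theta) = 1 - alpha * L_S''(theta)
    meta_grad = mse_grad(theta_t, query) * (1 - alpha * mse_curvature(support))
    return theta_t, query_loss, meta_grad

def meta_train(theta, tasks, alpha=0.05, beta=0.05, steps=200):
    """Outer update: gradient descent on the task-averaged query loss."""
    for _ in range(steps):
        grads = [maml_step(theta, s, q, alpha)[2] for s, q in tasks]
        theta -= beta * sum(grads) / len(grads)
    return theta

# Two hypothetical tasks, y = 2x and y = 3x, each as (support set, query set)
tasks = [
    ([(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]),
    ([(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)]),
]
theta = meta_train(0.0, tasks)
print(round(theta, 6))  # 2.5
```

The learned initialization 2.5 is not the best fit for either task on its own. It is the starting point from which one inner step gives the lowest average query loss over both tasks.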

That completes the meta-learning part. Next comes the federated learning part: how are the two combined?

The authors' idea is as follows:
1. Each client evaluates its adapted model on its query set to obtain the test loss.
2. The client computes the gradient of that loss and sends it to the server.
3. The server averages the gradients, updates its parameters with the averaged gradient, and sends the parameters back to the clients.

In other words, the client performs the inner update and the gradient-computation part of the outer update. The server performs the rest of the outer update: it merges the gradients and updates the parameters.
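The client/server division of labour can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions, not the repository's code:
- `weighted_average`, `fedmeta_round` and `toy_client_update` are hypothetical helpers.
- The client uses a 1-D linear model with one inner step.
- The client returns the query-loss gradient evaluated at $\theta_T$. This is a first-order simplification that does not differentiate through the inner step.
- Weighting clients by their support + query sample count is an assumption.
- The server applies plain gradient descent.

```python
def weighted_average(grads, weights):
    """Average per-client gradient vectors, weighting each client by its sample count."""
    total = sum(weights)
    return [sum(w * g[i] for g, w in zip(grads, weights)) / total for i in range(len(grads[0]))]

def fedmeta_round(phi, clients, client_update, outer_lr):
    """One round: send phi to every client, average the returned gradients, step on the server."""
    results = [client_update(list(phi), data) for data in clients]
    grads = [g for g, _ in results]
    counts = [n for _, n in results]
    avg = weighted_average(grads, counts)
    return [p - outer_lr * g for p, g in zip(phi, avg)]

def toy_client_update(phi, data, inner_lr=0.05):
    """Hypothetical client: f_w(x) = w * x, one inner step, first-order meta-gradient."""
    support, query = data
    (w,) = phi
    g_s = sum(2 * x * (w * x - y) for x, y in support) / len(support)
    w_t = w - inner_lr * g_s  # inner update on the support set
    g_q = sum(2 * x * (w_t * x - y) for x, y in query) / len(query)  # query gradient at theta_T
    return [g_q], len(support) + len(query)

# Hypothetical clients, each holding (support set, query set)
clients = [
    ([(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]),
    ([(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)]),
]
phi = [0.0]
for _ in range(100):
    phi = fedmeta_round(phi, clients, toy_client_update, outer_lr=0.05)
print(round(phi[0], 6))  # 2.5
```

Only gradients and sample counts travel from client to server, and only parameters travel back. That is the communication pattern described above.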

The procedure of the algorithm is shown in the figure.

If you are unfamiliar with MAML and meta-learning, or have questions about query sets and support sets, see my previous blog post (click here).

4. Code walkthrough

The GitHub repository for this algorithm is here. Much of the code implements the interaction between the clients and the server, which I won't go into. Instead I focus on the client training process and the server update process.

First, the client-side training (the inner update):

```python
import torch

support_loss, support_correct, support_num_sample = [], [], []
loss_sum = 0.0
for batch_idx, (x, y) in enumerate(support_data_loader):
    x, y = x.to(self.device), y.to(self.device)
    num_sample = y.size(0)
    pred = self.model(x)
    loss = self.criterion(pred, y)
    # Evaluation
    correct = self.count_correct(pred, y)
    # Record statistics; `loss` is the mean over this batch
    support_loss.append(loss.item())
    support_correct.append(correct)
    support_num_sample.append(num_sample)
    # Accumulate the batch-sum loss so batches of different sizes are weighted correctly
    loss_sum += loss * num_sample

# Derivative of the sample-averaged support loss w.r.t. the current parameters
grads = torch.autograd.grad(loss_sum / sum(support_num_sample), list(self.model.parameters()),
                            create_graph=True, retain_graph=True)
# Inner update: write the updated parameters theta_T back into the model in place
for p, g in zip(self.model.parameters(), grads):
    p.data.add_(g.data, alpha=-self.inner_lr)
```

This is the update based on the support set. The first `for` loop accumulates the support loss over all batches, and the gradient is computed from that loss. The second `for` loop uses the gradient to update the parameters.

The updated parameters are then used to compute the loss on the query set (the gradient-computation part of the outer update):

```python
import numpy as np
import torch

query_loss, query_correct, query_num_sample = [], [], []
loss_sum = 0.0
for batch_idx, (x, y) in enumerate(query_data_loader):
    x, y = x.to(self.device), y.to(self.device)
    num_sample = y.size(0)
    # self.model now holds the adapted parameters theta_T from the inner update
    pred = self.model(x)
    loss = self.criterion(pred, y)
    # Evaluation
    correct = self.count_correct(pred, y)
    # Record statistics; `loss` is the mean over this batch
    query_loss.append(loss.item())
    query_correct.append(correct)
    query_num_sample.append(num_sample)
    # Accumulate the batch-sum loss
    loss_sum += loss * num_sample

spt_sz = np.sum(support_num_sample)
qry_sz = np.sum(query_num_sample)
# An optimizer would only clear stale .grad buffers; torch.autograd.grad does not write to them
# self.optimizer.zero_grad()
# Gradient of the mean query loss: a tuple with one tensor per parameter, later sent to the server
grads = torch.autograd.grad(loss_sum / qry_sz, list(self.model.parameters()))
```
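One detail is worth checking when reading this excerpt. The inner update writes $\theta_T$ into the model through `p.data`, which autograd does not record. As far as the excerpt shows, the `torch.autograd.grad` call on the query loss therefore returns $\nabla L_{D_Q^T}$ evaluated at $\theta_T$, without differentiating through the inner step. That amounts to a first-order approximation of the MAML gradient, and `create_graph=True` in the inner step then only costs memory.

The sketch below compares the two gradients on a hypothetical 1-D linear model. The task, $\alpha$ and the starting point are made up.

```python
def mse_grad(w, data):
    """dL/dw of the mean squared error of f_w(x) = w * x on `data`."""
    return sum(2 * x * (w * x - y) for x, y in data) / len(data)

def meta_gradients(theta, support, query, alpha):
    """Return the first-order and the exact (second-order) MAML gradient for one task."""
    theta_t = theta - alpha * mse_grad(theta, support)  # inner update on the support set
    first_order = mse_grad(theta_t, query)  # inner step treated as a constant
    curvature = sum(2 * x * x for x, _ in support) / len(support)  # d^2 L_S / dw^2
    exact = first_order * (1 - alpha * curvature)  # chain rule through the inner step
    return first_order, exact

support = [(1.0, 2.0), (2.0, 4.0)]  # hypothetical task: y = 2x
query = [(3.0, 6.0)]
fo, ex = meta_gradients(1.0, support, query, alpha=0.05)
print(fo, ex)  # -13.5 -10.125
```

Both gradients point in the same direction here, but the first-order one ignores the factor $1-\alpha L''_{D_S^T}$ and so has a different magnitude.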

The server then merges the client gradients and updates the parameters:

```python
import torch

def aggregate_grads_weighted(self, solns, num_samples, weights_before):
    # The outer update on the server uses Adam
    m = len(solns)  # number of clients; only used by the plain-SGD alternative below
    g = []
    for i in range(len(solns[0])):
        # i indexes the current parameter (gradient) tensor;
        # the first client's gradient is used as the shape template
        grad_sum = torch.zeros_like(solns[0][i])
        total_sz = 0
        for ic, sz in enumerate(num_samples):
            grad_sum += solns[ic][i] * sz
            total_sz += sz
        # Sample-weighted average of the client gradients for this parameter
        g.append(grad_sum / total_sz)
    # Plain gradient descent would be: [u - (v * self.outer_lr / m) for u, v in zip(weights_before, g)]
    self.outer_opt.increase_n()
    for i in range(len(weights_before)):
        # outer_opt updates weights_before[i] in place
        self.outer_opt(weights_before[i], g[i], i=i)
```

In other words, the server averages the client gradients, weighting each client by its number of training samples, and then `outer_opt` updates the parameters. The update here uses Adam.
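The server step can be mirrored in plain Python as a sketch: a sample-weighted average of the client gradients followed by one Adam update.

`AdamSketch` and `aggregate_weighted` are hypothetical names. The repository's `outer_opt` is called once per parameter tensor with an index `i` and has an `increase_n()` method whose body is not shown. Treating `increase_n()` as advancing Adam's step counter is an assumption. The hyperparameters are Adam's usual defaults, except for the learning rate, which is chosen for the example.

```python
import math

class AdamSketch:
    """Minimal Adam for a list of float parameters (hypothetical stand-in for `outer_opt`)."""

    def __init__(self, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m, self.v, self.t = None, None, 0

    def step(self, params, grads):
        if self.m is None:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1  # assumed to correspond to increase_n() in the original code
        for i, g in enumerate(grads):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g      # first moment
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g  # second moment
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # bias correction
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            params[i] -= self.lr * m_hat / (math.sqrt(v_hat) + self.eps)  # in-place update
        return params

def aggregate_weighted(client_grads, num_samples):
    """Sample-weighted average of per-client gradient vectors."""
    total = sum(num_samples)
    return [sum(n * g[i] for g, n in zip(client_grads, num_samples)) / total
            for i in range(len(client_grads[0]))]

opt = AdamSketch(lr=0.1)
params = [1.0, -2.0]
g = aggregate_weighted([[0.5, -1.0], [1.5, 1.0]], num_samples=[1, 3])  # [1.25, 0.5]
opt.step(params, g)
print([round(p, 6) for p in params])  # [0.9, -2.1]
```

On the first step, Adam's bias correction makes each update close to `lr * sign(g)`. That is why both parameters move by about 0.1 here, regardless of the gradient's magnitude.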

边栏推荐

- Matlab 6-DOF manipulator forward and inverse motion

- Detailed principle of 4.3-inch TFTLCD based on warship V3

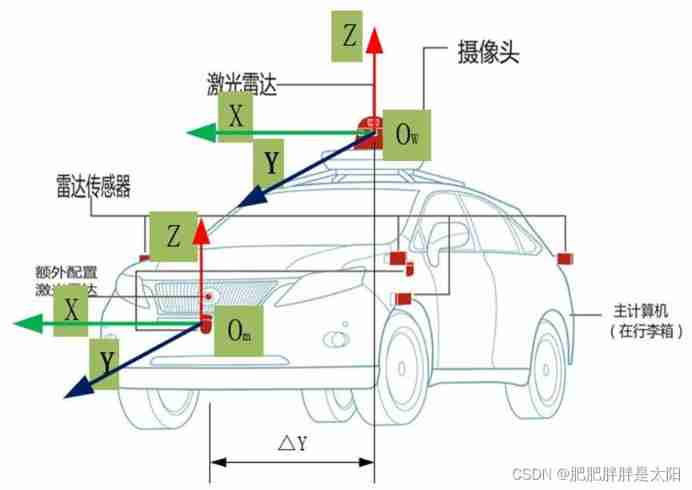

- Detailed explanation of coordinate tracking of TF2 operation in ROS (example + code)

- The function of C language string Terminator

- Scons compiling imgui

- d不能用非常ctfe指针

- "I was laid off by a big factory"

- Detailed explanation of TF2 command line debugging tool in ROS (parsing + code example + execution logic)

- Error mcrypt in php7 version of official encryption and decryption library of enterprise wechat_ module_ Open has no method defined and is discarded by PHP. The solution is to use OpenSSL

- Construction of running water lamp experiment with simulation software proteus

猜你喜欢

![‘CMRESHandler‘ object has no attribute ‘_timer‘,socket.gaierror: [Errno 8] nodename nor servname pro](/img/de/6756c1b8d9b792118bebb2d6c1e54c.png)

‘CMRESHandler‘ object has no attribute ‘_timer‘,socket.gaierror: [Errno 8] nodename nor servname pro

Troubleshooting of cl210openstack operation -- Chapter experiment

CL210OpenStack操作的故障排除--章節實驗

The most understandable explanation of coordinate transformation (push to + diagram)

![[Li Kou] curriculum series](/img/eb/c46a6b080224a71367d61f512326fd.jpg)

[Li Kou] curriculum series

4 expression

Detailed explanation of multi coordinate transformation in ROS (example + code)

Kali and programming: how to quickly build the OWASP website security test range?

Detailed explanation of 8086/8088 system bus (sequence analysis + bus related knowledge)

![[image detection] SAR image change detection based on depth difference and pcanet with matlab code](/img/c7/05bfa88ef1a4a38394b81755966e46.png)

[image detection] SAR image change detection based on depth difference and pcanet with matlab code

随机推荐

【WAX链游】发布一个免费开源的Alien Worlds【外星世界】脚本TLM

Construction of running water lamp experiment with simulation software proteus

esp32 hosted

Dépannage de l'opération cl210openstack - chapitre expérience

RT thread studio learning (VIII) connecting Alibaba cloud IOT with esp8266

循环链表和双向链表—课上课后练

Detailed explanation of coordinate tracking of TF2 operation in ROS (example + code)

CL210OpenStack操作的故障排除--章节实验

Source code learning - [FreeRTOS] privileged_ Understanding of function meaning

d的扩大@nogc

d的自动无垃集代码.

2022电工(初级)考试题库及模拟考试

Some operations of MATLAB array

Paddepaddl 28 supports the implementation of GHM loss, a gradient balancing mechanism for arbitrary dimensional data (supports ignore\u index, class\u weight, back propagation training, and multi clas

5 lines of code identify various verification codes

推荐17个提升开发效率的“轮子”

CL210OpenStack操作的故障排除--章節實驗

Explain ADC in stm32

RT thread studio learning (I) new project

Problems encountered in learning go