当前位置:网站首页>Introduction to the paper | application of machine learning in database cardinality estimation

Introduction to the paper | application of machine learning in database cardinality estimation

2022-07-02 19:04:00 【PKUMOD】

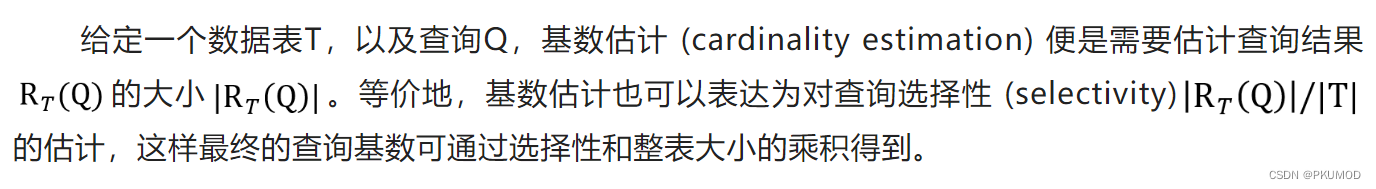

The definition and significance of cardinality estimation

Sometimes we also need complex queries on more than one table ( Contains multiple table joins (join)、 Range queries (range query) etc. ) Estimated base , These are the research contents of database cardinality estimation .

The role of cardinality estimation can be summarized into the following two aspects :

(1) Cardinality estimation is an important part of database query optimization . Because the cardinality of the subquery represents the size of the intermediate result , Therefore, most of the existing cost based query optimization techniques strongly rely on the estimation of the subquery cardinality to estimate the subquery plan cost . An excellent cardinality estimation model can help the optimizer better select the appropriate execution plan .

(2) Cardinality estimation can help answer queries that only need to know the number of results . For example, a user may want to know how many college students in China study computer science , This query only cares about the number of results without knowing the specific value of each result . For such queries, we can quickly return the approximate value of the result size through the cardinality estimation method .

Classification of current methods

Cardinality estimation is an important and difficult problem , For decades, researchers have tried to use various methods and techniques to improve the accuracy and robustness of estimation , The existing methods can be divided into the following categories :

In general, traditional methods and machine learning based methods can be classified . The following is a brief introduction to some representative works .

Traditional cardinality estimation method

Traditional cardinality estimation methods can be generally divided into summary based methods (synopsis-based) And sampling (sampling) There are two main types of methods of .

The profile based cardinality estimation method will collect some statistical information of the database in advance , And based on simple assumptions such as independence , It can easily and quickly solve the query cardinality .

For example, based on histogram (histogram) Methods , It summarizes each column in the data table into an equal width sheet (equal width) Or equal depth (equal depth) Histogram , Finally, according to the query conditions , Based on the assumption that columns are independent of each other , Estimate the size of the query results . Of course, sometimes such independence assumption does not work well , Based on the multidimensional histogram (multi-dimensional histogram) Method of solving .

Data portraits (sketching) It also belongs to the cardinality estimation method based on profile , Its use bitmap Or hash method , Estimate the number of different elements in the database , It is more suitable for streaming data scenarios . Methods for updating a class of data portraits, such as loglog、hyperloglog etc. , Pay more attention to saving memory and improving the robustness of estimation .

The sampling based method will randomly extract a certain proportion or a certain number of tuples from the original data table , Finally, the cardinality estimation of the query in the original database can be obtained by dividing the size of the query result on the sample set by the corresponding scaling ratio . The method based on sampling is better in sampling strategy 、 The effect is better when it can reflect the distribution of most data , However, it is also easy to fail to generate matching results on the sampling set due to the randomness of sampling , Some researchers have proposed an index based sampling method (index-based sampling) [1], When multiple tables are connected , The range to be sampled will be selected according to the query connection conditions , It can reduce the occurrence of zero results in sampling , The specific diagram is as follows :

Query driven learning cardinality estimation method

Since the base estimate can be regarded as “ Inquire about ” To ” Base value ” The regression problem of ( When the database is not updated ), So we can transfer the skills of supervised learning to this problem , Learn the corresponding relationship of the cardinality directly . The workflow of the general query driven method is as follows :

The difficulty lies in the acquisition of training data and the expression of query statements .

generally , When the database is given , We can assume that the query framework (schema) It is known. ( for example , According to which columns a certain two tables will be connected ), From this, we can randomly generate some query statements , After these statements are actually run , We get the real cardinality of these queries , This constitutes the training data required by the training model . Of course, you can also get the query directly according to the query log of the database . It should be noted that , The training data must be representative , Otherwise, the model cannot learn the correspondence between common query patterns and cardinality in the working environment , Will affect the accuracy of the forecast .

Query statements are mainly represented by query tables 、 Predicate conditions 、 Connection conditions, etc , More complex cases include those with regular expressions “like” Inquire about .

Work [2] Applying regression model to estimate query cardinality . It summarizes user input into some semantically related classes , be called templates, For each template, There are some parameters that can be adjusted to represent the query statement , Different parameters make up different queries . The model of this work is relatively simple , It mainly tests the linear regression 、 Model tree and local weighted regression .

2019 year , at work [3] in , Researchers have proposed a multiset convolution network (multi-set convolutional network, MSCN). It represents the query as

In the form of triples , The elements represent the query table 、 Join condition and predicate selection , Are collections with corresponding structures . The model synthesizes tables 、 Connection and predicate condition information , And then through several layers of network , Get the final cardinality estimate . It should be noted that , The randomly generated training query can only contain two connection conditions at most , Researchers hope that the model can be automatically generalized to multiple connections by learning a small number of patterns . The overall architecture is as follows :

Work [4] in , The author combines the cardinality estimation and the cost estimation of the query plan in the same end-to-end learning estimation model . It relies on the optimizer of the database system to generate a physical plan for the input query , The training data is determined by ( Query plan , Exact cardinality , Accurately plan the cost ) The form of triples constitutes . Because the query plan is a tree structure , Therefore, the model uses a tree LSTM. In short , Each node in the plan tree receives information from child nodes , And integrate it with your own information , Finally, the output of the query root nodes is sent to the two models of prediction base and prediction cost to get the desired estimation . In this work, we also put forward the relation according to predicate conditions (AND、OR etc. ), Separate use MIN、MAX Pooling , For details, please refer to his thesis . The following figure shows the workflow of its model :

Data driven learning Radix estimation method

Different from the methods of query driven classes , The data-driven cardinality estimation method is a kind of unsupervised method , Learn the distribution of data directly from the data table , So the training is simple .

at work [5] in , The authors use kernel density estimation (kernel density estimation, KDE) Method to estimate the query cardinality . The idea is to randomly select several tuples from the data table , The closer the query point is to the sampling tuple , Then the query is more likely to have a result . The author uses Gaussian kernel , The dispersion of probability density is controlled by learnable parameters . For the database update scenario , The author uses reservoir sampling (reservoir sampling) Data should be inserted ; By monitoring the impact of removing a certain sampling tuple on the estimation effect, we should deal with data deletion ( That is, deleting a tuple can improve the estimation effect , Delete it ). Because the calculation only needs to calculate the probability of each sample tuple and add it , So we can pass GPU Accelerate Computing . The author is also in the following research , The kernel density estimation method is extended to the cardinality estimation of multi table connection [6]. The following figure shows the main idea of kernel density estimation :

There is also a method of estimating cardinality using autoregressive models . at work [7] in , The author puts forward Naru Model , In the training process, sampling and Mask skill , It can improve the accuracy of the model . Later, the author also extended the method to the case of multi table connection [8].

Another kind of data-driven method uses SPN(sum product network). The main idea is to divide the data table horizontally ( For example, using KMeans And other clustering methods ), Make the tuple columns approximately independent on the split data , In this way, the cardinality can be estimated by constructing histogram or linear regression model on each column .DeepDB [9] It's a use SPN The cardinality estimation method , In recent years, there have also been reports of SPN Improvement , Such as FLAT [10], It divides the data table into two categories: strong correlation and weak correlation , Further use SPN Method partition data table . The following figure shows DeepDB How to calculate “ Age is less than 30 Number of European customers aged ” Of :

Current difficulties and future research directions

The first point , Although the current method can be more accurate than the traditional method , But it also takes a lot of time to train the model , How to choose between accuracy and efficiency is a problem that database users who apply these methods need to consider .

Second point , Most models are difficult to adapt to the update environment . When a large amount of data is inserted or deleted , These models are difficult to track these changes , So you need to retrain , This is in OLTP The environment needs to be improved .

The third point , The estimation effect of these models is still poor in the scenario of multi table connection . When multiple meters are connected , Dependencies between different tables are difficult to capture , It brings great difficulties to the estimation of cardinality . This is in 2021 Experimental paper [11] There is also an introduction to .

Last , Recommend interested readers to read the experimental paper [11], It compares the performance and efficiency of multiple learning methods in various scenarios , It has certain guiding value for the research of cardinality estimation .

reference

[1] Viktor Leis, Bernhard Radke, Andrey Gubichev, Alfons Kemper, and Thomas Neumann. 2017. Cardinality Estimation Done Right: Index-Based Join Sampling. In CIDR.

[2] Tanu Malik, Randal C Burns, and Nitesh V Chawla. 2007. A Black-Box Approach to Query Cardinality Estimation.. In CIDR. Citeseer, 56–67.

[3] Andreas Kipf, Thomas Kipf, Bernhard Radke, Viktor Leis, Peter A. Boncz, and Alfons Kemper. 2019. Learned Cardinalities: Estimating Correlated Joins with Deep Learning. In 9th Biennial Conference on Innovative Data Systems Research, CIDR 2019, Asilomar, CA, USA, January 13-16, 2019, Online Proceedings. www.cidrdb.org.

[4] Ji Sun and Guoliang Li. 2019. An end-to-end learning-based cost estimator. Proceedings of the VLDB Endowment 13, 3 (2019), 307–319.

[5] Max Heimel, Martin Kiefer, and Volker Markl. 2015. Self-tuning, GPU-accelerated kernel density models for multidimensional selectivity estimation. In Proceedings of the 2015 ACM SIGMOD International Conference on Management of Data. 1477–1492.

[6] Martin Kiefer, Max Heimel, Sebastian Breß, and Volker Markl. 2017. Estimating join selectivities using bandwidth-optimized kernel density models. Proceedings of the VLDB Endowment 10, 13 (2017), 2085–2096

[7] Zongheng Yang, Eric Liang, Amog Kamsetty, Chenggang Wu, Yan Duan, Xi Chen, Pieter Abbeel, Joseph M Hellerstein, Sanjay Krishnan, and Ion Stoica. 2019. Deep unsupervised cardinality estimation. Proceedings of the VLDB Endowment 13, 3 (2019), 279–292.

[8] Zongheng Yang, Amog Kamsetty, Sifei Luan, Eric Liang, Yan Duan, Xi Chen, and Ion Stoica. 2020. NeuroCard: one cardinality estimator for all tables. Proceedings of the VLDB Endowment 14, 1 (2020), 61–73.

[9] Benjamin Hilprecht, Andreas Schmidt, Moritz Kulessa, Alejandro Molina, Kristian Kersting, and Carsten Binnig. 2020. DeepDB: learn from data, not from queries! Proceedings of the VLDB Endowment 13, 7 (2020), 992–1005.

[10] Rong Zhu, Ziniu Wu, Yuxing Han, Kai Zeng, Andreas Pfadler, Zhengping Qian, Jingren Zhou, and Bin Cui. 2021. FLAT: fast, lightweight and accurate method for cardinality estimation. Proceedings of the VLDB Endowment 14, 9 (2021), 1489–1502.

[11] Wang, Xiaoying, et al. "Are we ready for learned cardinality estimation?." Proceedings of the VLDB Endowment 14.9 (2021): 1640-1654.

边栏推荐

- Troubleshooting: kubectl reports an error validationerror: unknown field \u00a0

- LightGroupButton* sender = static_ cast<LightGroupButton*>(QObject::sender());

- 文字编辑器 希望有错误的句子用红色标红,文字编辑器用了markdown

- 2022编译原理期末考试 回忆版

- UML class diagram

- The student Tiktok publicized that his alma mater was roast about "reducing the seal of enrollment". Netizen: hahahahahahahaha

- Which securities company has a low, safe and reliable online account opening commission

- Looking for innocence in New York -- a beautiful day at the discovery center of Legoland, New Jersey

- Hospital online inquiry source code hospital video inquiry source code hospital applet source code

- R语言ggplot2可视化分面图(facet):gganimate包基于transition_time函数创建动态散点图动画(gif)

猜你喜欢

![[0701] [论文阅读] Alleviating Data Imbalance Issue with Perturbed Input During Inference](/img/c7/9b7dc4b4bda4ecfe07aec1367fe059.png)

[0701] [论文阅读] Alleviating Data Imbalance Issue with Perturbed Input During Inference

故障排查:kubectl报错ValidationError: unknown field \u00a0

Comprendre complètement le tutoriel de traitement de Point Cloud basé sur open3d!

Mini Golf Course: a good place for leisure and tourism in London

聊聊电商系统中红包活动设计

![[100 cases of JVM tuning practice] 03 -- four cases of JVM heap tuning](/img/54/8a18cd30e6186528599c0556b1ee3b.png)

[100 cases of JVM tuning practice] 03 -- four cases of JVM heap tuning

Thoroughly understand the point cloud processing tutorial based on open3d!

在纽约寻找童真——新泽西州乐高乐园探索中心的美好一天

Tips for material UV masking

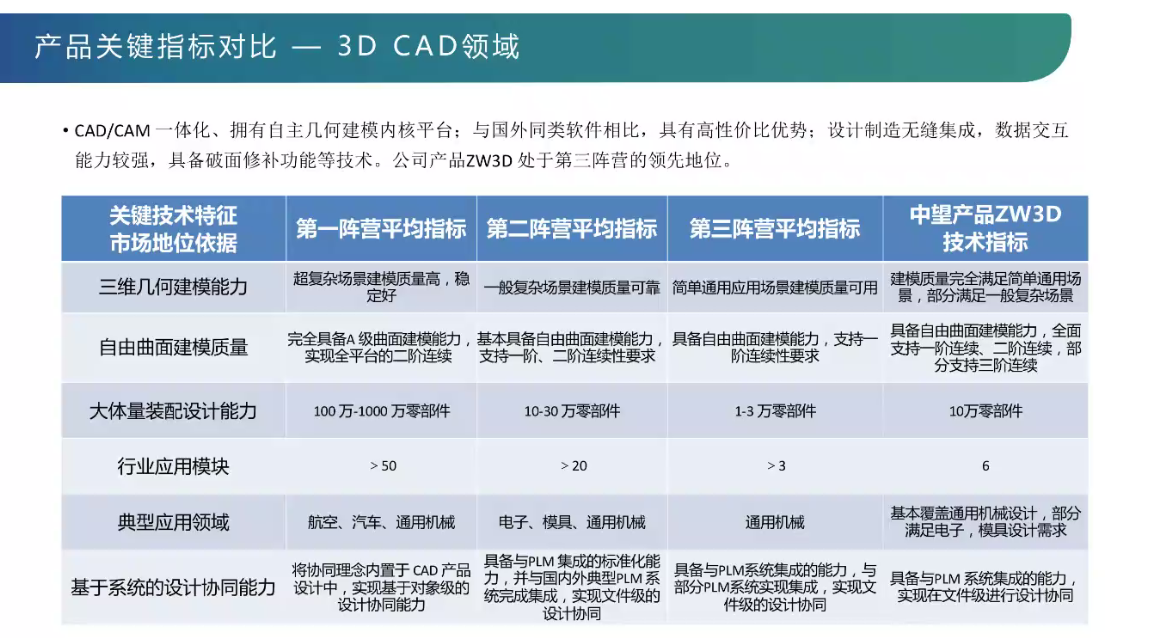

Industrial software lecture - core technology analysis of 3D CAD design software - the second lecture of the Forum

随机推荐

学生抖音宣传母校被吐槽“招生减章”,网友:哈哈哈哈哈哈

SLC、MLC、TLC 和 QLC NAND SSD 之间的区别:哪个更好?

[0701] [论文阅读] Alleviating Data Imbalance Issue with Perturbed Input During Inference

options should NOT have additional properties

R language ggplot2 visualization: visualize the line chart and add customized X-axis label information to the line chart using labs function

【JVM调优实战100例】02——虚拟机栈与本地方法栈调优五例

Detailed explanation of cjson usage

options should NOT have additional properties

The second bullet of AI development and debugging series: the exploration journey of multi machine distributed debugging

[100 cases of JVM tuning practice] 03 -- four cases of JVM heap tuning

消息队列消息丢失和消息重复发送的处理策略

Hospital online inquiry source code hospital video inquiry source code hospital applet source code

Leetcode(81)——搜索旋转排序数组 II

What is cloud primordial? This time, I can finally understand!

The text editor hopes to mark the wrong sentences in red, and the text editor uses markdown

故障排查:kubectl报错ValidationError: unknown field \u00a0

The difference between interceptor and filter

Leetcode(154)——寻找旋转排序数组中的最小值 II

[100 cases of JVM tuning practice] 01 - introduction of JVM and program counter

[100 cases of JVM tuning practice] 02 - five cases of virtual machine stack and local method stack tuning