Tips for setting the batch size

2022-07-07 05:24:00 【Ting】

1、 What is batch size?

A batch is a group of samples that is processed together. The purpose of setting batch_size is to have the model process one batch of data at each step of training, instead of a single sample or the whole dataset. Intuitively, the batch size is the number of samples used in one training step.

The batch size affects both how well and how quickly the model is optimized. It also directly determines GPU memory usage: if your GPU does not have much memory, it is better to set the batch size lower.
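A minimal sketch of what "one batch per training step" means, assuming the dataset is an in-memory Python sequence. The helper name `iterate_minibatches` is hypothetical and not taken from any particular framework:

```python
import random
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def iterate_minibatches(data: Sequence[T], batch_size: int,
                        shuffle: bool = True, seed: int = 0) -> Iterator[List[T]]:
    """Yield consecutive batches of at most batch_size samples (one batch per training step)."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    indices = list(range(len(data)))
    if shuffle:
        random.Random(seed).shuffle(indices)  # new sample order for this pass
    for start in range(0, len(indices), batch_size):
        yield [data[i] for i in indices[start:start + batch_size]]


if __name__ == "__main__":
    for batch in iterate_minibatches(list(range(10)), batch_size=4, shuffle=False):
        print(batch)
```

With `shuffle=False`, 10 samples and `batch_size=4` give the batches `[0, 1, 2, 3]`, `[4, 5, 6, 7]` and `[8, 9]`; the last batch is smaller because 10 is not a multiple of 4.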

2、 Why use a batch size?

Before batch sizes were used, the network was trained by feeding all the data (the entire dataset) into it at once and then computing the gradients on all of it for backpropagation. Because the entire dataset is used to compute the gradient, the computed gradient direction is more accurate. In this setting, however, the gradient magnitudes of different weights can differ enormously, which makes it hard to use a single global learning rate. So sign-based training algorithms such as Rprop were typically used instead, updating each weight separately according to the sign of its gradient.
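To make the sign-based idea concrete, here is a small sketch of one Rprop variant (iRprop-, which skips the update after a sign change) on a toy quadratic whose two gradients differ in scale by a factor of 10,000. The function names, the toy objective and the hyperparameter values (initial step 0.1, factors 1.2 and 0.5) are illustrative assumptions:

```python
from typing import Callable, List


def sign(x: float) -> int:
    """Return +1, -1 or 0 according to the sign of x."""
    return (x > 0) - (x < 0)


def irprop_minus(grad_fn: Callable[[List[float]], List[float]],
                 w: List[float],
                 iterations: int = 300,
                 step_init: float = 0.1,
                 eta_plus: float = 1.2,
                 eta_minus: float = 0.5,
                 step_min: float = 1e-6,
                 step_max: float = 50.0) -> List[float]:
    """Minimize with iRprop-: each weight has its own step size,
    adapted only from the sign of its gradient (the magnitude is ignored)."""
    w = list(w)
    steps = [step_init] * len(w)
    prev_grad = [0.0] * len(w)
    for _ in range(iterations):
        grad = grad_fn(w)
        for i in range(len(w)):
            if prev_grad[i] * grad[i] > 0:
                # Same sign as last time: enlarge the step.
                steps[i] = min(steps[i] * eta_plus, step_max)
            elif prev_grad[i] * grad[i] < 0:
                # Sign flipped (we jumped over a minimum): shrink the step
                # and skip this weight's update for one iteration.
                steps[i] = max(steps[i] * eta_minus, step_min)
                grad[i] = 0.0
            w[i] -= sign(grad[i]) * steps[i]
            prev_grad[i] = grad[i]
    return w


def ill_conditioned_grad(w: List[float]) -> List[float]:
    """Gradient of f(w) = 100*w0^2 + 0.01*w1^2 (scales differ by 10^4)."""
    return [200.0 * w[0], 0.02 * w[1]]


if __name__ == "__main__":
    print(irprop_minus(ill_conditioned_grad, [1.0, 1.0]))
```

Because only the sign of each gradient is used, both weights follow exactly the same trajectory despite the 10,000-fold difference in gradient scale. Plain gradient descent with one learning rate would need a rate below 0.01 to stay stable on the first weight, which would make the second weight crawl.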

On a small dataset, training without mini-batches is feasible and works very well. But with a large dataset, putting all the data into the network at once is bound to exhaust memory. This is why the concept of batch size was introduced.
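A back-of-the-envelope sketch of why the full dataset does not fit, assuming a hypothetical dataset of 1,000,000 RGB images of 224×224 pixels stored as float32 (4 bytes per value). It counts only the input tensor; activations and gradients during training need considerably more:

```python
def input_tensor_bytes(num_samples: int, channels: int = 3, height: int = 224,
                       width: int = 224, bytes_per_value: int = 4) -> int:
    """Bytes needed just to hold num_samples input images as one dense tensor."""
    return num_samples * channels * height * width * bytes_per_value


full_dataset = input_tensor_bytes(1_000_000)  # the whole (hypothetical) dataset
one_batch = input_tensor_bytes(32)            # one batch of 32 images

print(f"full dataset: {full_dataset / 1e9:.1f} GB")  # full dataset: 602.1 GB
print(f"one batch:    {one_batch / 1e6:.1f} MB")     # one batch:    19.3 MB
```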

3、 How should the batch size be set?

A few points to keep in mind when setting the batch size:

1) If the batch is too small and there are many classes, the loss function may oscillate without converging, especially when the network is complex.

2) As the batch size increases, the same amount of data is processed faster.

3) As the batch size increases, more epochs are needed to reach the same accuracy.

4) Because these two effects work against each other, increasing the batch size reaches an optimum in training time at some point.

5) Because the final result may converge to different local optima, increasing the batch size reaches an optimum in final convergence accuracy at some point.

6) An overly large batch size makes the network prone to converging to poor local optima. An overly small batch has problems too, such as very slow training and difficulty converging.

7) The specific choice of batch size depends on the number of samples in the training set.

8) GPUs perform better with batch sizes that are powers of 2, so setting it to 16, 32, 64, 128, … often works better than a multiple of 10 or 100.

When I set the batch size, I first choose a large batch size that fills the GPU and watch how the loss converges. If it does not converge, or converges poorly, I reduce the batch size. Commonly used values are 16, 32, 64, etc.
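The procedure above can be sketched as a simple search, assuming you already know the largest batch that fits in GPU memory and have some way to judge convergence. `converges_well` is a hypothetical callback (for example, a short trial run whose loss curve you inspect); it is not a library function:

```python
from typing import Callable


def pick_batch_size(max_batch_that_fits: int,
                    converges_well: Callable[[int], bool],
                    min_batch: int = 16) -> int:
    """Start from the largest power of 2 that fits in memory and halve it
    until the loss converges acceptably or min_batch is reached."""
    if max_batch_that_fits < 1:
        raise ValueError("max_batch_that_fits must be >= 1")
    batch = 1
    while batch * 2 <= max_batch_that_fits:
        batch *= 2  # largest power of 2 not exceeding the memory limit
    while batch > min_batch and not converges_well(batch):
        batch //= 2
    return batch


if __name__ == "__main__":
    # Toy stand-in for a real trial run: pretend only batches <= 64 converge.
    print(pick_batch_size(300, lambda b: b <= 64))  # 64
```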

4、 Within a reasonable range, what are the benefits of increasing the batch size?

Memory utilization improves, and large matrix multiplications are parallelized more efficiently.

Fewer iterations are needed to run one epoch (one pass over the full dataset), so the same amount of data is processed even faster.

Within a certain range, the larger the batch size, generally the more accurate the descent direction and the less training oscillates.
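A small simulation of the "more accurate direction, less oscillation" point, assuming the per-sample gradients of a single weight are independent draws with mean 1 and standard deviation 2 (made-up numbers, not from real training). The spread of the mini-batch average shrinks roughly like 1/sqrt(batch size):

```python
import random
import statistics
from typing import List


def minibatch_gradient_spread(per_sample_grads: List[float], batch_size: int,
                              trials: int = 2000, seed: int = 0) -> float:
    """Standard deviation of the mini-batch mean gradient over many random batches."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        batch = rng.choices(per_sample_grads, k=batch_size)  # sample with replacement
        estimates.append(sum(batch) / batch_size)
    return statistics.pstdev(estimates)


data_rng = random.Random(42)
grads = [data_rng.gauss(1.0, 2.0) for _ in range(10_000)]

for b in (1, 16, 256):
    # Expect roughly 2.0, 0.5 and 0.125 (that is, 2 / sqrt(b)).
    print(b, round(minibatch_gradient_spread(grads, b), 3))
```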

5、 What is the harm in blindly increasing the batch size?

Memory utilization improves, but memory capacity may not be able to hold the batch.

Fewer iterations are needed per epoch (full dataset), so the parameters are updated less often. Reaching the same accuracy therefore takes much more time, and the parameters are corrected more slowly.

Beyond a certain batch size, the descent direction it determines essentially no longer changes.
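A toy illustration of the "fewer iterations per epoch, slower parameter correction" point, assuming a noise-free one-parameter regression y = 2x, plain mini-batch SGD on the mean squared error, and the same learning rate (0.1) for every batch size. With one epoch of data, a larger batch means fewer updates, so the weight ends up further from 2:

```python
import random
from typing import List, Tuple


def sgd_one_weight(xs: List[float], ys: List[float], batch_size: int,
                   epochs: int = 1, lr: float = 0.1, seed: int = 0) -> Tuple[float, int]:
    """Fit y ~ w * x with mini-batch SGD; return the final w and the number of updates."""
    rng = random.Random(seed)
    order = list(range(len(xs)))
    w, updates = 0.0, 0
    for _ in range(epochs):
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            # Gradient of mean((w*x - y)^2) over this batch with respect to w.
            grad = sum(2.0 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= lr * grad
            updates += 1
    return w, updates


data_rng = random.Random(1)
xs = [data_rng.random() for _ in range(1000)]
ys = [2.0 * x for x in xs]

for b in (1, 10, 1000):
    w, n = sgd_one_weight(xs, ys, b)
    print(f"batch_size={b:4d}  updates={n:4d}  w={w:.4f}")
```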

6、 How does adjusting the batch size affect training?

If the batch size is too small, the model performs extremely poorly (the error soars).

Batch size too small: the gradient computed at each step is unstable, so training oscillates strongly and has difficulty converging.

Large batch size:

(1) Memory utilization improves, and large matrix multiplications are parallelized more efficiently.

(2) The computed gradient direction is accurate, so training oscillates less.

(3) Fewer iterations are needed per epoch, so the same amount of data is processed faster.

Drawbacks: memory overflows easily; more and more epochs are needed to reach the same accuracy; training tends to get stuck in local optima; and generalization is poor.

Setting the batch size: usually between 10 and 100, and generally a power of 2 (2^n).

Reason: GPU and CPU memory are organized in binary, so a power of 2 can speed up computation.
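Enumerating the candidates this rule of thumb leaves you with, assuming the 10 to 100 range from the text (the function name is hypothetical):

```python
from typing import List


def power_of_two_batch_sizes(low: int = 10, high: int = 100) -> List[int]:
    """All powers of 2 in the closed range [low, high]."""
    sizes = []
    b = 1
    while b <= high:
        if b >= low:
            sizes.append(b)
        b *= 2
    return sizes


print(power_of_two_batch_sizes())  # [16, 32, 64]
```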

In depth learning, we often see epoch、 iteration and batchsize The difference between the three :

(1)batchsize: Batch size . In deep learning , It is generally used SGD Training , That is to say, each training session takes batchsize Sample training ;

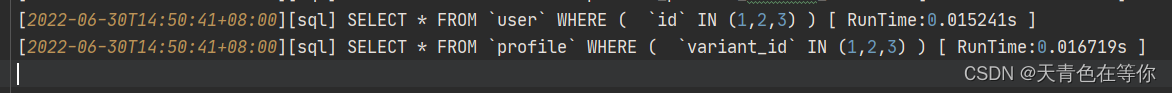

(2)iteration:1 individual iteration Equal to using batchsize One sample training ;

(3)epoch:1 individual epoch It is equal to training once with all the samples in the training set ;

for instance , The training set has 1000 Samples ,batchsize=10, So training a complete sample set requires :

100 Time iteration,1 Time epoch.
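The relationship between the three terms can be sketched as two nested loops: the outer loop counts epochs and the inner loop counts iterations, one per batch. This is a skeleton with the actual forward/backward pass left out; `count_training_steps` is a hypothetical name:

```python
from typing import List, Tuple


def count_training_steps(num_samples: int, batch_size: int,
                         epochs: int) -> Tuple[int, List[int]]:
    """Return the total number of iterations and the iterations in each epoch."""
    total_iterations = 0
    iterations_per_epoch = []
    for _ in range(epochs):  # one epoch = one pass over all samples
        iterations = 0
        for start in range(0, num_samples, batch_size):
            # One iteration: a real loop would run forward/backward and update the
            # parameters on samples [start, start + batch_size) here.
            iterations += 1
        total_iterations += iterations
        iterations_per_epoch.append(iterations)
    return total_iterations, iterations_per_epoch


print(count_training_steps(1000, 10, epochs=1))  # (100, [100])
print(count_training_steps(1001, 10, epochs=1))  # (101, [101])
```

When the number of samples is not a multiple of the batch size, the last, smaller batch still counts as an iteration, which is why 1001 samples give 101 iterations.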

1. When the amount of data is large enough, batch_size can be reduced appropriately, because with that much data a large batch would run out of memory. But reducing it blindly leads to non-convergence. batch_size=1 is online learning, which is also standard SGD. With this kind of learning, if the dataset is small and contains noisy data, the model is easily biased by the noise; if the dataset is large enough, the effect of the noise is "diluted" and has little impact on the model.

2. The choice of batch first determines the direction of descent. If the dataset is small, the full dataset can be used as a single batch. Doing so has two advantages:

1) The direction computed from the full dataset better represents the sample population, so it points more reliably toward the extremum.

2) Because the gradient magnitudes of different weights vary greatly, it is very difficult to choose one global learning rate; with full-batch training, Rprop can be used instead to update each weight separately based only on the sign of its gradient.
