DNN + YOLO + Flask inference (real-time streaming on a Raspberry Pi, covering the whole YOLO family)

2022-07-08 02:18:00 【pogg_】

YOLO-Streaming

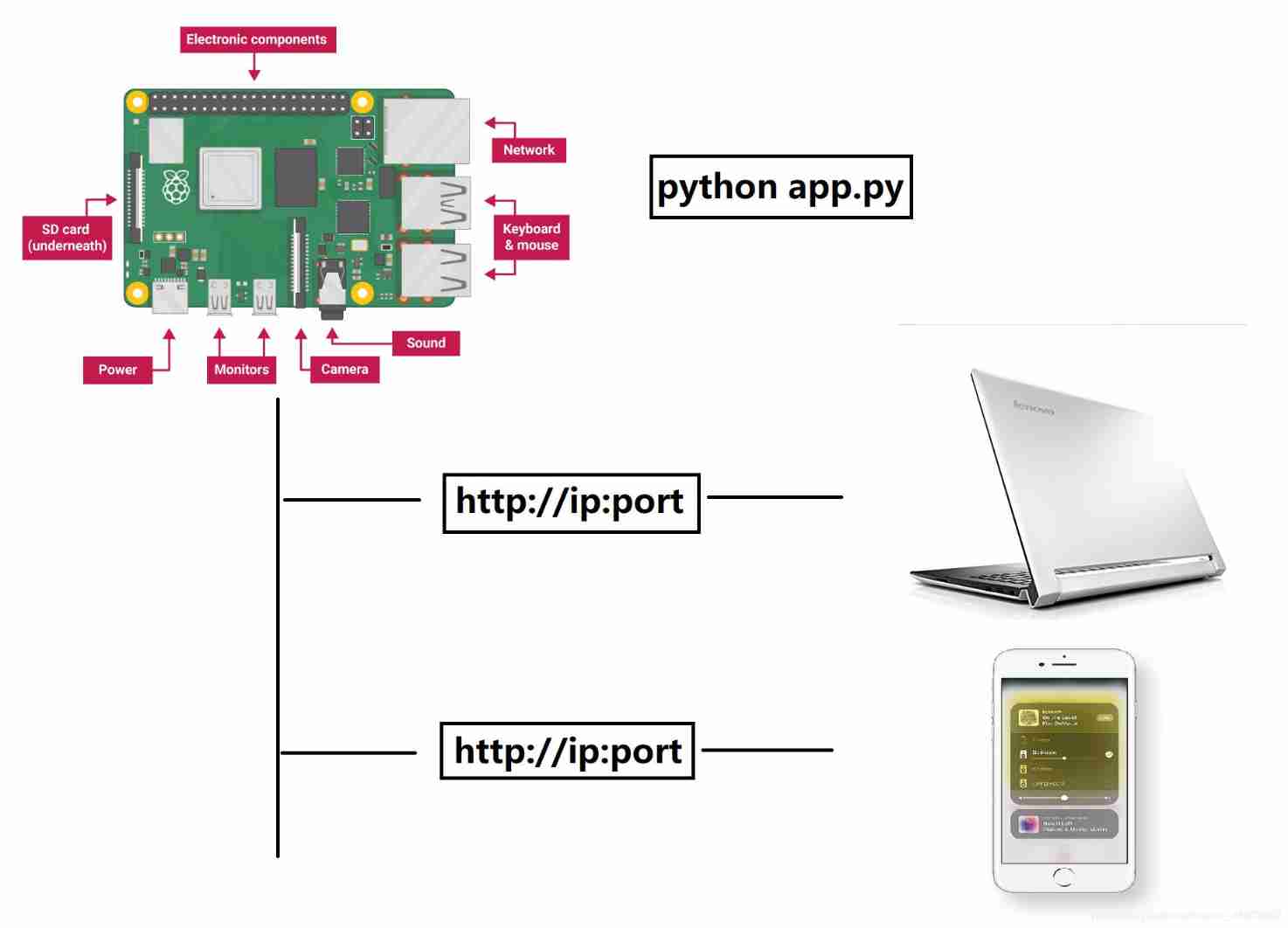

This repository records the process of pushing video streams through several ultra-lightweight networks. The general procedure is as follows: OpenCV opens the camera of a board (such as a Raspberry Pi), the captured real-time video is passed to an ultra-lightweight network such as Yolo-Fastest, NanoDet or GhostNet for detection, and the lightweight Flask framework then pushes the processed frames to the network. Real-time performance can basically be maintained. The repository also records the performance of several edge-side inference frameworks; anyone interested is welcome to discuss.
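The capture, detect and push pipeline described above can be sketched roughly as follows. This is a minimal illustration, not the repository's actual `app.py`: it follows the multipart (MJPEG) pattern used by flask-video-streaming, which is credited in the Thanks section. The route `/video_feed`, the boundary name, and the `detect` callback are assumptions made for the example.

```python
from typing import Callable, Iterable, Iterator

BOUNDARY = b"frame"  # arbitrary multipart boundary name (an assumption)


def mjpeg_parts(jpeg_frames: Iterable[bytes]) -> Iterator[bytes]:
    """Wrap each JPEG-encoded frame as one part of a multipart/x-mixed-replace body."""
    for jpeg in jpeg_frames:
        yield (b"--" + BOUNDARY + b"\r\n"
               b"Content-Type: image/jpeg\r\n\r\n" + jpeg + b"\r\n")


def camera_jpegs(detect: Callable, camera_index: int = 0) -> Iterator[bytes]:
    """Grab frames with OpenCV, run `detect` on each one, and yield JPEG bytes."""
    import cv2  # imported lazily so mjpeg_parts works without OpenCV installed

    cap = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            frame = detect(frame)  # hypothetical callback: returns the annotated frame
            ok, buf = cv2.imencode(".jpg", frame)
            if ok:
                yield buf.tobytes()
    finally:
        cap.release()


def create_app(detect: Callable = lambda frame: frame):
    """Build a Flask app whose /video_feed route streams the processed frames."""
    from flask import Flask, Response  # lazy import, same reason as above

    app = Flask(__name__)

    @app.route("/video_feed")
    def video_feed():
        return Response(
            mjpeg_parts(camera_jpegs(detect)),
            mimetype="multipart/x-mixed-replace; boundary=" + BOUNDARY.decode(),
        )

    return app
```

To try it, something like `create_app().run(host="0.0.0.0", port=5000, threaded=True)` would serve the stream to other machines on the network; a browser pointed at the `/video_feed` route then shows the frames as they arrive.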

Repository link: https://github.com/pengtougu/DNN-Lightweight-Streaming

Stars and PRs are welcome!

You are welcome to join the QQ group to discuss: 696654483

Requirements

Please install the dependencies first (environment for calling dnn):

- Linux, macOS or Windows

- python>= 3.6.0

- opencv-python>= 4.2.X

- flask>= 1.0.0
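A quick way to confirm that the dnn environment is in place is sketched below. It only checks the Python version and whether the `cv2` and `flask` modules can be found; it does not verify the minimum package versions listed above. The helper name `check_environment` is made up for this example.

```python
import sys
from importlib.util import find_spec


def check_environment(modules=("cv2", "flask"), min_python=(3, 6)):
    """Return a dict mapping each requirement to True (satisfied) or False."""
    report = {"python>=%d.%d" % min_python: sys.version_info >= min_python}
    for name in modules:
        # find_spec returns None when a top-level module is not installed
        report[name] = find_spec(name) is not None
    return report


if __name__ == "__main__":
    for requirement, ok in check_environment().items():
        print("%-12s %s" % (requirement, "ok" if ok else "MISSING"))
```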

Please install the dependencies first (environment for calling ncnn):

- Linux, macOS or Windows

- Visual Studio 2019

- cmake-3.16.5

- protobuf-3.4.0

- opencv-3.4.0

- vulkan-1.14.8

Inference

- YOLOv3-Fastest: https://github.com/dog-qiuqiu/Yolo-Fastest

Models: Yolo-Fastest-1.1-xl

| Equipment | Computing backend | System | Framework | input_size | Run time |
|---|---|---|---|---|---|
| Raspberry Pi 3B | 4xCortex-A53 | Linux (arm64) | dnn | 320 | 89ms |
| Intel | Core i5-4210 | Windows 10 (x64) | dnn | 320 | 21ms |

- YOLOv4-Tiny: https://github.com/AlexeyAB/darknet

Models: yolov4-tiny.weights

| Equipment | Computing backend | System | Framework | input_size | Run time |
|---|---|---|---|---|---|
| Raspberry Pi 3B | 4xCortex-A53 | Linux (arm64) | dnn | 320 | 315ms |
| Intel | Core i5-4210 | Windows 10 (x64) | dnn | 320 | 41ms |

- YOLOv5s-onnx: https://github.com/ultralytics/yolov5

Models: yolov5s.onnx

| Equipment | Computing backend | System | Framework | input_size | Run time |
|---|---|---|---|---|---|
| Raspberry Pi 3B | 4xCortex-A53 | Linux (arm64) | dnn | 320 | 673ms |
| Intel | Core i5-4210 | Windows 10 (x64) | dnn | 320 | 131ms |
| Raspberry Pi 3B | 4xCortex-A53 | Linux (arm64) | ncnn | 160 | 716ms |
| Intel | Core i5-4210 | Windows 10 (x64) | ncnn | 160 | 197ms |

- Nanodet: https://github.com/RangiLyu/nanodet

Models: nanodet.onnx

| Equipment | Computing backend | System | Framework | input_size | Run time |
|---|---|---|---|---|---|
| Raspberry Pi 3B | 4xCortex-A53 | Linux (arm64) | dnn | 320 | 113ms |
| Intel | Core i5-4210 | Windows 10 (x64) | dnn | 320 | 23ms |

Updating...
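To read the run times in the tables above as frame rates, a small arithmetic sketch follows. The figures are copied from the dnn rows (input_size 320); the helper name `fps` is made up for this example, and the result is only an upper bound, because it ignores capture, drawing and streaming overhead.

```python
def fps(run_time_ms):
    """Upper bound on frames per second if each frame costs run_time_ms."""
    return 1000.0 / run_time_ms


# (model, device) -> run time in ms, dnn framework, input_size 320
RUN_TIME_MS = {
    ("Yolo-Fastest-1.1-xl", "Raspberry Pi 3B"): 89,
    ("Yolo-Fastest-1.1-xl", "Core i5-4210"): 21,
    ("yolov4-tiny", "Raspberry Pi 3B"): 315,
    ("yolov4-tiny", "Core i5-4210"): 41,
    ("yolov5s.onnx", "Raspberry Pi 3B"): 673,
    ("yolov5s.onnx", "Core i5-4210"): 131,
    ("nanodet.onnx", "Raspberry Pi 3B"): 113,
    ("nanodet.onnx", "Core i5-4210"): 23,
}

for (model, device), ms in RUN_TIME_MS.items():
    print("%-20s %-16s %4d ms  ~%.1f FPS" % (model, device, ms, fps(ms)))
```

For instance, 89 ms per frame on the Raspberry Pi 3B corresponds to roughly 11 FPS, while 673 ms corresponds to about 1.5 FPS.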

Demo

First, I have tested the demo on Windows, macOS and Linux. It works on all three platforms without any code changes, so it can be used out of the box.

After downloading, the repository contains the following files (the main scripts are underlined in red):

Run v3_fastest.py

- Inference on an image: `python yolov3_fastest.py --image dog.jpg`

- Inference on a video: `python yolov3_fastest.py --video test.mp4`

- Inference from a webcam: `python yolov3_fastest.py --fourcc 0`

Run v4_tiny.py

- Inference on an image: `python v4_tiny.py --image person.jpg`

- Inference on a video: `python v4_tiny.py --video test.mp4`

- Inference from a webcam: `python v4_tiny.py --fourcc 0`

Run v5_dnn.py

- Inference on an image: `python v5_dnn.py --image person.jpg`

- Inference on a video: `python v5_dnn.py --video test.mp4`

- Inference from a webcam: `python v5_dnn.py --fourcc 0`

Run NanoDet.py

- Inference on an image: `python NanoDet.py --image person.jpg`

- Inference on a video: `python NanoDet.py --video test.mp4`

- Inference from a webcam: `python NanoDet.py --fourcc 0`
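All four scripts share the same three flags. How they parse them is not shown here, so the following is only a sketch of one way such a command line could be handled with `argparse`. Treating the three sources as mutually exclusive, and reading the value of `--fourcc` as a camera index, are assumptions based on the usage above; the function names are hypothetical.

```python
import argparse


def build_parser():
    """Command line with the three input sources used in the examples above."""
    parser = argparse.ArgumentParser(description="Run detection on one input source")
    source = parser.add_mutually_exclusive_group(required=True)  # assumption
    source.add_argument("--image", help="path to an image file, e.g. dog.jpg")
    source.add_argument("--video", help="path to a video file, e.g. test.mp4")
    source.add_argument("--fourcc", type=int, help="camera index, e.g. 0")
    return parser


def describe_source(args):
    """Return (kind, value) for whichever source was given."""
    if args.image is not None:
        return ("image", args.image)
    if args.video is not None:
        return ("video", args.video)
    # Compare with None, not truthiness: camera index 0 is a valid value.
    return ("camera", args.fourcc)


if __name__ == "__main__":
    print(describe_source(build_parser().parse_args()))
```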

Run app.py (push the stream online)

- Inference with v3-fastest: `python app.py --model v3_fastest`

- Inference with v4-tiny: `python app.py --model v4_tiny`

- Inference with v5-dnn: `python app.py --model v5_dnn`

- Inference with NanoDet: `python app.py --model NanoDet`

Please note: the device that pushes the stream and the device that views it must be on the same LAN!
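As a rough illustration of the `--model` switch and of the address a viewer on the same LAN would open, consider the sketch below. The four model names come from the commands above; everything else is assumed for the example: the helper names, the `/video_feed` route, and the use of Flask's default port 5000.

```python
import argparse

# Model names accepted on the command line, taken from the commands above.
MODEL_NAMES = ("v3_fastest", "v4_tiny", "v5_dnn", "NanoDet")


def parse_model(argv=None):
    """Return the model name selected with --model (argv=None reads sys.argv)."""
    parser = argparse.ArgumentParser(description="Push detection results as a stream")
    parser.add_argument("--model", choices=MODEL_NAMES, required=True)
    return parser.parse_args(argv).model


def stream_url(host_ip, port=5000, route="/video_feed"):
    """URL a browser on the same LAN would open; route and port are assumptions."""
    return "http://%s:%d%s" % (host_ip, port, route)
```

For example, if the Raspberry Pi has the LAN address 192.168.1.20 (a made-up address), `stream_url("192.168.1.20")` gives the address to open on another machine in the same network.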

Demo Effects

Run v3_fastest.py

- image→video→capture→push stream

Run v4_tiny.py

- image→video→capture→push stream

This still needs optimization; a quantized version will follow. To be updated…

Run v5_dnn.py

- image (473 ms per inference, Core i5-4210)→video→capture (213 ms per inference, Core i5-4210)→push stream

2021-04-26 note: Interestingly, when the v5s model is called through ONNX + dnn, inference on a single picture takes about twice as long as processing a camera frame. I have looked at this for a long time and still cannot find the cause. I would appreciate it if someone could look at the code and point out the problem. Thanks!

2021-05-01 update: I found the problem today. v5_dnn.py draws the inference time onto each frame (cv2.putText(frame, "TIME: " + str(localtime), (8, 30), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 0), 2)), and this call actually accounts for about 2/3 of the per-frame post-processing time (roughly 50-80 ms per frame). All later versions remove it and print the time in the terminal instead, which brings the inference time per frame from 190 ms down to 130 ms. That is a startling difference.
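A finding like this one is easier to reach when each stage of the per-frame loop is timed separately. The sketch below uses only the standard library; `StageTimer` and the stage names are made up for the example and are not part of the repository.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Accumulate wall-clock time per named stage of a processing loop."""

    def __init__(self):
        self.totals = defaultdict(float)  # stage name -> total seconds
        self.counts = defaultdict(int)    # stage name -> number of measurements

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            self.totals[name] += time.perf_counter() - start
            self.counts[name] += 1

    def mean_ms(self, name):
        """Average duration of one run of the stage, in milliseconds."""
        return 1000.0 * self.totals[name] / self.counts[name]


# Intended use inside the frame loop (stage bodies shown as comments):
#   with timer.stage("forward"):  # run the network on the frame
#   with timer.stage("draw"):     # boxes, labels, cv2.putText overlay
#   with timer.stage("encode"):   # JPEG encoding before pushing
```

Printing `mean_ms` for each stage after a few hundred frames shows which stage dominates. With the figures reported above, removing the overlay saved about 60 ms per frame (190 ms to 130 ms), roughly a third of the total.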

Supplement

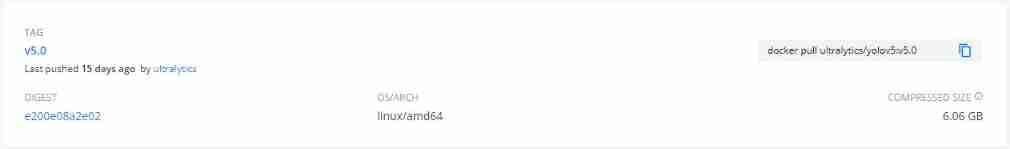

This is a DNN repository that integrates current detection algorithms. You may ask: why call the models through DNN instead of simply cloning each framework with git? When working with models, it is advisable to separate training from inference. Moreover, when you deploy models on a customer's production line, packaging the training code and its dependencies for every model (even though the customer's platform only needs inference, not training) becomes unmanageable after a few models. As an example, here are the docker images for the same version of yolov5: the complete code and dependencies take 6.06 GB, while the inference code and dependencies take 0.4 GB. The space used by the complete image is enough for about 15 inference-only images.

Thanks

- https://github.com/dog-qiuqiu/Yolo-Fastest

- https://github.com/hpc203/Yolo-Fastest-opencv-dnn

- https://github.com/miguelgrinberg/flask-video-streaming

- https://github.com/hpc203/yolov5-dnn-cpp-python

- https://github.com/hpc203/nanodet-opncv-dnn-cpp-python

Other

- Chinese tutorial: https://blog.csdn.net/weixin_45829462/article/details/115806322

You are welcome to join the deep learning discussion group: 696654483

It has many graduate students and experts from all walks of life ~

边栏推荐

- Keras深度学习实战——基于Inception v3实现性别分类

- 谈谈 SAP iRPA Studio 创建的本地项目的云端部署问题

- leetcode 866. Prime Palindrome | 866. prime palindromes

- 力扣5_876. 链表的中间结点

- Random walk reasoning and learning in large-scale knowledge base

- 阿锅鱼的大度

- The generosity of a pot fish

- C language -cmake cmakelists Txt tutorial

- The circuit is shown in the figure, r1=2k Ω, r2=2k Ω, r3=4k Ω, rf=4k Ω. Find the expression of the relationship between output and input.

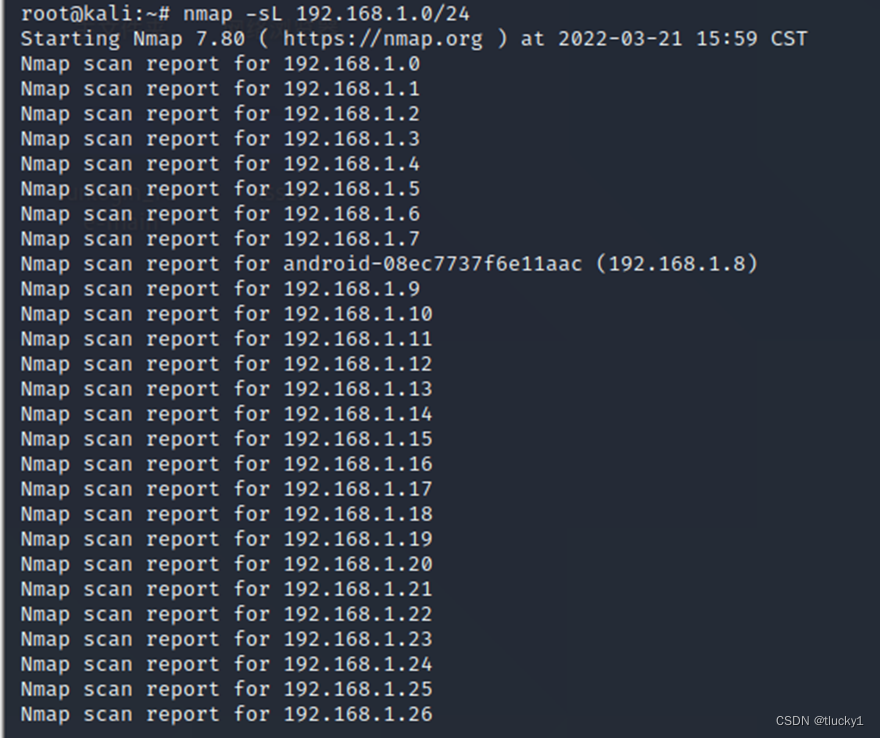

- Introduction à l'outil nmap et aux commandes communes

猜你喜欢

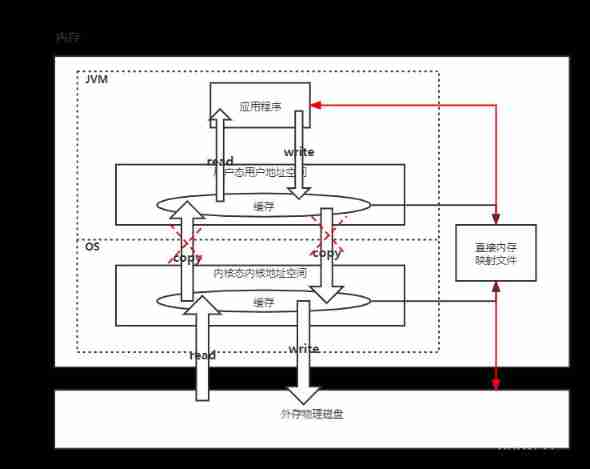

JVM memory and garbage collection-3-direct memory

Industrial Development and technological realization of vr/ar

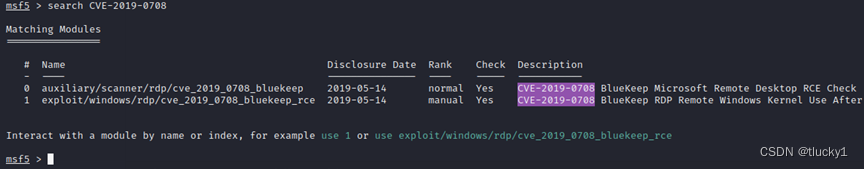

burpsuite

Alo who likes TestMan

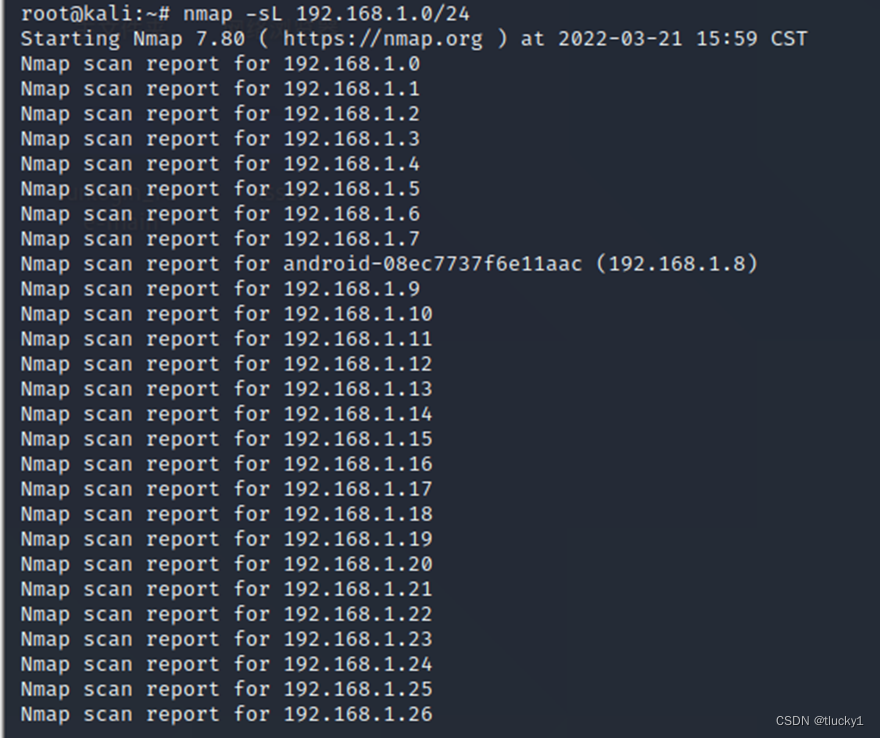

Nmap tool introduction and common commands

![[knowledge map] interpretable recommendation based on knowledge map through deep reinforcement learning](/img/62/70741e5f289fcbd9a71d1aab189be1.jpg)

[knowledge map] interpretable recommendation based on knowledge map through deep reinforcement learning

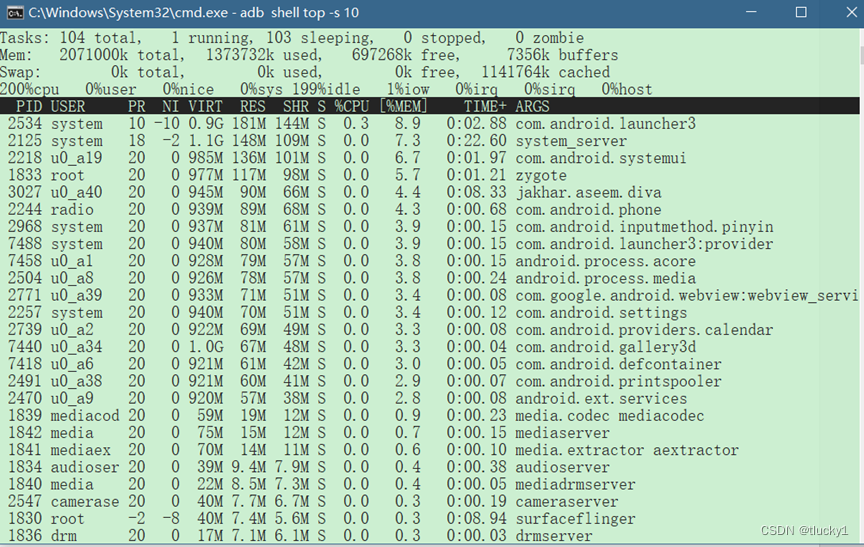

Introduction to ADB tools

Introduction à l'outil nmap et aux commandes communes

metasploit

Leetcode question brushing record | 27_ Removing Elements

随机推荐

JVM memory and garbage collection-3-runtime data area / method area

Node JS maintains a long connection

The way fish and shrimp go

leetcode 865. Smallest Subtree with all the Deepest Nodes | 865.具有所有最深节点的最小子树(树的BFS,parent反向索引map)

VIM use

VR/AR 的产业发展与技术实现

XMeter Newsletter 2022-06|企业版 v3.2.3 发布,错误日志与测试报告图表优化

leetcode 866. Prime Palindrome | 866. prime palindromes

分布式定时任务之XXL-JOB

nmap工具介紹及常用命令

XXL job of distributed timed tasks

[knowledge atlas paper] minerva: use reinforcement learning to infer paths in the knowledge base

阿南的判断

LeetCode精选200道--数组篇

【每日一题】736. Lisp 语法解析

Alo who likes TestMan

Flutter 3.0框架下的小程序运行

adb工具介绍

Nanny level tutorial: Azkaban executes jar package (with test samples and results)

Spock单元测试框架介绍及在美团优选的实践_第四章(Exception异常处理mock方式)