
Distributed Scheduled Tasks with XXL-JOB

2022-07-08 01:56:00 【Jack_ Chen --】


XXL-JOB overview

XXL-JOB is a distributed task scheduling platform. Its core design goals are rapid development, a gentle learning curve, light weight, and easy extensibility. It is open source, is already used in the production systems of a number of companies, and works out of the box.

GitHub: https://github.com/xuxueli/xxl-job

Documentation: https://www.xuxueli.com/xxl-job/

XXL-JOB Quick start

1. Configure and deploy the scheduling center

Follow the official documentation to deploy xxl-job-admin as the scheduling center.

Role: manages scheduled tasks centrally on the scheduling platform, triggers their execution, and provides the task management console.

2. Create an executor project

Role: receives scheduling requests from the scheduling center and executes the tasks.

Install xxl-job-core locally and import it as a Maven dependency, or reference its coordinates from the central repository.

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>

<dependency>
    <groupId>com.xuxueli</groupId>
    <artifactId>xxl-job-core</artifactId>
    <version>2.3.1-SNAPSHOT</version>
</dependency>

3. Configure the executor project

server.port=9001

### Scheduling center address [optional]: if the scheduling center is deployed as a cluster, separate multiple addresses with commas. The executor uses this address for heartbeat registration and task result callbacks; if empty, automatic registration is disabled.
xxl.job.admin.addresses=http://127.0.0.1:9000/xxl-job-admin

### Executor communication token [optional]: enabled when non-empty.
xxl.job.accessToken=

### Executor AppName [optional]: the name under which executor heartbeat registrations are grouped; if empty, automatic registration is disabled.
xxl.job.executor.appname=my-job

### Executor registration address [optional]: this value takes precedence as the registration address; if empty, the embedded server's "IP:PORT" is used instead. This makes it easier to support containerized executors with dynamic IPs and dynamically mapped ports.
xxl.job.executor.address=

### Executor IP [optional]: empty by default, which means the IP is detected automatically; with multiple network interfaces you can set it manually. This IP is not bound to a host and is used only for communication; the address is used for executor registration and for trigger requests from the scheduling center.
xxl.job.executor.ip=

### Executor port [optional]: if less than or equal to 0, a port is chosen automatically; the default port is 9999. When several executors run on one machine, give each a different port.
xxl.job.executor.port=8000

### Executor log file path [optional]: the process needs read and write permission on this path; if empty, the default path is used.
xxl.job.executor.logpath=D:/logs

### Executor log retention in days [optional]: expired logs are cleaned up automatically. Takes effect only when the value is at least 3; otherwise (for example -1) automatic cleanup is disabled.
xxl.job.executor.logretentiondays=30
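The fallback rules described in the comments above can be restated as a small sketch. This is not XXL-JOB's code: `ExecutorAddressSketch` and its methods are hypothetical names, automatic IP and port detection are left out, and the real library may format the registration address differently (for example as a full URL).

```java
final class ExecutorAddressSketch {

    /** Hypothetical helper: an explicit address wins, otherwise fall back to IP:PORT. */
    static String registryAddress(String address, String ip, int port) {
        if (address != null && !address.trim().isEmpty()) {
            return address.trim();
        }
        return ip + ":" + port;
    }

    /** Per the comment above, log cleanup is only active for a retention of at least 3 days. */
    static boolean logCleanupEnabled(int logRetentionDays) {
        return logRetentionDays >= 3;
    }

    public static void main(String[] args) {
        // The IP and the explicit address below are made-up example values.
        System.out.println(registryAddress("", "10.0.0.5", 8000));
        System.out.println(registryAddress("http://job.example.com:8000/", "10.0.0.5", 8000));
        System.out.println(logCleanupEnabled(30) + " " + logCleanupEnabled(-1));
    }
}
```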

4. Configure the executor component

import com.xxl.job.core.executor.impl.XxlJobSpringExecutor;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class MyJobConfig {

    private static final Logger logger = LoggerFactory.getLogger(MyJobConfig.class);

    @Value("${xxl.job.admin.addresses}")
    private String adminAddresses;

    @Value("${xxl.job.accessToken}")
    private String accessToken;

    @Value("${xxl.job.executor.appname}")
    private String appname;

    @Value("${xxl.job.executor.address}")
    private String address;

    @Value("${xxl.job.executor.ip}")
    private String ip;

    @Value("${xxl.job.executor.port}")
    private int port;

    @Value("${xxl.job.executor.logpath}")
    private String logPath;

    @Value("${xxl.job.executor.logretentiondays}")
    private int logRetentionDays;

    // Builds the executor from the properties configured in step 3.
    @Bean
    public XxlJobSpringExecutor myJobExecutor() {
        logger.info("config init start .....");
        XxlJobSpringExecutor xxlJobSpringExecutor = new XxlJobSpringExecutor();
        xxlJobSpringExecutor.setAdminAddresses(adminAddresses);
        xxlJobSpringExecutor.setAppname(appname);
        xxlJobSpringExecutor.setAddress(address);
        xxlJobSpringExecutor.setIp(ip);
        xxlJobSpringExecutor.setPort(port);
        xxlJobSpringExecutor.setAccessToken(accessToken);
        xxlJobSpringExecutor.setLogPath(logPath);
        xxlJobSpringExecutor.setLogRetentionDays(logRetentionDays);
        logger.info("config init end .....");
        return xxlJobSpringExecutor;
    }
}

5. Develop the job methods

(1) Annotation configuration: annotate the job method with "@XxlJob(value = "custom JobHandler name", init = "JobHandler init method", destroy = "JobHandler destroy method")". The annotation's value must match the JobHandler property of the task created in the scheduling center.

(2) Execution log: print the execution log through "XxlJobHelper.log".

(3) Task result: the result defaults to "success" and does not need to be set explicitly. If needed, for example to mark the task as failed, set the result yourself through "XxlJobHelper.handleFail/handleSuccess".

import com.xxl.job.core.context.XxlJobHelper;
import com.xxl.job.core.handler.annotation.XxlJob;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.stereotype.Component;

import java.util.concurrent.TimeUnit;

@Component
public class MyJobHandler {

    private static final Logger logger = LoggerFactory.getLogger(MyJobHandler.class);

    /** 1. Simple task example (BEAN mode). */
    @XxlJob(value = "demoJobHandler", init = "init", destroy = "destroy")
    public void demoJobHandler() throws Exception {
        XxlJobHelper.log("demoJobHandler start ......");
        logger.info("demoJobHandler start ......");
        XxlJobHelper.log("XXL-JOB, Hello World.");
        logger.info("XXL-JOB, Hello World.");

        for (int i = 0; i < 5; i++) {
            XxlJobHelper.log("beat at:" + i);
            logger.info("beat at:" + i);
            TimeUnit.SECONDS.sleep(1);
        }

        XxlJobHelper.log("demoJobHandler end ......");
        logger.info("demoJobHandler end ......");

        // The result defaults to success; this call only makes it explicit.
        XxlJobHelper.handleSuccess();
        //XxlJobHelper.handleFail();
    }

    /** 2. Sharding broadcast task. */
    @XxlJob("shardingJobHandler")
    public void shardingJobHandler() throws Exception {
        // Sharding parameters passed in by the scheduling center
        int shardIndex = XxlJobHelper.getShardIndex();
        int shardTotal = XxlJobHelper.getShardTotal();
        XxlJobHelper.log("Sharding parameters: current shard index = {}, total shards = {}", shardIndex, shardTotal);
        logger.info("Sharding parameters: current shard index = {}, total shards = {}", shardIndex, shardTotal);

        // Business logic: only the shard assigned to this executor is processed
        for (int i = 0; i < shardTotal; i++) {
            if (i == shardIndex) {
                XxlJobHelper.log("Shard {}: hit, start processing", i);
                logger.info("Shard {}: hit, start processing", i);
            } else {
                XxlJobHelper.log("Shard {}: ignored", i);
                logger.info("Shard {}: ignored", i);
            }
        }
    }

    // Referenced by init = "init" on demoJobHandler
    public void init() {
        logger.info("init");
    }

    // Referenced by destroy = "destroy" on demoJobHandler
    public void destroy() {
        logger.info("destroy");
    }
}

6. Project structure

Using the scheduling center

1. Create an executor

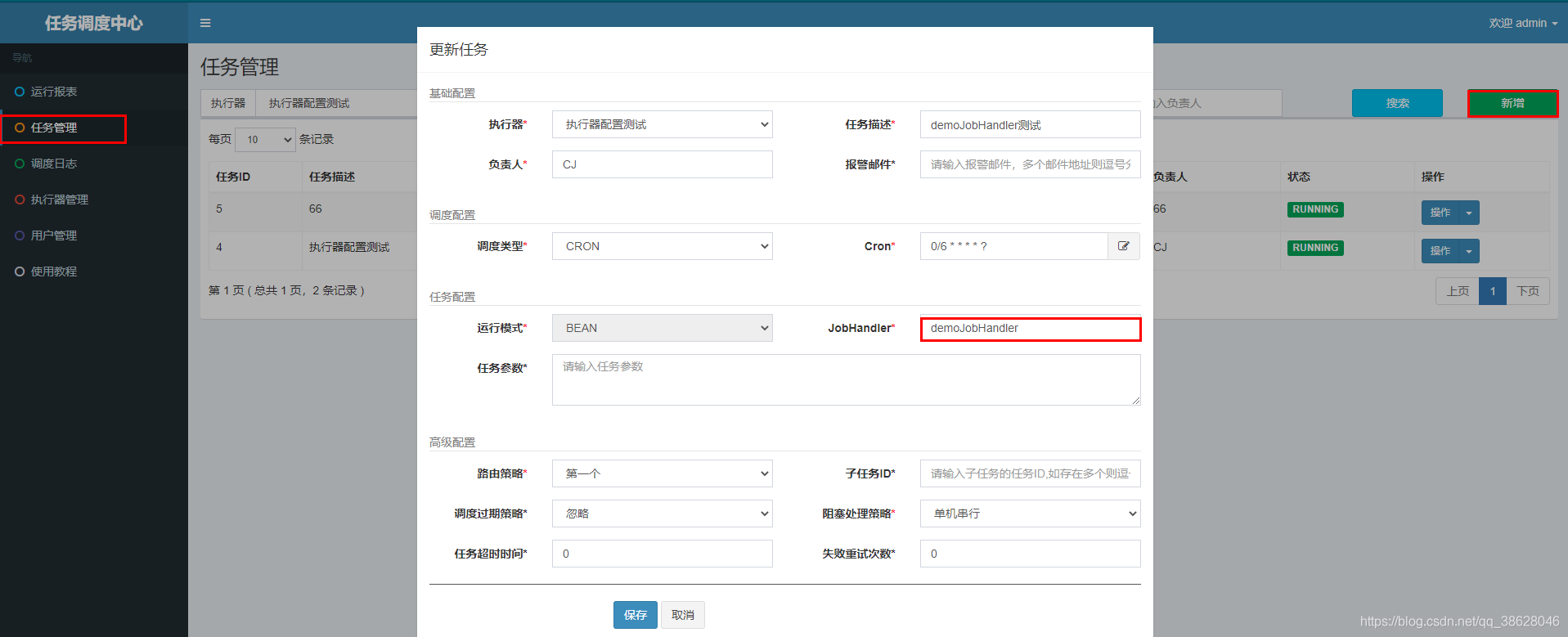

2. Create a scheduling task

Configure the parameters of the new task: select "BEAN mode" as the run mode, and set the JobHandler property to the value defined in the job method's "@XxlJob" annotation.

3. Check the log

4. Prepare the distributed environment

Deploy the same code as two instances, 8080 and 8081.

5. Round-robin routing strategy

Check the log
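With round-robin routing, consecutive triggers should alternate between the two instances in the log. The selection logic can be sketched as follows; this is a minimal illustration, not XXL-JOB's implementation, and `RoundRobinSketch` and the two addresses are assumptions made for the example.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

final class RoundRobinSketch {

    // Number of picks made so far; shared by all triggers of the same task.
    private final AtomicInteger counter = new AtomicInteger();

    /** Returns the next executor address in turn. */
    String pick(List<String> executors) {
        if (executors.isEmpty()) {
            throw new IllegalArgumentException("no executors online");
        }
        // floorMod keeps the index non-negative even after the counter overflows.
        int index = Math.floorMod(counter.getAndIncrement(), executors.size());
        return executors.get(index);
    }

    public static void main(String[] args) {
        RoundRobinSketch router = new RoundRobinSketch();
        // Hypothetical addresses standing in for the two deployed instances.
        List<String> executors = Arrays.asList("127.0.0.1:8080", "127.0.0.1:8081");
        for (int i = 0; i < 4; i++) {
            System.out.println(router.pick(executors));
        }
    }
}
```

Running `main` prints the two addresses alternately, starting with the first one in the list.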

6. Sharding broadcast

2021-04-26 14:01:48.240 INFO 71112 --- [ Thread-11] cn.ybzy.demo.jobhandler.MyJobHandler : Sharding parameters: current shard index = 0, total shards = 2

2021-04-26 14:01:48.241 INFO 71112 --- [ Thread-11] cn.ybzy.demo.jobhandler.MyJobHandler : Shard 0: hit, start processing

2021-04-26 14:01:48.241 INFO 71112 --- [ Thread-11] cn.ybzy.demo.jobhandler.MyJobHandler : Shard 1: ignored

2021-04-26 14:02:03.445 INFO 91184 --- [ Thread-9] cn.ybzy.demo.jobhandler.MyJobHandler : Sharding parameters: current shard index = 1, total shards = 2

2021-04-26 14:02:03.445 INFO 91184 --- [ Thread-9] cn.ybzy.demo.jobhandler.MyJobHandler : Shard 0: ignored

2021-04-26 14:02:03.446 INFO 91184 --- [ Thread-9] cn.ybzy.demo.jobhandler.MyJobHandler : Shard 1: hit, start processing
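In a real job, the shard index and shard total are usually used to split a data set so that each executor handles a disjoint part. A common approach is to take the record ID modulo the shard total. The sketch below assumes numeric IDs; `ShardingSketch` and `idsForShard` are hypothetical names, and in a handler the two parameters would come from XxlJobHelper.getShardIndex() and XxlJobHelper.getShardTotal().

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class ShardingSketch {

    /** Keeps only the IDs that belong to the given shard (id mod shardTotal == shardIndex). */
    static List<Long> idsForShard(List<Long> allIds, int shardIndex, int shardTotal) {
        List<Long> mine = new ArrayList<>();
        for (long id : allIds) {
            if (Math.floorMod(id, (long) shardTotal) == shardIndex) {
                mine.add(id);
            }
        }
        return mine;
    }

    public static void main(String[] args) {
        List<Long> ids = Arrays.asList(1L, 2L, 3L, 4L, 5L, 6L);
        System.out.println(idsForShard(ids, 0, 2)); // [2, 4, 6]
        System.out.println(idsForShard(ids, 1, 2)); // [1, 3, 5]
    }
}
```

Every ID lands in exactly one shard, so the two executors in the log above would process the whole data set between them without overlap.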

7. Failover
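Under the failover routing strategy, executors are checked for a heartbeat in order, and the first one that responds becomes the target. A minimal sketch of that selection follows; the heartbeat check is passed in as a predicate because the real check is a network call, and `FailoverSketch` and the addresses are assumptions for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.function.Predicate;

final class FailoverSketch {

    /** Returns the first executor whose heartbeat check passes, if any. */
    static Optional<String> pick(List<String> executors, Predicate<String> heartbeatOk) {
        for (String address : executors) {
            if (heartbeatOk.test(address)) {
                return Optional.of(address);
            }
        }
        return Optional.empty(); // no executor is reachable
    }

    public static void main(String[] args) {
        List<String> executors = Arrays.asList("127.0.0.1:8080", "127.0.0.1:8081");
        // Pretend the first instance is down.
        Predicate<String> heartbeatOk = address -> !address.endsWith(":8080");
        System.out.println(pick(executors, heartbeatOk).orElse("none")); // 127.0.0.1:8081
    }
}
```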

Configuration property details

Basic configuration:

- Executor: the executor the task is bound to. When the task is triggered, the registered executors are discovered automatically, which gives automatic task discovery; it also makes it easy to group tasks. Every task must be bound to an executor, which can be configured under "Executor Management".

- Task description: a description of the task, for easier management.

- Owner: the person responsible for the task.

- Alarm email: the email addresses notified when scheduling the task fails. Multiple addresses are supported, separated by commas.

Trigger configuration:

- Schedule type:

  - None: this type never triggers scheduling on its own.

  - CRON: this type triggers scheduling according to a CRON expression.

  - Fixed rate: this type triggers scheduling periodically at a fixed interval.

  - Fixed delay: this type triggers scheduling after a fixed delay. The delay is measured from the end of the previous run; once it has elapsed, the next run is triggered.

- CRON: the Cron expression that triggers the task.

- Fixed rate: the fixed-rate interval, in seconds.

- Fixed delay: the fixed-delay interval, in seconds.
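The difference between fixed rate and fixed delay is easiest to see by computing the next trigger time. The sketch below only restates the two definitions above; `TriggerTimeSketch` and its method names are hypothetical and not part of XXL-JOB.

```java
import java.time.Duration;
import java.time.Instant;

final class TriggerTimeSketch {

    /** Fixed rate: the interval is counted from the previous trigger time. */
    static Instant nextFixedRate(Instant lastTrigger, Duration interval) {
        return lastTrigger.plus(interval);
    }

    /** Fixed delay: the delay is counted from the end of the previous run. */
    static Instant nextFixedDelay(Instant lastFinished, Duration delay) {
        return lastFinished.plus(delay);
    }

    public static void main(String[] args) {
        Instant triggered = Instant.parse("2022-07-08T10:00:00Z");
        Instant finished = triggered.plusSeconds(20); // the run took 20 seconds
        Duration thirtySeconds = Duration.ofSeconds(30);

        System.out.println(nextFixedRate(triggered, thirtySeconds)); // 2022-07-08T10:00:30Z
        System.out.println(nextFixedDelay(finished, thirtySeconds)); // 2022-07-08T10:00:50Z
    }
}
```

With a 30-second setting and a run that takes 20 seconds, fixed rate fires every 30 seconds, while fixed delay fires every 50 seconds.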

Task configuration:

- Run mode:

  - BEAN mode: the task is maintained on the executor side as a JobHandler, and is matched to the task in the executor through the "JobHandler" property.

  - GLUE mode (Java): the task is maintained in the scheduling center as source code. It is actually a piece of Java class code that extends IJobHandler, maintained as "groovy" source. It runs inside the executor project and can use @Resource/@Autowired to inject other services from the executor.

  - GLUE mode (Shell): the task is maintained in the scheduling center as source code; it is actually a "shell" script.

  - GLUE mode (Python): the task is maintained in the scheduling center as source code; it is actually a "python" script.

  - GLUE mode (PHP): the task is maintained in the scheduling center as source code; it is actually a "php" script.

  - GLUE mode (NodeJS): the task is maintained in the scheduling center as source code; it is actually a "nodejs" script.

  - GLUE mode (PowerShell): the task is maintained in the scheduling center as source code; it is actually a "PowerShell" script.

- JobHandler: takes effect when the run mode is "BEAN mode"; corresponds to the value of the "@XxlJob" annotation on the job method developed in the executor.

- Execution parameter: the parameter the task needs at run time.
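The execution parameter reaches the job as a single string (in a handler it can be read with XxlJobHelper.getJobParam()), so any structure inside it is up to the job. The sketch below assumes a comma-separated "key=value" convention, which is only one possible choice; `JobParamSketch` is a hypothetical helper.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class JobParamSketch {

    /** Parses "k1=v1,k2=v2" into a map; pairs without '=' are skipped. */
    static Map<String, String> parse(String jobParam) {
        Map<String, String> params = new LinkedHashMap<>();
        if (jobParam == null || jobParam.trim().isEmpty()) {
            return params;
        }
        for (String pair : jobParam.split(",")) {
            String[] keyValue = pair.split("=", 2);
            if (keyValue.length == 2) {
                params.put(keyValue[0].trim(), keyValue[1].trim());
            }
        }
        return params;
    }

    public static void main(String[] args) {
        // Example parameter string as it might be typed into the task form.
        System.out.println(parse("date=2022-07-08, limit=100")); // {date=2022-07-08, limit=100}
    }
}
```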

Advanced configuration:

- Routing strategy: when executors are deployed as a cluster, a rich set of routing strategies is available:

  - FIRST: always selects the first machine.

  - LAST: always selects the last machine.

  - ROUND (round robin): selects the online machines in turn.

  - RANDOM: selects an online machine at random.

  - CONSISTENT_HASH (consistent hash): each task is pinned to one machine by a hash algorithm, and all tasks are spread evenly across the machines.

  - LEAST_FREQUENTLY_USED: the least frequently used machine is selected first.

  - LEAST_RECENTLY_USED: the machine that has gone unused the longest is selected first.

  - FAILOVER: heartbeats are checked in order, and the first machine that passes the check is selected as the target executor and receives the schedule.

  - BUSYOVER (busy transfer): idle checks are run in order, and the first machine found idle is selected as the target executor and receives the schedule.

  - SHARDING_BROADCAST: the trigger is broadcast to every machine in the cluster so that each runs the task once, and the system passes the sharding parameters automatically; sharded tasks can be developed from these parameters.

- Child tasks: every task has a unique task ID (available from the task list). When a task finishes successfully, it actively triggers one run of the task identified by the configured child task ID.

- Schedule expiration policy:

  - Ignore: after a schedule has been missed, the expired trigger is ignored and the next trigger time is recalculated from the current time.

  - Execute immediately: after a schedule has been missed, the task runs once immediately and the next trigger time is recalculated from the current time.

- Blocking strategy: how requests are handled when schedules arrive too densely for the executor to keep up:

  - Single-machine serial (default): scheduling requests that reach the executor enter a FIFO queue and run serially.

  - Discard later schedules: if the executor already has a run of the task in progress when a request arrives, the new request is discarded and marked as failed.

  - Cover earlier schedules: if the executor already has a run of the task in progress when a request arrives, the running task is terminated and the queue cleared, and then the new request runs.

- Task timeout: a custom timeout is supported; the task is interrupted when it times out.

- Failure retry count: a custom retry count is supported; when the task fails, it is retried automatically up to the configured number of times.
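The three blocking strategies differ only in what happens to a request that arrives while another run is still in progress. The sketch below models that decision without threads so it stays easy to follow; it is an illustration of the rules above rather than XXL-JOB's executor code, and `BlockingStrategySketch` and its names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Deque;

final class BlockingStrategySketch {

    enum Strategy { SERIAL, DISCARD_LATER, COVER_EARLY }

    private String running;                              // trigger currently executing, or null
    private final Deque<String> queue = new ArrayDeque<>();

    /** Accepts a trigger and reports what happened to it. */
    String submit(String trigger, Strategy strategy) {
        if (running == null) {
            running = trigger;                           // executor is idle: run at once
            return "RUN";
        }
        switch (strategy) {
            case DISCARD_LATER:
                return "DISCARDED";                      // new request fails, current run continues
            case COVER_EARLY:
                queue.clear();                           // drop everything that was waiting
                running = trigger;                       // stands in for terminating the old run
                return "REPLACED";
            default:
                queue.addLast(trigger);                  // SERIAL: wait in FIFO order
                return "QUEUED";
        }
    }

    /** Marks the current run as finished and starts the next queued trigger, if any. */
    void finishCurrent() {
        running = queue.pollFirst();
    }

    String running() {
        return running;
    }

    public static void main(String[] args) {
        BlockingStrategySketch executor = new BlockingStrategySketch();
        System.out.println(executor.submit("t1", Strategy.SERIAL));        // RUN
        System.out.println(executor.submit("t2", Strategy.SERIAL));        // QUEUED
        System.out.println(executor.submit("t3", Strategy.DISCARD_LATER)); // DISCARDED
        System.out.println(executor.submit("t4", Strategy.COVER_EARLY));   // REPLACED
    }
}
```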

边栏推荐

- Mysql database (2)

- Capability contribution three solutions of gbase were selected into the "financial information innovation ecological laboratory - financial information innovation solutions (the first batch)"

- 从Starfish OS持续对SFO的通缩消耗,长远看SFO的价值

- How mysql/mariadb generates core files

- Dataworks duty table

- I don't know. The real interest rate of Huabai installment is so high

- nacos-微服务网关Gateway组件 +Swagger2接口生成

- PHP calculates personal income tax

- PHP to get information such as audio duration

- Break algorithm --- map

猜你喜欢

Why does the updated DNS record not take effect?

用户之声 | 冬去春来,静待花开 ——浅谈GBase 8a学习感悟

分布式定时任务之XXL-JOB

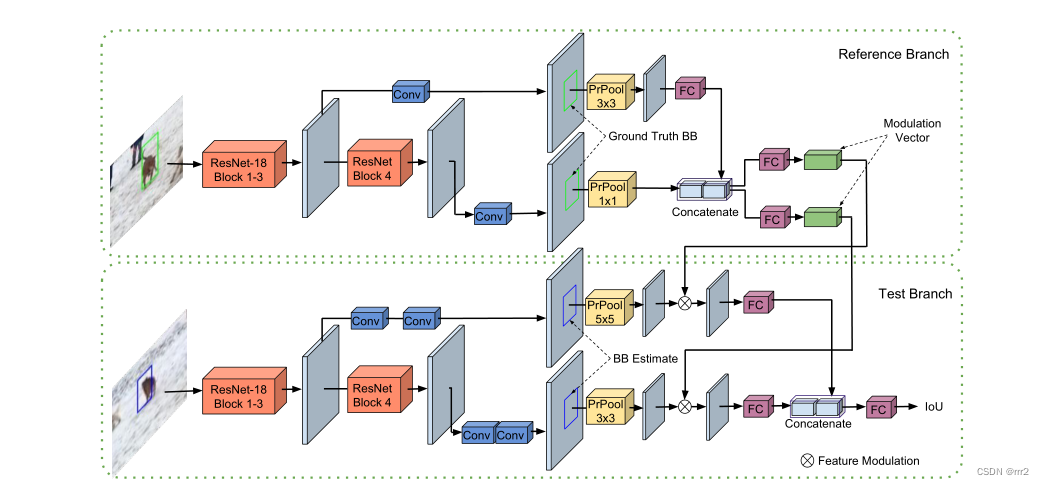

【目标跟踪】|atom

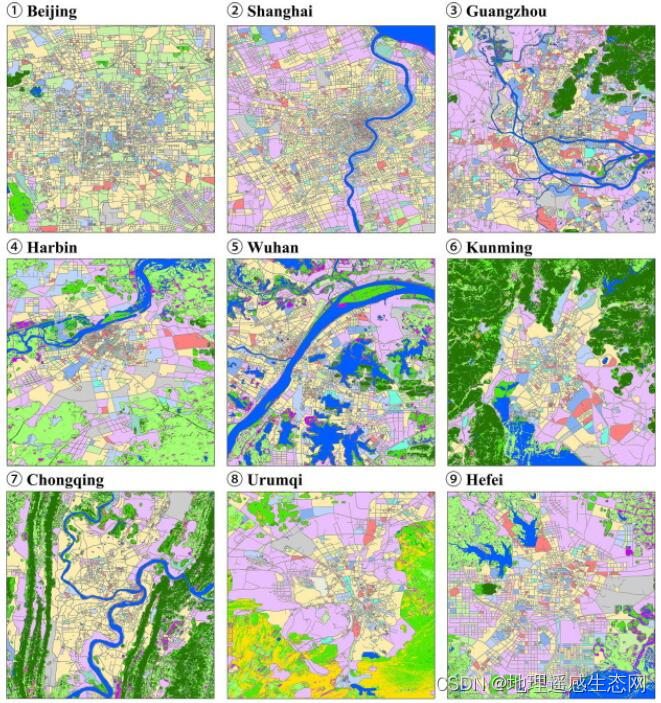

Urban land use distribution data / urban functional zoning distribution data / urban POI points of interest / vegetation type distribution

静态路由配置全面详解,静态路由快速入门指南

Tapdata 的 2.0 版 ,开源的 Live Data Platform 现已发布

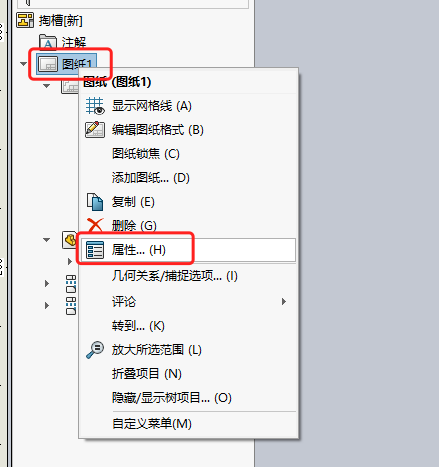

【SolidWorks】修改工程图格式

液压旋转接头的使用事项

How to make enterprise recruitment QR code?

随机推荐

2022国内十大工业级三维视觉引导企业一览

Redission源码解析

Redisson分布式锁解锁异常

进程和线程的退出

微信小程序uniapp页面无法跳转:“navigateTo:fail can not navigateTo a tabbar page“

Kafka connect synchronizes Kafka data to MySQL

MySQL数据库(2)

Voice of users | winter goes and spring comes, waiting for flowers to bloom -- on gbase 8A learning comprehension

uniapp一键复制功能效果demo(整理)

DataWorks值班表

ANSI / nema- mw- 1000-2020 magnetic iron wire standard Latest original

How to realize batch control? MES system gives you the answer

如何制作企业招聘二维码?

C语言-模块化-Clion(静态库,动态库)使用

《ClickHouse原理解析与应用实践》读书笔记(7)

Usage of hydraulic rotary joint

Exit of processes and threads

批次管控如何实现?MES系统给您答案

The function of carbon brush slip ring in generator

子矩阵的和