当前位置:网站首页>TensorFlow2 study notes: 7. Optimizer

TensorFlow2 study notes: 7. Optimizer

2022-08-04 06:05:00 【Live up to [email protected]】

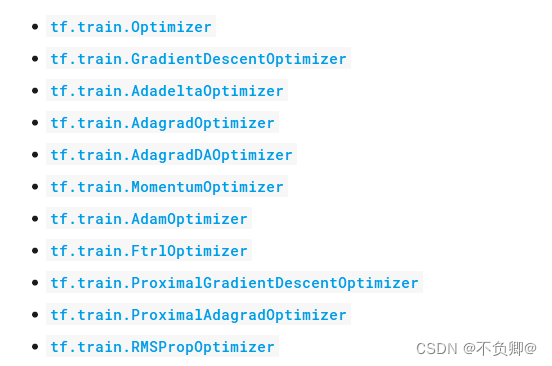

The following are the types of optimizers in the official TensorFlow documentation:

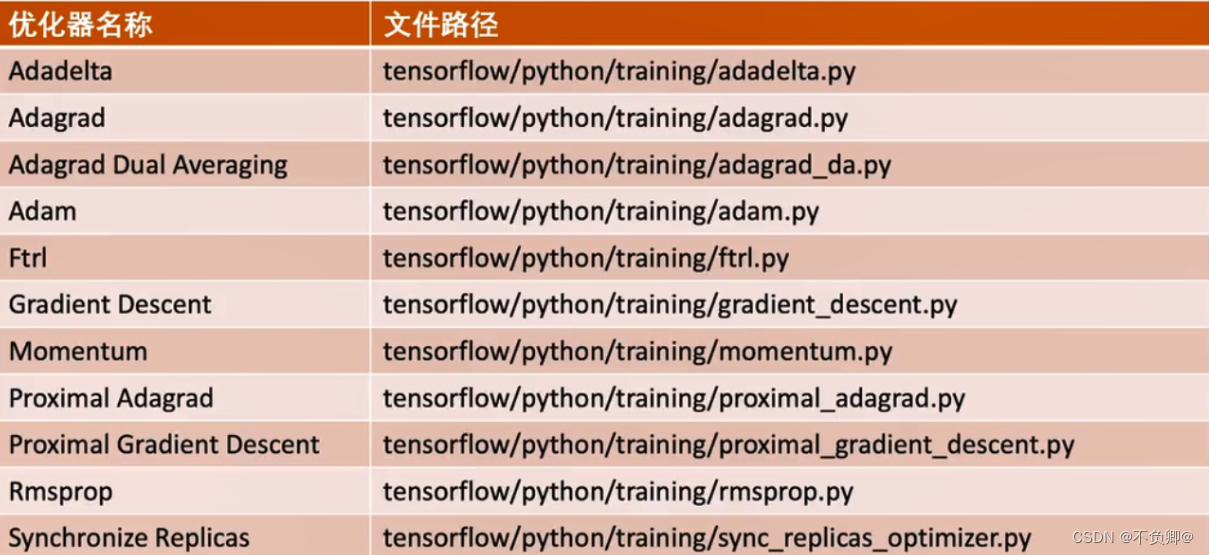

tensorflow built-in optimizer path:

tf.train.GradientDescentOptimizer

This class is an optimizer that implements the gradient descent algorithm.

tf.train.AdadeltaOptimizer

Implemented the optimizer of the Adadelta algorithm, which does not require manual tuning of the learning rate, has strong anti-noise ability, and can choose different model structures.Adadelta is an extension to Adagrad.Adadelta only accumulates items of a fixed size, and does not store these items directly, just calculates the corresponding average.

tf.train.AdagradOptimizer

An optimizer that implements the AdagradOptimizer algorithm, Adagrad will accumulate all previous squared gradients.It is used to deal with large sparse matrices. Adagrad can automatically change the learning rate. It just needs to set a global learning rate, but this is not the actual learning rate. The actual rate is inversely proportional to the square root of the sum of the previous parameters.of.This allows each parameter to have its own learning rate.

tf.train.MomentumOptimizer

The optimizer that implements the MomentumOptimizer algorithm. If the gradient maintains one direction for a long time, the parameter update range will be increased; on the contrary, if the sign flip occurs frequently, it means that this is to reduce the parameter update.Amplitude.This process can be understood as dropping a ball from the top of the mountain, which will slide faster and faster.

tf.train.RMSPropOptimizer

An optimizer that implements the RMSPropOptimizer algorithm, which is similar to Adam, but uses a different sliding average.

tf.train.AdamOptimizer

The optimizer that implements the AdamOptimizer algorithm, which combines the Momentum and RMSProp methods, and retains a learning rate and an exponentially decaying mean based on past gradient information for each parameter.

How to choose:

If the data is sparse, use adaptive methods, i.e. Adagrad, Adadelta, RMSprop, Adam.

RMSprop, Adadelta, Adam are similar in many cases.

Adam adds bias-correction and momentum to RMSprop.

As the gradient becomes sparse, Adam performs better than RMSprop.

Overall, Adam is the best choice.

SGD is used in many papers without momentum, etc.Although SGD can reach a minimum value, it takes longer than other algorithms and may be trapped in a saddle point.

If you need faster convergence, or if you need to train deeper and more complex neural networks, you need to use an adaptive algorithm.

版权声明

本文为[Live up to [email protected]]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/216/202208040525327568.html

边栏推荐

猜你喜欢

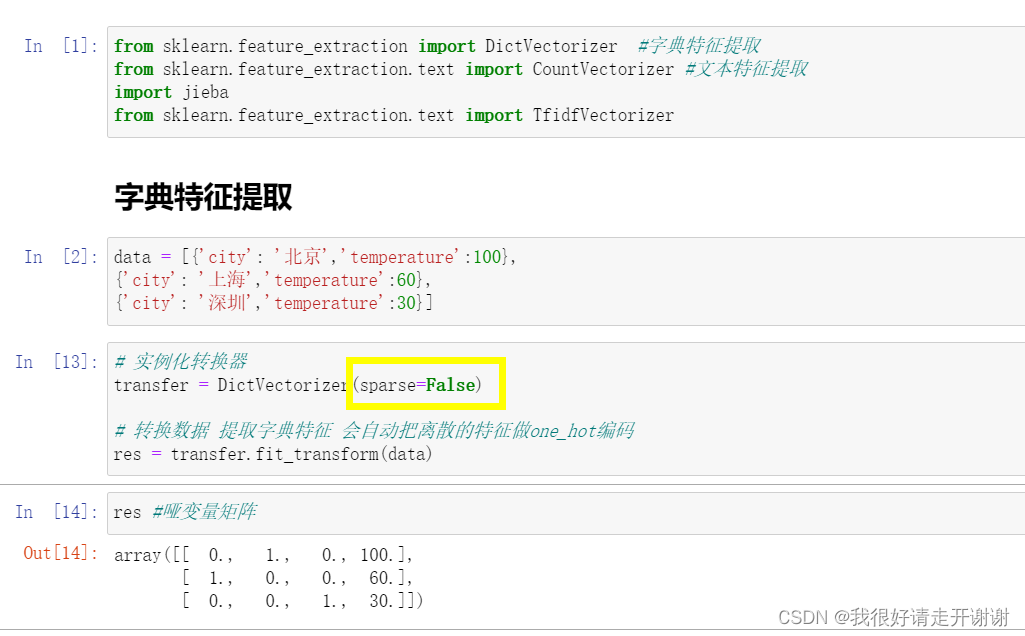

Dictionary feature extraction, text feature extraction.

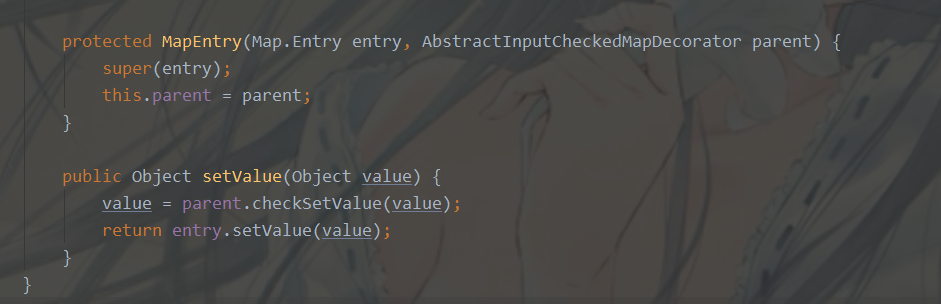

Commons Collections1

判断字符串是否有子字符串重复出现

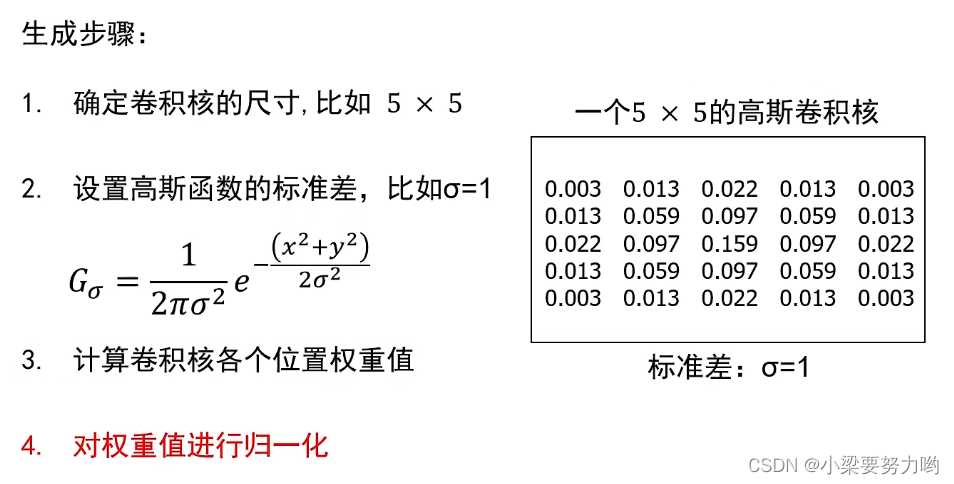

【CV-Learning】卷积神经网络预备知识

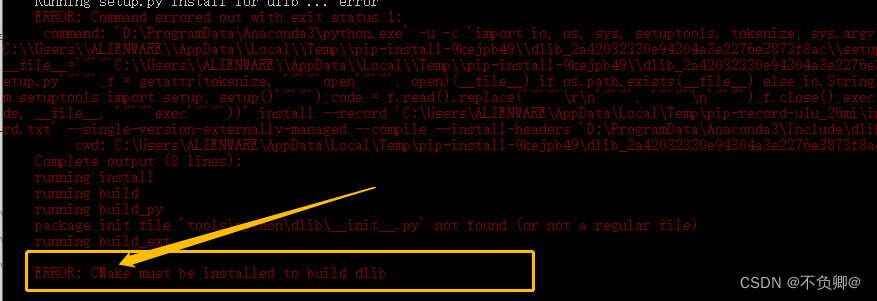

安装dlib踩坑记录,报错:WARNING: pip is configured with locations that require TLS/SSL

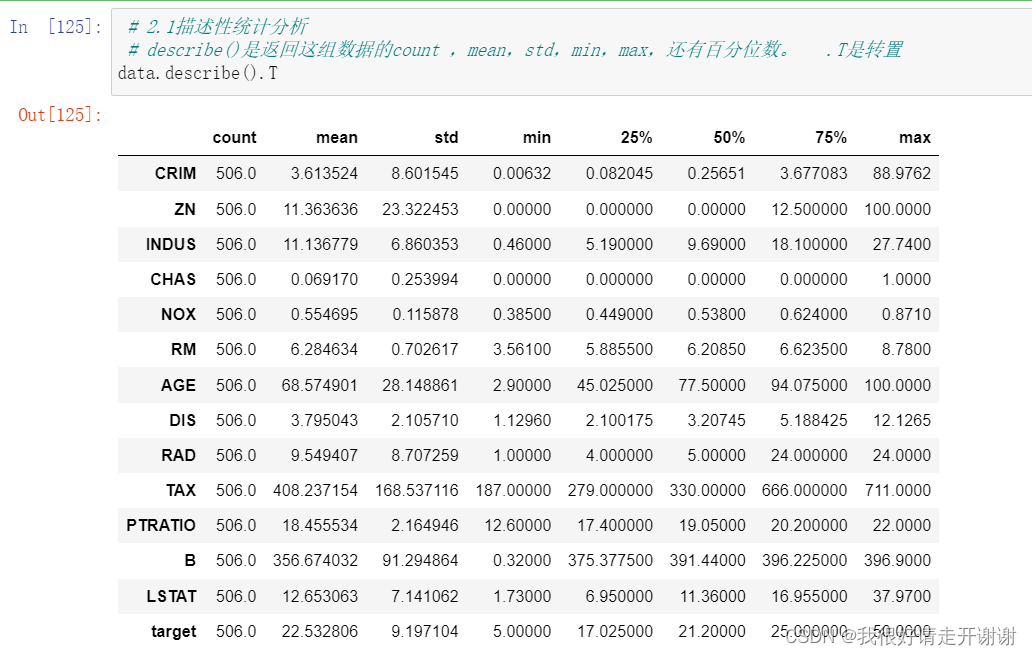

线性回归02---波士顿房价预测

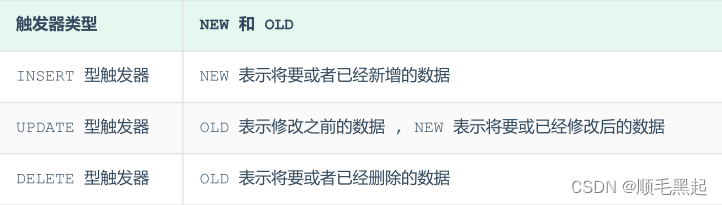

视图、存储过程、触发器

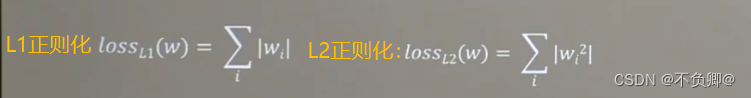

TensorFlow2学习笔记:6、过拟合和欠拟合,及其缓解方案

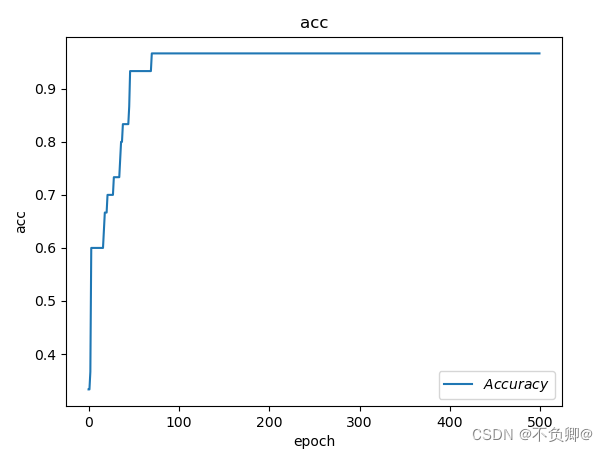

TensorFlow2 study notes: 4. The first neural network model, iris classification

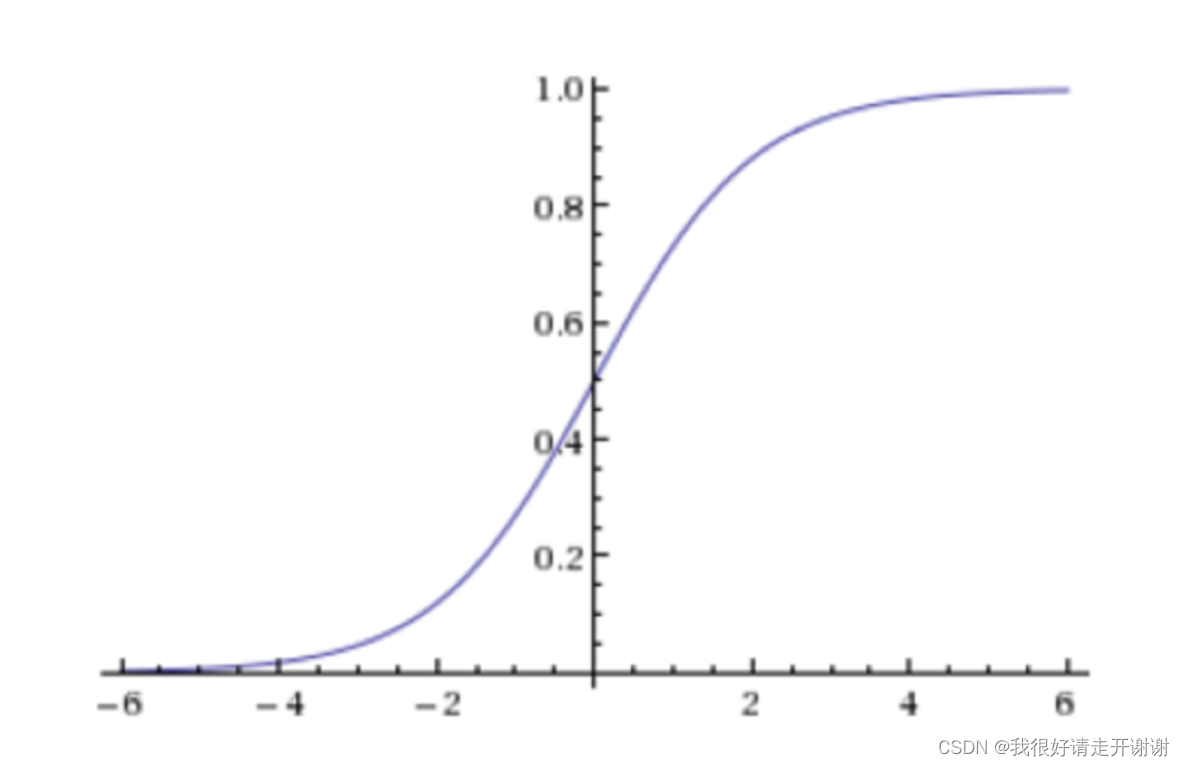

逻辑回归---简介、API简介、案例:癌症分类预测、分类评估法以及ROC曲线和AUC指标

随机推荐

对象存储-分布式文件系统-MinIO-2:服务端部署

剑指 Offer 2022/7/9

数据库根据提纲复习

(十六)图的基本操作---两种遍历

npm install dependency error npm ERR! code ENOTFOUNDnpm ERR! syscall getaddrinfonpm ERR! errno ENOTFOUND

Redis持久化方式RDB和AOF详解

postgres 递归查询

NFT市场可二开开源系统

(十三)二叉排序树

SQL练习 2022/6/30

flink-sql所有语法详解

Upload靶场搭建&&第一二关

[Deep Learning 21 Days Learning Challenge] 1. My handwriting was successfully recognized by the model - CNN implements mnist handwritten digit recognition model study notes

win云服务器搭建个人博客失败记录(wordpress,wamp)

【CV-Learning】目标检测&实例分割

读研碎碎念

lmxcms1.4

二月、三月校招面试复盘总结(二)

智能合约安全——私有数据访问

Kubernetes基础入门(完整版)