Technical Insights | Open-Source Reproduction of AlphaFold/RoseTTAFold (2): AlphaFold Workflow Analysis and Training Construction

2022-07-03 07:35:00 【MindSpore】

After AlphaFold was open-sourced (unless otherwise noted, "AlphaFold" below means AlphaFold2), many research teams have been analyzing it, reproducing it, and trying to improve it further. Compared with running AlphaFold inference, training AlphaFold is much more complicated. The main challenges are:

1. Only AlphaFold's inference code is open source. The training code has not been released, although the deep network architecture and the main training parameters are given;

2. Building the training dataset is essential for good training results but very time-consuming. It involves MSA searches for the original training sequences, MSA searches for the extended set of training samples generated after the model converges, and template searches. Each MSA or template search can take anywhere from tens of minutes to hours, so the computational cost is very high.
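To get a feel for that cost, here is a back-of-the-envelope sketch. It assumes roughly 520,000 sequences (the ~170,000 PDB-derived plus ~350,000 distillation samples mentioned in the data processing section below) and a hypothetical 0.5 hours of search time per sequence on one worker. Both the per-sequence time and the worker counts are assumptions, not measured figures.

```python
def search_wall_clock_days(num_sequences: int, hours_per_sequence: float, num_workers: int) -> float:
    """Rough wall-clock days for MSA + template searches, assuming perfect parallelism."""
    if num_workers <= 0:
        raise ValueError("num_workers must be positive")
    total_cpu_hours = num_sequences * hours_per_sequence
    return total_cpu_hours / num_workers / 24.0


# ~170,000 PDB-derived + ~350,000 distillation sequences (figures from the article)
NUM_SEQUENCES = 170_000 + 350_000
# Hypothetical: 0.5 h of search per sequence ("tens of minutes to hours")
HOURS_PER_SEQUENCE = 0.5

for workers in (1, 100, 1000):
    days = search_wall_clock_days(NUM_SEQUENCES, HOURS_PER_SEQUENCE, workers)
    print(f"{workers:>5} workers: {days:,.1f} days")
```

Under these assumptions the searches total 260,000 CPU hours, roughly 29.7 years on a single worker, which is why reusing precomputed MSAs or public predictions is attractive.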

We start from an analysis of the open-source inference code and build a typical training code from it:

01

The overall structure

AlphaFold consists of three parts:

1. Data: processing of protein multiple sequence alignments (MSAs) and template data;

2. Model: the deep learning network;

3. Relax: post-processing (refinement) of the predicted structures.

AlphaFold is implemented in JAX. The following table lists the main APIs and functions that AlphaFold uses from JAX and from the neural-network (NN) libraries built on top of JAX. The Data and Relax parts do not involve AI; the following table briefly lists their datasets and the corresponding processing tools.

If you are not familiar with JAX, the following figure shows an example of how the modules relate to each other when building a simple algorithm application on JAX:

Before building the training code, we need to understand AlphaFold's whole workflow. Below are three figures, the three main diagrams of the AI-related part. To make them easier to follow, we added more annotations than the original figures have: the meaning of abbreviations, the data flowing between the main modules, and the code that implements Recycling.

02

Data processing

Training data processing can be built by extending the data processing used for inference. The datasets are:

Raw data:

genetics:

UniRef90: v2020_01 #JackHMMER

MGnify: v2018_12 #JackHMMER

Uniclust30: v2018_08 #HHblits

BFD: only version available #HHblits

templates:

PDB70: (downloaded 2020-05-13) #HHsearch

PDB: (downloaded 2020-05-14) #Kalign (MSA)

Derived data:

Following the tricks in the paper, the sequence-coordinate data pairs come not only from cleaning the more than 170,000 original PDB entries, but also from about 350,000 high-confidence predictions selected after training has converged. This part of the data can be generated by your own model once its training converges; you can also run inference with the model parameters released by AlphaFold directly on sequences without known structures and select from the results; or, to save computing resources, you can download, sort, and select from AlphaFold's public prediction dataset.
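Selecting the high-confidence subset could look like the sketch below. It is only an illustration: the `Prediction` record, the mean-pLDDT criterion, the 80.0 threshold, and the minimum length are our assumptions, not the exact filtering rule used for AlphaFold's distillation set.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Prediction:
    """Hypothetical record for one predicted structure."""
    seq_id: str
    sequence: str
    plddt: List[float]  # per-residue confidence, 0 to 100


def mean_plddt(pred: Prediction) -> float:
    return sum(pred.plddt) / len(pred.plddt) if pred.plddt else 0.0


def select_distillation_set(
    preds: List[Prediction],
    min_mean_plddt: float = 80.0,  # hypothetical threshold
    min_length: int = 20,          # hypothetical: drop very short chains
    max_examples: Optional[int] = None,
) -> List[Prediction]:
    """Keep confident predictions, best first, optionally capped at max_examples."""
    selected = []
    for p in preds:
        if len(p.plddt) != len(p.sequence):
            raise ValueError(f"{p.seq_id}: pLDDT length does not match sequence length")
        if len(p.sequence) >= min_length and mean_plddt(p) >= min_mean_plddt:
            selected.append(p)
    # Sort by confidence so that a size cap keeps the best predictions
    selected.sort(key=mean_plddt, reverse=True)
    return selected if max_examples is None else selected[:max_examples]


preds = [
    Prediction("a", "A" * 30, [90.0] * 30),
    Prediction("b", "A" * 30, [50.0] * 30),  # low confidence: dropped
    Prediction("c", "A" * 10, [95.0] * 10),  # too short: dropped
    Prediction("d", "A" * 25, [85.0] * 25),
]
print([p.seq_id for p in select_distillation_set(preds)])  # ['a', 'd']
```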

Code structure of the data processing part:

run_alphafold.py

data_pipeline.py

The following lists the input features of the prediction part (an example):

predict-input:

'aatype': (4, 779),

'residue_index': (4, 779),

'seq_length': (4,),

'template_aatype': (4, 4, 779),

'template_all_atom_masks': (4, 4, 779, 37),

'template_all_atom_positions': (4, 4, 779, 37, 3),

'template_sum_probs': (4, 4, 1),

'is_distillation': (4,),

'seq_mask': (4, 779),

'msa_mask': (4, 508, 779),

'msa_row_mask': (4, 508),

'random_crop_to_size_seed': (4, 2),

'template_mask': (4, 4),

'template_pseudo_beta': (4, 4, 779, 3),

'template_pseudo_beta_mask': (4, 4, 779),

'atom14_atom_exists': (4, 779, 14),

'residx_atom14_to_atom37': (4, 779, 14),

'residx_atom37_to_atom14': (4, 779, 37),

'atom37_atom_exists': (4, 779, 37),

'extra_msa': (4, 5120, 779),

'extra_msa_mask': (4, 5120, 779),

'extra_msa_row_mask': (4, 5120),

'bert_mask': (4, 508, 779),

'true_msa': (4, 508, 779),

'extra_has_deletion': (4, 5120, 779),

'extra_deletion_value': (4, 5120, 779),

'msa_feat': (4, 508, 779, 49),

'target_feat': (4, 779, 22)

If you reuse AlphaFold's code to implement the training logic, some fields must be added to the input data, for example target information such as pseudo_beta. Of course, you can also adapt the representation to your own framework.
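As an example of such an extra field, pseudo_beta gives one representative coordinate per residue: the CB atom, or CA for glycine, which has no CB. The plain-Python sketch below follows that rule (AlphaFold's own code expresses the same idea with array operations in a helper named `pseudo_beta_fn`). It assumes the atom37 layout, where CA is index 1 and CB is index 3, and AlphaFold's residue order, in which glycine is index 7.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Residue order as in AlphaFold's residue_constants.restypes; glycine is index 7.
RESTYPES = "ARNDCQEGHILKMFPSTWYV"
GLY_INDEX = RESTYPES.index("G")
# atom37 layout starts N, CA, C, CB, O, ... so CA is 1 and CB is 3.
CA_INDEX, CB_INDEX = 1, 3


def pseudo_beta(
    aatype: Sequence[int],
    all_atom_positions: Sequence[Sequence[Vec3]],  # [num_res][37] xyz
    all_atom_mask: Sequence[Sequence[float]],      # [num_res][37], 1.0 if atom present
) -> Tuple[List[Vec3], List[float]]:
    """Per-residue CB coordinate (CA for glycine) and the matching atom mask."""
    coords, mask = [], []
    for aa, positions, atom_mask in zip(aatype, all_atom_positions, all_atom_mask):
        idx = CA_INDEX if aa == GLY_INDEX else CB_INDEX
        coords.append(positions[idx])
        mask.append(atom_mask[idx])
    return coords, mask


# Two residues: alanine (index 0) whose CB is missing, then glycine.
positions = [[(float(r), float(a), 0.0) for a in range(37)] for r in range(2)]
masks = [[1.0] * 37 for _ in range(2)]
masks[0][CB_INDEX] = 0.0
coords, mask = pseudo_beta([0, GLY_INDEX], positions, masks)
print(coords, mask)  # [(0.0, 3.0, 0.0), (1.0, 1.0, 0.0)] [0.0, 1.0]
```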

03

The main network

The structure diagram of the main network is given at the beginning of the article. AlphaFold's implementation of it is organized as follows:

model.py

The construction of the whole model and its key points are shown in the figure below:

There are many interpretations online of which technical points make AlphaFold work so well. The most objective one is actually the ablation study of the main technical points in the paper, which is very illustrative. If we had to give the most concise summary, we would say that what matters most is letting all kinds of information flow back and forth through the whole network. The information includes Seq + MSA + (Pair) + Template, and the ways it flows at every level include various forms of Iteration + Recycling + Multiplication + Production.
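Recycling is the clearest case of information flowing back through the network. The sketch below shows only its control flow, under simplifying assumptions: `model_fn` is a hypothetical stand-in for one full pass of the network, the `prev_*` keys mirror the names AlphaFold's code uses for the recycled MSA first row, pair representation, and atom positions, and floats stand in for the real tensors. During training the number of recycling iterations is sampled at random; in the JAX implementation the recycled values are passed through `jax.lax.stop_gradient`, so only the final pass receives gradients.

```python
import random
from typing import Callable, Dict, Optional, Tuple

Prev = Dict[str, float]
# Hypothetical: one full network pass, taking the batch and the recycled outputs.
ModelFn = Callable[[dict, Prev], Dict[str, float]]

PREV_KEYS = ("prev_msa_first_row", "prev_pair", "prev_pos")


def run_with_recycling(
    model_fn: ModelFn,
    batch: dict,
    num_recycle: int,
    is_training: bool,
    rng: Optional[random.Random] = None,
) -> Tuple[Dict[str, float], int]:
    """Run model_fn 1 + num_iter times, feeding each pass's outputs into the next."""
    # The first pass starts from zero-initialised recycled inputs
    prev: Prev = {k: 0.0 for k in PREV_KEYS}
    if is_training:
        rng = rng or random.Random()
        num_iter = rng.randint(0, num_recycle)  # inclusive on both ends
    else:
        num_iter = num_recycle  # inference always runs the full count
    out: Dict[str, float] = {}
    for _ in range(num_iter + 1):
        out = model_fn(batch, prev)
        # In JAX, stop_gradient would be applied here before recycling
        prev = {k: out[k] for k in PREV_KEYS}
    return out, num_iter + 1


def toy_model(batch: dict, prev: Prev) -> Dict[str, float]:
    # Each pass refines the recycled values by adding the batch's "signal"
    return {k: v + batch["signal"] for k, v in prev.items()}


out, passes = run_with_recycling(toy_model, {"signal": 1.0}, num_recycle=3, is_training=False)
print(passes, out["prev_pair"])  # 4 4.0
```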

When building the training code, because this is training logic, AlphaFold must be called with is_training=True and compute_loss=True. Only then are the losses of all the layers combined and returned, so that gradients can be computed and the optimizer can update the weights.
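With compute_loss=True, each output head contributes its own loss, and these are combined into one scalar before differentiation. The sketch below shows a weighted sum. The head names follow AlphaFold's output heads, but the weight values here are illustrative and should be checked against the model config you actually train with.

```python
import math
from typing import Dict

# Illustrative per-head weights; verify against your own model config.
HEAD_WEIGHTS: Dict[str, float] = {
    "structure_module": 1.0,
    "distogram": 0.3,
    "masked_msa": 2.0,
    "predicted_lddt": 0.01,
}


def total_loss(head_losses: Dict[str, float], head_weights: Dict[str, float]) -> float:
    """Weighted sum of per-head losses; unknown heads raise instead of being dropped."""
    unknown = set(head_losses) - set(head_weights)
    if unknown:
        raise KeyError(f"no weight configured for heads: {sorted(unknown)}")
    total = 0.0
    for name, loss in head_losses.items():
        if not math.isfinite(loss):
            raise ValueError(f"non-finite loss from head {name!r}")
        total += head_weights[name] * loss
    return total


losses = {"structure_module": 1.5, "distogram": 2.0, "masked_msa": 1.0}
print(round(total_loss(losses, HEAD_WEIGHTS), 6))  # 4.1
```

Raising on an unconfigured head is a deliberate choice: a silently ignored loss term is a hard bug to spot during a long training run.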

04

Structural refinement

05

Training build

Once the data is ready, there are two ways to implement your own training. If you do not modify the network structure, you can continue training and optimizing from the model parameters released by AlphaFold. If you want to modify the network, you can train from scratch by implementing the two parts of a basic training logic:

1. Build your own dataset and loader. The simplest way is to start from pipeline.DataPipeline and extend it to read the target information needed for training.

2. Similar to RunModel for inference, implement your own TrainModel. Its key logic is that the model code directly reuses the open-source inference code:

modules.AlphaFold(model_config.model)(batch, is_training=True, compute_loss=True, ensemble_representations=True, return_representations=False).
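Put together, the two steps could be organized as in the sketch below. To keep it runnable without JAX, it substitutes a toy one-parameter model and plain SGD: in a real TrainModel, `loss_and_grad` would wrap the `modules.AlphaFold(...)` call above (for example via a Haiku transform and `jax.value_and_grad`), and the update would come from an optimizer library. `make_training_example`, the `TrainModel` methods, and the toy loss are hypothetical names, not part of AlphaFold's code.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple

Params = Dict[str, float]
Example = Dict[str, float]
# Hypothetical stand-in for jax.value_and_grad over the AlphaFold forward pass:
# returns (loss, gradients with respect to every parameter).
LossAndGrad = Callable[[Params, Example], Tuple[float, Params]]


def make_training_example(features: Example, labels: Example) -> Example:
    """Step 1 (hypothetical): add ground-truth target fields to inference-style features."""
    clash = features.keys() & labels.keys()
    if clash:
        raise ValueError(f"label keys collide with feature keys: {sorted(clash)}")
    return {**features, **labels}


@dataclass
class TrainModel:
    """Step 2 (hypothetical): training counterpart of the inference RunModel."""
    loss_and_grad: LossAndGrad
    learning_rate: float = 1e-3

    def train_step(self, params: Params, example: Example) -> Tuple[Params, float]:
        loss, grads = self.loss_and_grad(params, example)
        # Plain SGD keeps the sketch dependency-free; use a real optimizer in practice
        new_params = {k: v - self.learning_rate * grads[k] for k, v in params.items()}
        return new_params, loss

    def fit(self, params: Params, examples: Iterable[Example]) -> Tuple[Params, List[float]]:
        losses = []
        for example in examples:
            params, loss = self.train_step(params, example)
            losses.append(loss)
        return params, losses


def toy_loss_and_grad(params: Params, ex: Example) -> Tuple[float, Params]:
    # Toy model y = w * x with squared error and its analytic gradient
    err = params["w"] * ex["x"] - ex["y"]
    return err * err, {"w": 2.0 * err * ex["x"]}


example = make_training_example({"x": 1.0}, {"y": 3.0})
trainer = TrainModel(toy_loss_and_grad, learning_rate=0.1)
params, losses = trainer.fit({"w": 0.0}, [example] * 50)
print(f"w = {params['w']:.4f}, first loss = {losses[0]:.1f}, last loss = {losses[-1]:.2e}")
```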

The optimizer is implemented with [email protected].

With the steps above, the simplest training version can be built on top of the existing inference code.

MindSpore official resources

Official QQ group: 486831414

Official website: https://www.mindspore.cn/

Gitee: https://gitee.com/mindspore/mindspore

GitHub: https://github.com/mindspore-ai/mindspore

Forum: https://bbs.huaweicloud.com/forum/forum-1076-1.html

边栏推荐

- 1. E-commerce tool cefsharp autojs MySQL Alibaba cloud react C RPA automated script, open source log

- Collector in ES (percentile / base)

- Map interface and method

- Win 2008 R2 crashed at the final installation stage

- 技术干货|昇思MindSpore Lite1.5 特性发布,带来全新端侧AI体验

- Technology dry goods | luxe model for the migration of mindspore NLP model -- reading comprehension task

- Custom generic structure

- Hnsw introduction and some reference articles in lucene9

- 圖像識別與檢測--筆記

- 《指環王:力量之戒》新劇照 力量之戒鑄造者亮相

猜你喜欢

Why is data service the direction of the next generation data center?

HCIA notes

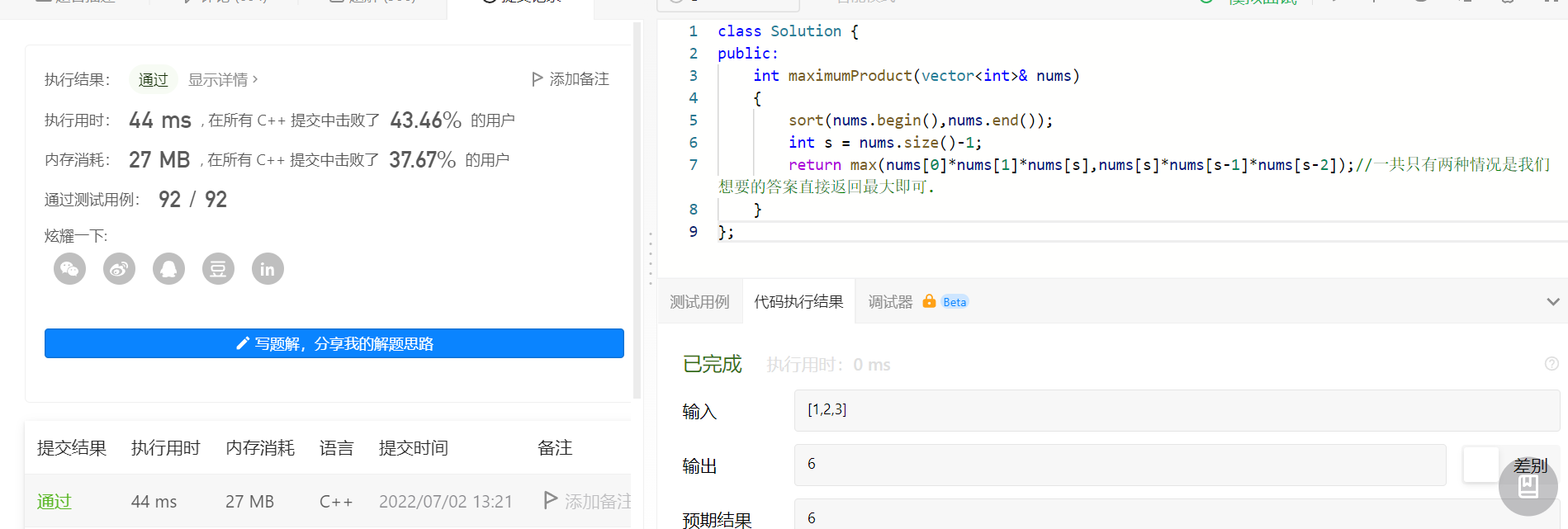

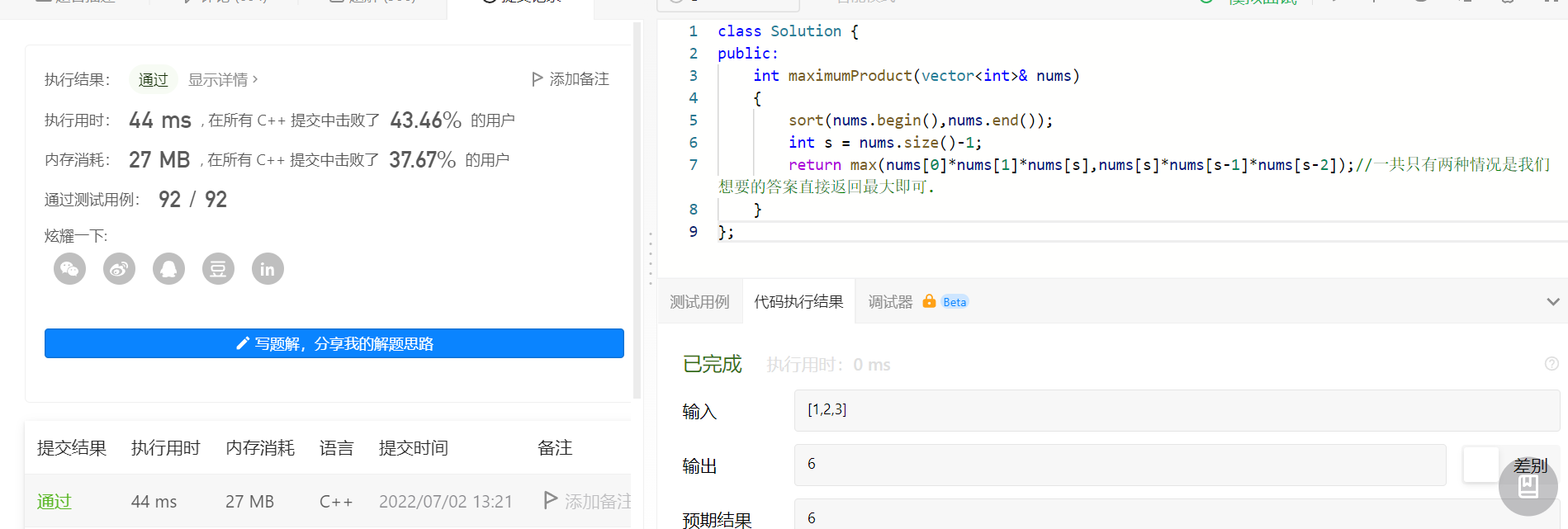

7.2刷题两个

Lucene hnsw merge optimization

7.2 brush two questions

Understanding of class

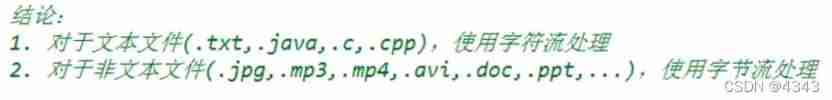

FileInputStream and fileoutputstream

技术干货|昇思MindSpore创新模型EPP-MVSNet-高精高效的三维重建

技术干货|利用昇思MindSpore复现ICCV2021 Best Paper Swin Transformer

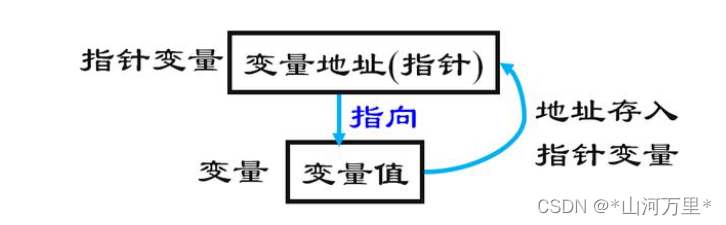

The concept of C language pointer

随机推荐

Industrial resilience

VMware virtual machine installation

The embodiment of generics in inheritance and wildcards

Technical dry goods Shengsi mindspire elementary course online: from basic concepts to practical operation, 1 hour to start!

Application of pigeon nest principle in Lucene minshouldmatchsumscorer

Le Seigneur des anneaux: l'anneau du pouvoir

Image recognition and detection -- Notes

HCIA notes

sharepoint 2007 versions

An overview of IfM Engage

Grpc message sending of vertx

Dora (discover offer request recognition) process of obtaining IP address

Summary of Arduino serial functions related to print read

2021-07-18

不出网上线CS的各种姿势

項目經驗分享:實現一個昇思MindSpore 圖層 IR 融合優化 pass

昇思MindSpore再升级,深度科学计算的极致创新

Technical dry goods Shengsi mindspire innovation model EPP mvsnet high-precision and efficient 3D reconstruction

Logging log configuration of vertx

[coppeliasim4.3] C calls UR5 in the remoteapi control scenario