
Technical deep dive | Migrating NLP models to MindSpore: the LUKE model for reading comprehension

2022-07-03 07:34:00 【MindSpore】

The LUKE model is trained with a new pre-training task built on BERT's masked language model (MLM) objective. The task is to predict randomly masked words and entities in a large entity-annotated corpus retrieved from Wikipedia. The authors also propose an entity-aware self-attention mechanism, an extension of the transformer's self-attention that takes the type of each token (word or entity) into account when computing attention scores. The main contributions are:

1. The authors propose LUKE (Language Understanding with Knowledge-based Embeddings), a new contextualized representation for entity-related tasks. LUKE is trained to predict randomly masked words and entities, using a large entity-annotated corpus obtained from Wikipedia.

2. The authors propose an entity-aware self-attention mechanism, an effective extension of the transformer's original attention mechanism. It takes the type of each token (word or entity) into account when computing attention scores.

3. LUKE uses RoBERTa as its base pre-trained model and is pre-trained by optimizing the MLM objective and the proposed task simultaneously. It achieved state-of-the-art results on five popular datasets.

LUKE official source code [1]:

https://github.com/studio-ousia/luke

LUKE paper (EMNLP 2020) [2]:

https://aclanthology.org/2020.emnlp-main.523.pdf

Preface

Environment used in this article:

System: Ubuntu 18

GPU: 3090

MindSpore version: 1.3

Dataset: SQuAD 1.1 (reading comprehension task)

Definition of the reading comprehension task:

Machine reading comprehension is a newer area of question answering (QA) technology. The user supplies unstructured text and a question, and the machine reads the text and finds the answer to the question within it.
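To make the task concrete, here is a minimal sketch of one SQuAD-style record and of how an answer is recovered as a character span of the context. The field names follow the public SQuAD 1.1 JSON layout; the sample text and the helper name extract_answer are made up for illustration and do not come from the dataset or the LUKE source.

```python
# A hand-written record in the SQuAD 1.1 layout (illustrative text, not from the dataset).
context = "The LUKE paper was published at EMNLP 2020."
record = {
    "context": context,
    "qas": [
        {
            "id": "example-0",
            "question": "Where was the LUKE paper published?",
            # answer_start is a character offset into the context
            "answers": [{"text": "EMNLP 2020", "answer_start": context.index("EMNLP 2020")}],
        }
    ],
}


def extract_answer(context, answer):
    """Slice the answer text out of the context using its character offset."""
    start = answer["answer_start"]
    return context[start:start + len(answer["text"])]


answer = extract_answer(record["context"], record["qas"][0]["answers"][0])
print(answer)
```

The model never generates free text for this task: it only has to predict where the answer span starts and ends inside the given context.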

01

Data processing

First, following the source code, process the data into the MindRecord file that the model requires.

Use the source code's roberta_tokenizer to convert the text into tokens and obtain word_ids, word_segment_ids, and the other fields. The word_ids field is padded with 1, the entity_position_ids field (entity position information) is padded with -1, and all other fields are padded with 0. Because the source code pads the word sequence to length 256, each field is padded the same way here (the entity fields are padded to 24). The padding code is as follows:

import json
import os

import numpy as np
from mindspore.mindrecord import FileWriter

SQUAD_MINDRECORD_FILE = "./data/dev_features.mindrecord"

# Truncate to length i, or right-pad with 0 / 1 / -1 up to length i.
pad = lambda a, i: a[0:i] if len(a) > i else a + [0] * (i - len(a))
pad1 = lambda a, i: a[0:i] if len(a) > i else a + [1] * (i - len(a))
pad_entity = lambda a, i: a[0:i] if len(a) > i else np.append(a, [-1] * (i - len(a)))

# list_dict is the list of tokenizer outputs; each item contains word_ids, word_segment_ids, and so on.
# padding
for slist in list_dict:
    slist["entity_attention_mask"] = pad(slist["entity_attention_mask"], 24)
    # pad entity_ids itself, not the attention mask
    slist["entity_ids"] = pad(slist["entity_ids"], 24)
    slist["entity_segment_ids"] = pad(slist["entity_segment_ids"], 24)
    slist["word_ids"] = pad1(slist["word_ids"], 256)
    slist["word_segment_ids"] = pad(slist["word_segment_ids"], 256)
    slist["word_attention_mask"] = pad(slist["word_attention_mask"], 256)

    # entity_position padding: a 24 x 24 grid filled with -1 where unused
    entity_size = len(slist["entity_position_ids"])
    slist["entity_position_ids"] = np.array(slist["entity_position_ids"])
    temp = [[-1] * 24 for i in range(24)]
    for i in range(24):
        # note: as written, only the first entity_size - 1 rows are copied
        if i < entity_size - 1:
            temp[i] = pad_entity(slist["entity_position_ids"][i], 24)
    slist["entity_position_ids"] = temp

# remove any previous output, including its index file
if os.path.exists(SQUAD_MINDRECORD_FILE):
    os.remove(SQUAD_MINDRECORD_FILE)
    os.remove(SQUAD_MINDRECORD_FILE + ".db")

writer = FileWriter(file_name=SQUAD_MINDRECORD_FILE, shard_num=1)

data_schema = {
    "unique_id": {"type": "int32", "shape": [-1]},
    "word_ids": {"type": "int32", "shape": [-1]},
    "word_segment_ids": {"type": "int32", "shape": [-1]},
    "word_attention_mask": {"type": "int32", "shape": [-1]},
    "entity_ids": {"type": "int32", "shape": [-1]},
    "entity_position_ids": {"type": "int32", "shape": [24, 24]},
    "entity_segment_ids": {"type": "int32", "shape": [-1]},
    "entity_attention_mask": {"type": "int32", "shape": [-1]},
    # "start_positions": {"type": "int32", "shape": [-1]},
    # "end_positions": {"type": "int32", "shape": [-1]}
}

writer.add_schema(data_schema, "it is a preprocessed squad dataset")

data = []
i = 0
for item in list_dict:
    i += 1
    sample = {
        "unique_id": np.array(item["unique_id"], dtype=np.int32),
        "word_ids": np.array(item["word_ids"], dtype=np.int32),
        "word_segment_ids": np.array(item["word_segment_ids"], dtype=np.int32),
        "word_attention_mask": np.array(item["word_attention_mask"], dtype=np.int32),
        "entity_ids": np.array(item["entity_ids"], dtype=np.int32),
        "entity_position_ids": np.array(item["entity_position_ids"], dtype=np.int32),
        "entity_segment_ids": np.array(item["entity_segment_ids"], dtype=np.int32),
        "entity_attention_mask": np.array(item["entity_attention_mask"], dtype=np.int32),
        # "start_positions": np.array(item["start_positions"], dtype=np.int32),
        # "end_positions": np.array(item["end_positions"], dtype=np.int32),
    }
    data.append(sample)
    # print(sample)
    # flush to disk every 10 samples
    if i % 10 == 0:
        writer.write_raw_data(data)
        data = []

# write any remaining samples
if data:
    writer.write_raw_data(data)

writer.commit()

[Figure: example of the processed data records]
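The padding rules described in this section (1 for word_ids, -1 for entity positions, 0 for everything else) can be summarized in one small helper. This is a sketch in plain Python, not the code from the LUKE source; the function name pad_to and the sample token ids are assumptions chosen for illustration.

```python
def pad_to(values, length, pad_value=0):
    """Truncate or right-pad a list so that it has exactly `length` items."""
    values = list(values)[:length]
    return values + [pad_value] * (length - len(values))


WORD_LEN, ENTITY_LEN = 256, 24  # lengths used in this article

# Illustrative inputs (made-up token ids, not real tokenizer output).
word_ids = pad_to([0, 713, 16, 2], WORD_LEN, pad_value=1)      # word_ids are padded with 1
word_attention_mask = pad_to([1, 1, 1, 1], WORD_LEN)           # masks are padded with 0
mention_positions = pad_to([5, 6], ENTITY_LEN, pad_value=-1)   # positions are padded with -1

print(len(word_ids), word_ids[:6])
print(mention_positions[:4])
```

Keeping the pad value as a parameter avoids maintaining three near-identical lambdas, at the cost of always returning a Python list rather than a NumPy array.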

02

LUKE model migration

The rewrite mainly follows the PyTorch-to-MindSpore API mapping document on the official website.

Link: https://www.mindspore.cn/docs/migration_guide/zh-CN/r1.5/api_mapping/pytorch_api_mapping.html

2.1 Entity embeddings

Take the EntityEmbeddings part as an example. The PyTorch source code before rewriting:

class EntityEmbeddings(nn.Module):
    def __init__(self, config: LukeConfig):
        super(EntityEmbeddings, self).__init__()
        self.config = config

        self.entity_embeddings = nn.Embedding(config.entity_vocab_size, config.entity_emb_size, padding_idx=0)
        if config.entity_emb_size != config.hidden_size:
            self.entity_embedding_dense = nn.Linear(config.entity_emb_size, config.hidden_size, bias=False)
        self.position_embeddings = nn.Embedding(config.max_position_embeddings, config.hidden_size)
        self.token_type_embeddings = nn.Embedding(config.type_vocab_size, config.hidden_size)

        self.LayerNorm = nn.LayerNorm(config.hidden_size, eps=config.layer_norm_eps)
        self.dropout = nn.Dropout(config.hidden_dropout_prob)

    def forward(
        self, entity_ids: torch.LongTensor, position_ids: torch.LongTensor, token_type_ids: torch.LongTensor = None
    ):
        if token_type_ids is None:
            token_type_ids = torch.zeros_like(entity_ids)

        entity_embeddings = self.entity_embeddings(entity_ids)
        if self.config.entity_emb_size != self.config.hidden_size:
            entity_embeddings = self.entity_embedding_dense(entity_embeddings)

        # average the position embeddings over each mention's positions, ignoring -1 padding
        position_embeddings = self.position_embeddings(position_ids.clamp(min=0))
        position_embedding_mask = (position_ids != -1).type_as(position_embeddings).unsqueeze(-1)
        position_embeddings = position_embeddings * position_embedding_mask
        position_embeddings = torch.sum(position_embeddings, dim=-2)
        position_embeddings = position_embeddings / position_embedding_mask.sum(dim=-2).clamp(min=1e-7)

        token_type_embeddings = self.token_type_embeddings(token_type_ids)

        embeddings = entity_embeddings + position_embeddings + token_type_embeddings
        embeddings = self.LayerNorm(embeddings)
        embeddings = self.dropout(embeddings)

        return embeddings

After rewriting:

import mindspore.nn as nn
import mindspore.ops as ops
from mindspore import dtype as mstype


class EntityEmbeddings(nn.Cell):
    """entity embeddings for luke model"""

    def __init__(self, config):
        super(EntityEmbeddings, self).__init__()
        self.entity_emb_size = config.entity_emb_size
        self.hidden_size = config.hidden_size
        self.entity_embeddings = nn.Embedding(config.entity_vocab_size, config.entity_emb_size, padding_idx=0)
        if config.entity_emb_size != config.hidden_size:
            self.entity_embedding_dense = nn.Dense(config.entity_emb_size, config.hidden_size, has_bias=False)
        self.position_embeddings = nn.Embedding(config.max_position_embeddings, config.hidden_size)
        self.token_type_embeddings = nn.Embedding(config.type_vocab_size, config.hidden_size)
        self.layer_norm = nn.LayerNorm([config.hidden_size], epsilon=config.layer_norm_eps)
        # MindSpore 1.x Dropout takes keep_prob, not the drop probability
        self.dropout = nn.Dropout(1 - config.hidden_dropout_prob)
        self.unsqueeze = ops.ExpandDims()

    def construct(self, entity_ids, position_ids, token_type_ids=None):
        """EntityEmbeddings for luke"""
        if token_type_ids is None:
            token_type_ids = ops.zeros_like(entity_ids)

        entity_embeddings = self.entity_embeddings(entity_ids)
        if self.entity_emb_size != self.hidden_size:
            entity_embeddings = self.entity_embedding_dense(entity_embeddings)

        # average the position embeddings over each mention's positions, ignoring -1 padding
        entity_position_ids_int = clamp(position_ids)
        position_embeddings = self.position_embeddings(entity_position_ids_int)
        position_ids = position_ids.astype(mstype.int32)
        position_embedding_mask = 1.0 * self.unsqueeze((position_ids != -1), -1)
        position_embeddings = position_embeddings * position_embedding_mask
        position_embeddings = ops.reduce_sum(position_embeddings, -2)
        position_embeddings = position_embeddings / clamp(ops.reduce_sum(position_embedding_mask, -2), minimum=1e-7)

        token_type_embeddings = self.token_type_embeddings(token_type_ids)

        embeddings = entity_embeddings + position_embeddings
        embeddings += token_type_embeddings
        embeddings = self.layer_norm(embeddings)
        embeddings = self.dropout(embeddings)

        return embeddings


def clamp(x, minimum=0):
    """Stand-in for torch.clamp(min=...): values not above `minimum` become `minimum`.

    Values above `minimum` come back as x + minimum, so the result is exact for
    minimum=0 and off by 1e-7 for minimum=1e-7.
    """
    mask = x > minimum
    x = x * mask + minimum
    return x

The model parameters in the source code are:

LukeConfig {
  "architectures": null,
  "attention_probs_dropout_prob": 0.1,
  "bert_model_name": "roberta-large",
  "bos_token_id": 0,
  "do_sample": false,
  "entity_emb_size": 256,
  "entity_vocab_size": 500000,
  "eos_token_ids": 0,
  "finetuning_task": null,
  "hidden_act": "gelu",
  "hidden_dropout_prob": 0.1,
  "hidden_size": 1024,
  "id2label": {
    "0": "LABEL_0",
    "1": "LABEL_1"
  },
  "initializer_range": 0.02,
  "intermediate_size": 4096,
  "is_decoder": false,
  "label2id": {
    "LABEL_0": 0,
    "LABEL_1": 1
  },
  "layer_norm_eps": 1e-05,
  "length_penalty": 1.0,
  "max_length": 20,
  "max_position_embeddings": 514,
  "model_type": "bert",
  "num_attention_heads": 16,
  "num_beams": 1,
  "num_hidden_layers": 24,
  "num_labels": 2,
  "num_return_sequences": 1,
  "output_attentions": false,
  "output_hidden_states": false,
  "output_past": true,
  "pad_token_id": 0,
  "pruned_heads": {},
  "repetition_penalty": 1.0,
  "temperature": 1.0,
  "top_k": 50,
  "top_p": 1.0,
  "torchscript": false,
  "type_vocab_size": 1,
  "use_bfloat16": false,
  "vocab_size": 50265
}

2.2 Entity-aware self-attention mechanism

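The input is a sequence of vectors $x_1, x_2, \ldots, x_k$ with $x_i \in \mathbb{R}^D$, and the output is a sequence $y_1, y_2, \ldots, y_k$ with $y_i \in \mathbb{R}^L$. Each output vector is a weighted sum of the transformed input vectors. In this model, every input and output vector is associated with a token, which can be a word or an entity. For a sequence of $m$ words and $n$ entities, let $k = m + n$. The $i$-th output vector is computed as follows:

$$y_i = \sum_{j=1}^{k} \alpha_{ij} V x_j$$

$$e_{ij} = \frac{(K x_j)^{\top} Q x_i}{\sqrt{L}}$$

$$\alpha_{ij} = \mathrm{softmax}(e_{ij})$$

where $Q \in \mathbb{R}^{L \times D}$, $K \in \mathbb{R}^{L \times D}$, and $V \in \mathbb{R}^{L \times D}$ are the query, key, and value matrices. $L$ is the dimension of each attention head and $D$ is the hidden dimension. The experiments use $L = 64$ and $D = 1024$, which matches the configuration above: 16 heads of size 64 give a hidden size of 1024.

Because LUKE handles two types of tokens, the paper assumes that information about the target token type is useful when computing the attention score $e_{ij}$. Following this idea, the authors introduce an entity-aware query mechanism that uses a different query matrix for each possible pair of token types of $x_i$ and $x_j$. The attention score $e_{ij}$ is then computed as follows:

$$e_{ij} = \begin{cases}
(K x_j)^{\top} Q x_i, & \text{if both } x_i \text{ and } x_j \text{ are words} \\
(K x_j)^{\top} Q_{w2e} x_i, & \text{if } x_i \text{ is a word and } x_j \text{ is an entity} \\
(K x_j)^{\top} Q_{e2w} x_i, & \text{if } x_i \text{ is an entity and } x_j \text{ is a word} \\
(K x_j)^{\top} Q_{e2e} x_i, & \text{if both } x_i \text{ and } x_j \text{ are entities}
\end{cases}$$

where $Q_{w2e}, Q_{e2w}, Q_{e2e} \in \mathbb{R}^{L \times D}$ are additional query matrices.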
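The case analysis above can be checked on a toy example. The following is a minimal sketch in plain Python, assuming tiny 2-dimensional vectors and hand-picked matrices; the function and variable names (entity_aware_scores, queries, and so on) are illustrative and do not appear in the LUKE source. It keeps the scaling by the square root of L from the standard attention formula.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def scaled(matrix, factor):
    return [[factor * a for a in row] for row in matrix]


def entity_aware_scores(xs, types, key, queries, head_dim):
    """Compute e_ij, choosing the query matrix from the (type_i, type_j) pair.

    xs: input vectors; types: 'w' (word) or 'e' (entity) for each vector;
    queries: dict mapping (type_i, type_j) to a query matrix.
    """
    scores = []
    for x_i, t_i in zip(xs, types):
        row = []
        for x_j, t_j in zip(xs, types):
            q = matvec(queries[(t_i, t_j)], x_i)   # query depends on both token types
            k = matvec(key, x_j)                   # the key matrix is shared
            row.append(dot(k, q) / math.sqrt(head_dim))
        scores.append(row)
    return scores


def softmax(row):
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


identity = [[1.0, 0.0], [0.0, 1.0]]
queries = {
    ("w", "w"): identity,             # Q
    ("w", "e"): scaled(identity, 2),  # Q_w2e
    ("e", "w"): scaled(identity, 3),  # Q_e2w
    ("e", "e"): scaled(identity, 4),  # Q_e2e
}
xs = [[1.0, 1.0], [1.0, 2.0]]  # one word followed by one entity
scores = entity_aware_scores(xs, ["w", "e"], identity, queries, head_dim=2)
probs = [softmax(row) for row in scores]
```

With these toy matrices the four entries of scores differ only because a different query matrix is selected for each word/entity pair, which is the whole point of the mechanism.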

The MindSpore implementation is as follows:

import numpy as np
import mindspore.nn as nn
import mindspore.ops as ops


class EntityAwareSelfAttention(nn.Cell):
    """EntityAwareSelfAttention"""

    def __init__(self, config):
        super(EntityAwareSelfAttention, self).__init__()
        self.num_attention_heads = config.num_attention_heads
        self.attention_head_size = int(config.hidden_size / config.num_attention_heads)
        self.all_head_size = self.num_attention_heads * self.attention_head_size

        self.query = nn.Dense(config.hidden_size, self.all_head_size)
        self.key = nn.Dense(config.hidden_size, self.all_head_size)
        self.value = nn.Dense(config.hidden_size, self.all_head_size)

        # extra query projections for word-to-entity, entity-to-word and entity-to-entity pairs
        self.w2e_query = nn.Dense(config.hidden_size, self.all_head_size)
        self.e2w_query = nn.Dense(config.hidden_size, self.all_head_size)
        self.e2e_query = nn.Dense(config.hidden_size, self.all_head_size)

        # MindSpore 1.x Dropout takes keep_prob, hence 1 - attention_probs_dropout_prob
        self.dropout = nn.Dropout(1 - config.attention_probs_dropout_prob)
        self.concat = ops.Concat(1)
        self.concat2 = ops.Concat(2)
        self.concat3 = ops.Concat(3)
        self.shape = ops.Shape()
        self.reshape = ops.Reshape()
        self.transpose = ops.Transpose()
        self.softmax = ops.Softmax(axis=-1)

    def transpose_for_scores(self, x):
        # (batch, seq, hidden) -> (batch, heads, seq, head_size)
        new_x_shape = ops.shape(x)[:-1] + (self.num_attention_heads, self.attention_head_size)
        out = self.reshape(x, new_x_shape)
        out = self.transpose(out, (0, 2, 1, 3))
        return out

    def construct(self, word_hidden_states, entity_hidden_states, attention_mask):
        """EntityAwareSelfAttention construct"""
        word_size = self.shape(word_hidden_states)[1]

        w2w_query_layer = self.transpose_for_scores(self.query(word_hidden_states))
        w2e_query_layer = self.transpose_for_scores(self.w2e_query(word_hidden_states))
        e2w_query_layer = self.transpose_for_scores(self.e2w_query(entity_hidden_states))
        e2e_query_layer = self.transpose_for_scores(self.e2e_query(entity_hidden_states))

        # keys are shared; split them into the word part and the entity part
        key_layer = self.transpose_for_scores(self.key(self.concat([word_hidden_states, entity_hidden_states])))
        w2w_key_layer = key_layer[:, :, :word_size, :]
        e2w_key_layer = key_layer[:, :, :word_size, :]
        w2e_key_layer = key_layer[:, :, word_size:, :]
        e2e_key_layer = key_layer[:, :, word_size:, :]

        w2w_attention_scores = ops.matmul(w2w_query_layer, ops.transpose(w2w_key_layer, (0, 1, 3, 2)))
        w2e_attention_scores = ops.matmul(w2e_query_layer, ops.transpose(w2e_key_layer, (0, 1, 3, 2)))
        e2w_attention_scores = ops.matmul(e2w_query_layer, ops.transpose(e2w_key_layer, (0, 1, 3, 2)))
        e2e_attention_scores = ops.matmul(e2e_query_layer, ops.transpose(e2e_key_layer, (0, 1, 3, 2)))

        # reassemble the four blocks into one (word + entity) x (word + entity) score matrix
        word_attention_scores = self.concat3([w2w_attention_scores, w2e_attention_scores])
        entity_attention_scores = self.concat3([e2w_attention_scores, e2e_attention_scores])
        attention_scores = self.concat2([word_attention_scores, entity_attention_scores])

        attention_scores = attention_scores / np.sqrt(self.attention_head_size)
        attention_scores = attention_scores + attention_mask

        attention_probs = self.softmax(attention_scores)
        attention_probs = self.dropout(attention_probs)

        value_layer = self.transpose_for_scores(
            self.value(self.concat([word_hidden_states, entity_hidden_states]))
        )
        context_layer = ops.matmul(attention_probs, value_layer)

        context_layer = ops.transpose(context_layer, (0, 2, 1, 3))
        new_context_layer_shape = ops.shape(context_layer)[:-2] + (self.all_head_size,)
        context_layer = self.reshape(context_layer, new_context_layer_shape)

        return context_layer[:, :word_size, :], context_layer[:, word_size:, :]

Note that dropout in MindSpore differs from dropout in PyTorch. In the MindSpore version used here, nn.Dropout takes the probability of keeping an element (keep_prob), whereas PyTorch's nn.Dropout takes the probability of dropping one.

For example, if the dropout argument in PyTorch is 0.1, the dropout argument in MindSpore should be 0.9.
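The conversion is a one-liner, but wrapping it in a helper makes the intent explicit at every call site. This is a minimal sketch; the helper name keep_prob_from_torch_p is an assumption for illustration and is not part of MindSpore or PyTorch.

```python
def keep_prob_from_torch_p(p):
    """Convert a PyTorch drop probability into a keep probability."""
    if not 0.0 <= p < 1.0:
        raise ValueError("drop probability must be in [0, 1), got {}".format(p))
    return 1.0 - p


# Intended use in a MindSpore 1.x cell (shown as a comment so the sketch runs without MindSpore):
#   self.dropout = nn.Dropout(keep_prob_from_torch_p(config.attention_probs_dropout_prob))
keep_prob = keep_prob_from_torch_p(0.1)
print(keep_prob)
```

Passing the PyTorch value through unchanged would silently drop 90% of activations during training, so this is worth checking for every dropout layer in a migrated model.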

03

Weight migration: PyTorch -> MindSpore

Because the official release already provides fine-tuned weights, we try converting those weights directly and using them for prediction.

First we need the names and shapes of the model weights, because the PyTorch and MindSpore models must correspond one to one.

Then output the same information for the MindSpore model.

Finally, write the weight conversion function:

## torch2ms
import os
import collections

from mindspore import log as logger
from mindspore.common.tensor import Tensor
from mindspore.common.initializer import initializer
from mindspore import save_checkpoint
from mindspore import Parameter

def build_params_map(layer_num=24):
    """
    build params map from torch's LUKE to mindspore's LUKE
    map => key: value, i.e. torch_name: ms_name
    key: torch weight name, value: mindspore weight name
    :return:
    """
    weight_map = collections.OrderedDict({
        'embeddings.word_embeddings.weight': "luke.embeddings.word_embeddings.embedding_table",
        'embeddings.position_embeddings.weight': "luke.embeddings.position_embeddings.embedding_table",
        'embeddings.token_type_embeddings.weight': "luke.embeddings.token_type_embeddings.embedding_table",
        'embeddings.LayerNorm.weight': 'luke.embeddings.layer_norm.gamma',
        'embeddings.LayerNorm.bias': 'luke.embeddings.layer_norm.beta',
        'entity_embeddings.entity_embeddings.weight': 'luke.entity_embeddings.entity_embeddings.embedding_table',
        'entity_embeddings.entity_embedding_dense.weight': 'luke.entity_embeddings.entity_embedding_dense.weight',
        'entity_embeddings.position_embeddings.weight': 'luke.entity_embeddings.position_embeddings.embedding_table',
        'entity_embeddings.token_type_embeddings.weight': 'luke.entity_embeddings.token_type_embeddings.embedding_table',
        'entity_embeddings.LayerNorm.weight': 'luke.entity_embeddings.layer_norm.gamma',
        'entity_embeddings.LayerNorm.bias': 'luke.entity_embeddings.layer_norm.beta',
        'qa_outputs.weight': 'qa_outputs.weight',
        'qa_outputs.bias': 'qa_outputs.bias',
        # 'pooler.dense.weight': 'pooler.weight',
        # 'pooler.dense.bias': 'pooler.bias'
    })

    # add attention layers
    for i in range(layer_num):
        src = f'encoder.layer.{i}'
        dst = f'luke.encoder.layer.{i}'

        # self-attention projections, including the three entity-aware query matrices
        for proj in ('query', 'key', 'value', 'w2e_query', 'e2w_query', 'e2e_query'):
            for suffix in ('weight', 'bias'):
                weight_map[f'{src}.attention.self.{proj}.{suffix}'] = \
                    f'{dst}.attention.self_attention.{proj}.{suffix}'

        weight_map[f'{src}.attention.output.dense.weight'] = f'{dst}.attention.output.dense.weight'
        weight_map[f'{src}.attention.output.dense.bias'] = f'{dst}.attention.output.dense.bias'
        # LayerNorm weight/bias are called gamma/beta in MindSpore
        weight_map[f'{src}.attention.output.LayerNorm.weight'] = f'{dst}.attention.output.layernorm.gamma'
        weight_map[f'{src}.attention.output.LayerNorm.bias'] = f'{dst}.attention.output.layernorm.beta'

        weight_map[f'{src}.intermediate.dense.weight'] = f'{dst}.intermediate.weight'
        weight_map[f'{src}.intermediate.dense.bias'] = f'{dst}.intermediate.bias'

        weight_map[f'{src}.output.dense.weight'] = f'{dst}.output.dense.weight'
        weight_map[f'{src}.output.dense.bias'] = f'{dst}.output.dense.bias'
        weight_map[f'{src}.output.LayerNorm.weight'] = f'{dst}.output.layernorm.gamma'
        weight_map[f'{src}.output.LayerNorm.bias'] = f'{dst}.output.layernorm.beta'

    return weight_map

def _update_param(param, new_param):
    """Updates param's data from new_param's data."""
    if isinstance(param.data, Tensor) and isinstance(new_param.data, Tensor):
        if param.data.dtype != new_param.data.dtype:
            logger.error("Failed to combine the net and the parameters for param %s.", param.name)
            msg = ("Net parameters {} type({}) different from parameter_dict's({})"
                   .format(param.name, param.data.dtype, new_param.data.dtype))
            raise RuntimeError(msg)

        if param.data.shape != new_param.data.shape:
            if not _special_process_par(param, new_param):
                logger.error("Failed to combine the net and the parameters for param %s.", param.name)
                msg = ("Net parameters {} shape({}) different from parameter_dict's({})"
                       .format(param.name, param.data.shape, new_param.data.shape))
                raise RuntimeError(msg)
            return

        param.set_data(new_param.data)
        return

    if isinstance(param.data, Tensor) and not isinstance(new_param.data, Tensor):
        if param.data.shape != (1,) and param.data.shape != ():
            logger.error("Failed to combine the net and the parameters for param %s.", param.name)
            msg = ("Net parameters {} shape({}) is not (1,), inconsistent with parameter_dict's(scalar)."
                   .format(param.name, param.data.shape))
            raise RuntimeError(msg)
        param.set_data(initializer(new_param.data, param.data.shape, param.data.dtype))

    elif isinstance(new_param.data, Tensor) and not isinstance(param.data, Tensor):
        logger.error("Failed to combine the net and the parameters for param %s.", param.name)
        msg = ("Net parameters {} type({}) different from parameter_dict's({})"
               .format(param.name, type(param.data), type(new_param.data)))
        raise RuntimeError(msg)

    else:
        param.set_data(type(param.data)(new_param.data))

def _special_process_par(par, new_par):
    """
    Processes the special condition.

    Like (12,2048,1,1)->(12,2048), this case is caused by GE 4 dimensions tensor.
    """
    par_shape_len = len(par.data.shape)
    new_par_shape_len = len(new_par.data.shape)
    delta_len = new_par_shape_len - par_shape_len
    delta_i = 0
    # the extra trailing dimensions must all be 1
    for delta_i in range(delta_len):
        if new_par.data.shape[par_shape_len + delta_i] != 1:
            break
    if delta_i == delta_len - 1:
        new_val = new_par.data.asnumpy()
        new_val = new_val.reshape(par.data.shape)
        par.set_data(Tensor(new_val, par.data.dtype))
        return True
    return False

def extract_and_convert(torch_model, ms_model):
    """extract weights and convert to mindspore

    torch_model: the PyTorch state dict (weight name -> tensor)
    ms_model: a dict of MindSpore parameters keyed by parameter name
    """
    print('=' * 20 + 'extract weights' + '=' * 20)
    state_dict = []
    weight_map = build_params_map(layer_num=24)
    for weight_name, weight_value in torch_model.items():
        # skip weights that have no MindSpore counterpart in the map
        if weight_name not in weight_map.keys():
            continue
        state_dict.append({'name': weight_map[weight_name], 'data': Tensor(weight_value.numpy())})
        value = Parameter(Tensor(weight_value.numpy()), name=weight_map[weight_name])
        key = ms_model[weight_map[weight_name]]
        _update_param(key, value)
        print(weight_name, '->', weight_map[weight_name], weight_value.shape)
    # save the converted weights, given as a list of {'name', 'data'} dicts
    save_checkpoint(state_dict, "./luke-large-qa.ckpt")
    print('=' * 20 + 'extract weights finished' + '=' * 20)


extract_and_convert(torch_model, ms_model)

The names here must correspond one to one. If the model is changed later, check again that this conversion function still matches it.
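One way to check the one-to-one correspondence before converting anything is to compare plain sets of names. The sketch below works on name lists only and needs neither PyTorch nor MindSpore; the function name check_name_coverage and the toy names are assumptions for illustration. In practice the two name lists would come from the PyTorch state dict and from the MindSpore network's parameters.

```python
def check_name_coverage(weight_map, torch_names, ms_names):
    """Report names that the mapping fails to cover on either side."""
    torch_names, ms_names = set(torch_names), set(ms_names)
    mapped_torch, mapped_ms = set(weight_map), set(weight_map.values())
    return {
        "torch_unmapped": sorted(torch_names - mapped_torch),      # would be skipped silently
        "ms_uncovered": sorted(ms_names - mapped_ms),              # would keep their initial values
        "map_missing_in_torch": sorted(mapped_torch - torch_names),
        "map_missing_in_ms": sorted(mapped_ms - ms_names),
    }


# Toy example with made-up names.
toy_map = {
    "qa_outputs.weight": "qa_outputs.weight",
    "embeddings.LayerNorm.weight": "luke.embeddings.layer_norm.gamma",
}
report = check_name_coverage(
    toy_map,
    torch_names=["qa_outputs.weight", "embeddings.LayerNorm.weight", "pooler.dense.weight"],
    ms_names=["qa_outputs.weight", "luke.embeddings.layer_norm.gamma", "qa_outputs.bias"],
)
print(report)
```

An empty report means every weight on both sides is accounted for. A non-empty "ms_uncovered" list is the case to watch, since those parameters would keep their random initialization without raising an error.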

04

Evaluation test

We post-process the output probabilities to obtain the final predicted answer.
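As a rough illustration of this post-processing step, the sketch below picks the answer span whose start and end scores have the highest sum, subject to the end not preceding the start. It is a simplified stand-in, not the post-processing used in the LUKE source; the function name best_span and the max_answer_length default of 30 are assumptions.

```python
def best_span(start_logits, end_logits, max_answer_length=30):
    """Return (start, end, score) of the highest-scoring valid span."""
    best = (None, None, float("-inf"))
    for start, start_score in enumerate(start_logits):
        # the end index may not precede the start, and the span length is capped
        last = min(start + max_answer_length, len(end_logits))
        for end in range(start, last):
            score = start_score + end_logits[end]
            if score > best[2]:
                best = (start, end, score)
    return best


# Made-up scores for a three-token context.
start, end, score = best_span([0.1, 2.0, 0.3], [0.5, 0.1, 1.5])
print(start, end, score)
```

The selected token indices still have to be mapped back to character offsets in the original context to produce the answer text.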

Finally, evaluate the predictions against the reference answers to obtain the final F1 score.
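For reference, the token-level F1 used for SQuAD-style evaluation can be sketched as follows. This follows the general approach of the public SQuAD v1.1 evaluation script (lowercasing, stripping punctuation and English articles, then comparing token overlap); it is a simplified re-implementation for illustration, not the script used to produce the results in this article.

```python
import collections
import re
import string


def normalize_answer(text):
    """Lowercase, strip punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in set(string.punctuation))
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def f1_score(prediction, ground_truth):
    """Token-overlap F1 between a predicted answer and a reference answer."""
    pred_tokens = normalize_answer(prediction).split()
    gold_tokens = normalize_answer(ground_truth).split()
    common = collections.Counter(pred_tokens) & collections.Counter(gold_tokens)
    num_same = sum(common.values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(pred_tokens)
    recall = num_same / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(f1_score("the EMNLP 2020", "EMNLP 2020"))
print(f1_score("EMNLP", "EMNLP 2020"))
```

When a question has several reference answers, the usual convention is to take the maximum F1 over the references and then average over all questions.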

(Training-related content will be added in a later update.)

Reference links:

[1] LUKE source code:

https://github.com/studio-ousia/luke

[2] LUKE paper:

https://aclanthology.org/2020.emnlp-main.523.pdf

[3] Reference article:

https://zhuanlan.zhihu.com/p/381626609

MindSpore official resources

GitHub: https://github.com/mindspore-ai/mindspore

Gitee: https://gitee.com/mindspore/mindspore

Official QQ group: 871543426

边栏推荐

- Image recognition and detection -- Notes

- SharePoint modification usage analysis report is more than 30 days

- Analysis of the problems of the 10th Blue Bridge Cup single chip microcomputer provincial competition

- Custom generic structure

- C WinForm framework

- Spa single page application

- 【开发笔记】基于机智云4G转接板GC211的设备上云APP控制

- La différence entre le let Typescript et le Var

- Leetcode 198: 打家劫舍

- IP home online query platform

猜你喜欢

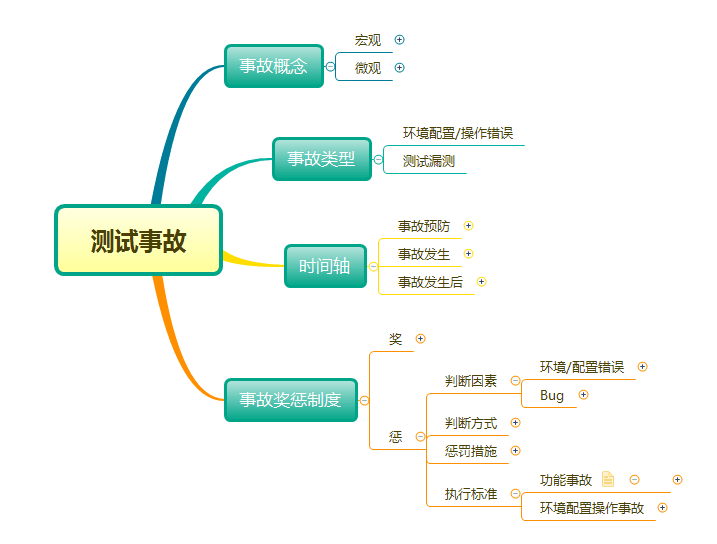

Take you through the whole process and comprehensively understand the software accidents that belong to testing

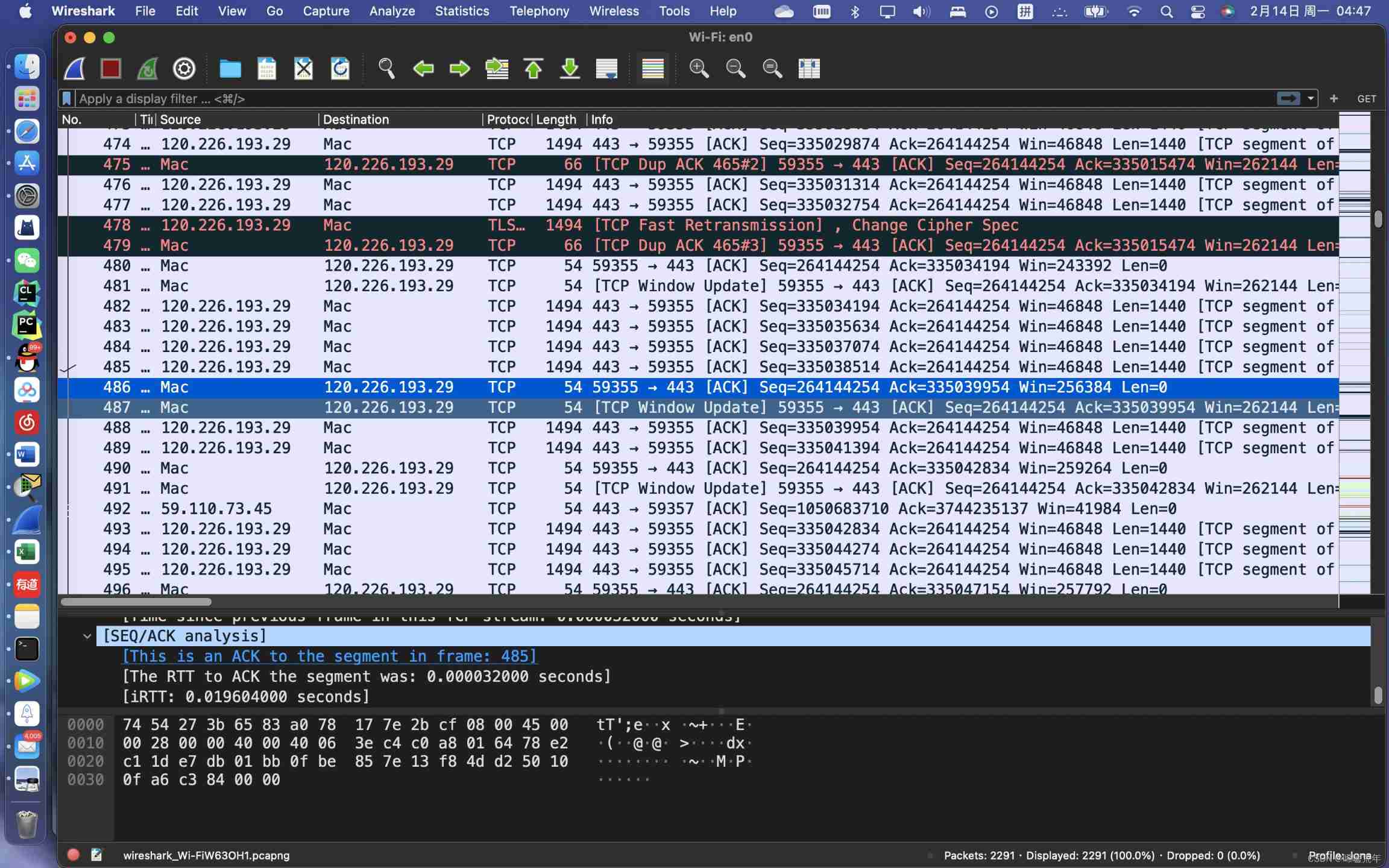

TCP cumulative acknowledgement and window value update

VMware network mode - bridge, host only, NAT network

Inverted chain disk storage in Lucene (pfordelta)

Analysis of the problems of the 7th Blue Bridge Cup single chip microcomputer provincial competition

Le Seigneur des anneaux: l'anneau du pouvoir

Collector in ES (percentile / base)

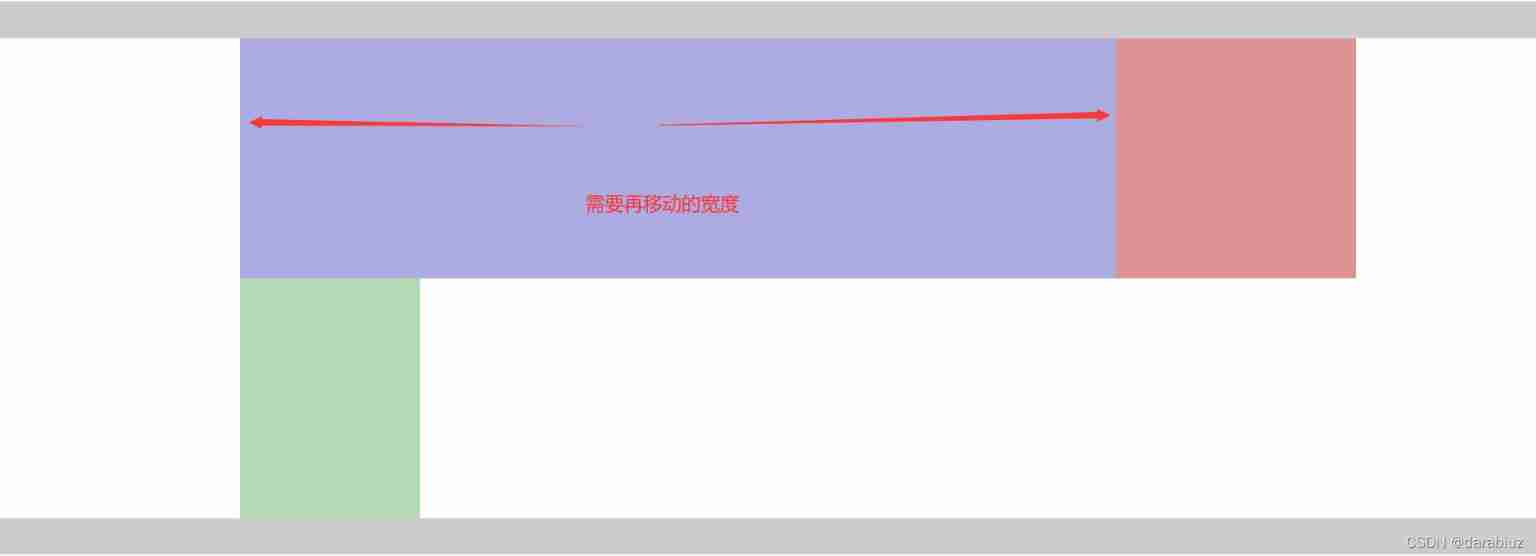

Margin left: -100% understanding in the Grail layout

专题 | 同步 异步

你开发数据API最快多长时间?我1分钟就足够了

随机推荐

HCIA notes

VMWare网络模式-桥接,Host-Only,NAT网络

Spa single page application

Summary of Arduino serial functions related to print read

Vertx multi vertical shared data

Vertx metric Prometheus monitoring indicators

4everland: the Web3 Developer Center on IPFs has deployed more than 30000 dapps!

【MindSpore论文精讲】AAAI长尾问题中训练技巧的总结

[coppeliasim4.3] C calls UR5 in the remoteapi control scenario

The underlying mechanism of advertising on websites

Lucene merge document order

[untitled]

PdfWriter. GetInstance throws system Nullreferenceexception [en] pdfwriter GetInstance throws System. NullRef

IP home online query platform

Industrial resilience

Image recognition and detection -- Notes

Mail sending of vertx

论文学习——鄱阳湖星子站水位时间序列相似度研究

4EVERLAND:IPFS 上的 Web3 开发者中心,部署了超过 30,000 个 Dapp!

The concept of C language pointer