当前位置:网站首页>Mongodb stats counts the space occupied by the collection

Mongodb stats counts the space occupied by the collection

2022-07-26 19:10:00 【johnny233】

summary

Use MongoDB In the process of , It is found that the disk space will be full , Therefore, this article is obtained through simple research .

Query version :db.version();

Get full set name :db.getCollectionNames();

Statistics

stay Mongodb There are two and stats Related methods :db.stats() and db.collection.stats().

db.stats()

db.stats() Return the basic information of the whole database , You can pass in a number Type parameter , It will display the data in a specified multiple , Such as db.stats(1024) Then press kb Display the data , Press default byte Show :

[

{

"avgObjSize": 375.1006008728961,

"collections": 676,

"dataSize": 10408153436,

"db": "ai_cbd_iview_api",

"indexSize": 387710976,

"indexes": 1349,

"numExtents": 0,

"objects": 27747632,

"ok": 1,

"storageSize": 3219529728,

"views": 0

}

]

Reading :

- db: database

- collections: How many collections does the database have ; By running

show collectionsCheck the current database collection - objects: All of the current database collection How many lines of data are there in total . The data shown is an estimate , It's not very precise

- avgObjSize: The size of each row of data , Estimated value , Company bytes

- dataSize: The total size of all data in the current database , It's not about occupying the disk size . Company bytes

- storageSize: The current database occupies disk size , Company bytes, because mongodb There is a mechanism for pre allocation of space , To prevent the pressure on the disk when a large amount of data is inserted , So we will allocate more disk space in advance

- numExtents: Continuously allocated data blocks

- indexes: The number of indexes

- indexSize: Index footprint

- nsSizeMB: Namespace file size

db[''].stats()

Query statistics of a set :db['tab_f02742bc4568a7b92b85a0054e4c6836'].stats();, Output :

[

{

"avgObjSize": 459,

"capped": false,

"count": 2090003,

"indexSizes": {

"_id_": 20877312,

"iview_mongo_index_1": 7118848

},

"nindexes": 2,

"ns": "ai_cbd_iview_api.tab_0bb1fb66b4fd3927b4679e8499d9f88e",

"ok": 1,

"size": 959734617,

"storageSize": 424046592,

"totalIndexSize": 27996160,

"wiredTiger": {

"metadata": {

"formatVersion": 1

},

"creationString": "access_pattern_hint=none,allocation_size=4KB,app_metadata=(formatVersion=1),block_allocation=best,block_compressor=snappy,cache_resident=false,checksum=on,colgroups=,collator=,columns=,dictionary=0,encryption=(keyid=,name=),exclusive=false,extractor=,format=btree,huffman_key=,huffman_value=,ignore_in_memory_cache_size=false,immutable=false,internal_item_max=0,internal_key_max=0,internal_key_truncate=true,internal_page_max=4KB,key_format=q,key_gap=10,leaf_item_max=0,leaf_key_max=0,leaf_page_max=32KB,leaf_value_max=64MB,log=(enabled=true),lsm=(auto_throttle=true,bloom=true,bloom_bit_count=16,bloom_config=,bloom_hash_count=8,bloom_oldest=false,chunk_count_limit=0,chunk_max=5GB,chunk_size=10MB,merge_max=15,merge_min=0),memory_page_max=10m,os_cache_dirty_max=0,os_cache_max=0,prefix_compression=false,prefix_compression_min=4,source=,split_deepen_min_child=0,split_deepen_per_child=0,split_pct=90,type=file,value_format=u",

"type": "file",

"uri": "statistics:table:ai_cbd_iview_api/collection-30358-6087677533101142803",

"LSM": {

"bloom filter false positives": 0,

"bloom filter hits": 0,

"bloom filter misses": 0,

"bloom filter pages evicted from cache": 0,

"bloom filter pages read into cache": 0,

"bloom filters in the LSM tree": 0,

"chunks in the LSM tree": 0,

"highest merge generation in the LSM tree": 0,

"queries that could have benefited from a Bloom filter that did not exist": 0,

"sleep for LSM checkpoint throttle": 0,

"sleep for LSM merge throttle": 0,

"total size of bloom filters": 0

},

"block-manager": {

"allocations requiring file extension": 34827,

"blocks allocated": 34992,

"blocks freed": 236,

"checkpoint size": 423632896,

"file allocation unit size": 4096,

"file bytes available for reuse": 397312,

"file magic number": 120897,

"file major version number": 1,

"file size in bytes": 424046592,

"minor version number": 0

},

"btree": {

"btree checkpoint generation": 194049,

"column-store fixed-size leaf pages": 0,

"column-store internal pages": 0,

"column-store variable-size RLE encoded values": 0,

"column-store variable-size deleted values": 0,

"column-store variable-size leaf pages": 0,

"fixed-record size": 0,

"maximum internal page key size": 368,

"maximum internal page size": 4096,

"maximum leaf page key size": 2867,

"maximum leaf page size": 32768,

"maximum leaf page value size": 67108864,

"maximum tree depth": 3,

"number of key/value pairs": 0,

"overflow pages": 0,

"pages rewritten by compaction": 0,

"row-store internal pages": 0,

"row-store leaf pages": 0

},

"cache": {

"bytes currently in the cache": 237681481,

"bytes read into cache": 28369,

"bytes written from cache": 979663693,

"checkpoint blocked page eviction": 0,

"data source pages selected for eviction unable to be evicted": 8,

"hazard pointer blocked page eviction": 0,

"in-memory page passed criteria to be split": 278,

"in-memory page splits": 131,

"internal pages evicted": 0,

"internal pages split during eviction": 2,

"leaf pages split during eviction": 105,

"modified pages evicted": 105,

"overflow pages read into cache": 0,

"overflow values cached in memory": 0,

"page split during eviction deepened the tree": 1,

"page written requiring lookaside records": 0,

"pages read into cache": 1,

"pages read into cache requiring lookaside entries": 0,

"pages requested from the cache": 2090203,

"pages written from cache": 34969,

"pages written requiring in-memory restoration": 0,

"tracked dirty bytes in the cache": 0,

"unmodified pages evicted": 264

},

"cache_walk": {

"Average difference between current eviction generation when the page was last considered": 0,

"Average on-disk page image size seen": 0,

"Clean pages currently in cache": 0,

"Current eviction generation": 0,

"Dirty pages currently in cache": 0,

"Entries in the root page": 0,

"Internal pages currently in cache": 0,

"Leaf pages currently in cache": 0,

"Maximum difference between current eviction generation when the page was last considered": 0,

"Maximum page size seen": 0,

"Minimum on-disk page image size seen": 0,

"On-disk page image sizes smaller than a single allocation unit": 0,

"Pages created in memory and never written": 0,

"Pages currently queued for eviction": 0,

"Pages that could not be queued for eviction": 0,

"Refs skipped during cache traversal": 0,

"Size of the root page": 0,

"Total number of pages currently in cache": 0

},

"compression": {

"compressed pages read": 1,

"compressed pages written": 34475,

"page written failed to compress": 0,

"page written was too small to compress": 494,

"raw compression call failed, additional data available": 0,

"raw compression call failed, no additional data available": 0,

"raw compression call succeeded": 0

},

"cursor": {

"bulk-loaded cursor-insert calls": 0,

"create calls": 7,

"cursor-insert key and value bytes inserted": 968012264,

"cursor-remove key bytes removed": 0,

"cursor-update value bytes updated": 0,

"insert calls": 2090003,

"next calls": 2,

"prev calls": 2,

"remove calls": 0,

"reset calls": 33445,

"restarted searches": 0,

"search calls": 0,

"search near calls": 0,

"truncate calls": 0,

"update calls": 0

},

"reconciliation": {

"dictionary matches": 0,

"fast-path pages deleted": 0,

"internal page key bytes discarded using suffix compression": 70902,

"internal page multi-block writes": 17,

"internal-page overflow keys": 0,

"leaf page key bytes discarded using prefix compression": 0,

"leaf page multi-block writes": 137,

"leaf-page overflow keys": 0,

"maximum blocks required for a page": 62,

"overflow values written": 0,

"page checksum matches": 1593,

"page reconciliation calls": 365,

"page reconciliation calls for eviction": 1,

"pages deleted": 2

},

"session": {

"object compaction": 0,

"open cursor count": 0

},

"transaction": {

"update conflicts": 0

}

}

}

]

Reading :

- ns: Namespace , Collection name

- size: Set size

- count: Total number of documents in the collection

- avgObjSize: Every obj Size

- storageSize: Allocated storage space , When deleting a document from a collection , This value will not decrease

- capped: Whether to fix the set

- wiredTiger:wiredTiger Store engine related information

- nindexes: Number of indexes

- totalIndexSize: Disk size occupied by index

- indexSizes: Set index list and occupation size of each index

Count the size of the set and sort it in descending order

Can be directly in DataGrip 2021.1.2 Executive JS Code , About DataGrip Connect MongoDB Data sources , You can refer to DataGrip Connect MongoDB And CRUD operation

var sizeArray = [];

// Find statistics of every collection and add to an array

db.getCollectionNames().forEach(function(cName) {

sizeArray.push(db[cName].stats());

});

// Sort the stats array by size. The field 'size' is used

sizeArray = sizeArray.sort(function(a, b) {

return b['size'] - a['size']; });

// Print output in Mb

for (var stat in sizeArray) {

print(sizeArray[stat]['ns'] + ": count=" + sizeArray[stat]['count']

+ ": " + (sizeArray[stat]['size']/(1024 * 1024)).toFixed(2) + " Mb");

}

tables = db.getCollectionNames();

tables.forEach( function (item) {

stats=db.runCommand({

collStats:item});

sizeGB = stats.storageSize/1024/1024/1024;

prettyGB = Math.round(sizeGB)+ 'GB';

print(item, prettyGB)

})

Count the size of the set index and sort it in descending order

var sizeMap = {

}

// Iterate through every collection

db.getCollectionNames().forEach(function(cName) {

indexes = db[cName].stats().indexSizes

// Now iterate through every index of the current collection

// We create a map here with key as combination of collection name and index name

for (i in indexes) sizeMap[cName + " - " + i] = indexes[i];

});

var sizeArray = [];

// Map is converted to an array each element of which is a two member array

// This inner arrary contains the collection+index name key and the size itself

for (key in sizeMap) sizeArray.push([key, sizeMap[key]])

// Now sort outer array using the second column of inner array

var sizeArray = sizeArray.sort(function(a, b) {

return b[1] - a[1]})

// Print list of index size in sorted form

for (x in sizeArray) print( sizeArray[x][0] + ": " + (sizeArray[x][1]/1024).toFixed(2) +" kb");

Clean up redundant space

// primary

db.runCommand({compact:'flow_down_stream_info',force:true})

// secondary

db.runCommand({compact:'flow_down_stream_info'})

It is recommended to run on the slave library first , After the observation is OK, then primary Up operation .

Not recommended repairDatabase() command , It may cause data damage

Just to clarify, please be careful about using repairDatabase on a replica set node. repairDatabase is meant to be used to salvage readable data i.e. after a disk corruption, so it can remove unreadable data and let MongoDB start in the face of disk corruption.

If this node has an undetected disk corruption and you run repairDatabase on it, this could lead into that particular node having a different data content vs. the other node as a result of repairDatabase. Since MongoDB assumes all nodes in a replica set contains identical data, this could lead to crashes and hard to diagnose problems. Due to its nature, this issue could stay dormant for a long time, and suddenly manifest itself with a vengeance, seemingly without any apparent reason.

WiredTiger will eventually reuse the empty spaces with new data, and the periodic checkpointing that WiredTiger does could potentially release space to the OS without any intervention on your part.

If you really need to give space back to the OS, then an initial sync is the safest choice if you have a replica set. On a standalone, dump/restore will achieve the same result. Otherwise, compact is the safer choice vs. repairDatabase. Please backup your data before doing any of these, since in my opinion this would qualify as a major maintenance

Reference resources

how-to-get-sorted-list-of-collection-and-index-sizes-in-a-mongodb-database

MongoDB / WiredTiger: reduce storage size after deleting properties from documents

https://blog.csdn.net/yanxiaojia521/article/details/122565291

边栏推荐

- JS使用readline来实现终端输入数据

- JS刷题计划——链表

- 图解用户登录验证流程,写得太好了!

- rancher部署kubernetes集群

- 2022t elevator repair examination questions and online simulation examination

- VTK (the Visualization Toolkit) loads STL models

- Kubectl common commands and simple explanations

- 简述MES系统的11大核心功能模块

- Briefly describe the 11 core functional modules of MES system

- Here comes the most complete introduction to MES system

猜你喜欢

Operations research 69 | explanation of classic examples of dynamic planning

5 best overseas substitutes for WPS Office

一些时序建模策略(一)

项目中@RequestMapping的作用以及如何使用

JS uses readLine to realize terminal input data

SD NAND与eMMC优劣势对比

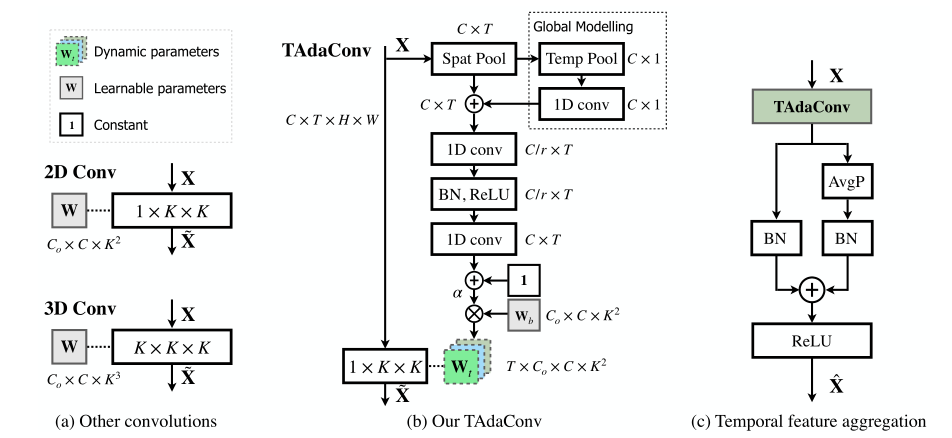

(ICLR-2022)TADA!用于视频理解的时间自适应卷积

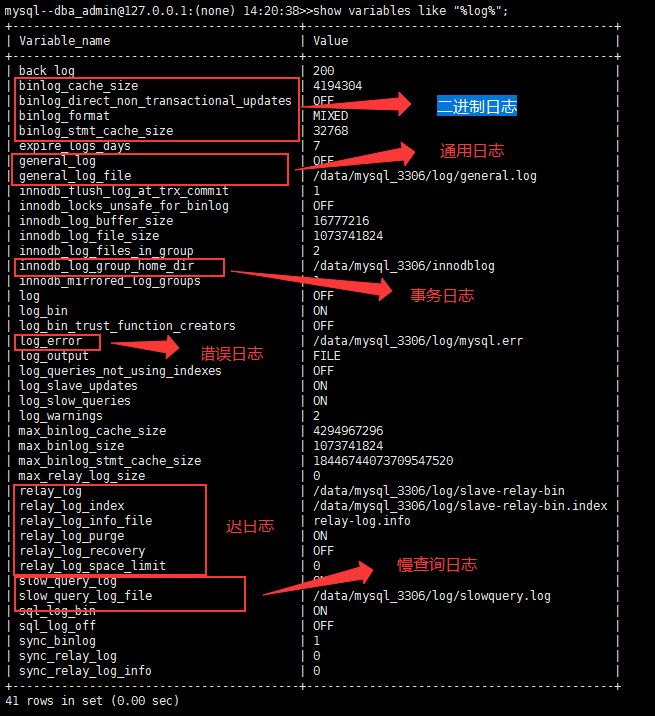

MySQL日志介绍

Interview summary of some large factories

LeetCode简单题之数组能形成多少数对

随机推荐

Here comes the most complete introduction to MES system

2022G1工业锅炉司炉上岗证题库及模拟考试

Comparison of advantages and disadvantages between SD NAND and EMMC

I'm cool, so I'm here

How many pairs can an array of leetcode simple questions form

2022年焊工(初级)操作证考试题库及模拟考试

TypeScript阶段学习

[AUTOSAR RTE] - 1-talk about RTE (run time environment)

Interview summary of some large factories

Database expansion can also be so smooth, MySQL 100 billion level data production environment expansion practice

[postgraduate entrance examination vocabulary training camp] day 14 - Panini, predict, access, apologize, sense, transport, aggregation

OpenSSF 基金会总经理 Brian Behlendorf :预计 2026 年将有 4.2 亿个开源

场景之分页查询设计

Racher deploys kubernetes cluster

CoVOS:无需解码!利用压缩视频比特流的运动矢量和残差进行半监督的VOS加速(CVPR 2022)...

Write a starter

The United States, Japan and South Korea jointly developed 6G with the intention of anti surpassing, but China has long been prepared

MES系统最全介绍来了

Multi merchant mall system function disassembly Lecture 16 - platform side member growth value record

立创EDA使用笔记