当前位置:网站首页>The first scratch crawler

The first scratch crawler

2022-07-25 12:20:00 【Tota King Li】

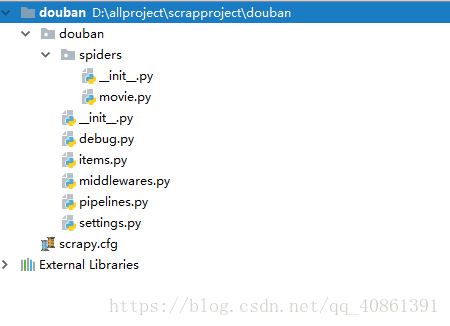

scrapy The directory structure is as follows

What we want to crawl is the title of the book in the book network , author , And the description of the book

First, we need to define the model of crawling data , stay items.py In file

import scrapy

class MoveItem(scrapy.Item): # Define the model of crawled data title = scrapy.Field() auth = scrapy.Field() desc = scrapy.Field() The main thing is spiders In the catalog move.py file

import scrapy

from douban.items import MoveItem

class MovieSpider(scrapy.Spider):

# Indicates the name of the spider , The name of each spider must be unique

name = 'movie'

# Indicates the domain name filtered and crawled

allwed_domians = ['dushu.com']

# Indicates the first thing to crawl url

start_urls = ['https://www.dushu.com/book/1188.html']

def parse(self,response):

li_list = response.xpath('/html/body/div[6]/div/div[2]/div[2]/ul/li')

for li in li_list:

item = MoveItem()

item['title'] = li.xpath('div/h3/a/text()').extract_first()

item['auth'] = li.xpath('div/p[1]/a/text()').extract_first()

item['desc'] = li.xpath('div/p[2]/text()').extract_first()

# generator

yield item

href_list = response.xpath('/html/body/div[6]/div/div[2]/div[3]/div/a/@href').extract()

for href in href_list:

# Crawl on the page url completion

url = response.urljoin(href)

# A generator ,response Link inside , Then proceed to request, Keep executing parse, It's a recursion .

yield scrapy.Request(url=url,callback=self.parse)

The only way to persist data is to save it : stay settings.py Set in file

stay pipelines.py In the document :

import pymongo

class DoubanPipeline(object):

def __init__(self):

self.mongo_client = pymongo.MongoClient('mongodb://39.108.188.19:27017')

def process_item(self, item, spider):

db = self.mongo_client.data

message = db.messages

message.insert(dict(item))

return item边栏推荐

- Jenkins配置流水线

- Add a little surprise to life and be a prototype designer of creative life -- sharing with X contestants in the programming challenge

- Scott+Scott律所计划对Yuga Labs提起集体诉讼,或将确认NFT是否属于证券产品

- Those young people who left Netease

- 水博士2

- Zuul gateway use

- 利用wireshark对TCP抓包分析

- 【二】栅格数据显示拉伸色带(以DEM数据为例)

- R language ggplot2 visualization: use the ggstripchart function of ggpubr package to visualize the dot strip chart, set the palette parameter to configure the color of data points at different levels,

- 协程

猜你喜欢

第一个scrapy爬虫

Technical management essay

微软Azure和易观分析联合发布《企业级云原生平台驱动数字化转型》报告

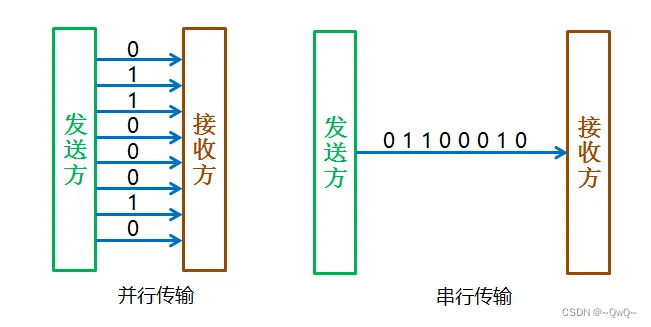

通信总线协议一 :UART

Meta learning (meta learning and small sample learning)

技术管理杂谈

WPF project introduction 1 - Design and development of simple login page

【GCN-RS】Region or Global? A Principle for Negative Sampling in Graph-based Recommendation (TKDE‘22)

Intelligent information retrieval (overview of intelligent information retrieval)

水博士2

随机推荐

Transformer variants (spark transformer, longformer, switch transformer)

Behind the screen projection charge: iqiyi's quarterly profit, is Youku in a hurry?

Fiddler抓包APP

【七】图层显示和标注

aaaaaaaaaaA heH heH nuN

【五】页面和打印设置

2.1.2 application of machine learning

从云原生到智能化,深度解读行业首个「视频直播技术最佳实践图谱」

【GCN-RS】Region or Global? A Principle for Negative Sampling in Graph-based Recommendation (TKDE‘22)

logstash

【GCN-RS】Towards Representation Alignment and Uniformity in Collaborative Filtering (KDD‘22)

Mirror Grid

【十】比例尺添加以及调整

[dark horse morning post] eBay announced its shutdown after 23 years of operation; Wei Lai throws an olive branch to Volkswagen CEO; Huawei's talented youth once gave up their annual salary of 3.6 mil

Plus版SBOM:流水线物料清单PBOM

WPF project introduction 1 - Design and development of simple login page

Unexpected rollback exception analysis and transaction propagation strategy for nested transactions

客户端开放下载, 欢迎尝鲜

R language ggpubr package ggarrange function combines multiple images and annotates_ Figure function adds annotation, annotation and annotation information for the combined image, adds image labels fo

Client open download, welcome to try