
Improving the accuracy of 3D reconstruction of complex scenes | Segmenting UAV remote sensing images with PaddleSeg

2022-07-04 18:26:00 【Paddlepaddle】

This article was first published on the PaddlePaddle (飞桨) official account. See the link:

Improving the accuracy of 3D reconstruction of complex scenes | Segmenting UAV remote sensing images with PaddleSeg

Project background

Structure from Motion (SfM) is a technique that automatically recovers camera parameters and the three-dimensional structure of a scene from multiple images or a video sequence. Because of its low cost, it is widely used in augmented reality, autonomous navigation, motion capture, hand-eye calibration, image and video processing, image-based 3D modeling, and other fields [1]. Take urban scenes as an example: with a UAV as the main platform, large-area aerial remote sensing images of a city can be acquired and SfM used to recover the city's 3D structure. Aerial data of a single building can also be collected, and a fine 3D model recovered from the image sequence. Although much research has gone into improving the accuracy of SfM, most SfM methods depend on the matching results. In complex scenes, traditional image matching algorithms such as SIFT are prone to mismatches [2], and filtering mismatches effectively is a key problem in the SfM field.

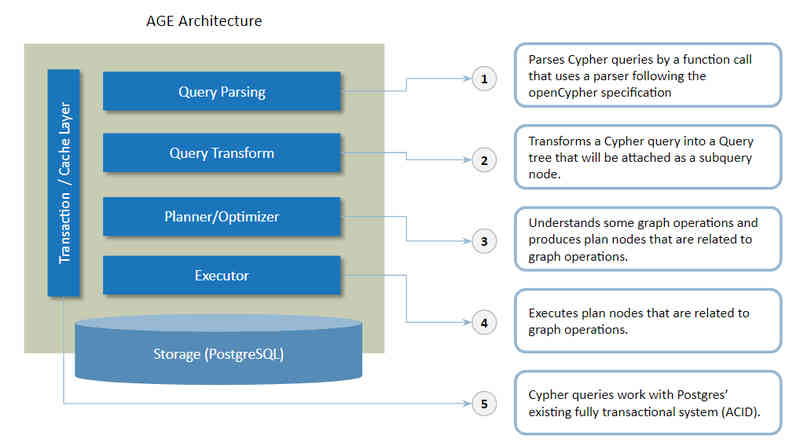

In recent years, with the rapid development of semantic segmentation, strong segmentation models can readily provide high-confidence semantic information for specific downstream tasks. For example, Zhai et al. [3] use the information obtained from a semantic segmentation model to optimize ORB-SLAM2 so that it maintains stable, optimal positioning performance in dynamic environments. Chen et al. [2] use a semantic segmentation model to extract semantic information from the images to be matched, providing robust semantic constraints for feature point matching and improving matching accuracy. They also use the semantic constraints extracted from the segmentation results to build an equality-constrained bundle adjustment that optimizes the 3D structure and the camera poses. Their experiments show that using semantic information to assist SfM achieves better reconstruction accuracy at the same efficiency. The idea is worth learning from, but I noticed that the model used in that paper is DeepLabV3+. In a video I saw earlier this year, SegFormer and DeepLabV3+ were run side by side on Cityscapes-C, and SegFormer was far more robust than DeepLabV3+. I therefore plan to train the SegFormer model provided in PaddleSeg, the PaddlePaddle image segmentation toolkit, on the dataset from that paper, to provide more precise and stable semantic constraints for SfM.
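To make the idea of a semantic constraint on feature matching concrete, here is a minimal sketch. It is not the method of Chen et al. [2]; it only illustrates the simplest possible constraint, which is to discard a match when its two keypoints fall on different semantic classes. The function name, the array layouts, and the assumption that each image already has a per-pixel label map are all hypothetical.

```python
import numpy as np


def filter_matches_by_semantics(kps_a, kps_b, matches, seg_a, seg_b):
    """Keep only matches whose two keypoints lie on the same semantic class.

    kps_a, kps_b: (N, 2) arrays of (x, y) pixel coordinates in image A / B.
    matches:      (M, 2) integer array of index pairs (index in A, index in B).
    seg_a, seg_b: (H, W) integer label maps predicted by a segmentation model.
    """
    kps_a = np.asarray(kps_a, dtype=float).reshape(-1, 2)
    kps_b = np.asarray(kps_b, dtype=float).reshape(-1, 2)
    matches = np.asarray(matches, dtype=int).reshape(-1, 2)

    def labels_at(kps, seg):
        # Round to the nearest pixel and clip so border keypoints stay in range.
        x = np.clip(np.rint(kps[:, 0]).astype(int), 0, seg.shape[1] - 1)
        y = np.clip(np.rint(kps[:, 1]).astype(int), 0, seg.shape[0] - 1)
        return seg[y, x]

    labels_a = labels_at(kps_a[matches[:, 0]], seg_a)
    labels_b = labels_at(kps_b[matches[:, 1]], seg_b)
    return matches[labels_a == labels_b]
```

A hard equality test like this is sensitive to segmentation errors near class boundaries, which is one reason a more robust segmentation model is attractive for this use.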

Project introduction

Introduction to the SegFormer model

PaddleSeg provides a PaddlePaddle-based implementation of the SegFormer model [4]. The model combines a Transformer encoder with a lightweight multilayer perceptron (MLP) decoder and achieves state-of-the-art performance, outperforming networks such as SETR, Auto-DeepLab, and OCRNet. PaddleSeg provides a series of models from SegFormer-B0 to SegFormer-B5. Taking SegFormer-B4 as an example, the model has 64M parameters and achieves 50.3% mIoU on the ADE20K dataset, with 5 times fewer parameters than the previous best method and 2.2% higher accuracy.

For a detailed introduction to the model, see:

- Paper:

https://arxiv.org/abs/2105.15203

- GitHub code:

https://github.com/PaddlePaddle/PaddleSeg/tree/release/2.5/configs/segformer

- SegFormer walkthrough project on AI Studio:

https://aistudio.baidu.com/aistudio/projectdetail/3728553

Dataset introduction

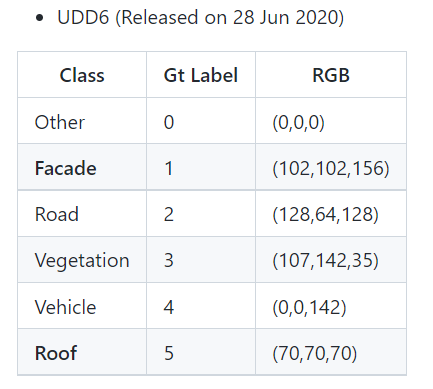

This project uses the UDD6 dataset, which was collected and annotated by the Graphics and Interaction Lab of Peking University using DJI Phantom 4 drones at altitudes of 60-100 m in 4 cities in China, for aerial scene understanding and reconstruction. The images have a resolution of 4K (4096×2160) or 12M (4000×3000) and cover a variety of urban scenes. The classes in the dataset are shown in the figure below.

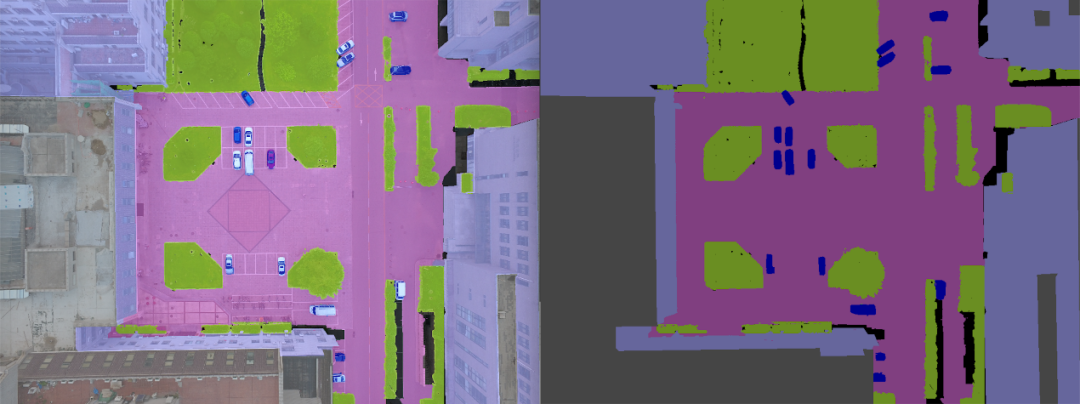

UDD6 contains 6 classes: building facade, road, vegetation, vehicle, building roof, and other. Sample data are shown in the figure below.

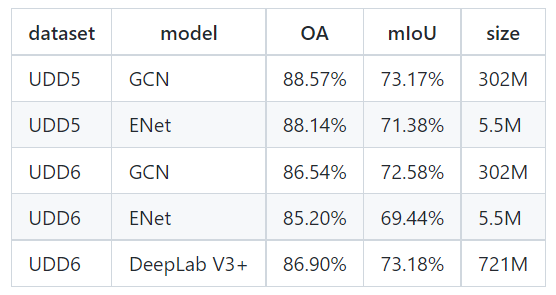

The UDD6 dataset has 160 training images and 45 validation images, and no test set. According to the benchmark figure for the segmentation models evaluated on the UDD6 validation set, the best performer is DeepLabV3+, with an mIoU of 73.18% and a model size of 731M.

UDD6 dataset address:

https://github.com/MarcWong/UDD

Segmenting aerial remote sensing images with SegFormer based on PaddleSeg

Now let's use the SegFormer model from the PaddleSeg toolkit on the AI Studio platform to segment aerial remote sensing images. Note that AI Studio is a Notebook environment, so the cd command must be prefixed with a percent sign (%), and other shell commands must be prefixed with an exclamation mark (!).
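The reason cd needs a different prefix is that a command run with "!" executes in a temporary child shell, so a directory change made there disappears when that shell exits, whereas "%cd" changes the working directory of the notebook process itself. The plain-Python analogy below is only a sketch of that behavior; the two helper names are hypothetical.

```python
import os
import subprocess


def cwd_after_shell_cd(target):
    """Run `cd target` in a child shell, then report this process's directory."""
    # The child shell changes its own directory and exits immediately,
    # so the parent process is unaffected (this mirrors `!cd target`).
    subprocess.run(f'cd "{target}"', shell=True, check=True)
    return os.getcwd()


def cwd_after_chdir(target):
    """Change this process's directory, then report it (this mirrors `%cd target`)."""
    os.chdir(target)
    return os.getcwd()
```

Calling cwd_after_shell_cd leaves the current directory unchanged, while cwd_after_chdir moves it, which is why the relative paths in later cells depend on the %cd lines.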

The project is available at the link below. Forks and stars are welcome.

https://aistudio.baidu.com/aistudio/projectdetail/3565870

Step 1: Download the PaddleSeg toolkit

PaddleSeg can be downloaded from GitHub. For the convenience of users in China, PaddlePaddle also provides a Gitee mirror.

!git clone https://gitee.com/paddlepaddle/PaddleSeg

Step 2: Prepare the UDD6 dataset

The dataset is already mounted in the project. Unzip it with the following commands.

!mkdir work/UDD6

!unzip -oq data/data75675/UDD6.zip -d work/UDD6/

Because the images in UDD6 are large, (4096, 2160), they are cropped into small (1024, 1024) images before training to reduce I/O overhead. The cropping script process_data.py is in the work folder. You can specify the crop size, the stride, and the number of threads in the thread pool to speed up processing. Run the following commands to process the data.

%cd work/

!python process_data.py --tag val # Process the validation set

!python process_data.py --tag train # Process the training set
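The source of process_data.py is not shown here, so the following is only a sketch of how cropping a large image into fixed-size tiles with a stride can work. The function names, the stride of 512, and the choice to shift the last tile back so that it ends exactly at the image border are assumptions, not the script's actual behavior.

```python
import numpy as np


def tile_origins(length, tile, stride):
    """Start offsets of tiles of size `tile` that cover [0, length)."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile + 1, stride))
    if starts[-1] != length - tile:
        # Shift the last tile back so it ends exactly at the border.
        starts.append(length - tile)
    return starts


def crop_tiles(image, tile=(1024, 1024), stride=(512, 512)):
    """Return a list of ((y, x), tile_view) pairs for an (H, W[, C]) array."""
    h, w = image.shape[:2]
    th, tw = tile
    tiles = []
    for y in tile_origins(h, th, stride[0]):
        for x in tile_origins(w, tw, stride[1]):
            tiles.append(((y, x), image[y:y + th, x:x + tw]))
    return tiles
```

Under these assumptions, a 4096 by 2160 image yields 7 columns and 4 rows of tiles, 28 in total. The label image must be cropped with the same offsets so that each tile stays aligned with its annotation.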

Step 3: Install dependencies

AI Studio already has the paddlepaddle-gpu library installed, so we only need to run the following commands to install the dependencies related to data processing.

%cd /home/aistudio/PaddleSeg

!pip install -r requirements.txt

Step 4: Generate the dataset lists

The PaddleSeg toolkit defines a dataset through .txt file lists, so list files need to be generated for the training and validation sets.

The toolkit includes a script that splits a dataset and generates the file lists. Its documentation is at:

https://github.com/PaddlePaddle/PaddleSeg/blob/release/2.5/docs/data/marker/marker_cn.md

Run the following commands.

# Generate the training set list

!python tools/split_dataset_list.py \

../work/UDD6 train_sub train_labels_sub \

--split 1.0 0.0 0.0 \

--format JPG png \

--label_class Other Facade Road Vegetation Vehicle Roof

!mv ../work/UDD6/train.txt ../work/UDD6/train_true.txt # Rename the file temporarily

# Generate the validation set list

!python tools/split_dataset_list.py \

../work/UDD6 val_sub val_labels_sub \

--split 0.0 1.0 0.0 \

--format JPG png \

--label_class Other Facade Road Vegetation Vehicle Roof

!rm ../work/UDD6/train.txt # Delete the train.txt generated by the second run

!mv ../work/UDD6/train_true.txt ../work/UDD6/train.txt # Rename the file back
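For reference, each line of a PaddleSeg file list holds an image path and a label path, separated by a space and given relative to the dataset root. The sketch below shows how such lines could be assembled by hand. It is not the code of tools/split_dataset_list.py; the function name is hypothetical, and it assumes that an image and its label share the same file stem.

```python
import os


def build_file_list(dataset_root, image_dir, label_dir,
                    image_ext="JPG", label_ext="png"):
    """Return 'image_path label_path' lines for images that have a label."""
    lines = []
    for name in sorted(os.listdir(os.path.join(dataset_root, image_dir))):
        stem, ext = os.path.splitext(name)
        if ext.lstrip(".").lower() != image_ext.lower():
            continue  # skip files that are not images of the expected type
        label_name = f"{stem}.{label_ext}"
        if not os.path.isfile(os.path.join(dataset_root, label_dir, label_name)):
            continue  # skip images without a matching label
        # Paths are written relative to the dataset root, separated by a space.
        lines.append(f"{image_dir}/{name} {label_dir}/{label_name}")
    return lines
```

For example, build_file_list("../work/UDD6", "train_sub", "train_labels_sub") would return the lines to write into train.txt under these assumptions.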

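Step 5: Environment configuration and YML file preparation

Run the following code to specify an available GPU. In a Notebook, a variable set with !export exists only in the temporary subshell that runs that command, so the %env magic is used here to make the setting persist for later cells.

%env CUDA_VISIBLE_DEVICES=0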

We use the SegFormer-B3 model. Its configuration file, segformer_b3_UDD.yml, is in the work folder, where you can modify hyperparameters such as batch_size, iters, and learning_rate. Run the following command to copy the configuration file into the configs folder.

!cp ../work/segformer_b3_UDD.yml configs/

Step 6: Start training

Run the following command to call the train.py script and start training. During training, the best model is saved in the output/best_model folder.

!python train.py \

--config configs/segformer_b3_UDD.yml \

--do_eval \

--use_vdl \

--save_interval 500 \

--save_dir output

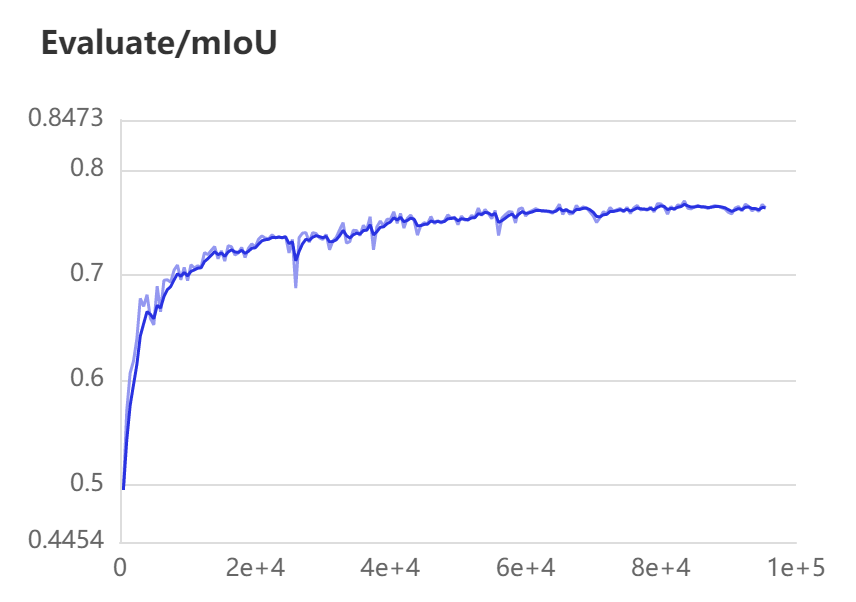

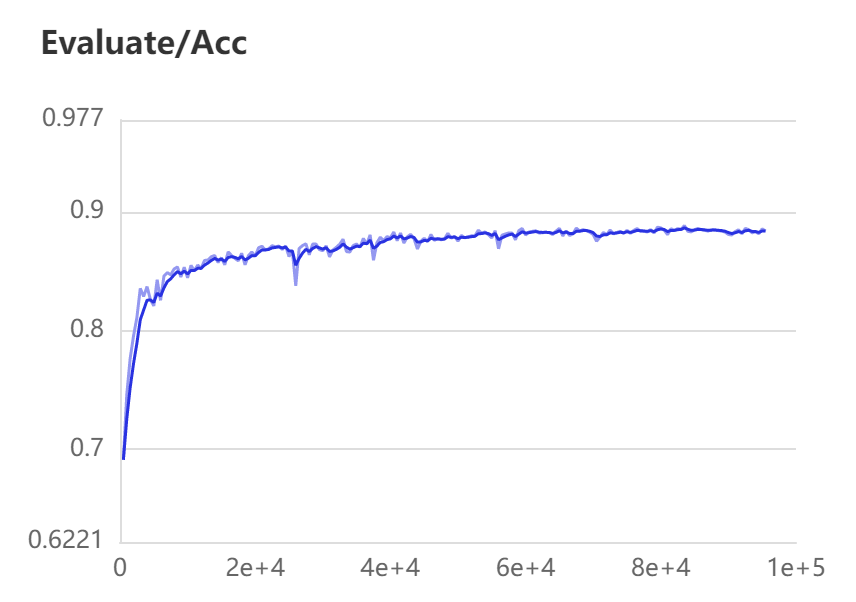

The two figures above show how the mIoU and Acc metrics on the validation set changed during training.

Step 7: Prediction and evaluation

Run the following command to call val.py, which uses the trained weights to predict on and evaluate the validation set.

!python val.py \

--config configs/segformer_b3_UDD.yml \

--model_path ./output/best_model/model.pdparams

The evaluation gives an mIoU of 74.50% on the UDD6 validation set, 1.32 percentage points higher than the 73.18% of DeepLabV3+ reported in the original paper.
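As a reminder of what the mIoU figure measures, the sketch below computes it from a confusion matrix: for each class, the intersection over union of predicted and ground-truth pixels, averaged over the classes that occur. This is a generic illustration, not the code inside val.py; the function names and the ignore value of 255 are assumptions.

```python
import numpy as np


def confusion_matrix(pred, label, num_classes, ignore_index=255):
    """Rows are ground-truth classes, columns are predicted classes."""
    pred = np.asarray(pred).ravel().astype(np.int64)
    label = np.asarray(label).ravel().astype(np.int64)
    valid = label != ignore_index  # drop pixels marked as "ignore"
    index = label[valid] * num_classes + pred[valid]
    counts = np.bincount(index, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def mean_iou(cm):
    """Mean of per-class IoU over classes present in prediction or label."""
    intersection = np.diag(cm).astype(float)
    union = cm.sum(axis=0) + cm.sum(axis=1) - np.diag(cm)
    present = union > 0
    return float((intersection[present] / union[present]).mean())
```

For UDD6, num_classes would be 6, and the confusion matrix would be accumulated over every validation image before mean_iou is called once at the end.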

We can also use the trained weights to predict on our own aerial images by running the following command, where image_path is the path of the folder containing the images to predict.

!python predict.py \

--config ../work/segformer_b3_UDD.yml \

--model_path ./output/best_model/model.pdparams \

--image_path ../work/demo \

--save_dir ../work/result \

--is_slide \

--crop_size 1024 1024 \

--stride 512 512
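The --is_slide, --crop_size, and --stride options enable sliding-window prediction, which lets a model trained on 1024×1024 crops handle full-size aerial images. The sketch below shows the general technique of averaging overlapping window outputs. It is not PaddleSeg's implementation; predict_fn is a hypothetical stand-in for the model, and crop_size is interpreted here as (height, width).

```python
import numpy as np


def slide_inference(image, predict_fn, num_classes,
                    crop_size=(1024, 1024), stride=(512, 512)):
    """Predict a label map by averaging logits over overlapping windows.

    predict_fn takes an (h, w[, C]) window and returns (num_classes, h, w) logits.
    """
    h, w = image.shape[:2]
    ch, cw = crop_size
    sh, sw = stride
    logits_sum = np.zeros((num_classes, h, w), dtype=np.float64)
    counts = np.zeros((h, w), dtype=np.float64)
    rows = int(np.ceil(max(h - ch, 0) / sh)) + 1
    cols = int(np.ceil(max(w - cw, 0) / sw)) + 1
    for r in range(rows):
        for c in range(cols):
            # Clamp the window to the image, shifting it back at the border.
            y1, x1 = min(r * sh + ch, h), min(c * sw + cw, w)
            y0, x0 = max(y1 - ch, 0), max(x1 - cw, 0)
            logits_sum[:, y0:y1, x0:x1] += predict_fn(image[y0:y1, x0:x1])
            counts[y0:y1, x0:x1] += 1
    # Every pixel is covered at least once, so the division is safe.
    return (logits_sum / counts).argmax(axis=0)
```

With a stride of half the window size, most pixels are covered by several windows, which tends to smooth out artifacts at window edges at the cost of extra computation.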

References

[1] Wei Y M, Kang L, Yang B, et al. Applications of structure from motion: a survey[J]. Journal of Zhejiang University SCIENCE C, 2013, 14(7):9.

[2] Chen Y, Wang Y, Lu P, et al. Large-Scale Structure from Motion with Semantic Constraints of Aerial Images[J]. Springer, Cham, 2018.

[3] Zhai R, Yuan Y. A Method of Vision Aided GNSS Positioning Using Semantic Information in Complex Urban Environment[J]. Remote Sens. 2022, 14, 869.

[4] Xie E, Wang W, Yu Z, et al. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers[C]// 2021.

Focus on 【 Flying propeller PaddlePaddle】 official account

Get more technical content ~

边栏推荐

- 未来几年中,软件测试的几大趋势是什么?

- 12 - explore the underlying principles of IOS | runtime [isa details, class structure, method cache | t]

- 线上MySQL的自增id用尽怎么办?

- S5PV210芯片I2C适配器驱动分析(i2c-s3c2410.c)

- 【每日一题】871. 最低加油次数

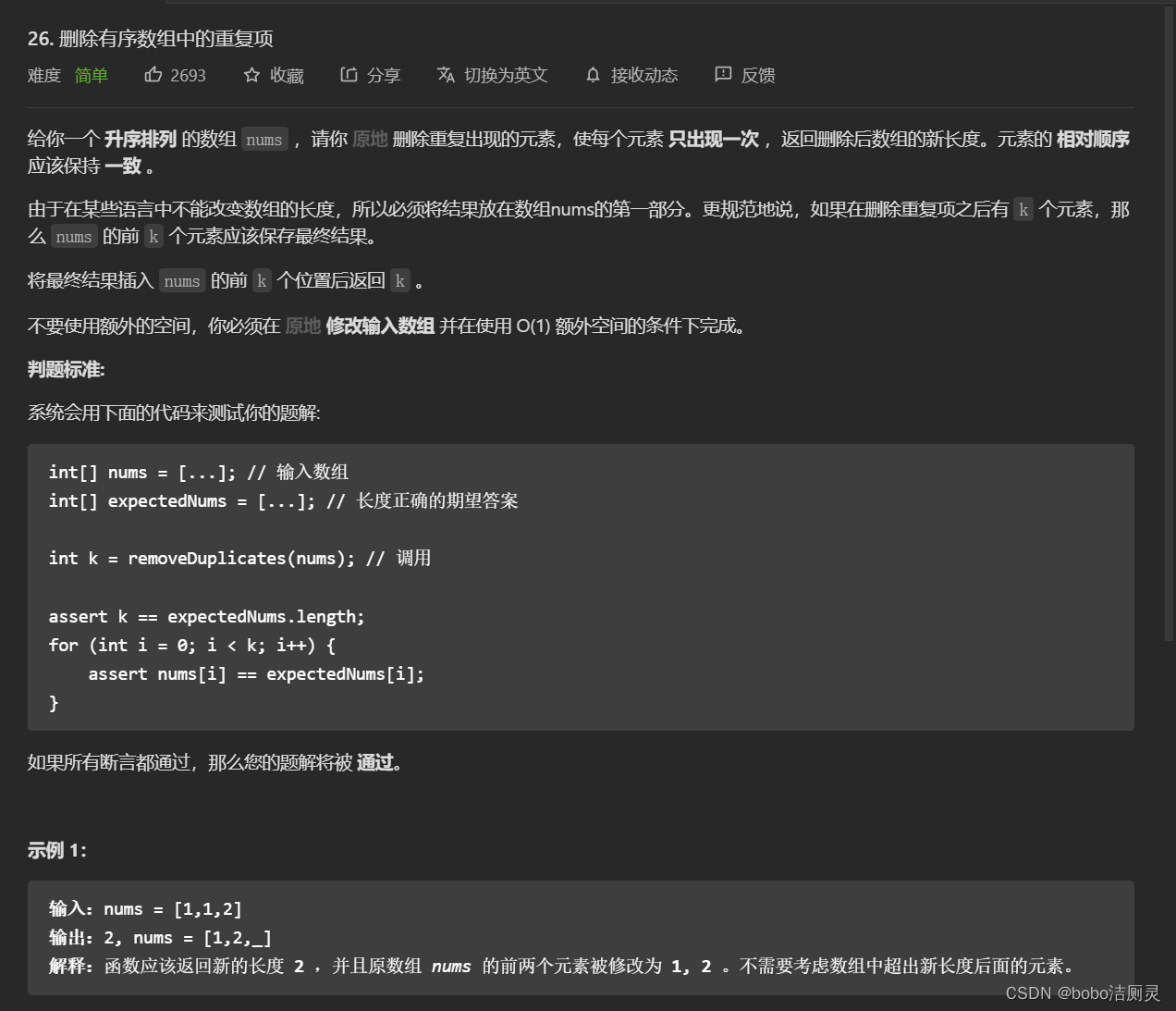

- 力扣刷题日记/day8/7.1

- General environmental instructions for the project

- 78 year old professor Huake impacts the IPO, and Fengnian capital is expected to reap dozens of times the return

- 要上市的威马,依然给不了百度信心

- 曾经的“彩电大王”,退市前卖猪肉

猜你喜欢

同事悄悄告诉我,飞书通知还能这样玩

爬虫初级学习

要上市的威马,依然给不了百度信心

VMware Tools和open-vm-tools的安装与使用:解决虚拟机不全屏和无法传输文件的问题

用于图数据库的开源 PostgreSQL 扩展 AGE被宣布为 Apache 软件基金会顶级项目

力扣刷题日记/day6/6.28

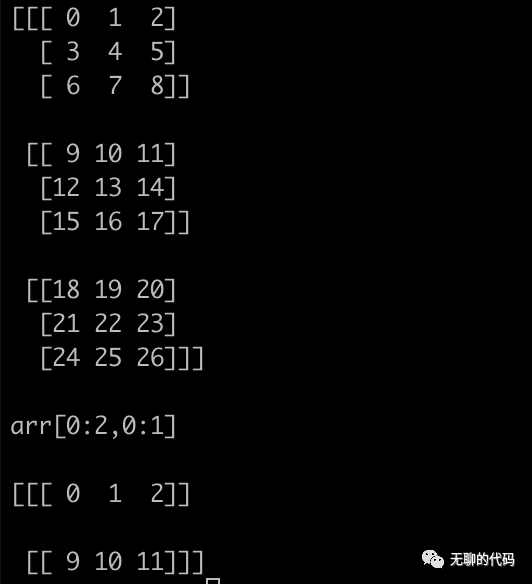

Numpy 的仿制 2

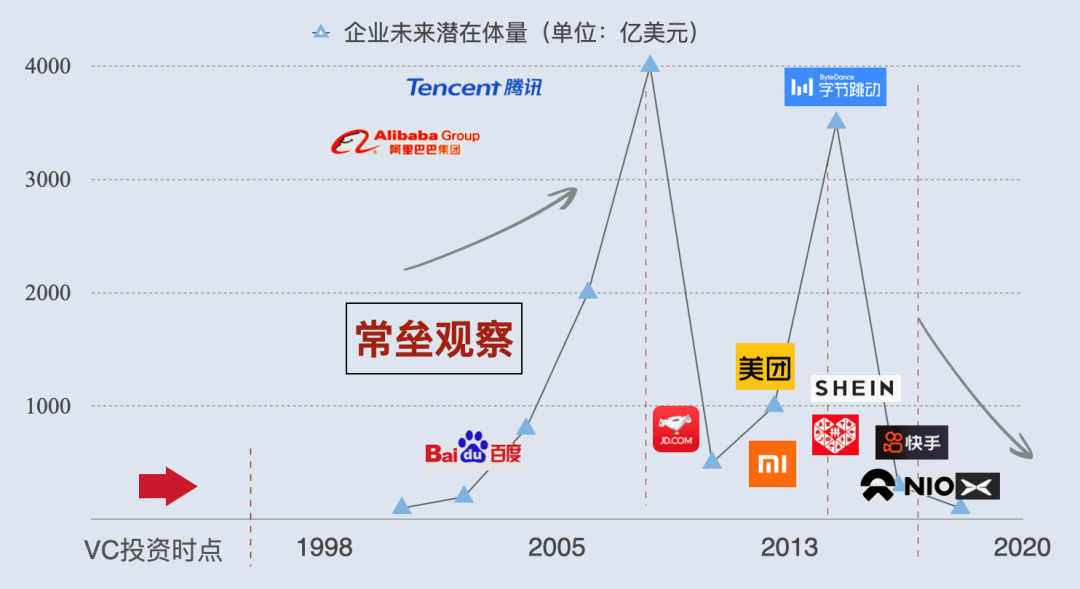

Self reflection of a small VC after two years of entrepreneurship

MySQL common add, delete, modify and query operations (crud)

With an estimated value of 90billion, the IPO of super chip is coming

随机推荐

regular expression

LD_ LIBRARY_ Path environment variable setting

Li Kou brush question diary /day5/2022.6.27

fopen、fread、fwrite、fseek 的文件处理示例

力扣刷题日记/day1/2022.6.23

android使用SQLiteOpenHelper闪退

TCP waves twice, have you seen it? What about four handshakes?

Why are some online concerts always weird?

[daily question] 871 Minimum refueling times

Thawte通配符SSL证书提供的类型有哪些

通过事件绑定实现动画效果

Imitation of numpy 2

S5PV210芯片I2C适配器驱动分析(i2c-s3c2410.c)

Once the "king of color TV", he sold pork before delisting

You should know something about ci/cd

能源行业的数字化“新”运维

Numpy 的仿制 2

如何进行MDM的产品测试

【Go语言刷题篇】Go完结篇|函数、结构体、接口、错误入门学习

力扣刷题日记/day6/6.28