
YOLOv5 project based on Qt

2022-07-03 03:13:00 【AphilGuo】

YOLOv5 Qt project

main.cpp

```cpp
#include "mainwindow.h"

#include <QApplication>

int main(int argc, char *argv[])
{
    QApplication a(argc, argv);
    MainWindow w;
    w.show();
    return a.exec();
}
```

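mainwindow.cpp

```cpp
// mainwindow.h (not shown) declares timer, capture, yolo_nets, conf, yolov5
// and IsDetect_ok, and includes yolov5.h.
#include "mainwindow.h"
#include "ui_mainwindow.h"

#include <QFile>
#include <QFileDialog>
#include <QMimeDatabase>
#include <QMimeType>
#include <QTimer>
#include <chrono>

MainWindow::MainWindow(QWidget *parent)
    : QMainWindow(parent)
    , ui(new Ui::MainWindow)
{
    ui->setupUi(this);
    setWindowTitle(QStringLiteral("YOLOv5 Object Detection"));

    // Read and process one video frame every 33 ms (about 30 frames per second)
    timer = new QTimer(this);
    timer->setInterval(33);
    connect(timer, SIGNAL(timeout()), this, SLOT(readFrame()));

    // Detection can start only after a video and a model have been loaded
    ui->startdetect->setEnabled(false);
    ui->stopdetect->setEnabled(false);

    Init();
}

MainWindow::~MainWindow()
{
    capture->release();
    delete capture;
    delete [] yolo_nets;
    delete yolov5;
    delete ui;
}
```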
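The constructor hard-codes a 33 ms timer interval (about 30 polls per second), while on_openfile_clicked() later reads the video's actual frame rate with CAP_PROP_FPS. As a minimal sketch, the interval could instead be derived from that rate. The helper name intervalForFps is hypothetical and not part of the project; it assumes that a rate of 0 or NaN, which some sources may report, should fall back to the original 33 ms.

```cpp
#include <cmath>

// Hypothetical helper: convert a frame rate (frames per second) into a
// QTimer interval in milliseconds. Falls back to 33 ms (about 30 fps) when
// the reported rate is unusable, e.g. 0 or NaN from CAP_PROP_FPS.
int intervalForFps(double fps)
{
    if (!std::isfinite(fps) || fps <= 0.0)
        return 33;
    int ms = static_cast<int>(std::lround(1000.0 / fps));
    // An interval of 0 would make QTimer fire whenever the event loop is idle
    return ms < 1 ? 1 : ms;
}
```

In the video branch this could be used as `timer->setInterval(intervalForFps(rate));`. Inference time per frame still adds to the interval, so the effective display rate can be lower than the video's frame rate.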

```cpp
void MainWindow::Init()
{
    capture = new cv::VideoCapture();

    // Thresholds per model size: {confThreshold, nmsThreshold, objThreshold, name}
    yolo_nets = new NetConfig[4]{
        {0.5, 0.5, 0.5, "yolov5s"},
        {0.6, 0.6, 0.6, "yolov5m"},
        {0.65, 0.65, 0.65, "yolov5l"},
        {0.75, 0.75, 0.75, "yolov5x"}
    };
    conf = yolo_nets[0];

    yolov5 = new YOLOV5();
    yolov5->Initialization(conf);
    ui->textEditlog->append(QStringLiteral("Default model: yolov5s, args: %1 %2 %3")
                            .arg(conf.nmsThreshold)
                            .arg(conf.objThreshold)
                            .arg(conf.confThreshold));
}
```

```cpp
void MainWindow::readFrame()
{
    cv::Mat frame;
    capture->read(frame);
    if (frame.empty()) return;  // end of video or read error

    // Time one inference pass; detect() draws the boxes onto the BGR frame
    auto start = std::chrono::steady_clock::now();
    yolov5->detect(frame);
    auto end = std::chrono::steady_clock::now();
    std::chrono::duration<double, std::milli> elapsed = end - start;
    ui->textEditlog->append(QString("cost_time: %1 ms").arg(elapsed.count()));

    // OpenCV stores pixels as BGR, QImage::Format_RGB888 expects RGB
    cv::cvtColor(frame, frame, cv::COLOR_BGR2RGB);
    QImage rawImage(frame.data, frame.cols, frame.rows,
                    static_cast<int>(frame.step), QImage::Format_RGB888);
    // QPixmap::fromImage() copies the pixels, so frame may be destroyed afterwards
    ui->label->setPixmap(QPixmap::fromImage(rawImage));
}
```
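readFrame() and the image branch of on_openfile_clicked() time inference the same way: take std::chrono::steady_clock::now() before and after detect() and store the difference in a duration<double, std::milli>. A small generic helper captures that pattern once; the name timeMs is hypothetical, not part of the project. steady_clock suits this because, unlike system_clock, it is monotonic and unaffected by wall-clock adjustments.

```cpp
#include <chrono>
#include <utility>

// Hypothetical helper: run a callable and return its elapsed time in
// milliseconds, using the same steady_clock / duration<double, std::milli>
// pattern as readFrame().
template <typename F>
double timeMs(F&& fn)
{
    auto start = std::chrono::steady_clock::now();
    std::forward<F>(fn)();
    auto end = std::chrono::steady_clock::now();
    std::chrono::duration<double, std::milli> elapsed = end - start;
    return elapsed.count();
}
```

Inside readFrame() this would read `double ms = timeMs([&] { yolov5->detect(frame); });`.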

```cpp
void MainWindow::on_openfile_clicked()
{
    QString filename = QFileDialog::getOpenFileName(this, QStringLiteral("Open file"), ".",
                                                    "*.mp4 *.avi;;*.png *.jpg *.jpeg *.bmp");
    if (!QFile::exists(filename)) {
        return;
    }
    ui->statusbar->showMessage(filename);

    // Decide between image and video by MIME type
    QMimeDatabase db;
    QMimeType mime = db.mimeTypeForFile(filename);
    if (mime.name().startsWith("image/")) {
        // toLocal8Bit() instead of toLatin1() so that non-ASCII paths still work
        cv::Mat src = cv::imread(filename.toLocal8Bit().constData());
        if (src.empty()) {
            ui->statusbar->showMessage("Failed to read the image!");
            return;
        }

        // detect() expects 3-channel BGR (blobFromImage swaps it to RGB itself)
        cv::Mat bgr;
        if (src.channels() == 4)
            cv::cvtColor(src, bgr, cv::COLOR_BGRA2BGR);
        else if (src.channels() == 3)
            bgr = src;
        else
            cv::cvtColor(src, bgr, cv::COLOR_GRAY2BGR);

        auto start = std::chrono::steady_clock::now();
        yolov5->detect(bgr);
        auto end = std::chrono::steady_clock::now();
        std::chrono::duration<double, std::milli> elapsed = end - start;
        ui->textEditlog->append(QString("cost_time: %1 ms").arg(elapsed.count()));

        // Convert to RGB only for display
        cv::Mat rgb;
        cv::cvtColor(bgr, rgb, cv::COLOR_BGR2RGB);
        QImage img(rgb.data, rgb.cols, rgb.rows, static_cast<int>(rgb.step), QImage::Format_RGB888);
        ui->label->setPixmap(QPixmap::fromImage(img));
        ui->label->resize(img.size());
        filename.clear();
    } else if (mime.name().startsWith("video/")) {
        capture->open(filename.toLocal8Bit().constData());
        if (!capture->isOpened()) {
            ui->textEditlog->append("Failed to open the video!");
            return;
        }
        IsDetect_ok += 1;
        if (IsDetect_ok == 2)
            ui->startdetect->setEnabled(true);
        ui->textEditlog->append(QString("Opened video %1 successfully!").arg(filename));

        // Total number of frames and frame size
        long totalFrame = static_cast<long>(capture->get(cv::CAP_PROP_FRAME_COUNT));
        int width = static_cast<int>(capture->get(cv::CAP_PROP_FRAME_WIDTH));
        int height = static_cast<int>(capture->get(cv::CAP_PROP_FRAME_HEIGHT));
        ui->textEditlog->append(QStringLiteral("The video has %1 frames, width = %2, height = %3")
                                .arg(totalFrame).arg(width).arg(height));
        ui->label->resize(QSize(width, height));

        // Start reading from the first frame
        long frameToStart = 0;
        capture->set(cv::CAP_PROP_POS_FRAMES, frameToStart);
        ui->textEditlog->append(QStringLiteral("Reading starts at frame %1").arg(frameToStart));

        // Frame rate
        double rate = capture->get(cv::CAP_PROP_FPS);
        ui->textEditlog->append(QStringLiteral("The frame rate is %1").arg(rate));
    }
}
```
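Colour handling in this function hinges on channel order: cv::imread and cv::VideoCapture produce BGR, QImage::Format_RGB888 expects RGB, and blobFromImage(..., swapRB=true, ...) assumes its input is BGR. For a packed 8-bit, 3-channel buffer, COLOR_BGR2RGB amounts to swapping the first and third byte of each pixel. The sketch below illustrates that with a hypothetical function on a plain std::vector instead of cv::Mat, assuming rows have no padding.

```cpp
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical illustration of cv::COLOR_BGR2RGB on a tightly packed
// 3-channel, 8-bit image: swap the B and R bytes of each pixel in place.
void bgrToRgbInPlace(std::vector<std::uint8_t>& pixels)
{
    for (std::size_t p = 0; p + 2 < pixels.size(); p += 3)
        std::swap(pixels[p], pixels[p + 2]);  // B <-> R, G stays in the middle
}
```

Swapping twice restores the original order. This is why converting an image to RGB before calling detect(), whose blobFromImage call swaps the channels again, would hand the network BGR data.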

```cpp
void MainWindow::on_loadfile_clicked()
{
    QString onnxFile = QFileDialog::getOpenFileName(this, QStringLiteral("Choose a model"), ".", "*.onnx");
    if (!QFile::exists(onnxFile)) {
        return;
    }
    ui->statusbar->showMessage(onnxFile);

    if (!yolov5->loadModel(onnxFile.toLocal8Bit().constData())) {
        ui->textEditlog->append(QStringLiteral("Failed to load the model!"));
        return;
    }
    IsDetect_ok += 1;
    ui->textEditlog->append(QString("Loaded ONNX model %1 successfully!").arg(onnxFile));
    if (IsDetect_ok == 2)
        ui->startdetect->setEnabled(true);
}
```
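The Start button is enabled once IsDetect_ok reaches 2, the intent being "a video and a model have both been loaded". Because the counter is incremented on every successful load, opening two videos without any model also reaches 2. One way to express the intent directly is to track the two resources separately. The struct and member names below are hypothetical, a sketch rather than part of the project.

```cpp
// Hypothetical replacement for the IsDetect_ok counter: remember which
// resources are loaded instead of counting load events.
struct DetectionReadiness
{
    bool videoLoaded = false;
    bool modelLoaded = false;

    void onVideoOpened() { videoLoaded = true; }
    void onModelLoaded() { modelLoaded = true; }

    // Start should be enabled only when both are present
    bool canStart() const { return videoLoaded && modelLoaded; }
};
```

After each successful load the button state would then be set with `ui->startdetect->setEnabled(ready.canStart());`.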

```cpp
void MainWindow::on_startdetect_clicked()
{
    timer->start();
    ui->startdetect->setEnabled(false);
    ui->stopdetect->setEnabled(true);
    // Lock file, model and model-size selection while detection is running
    ui->openfile->setEnabled(false);
    ui->loadfile->setEnabled(false);
    ui->comboBox->setEnabled(false);
    ui->textEditlog->append(QStringLiteral("=======================\n"
                                           "    Start detection\n"
                                           "=======================\n"));
}

void MainWindow::on_stopdetect_clicked()
{
    ui->startdetect->setEnabled(true);
    ui->stopdetect->setEnabled(false);
    ui->openfile->setEnabled(true);
    ui->loadfile->setEnabled(true);
    ui->comboBox->setEnabled(true);
    timer->stop();
    ui->textEditlog->append(QStringLiteral("======================\n"
                                           "    Stop detection\n"
                                           "======================\n"));
}
```

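```cpp
// Note: QComboBox::activated(const QString&) is deprecated since Qt 5.14 and
// removed in Qt 6; there the auto-connected slot is on_comboBox_textActivated().
void MainWindow::on_comboBox_activated(const QString &arg1)
{
    // Match on the last letter: contains("l") would also match "yolov5x"
    // (the "l" in "yolo") and give it the yolov5l thresholds.
    if (arg1.endsWith("s")) {
        conf = yolo_nets[0];
    } else if (arg1.endsWith("m")) {
        conf = yolo_nets[1];
    } else if (arg1.endsWith("l")) {
        conf = yolo_nets[2];
    } else if (arg1.endsWith("x")) {
        conf = yolo_nets[3];
    }
    // Only the thresholds change; the network is whichever .onnx file was loaded
    yolov5->Initialization(conf);
    ui->textEditlog->append(QStringLiteral("Using model: %1, args: %2 %3 %4")
                            .arg(arg1)
                            .arg(conf.nmsThreshold)
                            .arg(conf.objThreshold)
                            .arg(conf.confThreshold));
}
```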
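The model-size names share the prefix "yolov5", so matching them with contains() on a single letter is fragile: "yolov5x" contains an "l" (from "yolo"), so a contains("l") test claims it before the "x" branch is reached. Comparing whole names avoids that. The standard-C++ lookup below is a hypothetical sketch that assumes the combo-box entries are exactly the four names used in Init().

```cpp
#include <array>
#include <cstddef>
#include <string>

// Hypothetical lookup from a model name to its index in yolo_nets,
// comparing whole names so that no shared letter can cause a false match.
// Returns -1 for an unknown name.
int configIndexFor(const std::string& name)
{
    static const std::array<std::string, 4> names{"yolov5s", "yolov5m", "yolov5l", "yolov5x"};
    for (std::size_t k = 0; k < names.size(); ++k)
        if (names[k] == name)
            return static_cast<int>(k);
    return -1;
}
```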

yolov5.h

```cpp
#ifndef YOLOV5_H
#define YOLOV5_H

#include <opencv2/opencv.hpp>
#include <opencv2/dnn.hpp>
#include <opencv2/core/cuda.hpp>
#include <cmath>
#include <exception>
#include <fstream>
#include <iostream>
#include <sstream>
#include <string>
#include <vector>
#include <QMessageBox>

struct NetConfig
{
    float confThreshold;  // class confidence threshold
    float nmsThreshold;   // non-maximum suppression (IoU) threshold
    float objThreshold;   // objectness confidence threshold
    std::string netname;
};

class YOLOV5
{
public:
    YOLOV5() {}  // constructor
    void Initialization(NetConfig conf);
    bool loadModel(const char* onnxfile);
    void detect(cv::Mat& frame);

private:
    // Default YOLOv5 anchors: (w, h) pairs for the three output scales
    const float anchors[3][6] = {
        {10.0, 13.0, 16.0, 30.0, 33.0, 23.0},
        {30.0, 61.0, 62.0, 45.0, 59.0, 119.0},
        {116.0, 90.0, 156.0, 198.0, 373.0, 326.0}};
    // Downsampling factor of each output scale
    const float stride[3] = {8.0, 16.0, 32.0};
    // The 80 COCO class names
    std::string classes[80] = {
        "person", "bicycle", "car", "motorbike", "aeroplane", "bus",
        "train", "truck", "boat", "traffic light", "fire hydrant",
        "stop sign", "parking meter", "bench", "bird", "cat", "dog",
        "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe",
        "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
        "skis", "snowboard", "sports ball", "kite", "baseball bat",
        "baseball glove", "skateboard", "surfboard", "tennis racket",
        "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl",
        "banana", "apple", "sandwich", "orange", "broccoli", "carrot",
        "hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant",
        "bed", "diningtable", "toilet", "tvmonitor", "laptop", "mouse",
        "remote", "keyboard", "cell phone", "microwave", "oven", "toaster",
        "sink", "refrigerator", "book", "clock", "vase", "scissors",
        "teddy bear", "hair drier", "toothbrush"};
    const int inpWidth = 640;
    const int inpHeight = 640;
    float confThreshold;
    float nmsThreshold;
    float objThreshold;

    // Test setup: input blob pre-allocated as 1x3x640x640
    std::vector<int> blob_sizes{1, 3, 640, 640};
    cv::Mat blob = cv::Mat(blob_sizes, CV_32FC1, cv::Scalar(0.0));
    // Production alternative: blobFromImage allocates the blob itself
    //cv::Mat blob;

    std::vector<cv::Mat> outs;
    std::vector<int> classIds;
    std::vector<float> confidences;
    std::vector<cv::Rect> boxes;
    std::vector<int> indices;
    cv::dnn::Net net;

    void drawPred(int classId, float conf, int left, int top, int right, int bottom, cv::Mat& frame);
    void sigmoid(cv::Mat* out, int length);
};

static inline float sigmoid_x(float x)
{
    return 1.f / (1.f + std::exp(-x));
}

#endif // YOLOV5_H
```
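The stride and anchors arrays determine how many raw predictions the network produces. With a 640x640 input, strides 8, 16 and 32 give 80x80, 40x40 and 20x20 grids, and each cell has three anchors, so one image yields 3 x (6400 + 1600 + 400) = 25200 candidate boxes before thresholding and NMS. Each candidate carries 4 box values, 1 objectness score and one score per class. The hypothetical function below reproduces that arithmetic from the same constants as the header.

```cpp
#include <cstddef>

// Hypothetical helper: count the raw YOLOv5 predictions for a square input,
// using the same stride values and anchors-per-scale as the YOLOV5 class.
int countProposals(int inputSize)
{
    const float stride[3] = {8.0f, 16.0f, 32.0f};
    const int anchorsPerScale = 3;
    int total = 0;
    for (std::size_t n = 0; n < 3; ++n) {
        int grid = static_cast<int>(inputSize / stride[n]);  // cells per side
        total += anchorsPerScale * grid * grid;
    }
    return total;
}
```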

yolov5.cpp

```cpp
#include "yolov5.h"

using namespace std;
using namespace cv;

void YOLOV5::Initialization(NetConfig conf)
{
    this->confThreshold = conf.confThreshold;
    this->nmsThreshold = conf.nmsThreshold;
    this->objThreshold = conf.objThreshold;

    // Reserve space up front to reduce reallocations in detect()
    classIds.reserve(20);
    confidences.reserve(20);
    boxes.reserve(20);
    outs.reserve(3);
    indices.reserve(20);
}
```

```cpp
bool YOLOV5::loadModel(const char *onnxfile)
{
    try {
        this->net = cv::dnn::readNetFromONNX(onnxfile);
    } catch (const cv::Exception& e) {
        QMessageBox::critical(nullptr, "Error",
                              QStringLiteral("Error loading model, please check and try again!\n%1")
                              .arg(QString::fromLocal8Bit(e.what())));
        return false;
    }
    if (this->net.empty())
        return false;

    // CPU inference with the default backend
    this->net.setPreferableBackend(cv::dnn::DNN_BACKEND_DEFAULT);
    this->net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);

    // With an OpenCV build that has CUDA support, the GPU can be used instead:
    // if (cv::cuda::getCudaEnabledDeviceCount() > 0) {
    //     this->net.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);
    //     this->net.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA);
    // }
    // OpenVINO alternative:
    // this->net.setPreferableBackend(cv::dnn::DNN_BACKEND_INFERENCE_ENGINE);
    // this->net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);
    return true;
}
```
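Once a model is loaded, detect() feeds it the tensor produced by `cv::dnn::blobFromImage(frame, blob, 1 / 255.0, Size(640, 640), Scalar(0, 0, 0), true, false)`: the image is resized to 640x640 without cropping, swapped from BGR to RGB, scaled to [0, 1] and rearranged from interleaved HWC order into planar NCHW. Leaving out the resize, the layout and scaling part can be sketched in standard C++ as follows; the function is hypothetical and assumes a tightly packed 8-bit BGR buffer that already has the target size.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical sketch of the layout part of blobFromImage(..., 1/255.0, ..., swapRB=true):
// interleaved BGR bytes (H x W x 3) -> planar RGB floats (3 x H x W) in [0, 1].
// Resizing is omitted; the input is assumed to be width*height*3 bytes, no padding.
std::vector<float> hwcBgrToChwRgb(const std::vector<std::uint8_t>& bgr, int width, int height)
{
    const std::size_t area = static_cast<std::size_t>(width) * height;
    std::vector<float> blob(3 * area);
    for (std::size_t p = 0; p < area; ++p) {
        blob[0 * area + p] = bgr[3 * p + 2] / 255.0f;  // R plane (byte 2 of BGR)
        blob[1 * area + p] = bgr[3 * p + 1] / 255.0f;  // G plane
        blob[2 * area + p] = bgr[3 * p + 0] / 255.0f;  // B plane
    }
    return blob;
}
```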

```cpp
void YOLOV5::detect(cv::Mat &frame)
{
    // Scale pixels to [0, 1], resize to 640x640, swap BGR -> RGB, no cropping
    cv::dnn::blobFromImage(frame, blob, 1 / 255.0, Size(this->inpWidth, this->inpHeight),
                           Scalar(0, 0, 0), true, false);
    this->net.setInput(blob);
    this->net.forward(outs, this->net.getUnconnectedOutLayersNames());

    // Generate proposals
    classIds.clear();
    confidences.clear();
    boxes.clear();
    float ratioh = (float)frame.rows / this->inpHeight;
    float ratiow = (float)frame.cols / this->inpWidth;
    // The class count must match both classes[] and the exported model; each
    // prediction holds 4 box values + 1 objectness score + num_classes scores
    const int num_classes = static_cast<int>(sizeof(this->classes) / sizeof(this->classes[0]));
    const int nout = num_classes + 5;
    for (int n = 0; n < 3; n++)  // scale
    {
        int num_grid_x = (int)(this->inpWidth / this->stride[n]);
        int num_grid_y = (int)(this->inpHeight / this->stride[n]);
        int area = num_grid_x * num_grid_y;
        // Outputs are raw logits; the indexing below expects each output laid out
        // as [3 anchors][nout][num_grid_y][num_grid_x]
        this->sigmoid(&outs[n], 3 * nout * area);
        for (int q = 0; q < 3; q++)  // anchor index
        {
            const float anchor_w = this->anchors[n][q * 2];
            const float anchor_h = this->anchors[n][q * 2 + 1];
            float* pdata = (float*)outs[n].data + q * nout * area;
            for (int i = 0; i < num_grid_y; i++)
            {
                for (int j = 0; j < num_grid_x; j++)
                {
                    float box_score = pdata[4 * area + i * num_grid_x + j];
                    if (box_score > this->objThreshold)
                    {
                        // Pick the class with the highest score
                        float max_class_score = 0, class_score = 0;
                        int max_class_id = 0;
                        for (int c = 0; c < num_classes; c++)
                        {
                            class_score = pdata[(c + 5) * area + i * num_grid_x + j];
                            if (class_score > max_class_score)
                            {
                                max_class_score = class_score;
                                max_class_id = c;
                            }
                        }
                        if (max_class_score > this->confThreshold)
                        {
                            float cx = (pdata[i * num_grid_x + j] * 2.f - 0.5f + j) * this->stride[n];
                            float cy = (pdata[area + i * num_grid_x + j] * 2.f - 0.5f + i) * this->stride[n];
                            float w = powf(pdata[2 * area + i * num_grid_x + j] * 2.f, 2.f) * anchor_w;
                            float h = powf(pdata[3 * area + i * num_grid_x + j] * 2.f, 2.f) * anchor_h;
                            // Map the box from 640x640 input coordinates back to the original frame
                            int left = (int)((cx - 0.5f * w) * ratiow);
                            int top = (int)((cy - 0.5f * h) * ratioh);
                            classIds.push_back(max_class_id);
                            confidences.push_back(max_class_score);
                            boxes.push_back(Rect(left, top, (int)(w * ratiow), (int)(h * ratioh)));
                        }
                    }
                }
            }
        }
    }

    // Non-maximum suppression removes redundant overlapping boxes with lower confidence
    indices.clear();
    cv::dnn::NMSBoxes(boxes, confidences, this->confThreshold, this->nmsThreshold, indices);
    for (size_t i = 0; i < indices.size(); ++i)
    {
        int idx = indices[i];
        Rect box = boxes[idx];
        this->drawPred(classIds[idx], confidences[idx], box.x, box.y,
                       box.x + box.width, box.y + box.height, frame);
    }
}
```
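For every cell (i, j) of a scale with stride s and anchor (a_w, a_h), detect() turns the sigmoid outputs (σx, σy, σw, σh) into a box with the YOLOv5 parameterisation cx = (2σx − 0.5 + j)·s, cy = (2σy − 0.5 + i)·s, w = (2σw)²·a_w, h = (2σh)²·a_h, then scales it from the 640x640 input back to the frame with ratiow and ratioh. The same arithmetic as a standalone function makes it easy to check by hand; Box and decodeBox are hypothetical names, and the square is written as a product instead of powf.

```cpp
// Hypothetical box type in original-image pixels (mirrors cv::Rect's fields).
struct Box { int x, y, width, height; };

// Decode one YOLOv5 prediction with the same arithmetic as detect().
// sx, sy, sw, sh: sigmoid outputs; i, j: grid row and column; stride: 8, 16 or 32;
// anchorW, anchorH: anchor size in input pixels; ratioW, ratioH: frame size / 640.
Box decodeBox(float sx, float sy, float sw, float sh, int i, int j,
              float stride, float anchorW, float anchorH, float ratioW, float ratioH)
{
    float cx = (sx * 2.f - 0.5f + j) * stride;
    float cy = (sy * 2.f - 0.5f + i) * stride;
    float w = (sw * 2.f) * (sw * 2.f) * anchorW;
    float h = (sh * 2.f) * (sh * 2.f) * anchorH;
    // Top-left corner and size, rescaled to the original frame (truncated like detect())
    return Box{static_cast<int>((cx - 0.5f * w) * ratioW),
               static_cast<int>((cy - 0.5f * h) * ratioH),
               static_cast<int>(w * ratioW),
               static_cast<int>(h * ratioH)};
}
```

With all sigmoid outputs at 0.5, the box is centred half a stride into the cell and has exactly the anchor's size, which is a quick sanity check on the formulas.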

```cpp
void YOLOV5::drawPred(int classId, float conf, int left, int top, int right, int bottom, Mat &frame)
{
    // Bounding box in red (BGR order)
    rectangle(frame, Point(left, top), Point(right, bottom), Scalar(0, 0, 255), 3);

    // "class:confidence" label in green; measure with the same font scale used to draw it
    string label = cv::format("%.2f", conf);
    label = this->classes[classId] + ":" + label;
    const double fontScale = 0.75;
    int baseLine = 0;
    Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, fontScale, 1, &baseLine);
    top = std::max(top, labelSize.height);  // keep the label inside the image
    putText(frame, label, Point(left, top), FONT_HERSHEY_SIMPLEX, fontScale, Scalar(0, 255, 0), 1);
}
```
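The boxes drawn by drawPred() are the survivors of the cv::dnn::NMSBoxes call in detect(). Conceptually, it drops candidates scoring below confThreshold and then, visiting the rest from highest to lowest score, discards any box whose intersection-over-union (IoU) with an already kept box exceeds nmsThreshold. The simplified greedy version below uses hypothetical names and a stand-in rectangle type. OpenCV's implementation also supports the eta and top_k parameters and may differ in details such as tie handling.

```cpp
#include <algorithm>
#include <vector>

struct Rect2 { int x, y, width, height; };  // hypothetical stand-in for cv::Rect

// Intersection over union of two axis-aligned boxes.
float iou(const Rect2& a, const Rect2& b)
{
    int x1 = std::max(a.x, b.x), y1 = std::max(a.y, b.y);
    int x2 = std::min(a.x + a.width, b.x + b.width);
    int y2 = std::min(a.y + a.height, b.y + b.height);
    float inter = static_cast<float>(std::max(0, x2 - x1) * std::max(0, y2 - y1));
    float uni = static_cast<float>(a.width * a.height + b.width * b.height) - inter;
    return uni > 0.f ? inter / uni : 0.f;
}

// Greedy NMS: returns the indices of kept boxes, highest score first.
std::vector<int> greedyNms(const std::vector<Rect2>& boxes, const std::vector<float>& scores,
                           float scoreThreshold, float iouThreshold)
{
    std::vector<int> order;
    for (int k = 0; k < static_cast<int>(scores.size()); ++k)
        if (scores[k] > scoreThreshold) order.push_back(k);
    std::stable_sort(order.begin(), order.end(),
                     [&](int a, int b) { return scores[a] > scores[b]; });
    std::vector<int> kept;
    for (int idx : order) {
        bool suppressed = false;
        for (int k : kept)
            if (iou(boxes[idx], boxes[k]) > iouThreshold) { suppressed = true; break; }
        if (!suppressed) kept.push_back(idx);
    }
    return kept;
}
```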

```cpp
void YOLOV5::sigmoid(Mat *out, int length)
{
    // In-place logistic function over the first `length` floats of the output blob
    float* pdata = (float*)(out->data);
    for (int i = 0; i < length; i++)
    {
        pdata[i] = 1.0f / (1.0f + expf(-pdata[i]));
    }
}
```
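YOLOV5::sigmoid() applies the logistic function σ(x) = 1 / (1 + e^(−x)) in place to the raw network outputs, mapping every logit into (0, 1) so that objectness and class scores can be compared with the thresholds and box offsets can be decoded. The same operation over a std::vector, written with std::transform, is sketched below; the function name is hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical counterpart of YOLOV5::sigmoid(): in-place logistic function
// over a buffer of logits.
void sigmoidInPlace(std::vector<float>& values)
{
    std::transform(values.begin(), values.end(), values.begin(),
                   [](float x) { return 1.0f / (1.0f + std::exp(-x)); });
}
```

Since σ(0) = 0.5 and σ(−x) = 1 − σ(x), a threshold of 0.5 on a sigmoid score corresponds to a threshold of 0 on the raw logit.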

边栏推荐

- How to implement append in tensor

- [Fuhan 6630 encodes and stores videos, and uses RTSP server and timestamp synchronization to realize VLC viewing videos]

- 3D drawing example

- [principles of multithreading and high concurrency: 1_cpu multi-level cache model]

- How to use asp Net MVC identity 2 change password authentication- How To Change Password Validation in ASP. Net MVC Identity 2?

- From C to capable -- use the pointer as a function parameter to find out whether the string is a palindrome character

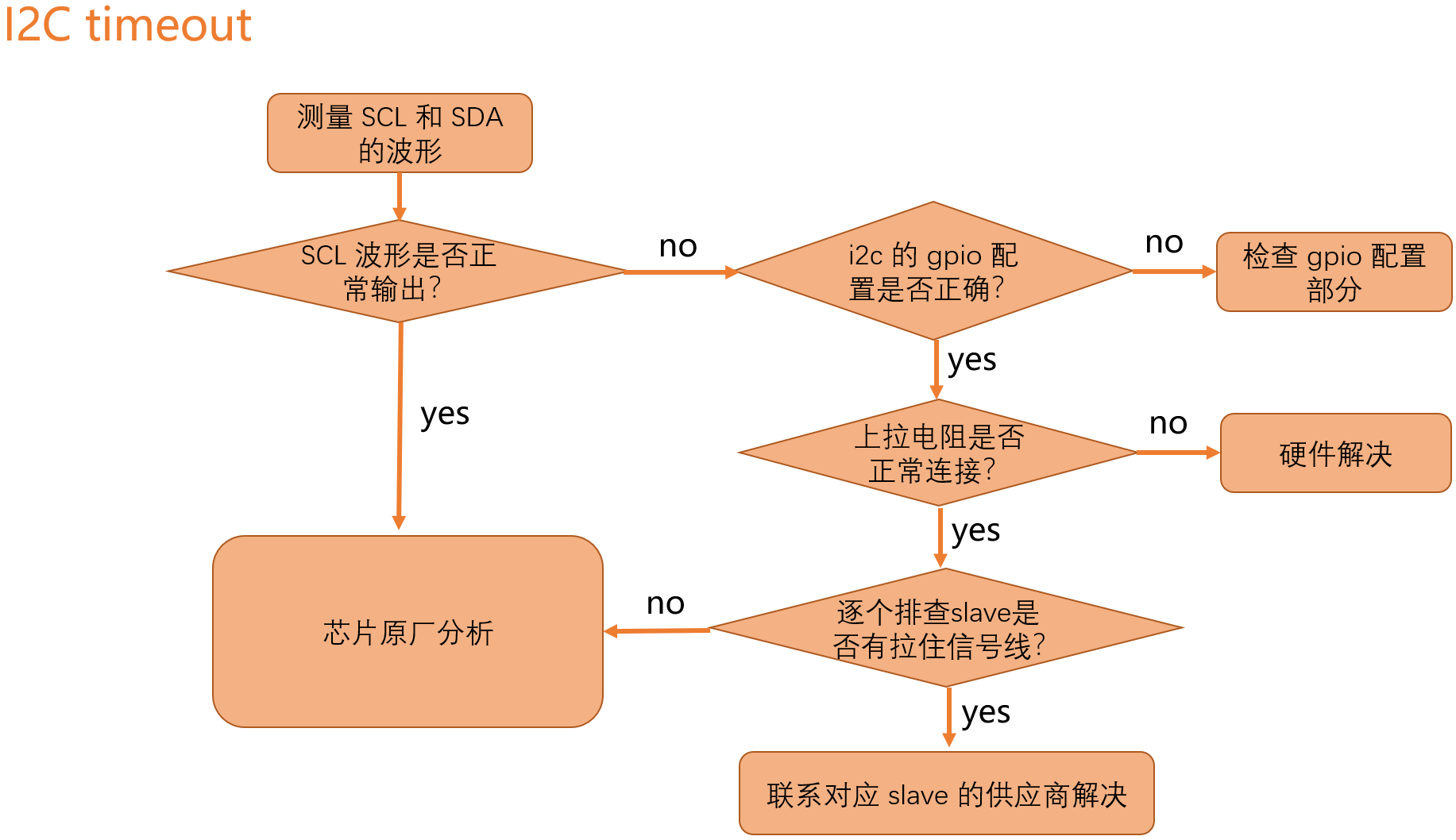

- I2C 子系统(三):I2C Driver

- The XML file generated by labelimg is converted to VOC format

- How to select the minimum and maximum values of columns in the data table- How to select min and max values of a column in a datatable?

- Agile certification (professional scrum Master) simulation exercises

猜你喜欢

LVGL使用心得

Spark on yarn资源优化思路笔记

I2C 子系统(三):I2C Driver

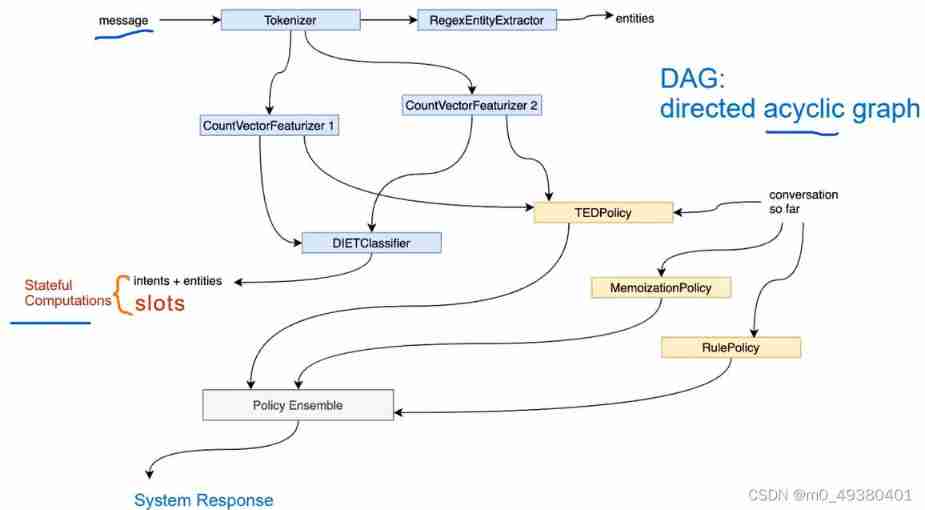

Gavin teacher's perception of transformer live class - rasa project's actual banking financial BOT Intelligent Business Dialogue robot architecture, process and phenomenon decryption through rasa inte

The process of connecting MySQL with docker

Practice of traffic recording and playback in vivo

Use of El tree search method

用docker 連接mysql的過程

Sous - système I2C (IV): débogage I2C

力扣------网格中的最小路径代价

随机推荐

从输入URL到页面展示这中间发生了什么?

Idea set method call ignore case

The file marked by labelme is converted to yolov5 format

Update and return document in mongodb - update and return document in mongodb

VS 2019配置tensorRT

TCP 三次握手和四次挥手机制,TCP为什么要三次握手和四次挥手,TCP 连接建立失败处理机制

左连接,内连接

解决高並發下System.currentTimeMillis卡頓

敏捷认证(Professional Scrum Master)模拟练习题-2

Practice of traffic recording and playback in vivo

Pytorch配置

QT based tensorrt accelerated yolov5

Vs 2019 configure tensorrt to generate engine

Opengauss database development and debugging tool guide

Model transformation onnx2engine

二维数组中的元素求其存储地址

Docker install redis

为什么线程崩溃不会导致 JVM 崩溃

基于Qt的yolov5工程

Force freeing memory in PHP