Summary of Andrew Ng's Machine Learning Course (14): Dimensionality Reduction

2022-06-28 00:21:00 【51CTO】

Q1 Motivation 1: data compression

Dimensionality reduction lowers the number of features, for example by reducing two correlated features to one.

Three dimensions can be reduced to two in the same way.

By extension, 1000-dimensional data can be reduced to 100 dimensions, which reduces the memory footprint.

Q2 Motivation 2: data visualization

Data with 50 dimensions, for example, cannot be visualized directly. Dimensionality reduction can bring it down to 2 dimensions, which can then be plotted.

The dimensionality reduction algorithm only reduces the dimension; the meaning of the new features is something we have to work out ourselves.

Q3 Principal component analysis problem

(1) Problem description of principal component analysis:

The problem is to reduce n-dimensional data to k dimensions. The goal is to find k vectors onto which the data is projected so that the total projection error is minimized.
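To make "projection error" concrete, here is a minimal sketch for the simplest case (n = 2, k = 1). The function name `total_projection_error` and the toy data are illustrative assumptions, not part of the course material. It measures the summed squared distance from each point to the line spanned by a candidate direction.

```python
import numpy as np

def total_projection_error(X, u):
    """Sum of squared distances from each row of X to the line spanned by u."""
    u = u / np.linalg.norm(u)      # make sure the direction has unit length
    proj = np.outer(X @ u, u)      # projection of each point onto the line
    return float(np.sum((X - proj) ** 2))

# Toy 2-D data lying roughly along the diagonal (made up for illustration).
X = np.array([[-2.0, -1.9], [-1.0, -1.1], [0.0, 0.1], [1.0, 0.9], [2.0, 2.0]])
X = X - X.mean(axis=0)             # PCA assumes mean-centred data

err_diag = total_projection_error(X, np.array([1.0, 1.0]))  # along the data
err_axis = total_projection_error(X, np.array([1.0, 0.0]))  # along the x-axis
print(err_diag, err_axis)
```

The diagonal direction gives a much smaller error than the x-axis, which is the sense in which PCA prefers it.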

(2) Comparison between principal component analysis and linear regression:

The two are different algorithms. The former minimizes the projection error, the latter minimizes the prediction error; the former makes no prediction at all, while the latter aims to predict a result.

In linear regression the error is measured perpendicular to the x-axis (vertically, along the predicted variable); in principal component analysis it is measured perpendicular to the fitted line (the red line in the original figure).
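The difference can be checked numerically. The sketch below is an illustration on synthetic data (the data, seed, and helper names are assumptions). It fits a least-squares line and the first principal direction to the same centred 2-D points, then compares vertical and perpendicular squared errors for each.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=200)
y = 0.5 * x + rng.normal(scale=0.3, size=200)
X = np.column_stack([x, y])
X = X - X.mean(axis=0)                      # centre both coordinates

def vertical_error(X, slope):
    """Squared error measured along the y direction (linear regression)."""
    return float(np.sum((X[:, 1] - slope * X[:, 0]) ** 2))

def perpendicular_error(X, slope):
    """Squared error measured perpendicular to the line y = slope * x (PCA)."""
    return vertical_error(X, slope) / (1.0 + slope ** 2)

# Linear regression of y on x: minimizes the vertical error.
slope_lr = (X[:, 0] @ X[:, 1]) / (X[:, 0] @ X[:, 0])

# PCA: the first right singular vector minimizes the perpendicular error.
_, _, Vt = np.linalg.svd(X, full_matrices=False)
slope_pca = Vt[0, 1] / Vt[0, 0]

print(slope_lr, slope_pca)
```

Each line is the best one under its own error measure, and the two slopes generally differ.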

(3) PCA ranks the newly computed "principal component" vectors by importance. We keep the most important leading components as needed and drop the remaining dimensions.

(4) One advantage of PCA is that it depends entirely on the data: no parameters need to be set by hand, and the result is independent of the user. This can also be seen as a disadvantage, because if the user has prior knowledge about the data, there is no way to make use of it, and the result may not be what was hoped for.

Q4 Principal component analysis algorithm

PCA reduces n dimensions to k dimensions:

(1) Mean normalization: subtract the mean of each feature and, if the features are on different scales, divide by the standard deviation;

(2) Compute the covariance matrix;

(3) Compute the eigenvectors of the covariance matrix.

For the n x n covariance matrix, the decomposition yields a matrix U whose columns are the direction vectors with the minimum projection error to the data. Take the first k columns to obtain an n x k matrix, denoted $U_{reduce}$, and then compute the required new feature vector as $z^{(i)} = U_{reduce}^T x^{(i)}$.
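The three steps above can be sketched in NumPy as follows. This is a minimal illustration, not a reference implementation. The names `pca_fit` and `pca_project` are hypothetical, and the eigenvectors are obtained with a singular value decomposition of the covariance matrix, as the course does. For a symmetric positive semi-definite matrix, the SVD returns the eigenvectors already sorted by decreasing eigenvalue.

```python
import numpy as np

def pca_fit(X, k):
    """Return (mu, sigma, U_reduce) for an m x n data matrix X."""
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    sigma[sigma == 0] = 1.0             # avoid dividing by zero for constant features
    Xn = (X - mu) / sigma               # step (1): mean normalization and scaling
    m = Xn.shape[0]
    Sigma = (Xn.T @ Xn) / m             # step (2): n x n covariance matrix
    U, S, _ = np.linalg.svd(Sigma)      # step (3): columns of U are the eigenvectors
    return mu, sigma, U[:, :k]          # keep the first k columns (n x k)

def pca_project(X, mu, sigma, U_reduce):
    """Row i of the result is z(i) = U_reduce^T x(i) for the normalized x(i)."""
    return ((X - mu) / sigma) @ U_reduce

# Illustrative data: 100 examples with 5 features, reduced to 2.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
mu, sigma, U_reduce = pca_fit(X, k=2)
Z = pca_project(X, mu, sigma, U_reduce)
print(Z.shape)
```

Because the examples are stored as rows, the per-example formula with the transposed matrix becomes a single matrix product `Xn @ U_reduce`.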

Q5 Choose the number of principal components

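Principal component analysis minimizes the average squared projection error, $\frac{1}{m}\sum_{i=1}^{m}\|x^{(i)} - x_{approx}^{(i)}\|^2$, where $x_{approx}^{(i)}$ is the projection of $x^{(i)}$ expressed in the original n-dimensional space. The total variance of the (mean-normalized) training set is $\frac{1}{m}\sum_{i=1}^{m}\|x^{(i)}\|^2$.

We want the ratio of the first quantity to the second to be as small as possible. For example, if we require the ratio to be less than 1% (that is, 99% of the variance is retained), we choose the smallest k that meets this condition.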
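A possible way to pick k without recomputing the projection for every candidate: for mean-centred data, the ratio of projection error to total variance equals one minus the share of the eigenvalue sum covered by the first k components. The sketch below relies on that identity. The function name `choose_k`, the threshold parameter, and the synthetic data are assumptions for illustration.

```python
import numpy as np

def choose_k(X, max_error_ratio=0.01):
    """Smallest k whose projection-error / variance ratio is <= max_error_ratio."""
    Xc = X - X.mean(axis=0)
    Sigma = (Xc.T @ Xc) / Xc.shape[0]
    S = np.linalg.svd(Sigma, compute_uv=False)   # eigenvalues, largest first
    retained = np.cumsum(S) / np.sum(S)          # variance retained by first k components
    # First index where the retained share reaches the target, converted to a count.
    k = int(np.searchsorted(retained, 1.0 - max_error_ratio) + 1)
    return min(k, len(S)), retained

# Synthetic data: two features carry almost all of the variance.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 4)) * np.array([10.0, 3.0, 0.1, 0.01])
k, retained = choose_k(X)
print(k, retained)
```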

Q6 Reconstruction from the compressed representation

Dimension reduction formula: $z = U_{reduce}^T x$

Reconstruction (that is, going from the low dimension back to the high dimension): $x_{approx} = U_{reduce} z$

In the course's schematic, the left panel shows dimension reduction and the right panel shows reconstruction.
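As a small illustration of compression followed by reconstruction, the sketch below uses synthetic 3-D points that lie close to a 2-D plane (the data and variable names are assumptions). It compresses with z = U_reduce^T x, reconstructs with x_approx = U_reduce z, and reports how much of the variance is lost.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 3-D points near a 2-D plane, plus a little noise.
basis = rng.normal(size=(2, 3))
X = rng.normal(size=(200, 2)) @ basis + 0.01 * rng.normal(size=(200, 3))
X = X - X.mean(axis=0)                 # mean-centre before PCA

Sigma = (X.T @ X) / X.shape[0]         # covariance matrix
U, _, _ = np.linalg.svd(Sigma)
U_reduce = U[:, :2]                    # keep k = 2 directions

Z = X @ U_reduce                       # compress: each row is z = U_reduce^T x
X_approx = Z @ U_reduce.T              # reconstruct: each row is x_approx = U_reduce z

# Fraction of the total variance lost by the round trip.
error_ratio = float(np.sum((X - X_approx) ** 2) / np.sum(X ** 2))
print(error_ratio)
```

The reconstruction has the original shape but lies exactly on the plane spanned by the kept directions, so only the component along the dropped direction is lost.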

Q7 Suggestions on applying principal component analysis

Correct use case:

A 100 x 100 pixel image has 10000 features. Use PCA to compress it to 1000 dimensions, then run the learning algorithm on the training set. At prediction time, apply the Ureduce learned from the training set to the test data, converting each test input x to z, and then make the prediction.
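The key point of this workflow is that the mean and Ureduce are computed from the training set only and then reused unchanged on the test set. A minimal sketch, using much smaller dimensions than the 10000-to-1000 example and hypothetical helper names `fit_pca` and `apply_pca`:

```python
import numpy as np

def fit_pca(X_train, k):
    """Learn the mean and U_reduce from the training set only."""
    mu = X_train.mean(axis=0)
    Xc = X_train - mu
    Sigma = (Xc.T @ Xc) / Xc.shape[0]
    U, _, _ = np.linalg.svd(Sigma)
    return mu, U[:, :k]

def apply_pca(X, mu, U_reduce):
    """Map inputs x to z using parameters learned on the training set."""
    return (X - mu) @ U_reduce

# Stand-in data: 20 features reduced to 5 (random values, for shape only).
rng = np.random.default_rng(0)
X_train = rng.normal(size=(300, 20))
X_test = rng.normal(size=(50, 20))

mu, U_reduce = fit_pca(X_train, k=5)
Z_train = apply_pca(X_train, mu, U_reduce)   # train the learning algorithm on this
Z_test = apply_pca(X_test, mu, U_reduce)     # same mapping, no refitting on test data
print(Z_train.shape, Z_test.shape)
```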

Incorrect usage:

(1) Trying to use PCA to address overfitting. PCA does not solve overfitting; regularization should be used instead.

(2) Treating PCA as a default part of the learning process. The original features should be used wherever possible; PCA should be considered only when the algorithm runs too slowly or takes up too much memory.

Author: Your Rego

The copyright of this article belongs to the author. Reprints are welcome, but unless the author agrees otherwise, a link to the original must be given on the article page; otherwise the author reserves the right to pursue legal responsibility.

边栏推荐

- 每次启动项目的服务,电脑竟然乖乖的帮我打开了浏览器,100行源码揭秘!

- Grab those duplicate genes

- Arduino UNO通过电容的直接检测实现简易触摸开关

- Sell notes | brief introduction to video text pre training

- 炼金术(8): 开发和发布的并行

- MongoDB-在windows电脑本地安装一个mongodb的数据库

- 计数质数[枚举 -> 空间换时间]

- 智慧风电 | 图扑软件数字孪生风机设备,3D 可视化智能运维

- Sentinel

- [AI application] detailed parameters of NVIDIA Tesla v100-pcie-32gb

猜你喜欢

翻译(5): 技术债务墻:一种让技术债务可见并可协商的方法

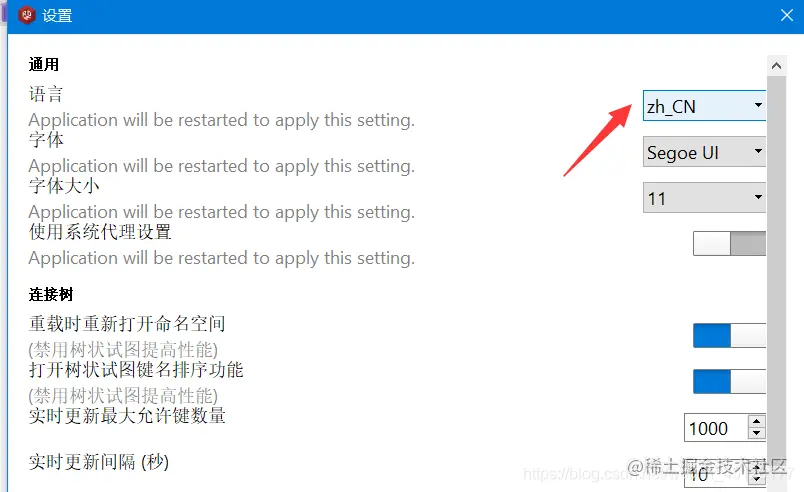

Local visualization tool connects to redis of Alibaba cloud CentOS server

MySQL企业级参数调优实践分享

Matlb| optimal configuration of microgrid in distribution system based on complex network

Webserver flow chart -- understand the calling relationship between webserver modules

2022 PMP project management examination agile knowledge points (3)

Comprehensive evaluation of free, easy-to-use and powerful open source note taking software

Arduino UNO通过电容的直接检测实现简易触摸开关

![计数质数[枚举 -> 空间换时间]](/img/11/c52e1dfce8e35307c848d12ccc6454.png)

计数质数[枚举 -> 空间换时间]

每次启动项目的服务,电脑竟然乖乖的帮我打开了浏览器,100行源码揭秘!

随机推荐

MySQL enterprise parameter tuning practice sharing

自定义MySQL连接池

Matlb| optimal configuration of microgrid in distribution system based on complex network

一个人可以到几家证券公司开户?开户安全吗

Local visualization tool connects to redis of Alibaba cloud CentOS server

SQL reported an unusual error, which confused the new interns

Safe, fuel-efficient and environment-friendly camel AGM start stop battery is full of charm

[AI application] detailed parameters of Jetson Xavier nx

线程池实现:信号量也可以理解成小等待队列

CRTMP视频直播服务器部署及测试

Webserver flow chart -- understand the calling relationship between webserver modules

Introduction to data warehouse

吴恩达《机器学习》课程总结(14)_降维

用两个栈实现队列[两次先进后出便是先进先出]

Pat class B 1013

Scu| gait switching and target navigation of micro swimming robot through deep reinforcement learning

ASP. Net warehouse purchase, sales and inventory ERP management system source code ERP applet source code

赛尔笔记|视频文本预训练简述

RecyclerView实现分组效果,多种实现方式

Redis主从复制、哨兵模式、集群的概述与搭建